Lexical and semantic selection


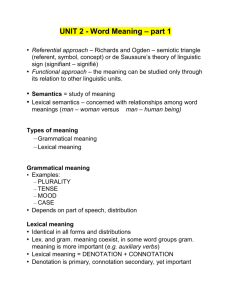

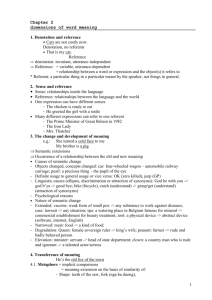

Options for grammar engineers and what they might mean linguistically

Outline and acknowledgements
1. Selection in constraint-based approaches
   i. types of selection and overview of methods used in LKB/ERG
   ii. denotation
2. The collocation problem
   i. collocation in general
   ii. corpus data on magnitude adjectives
   iii. possible accounts
3. Conclusions

Acknowledgements: LinGO/DELPH-IN, especially Dan Flickinger, also Generative Lexicon 2005

1(i): Types of grammatical selection
- syntactic: e.g., preposition among selects for an NP (like other prepositions)
- lexical: e.g., spend selects for a PP headed by on
  - Kim spent the money on a car
- semantic: e.g., temporal at selects for times of day (and meals)
  - at 3am
  - at three thirty five and ten seconds precisely

Lexical selection
- lexical selection requires a method of specifying a lexeme
- in the ERG, this is via the PRED value
- spend (e.g., spend the money on Kim)

      spend_v2 := v_np_prep_trans_le &
        [ STEM < "spend" >,
          SYNSEM [ LKEYS [ --OCOMPKEY _on_p_rel,
                           KEYREL.PRED "_spend_v_rel" ] ] ].

Lexical selection (continued)
- ERG relies on the convention that different lexemes have different relations
- 'lexical' selection is actually semantic (cf. Wechsler)
- no true synonyms assumption, or assume that the grammar makes distinctions that are more fine-grained than real-world denotation justifies
- near-synonymy would have to be recorded elsewhere: ERG does (some) morphology, syntax and compositional semantics
- alternatives?
  - orthography: but ambiguity or non-monotonic semantics
  - lexical identifier: requires new feature
  - PFORM: requires features, values

Semantic selection
- Requires a method of specifying a semantically-defined phrase
- In the ERG, done by specifying a higher node in the hierarchy of relations:

      at_temp := p_temp_le &
        [ STEM < "at" >,
          SYNSEM [ LKEYS [ --COMPKEY hour_or_time_rel,
                           KEYREL.PRED _at_p_temp_rel ] ] ].
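The idea of selecting for "a higher node in the hierarchy of relations" can be illustrated with a minimal Python sketch. It is not how the LKB implements selection (the LKB unifies typed feature structures; this sketch reduces that to an ancestor check in a tree). Only `hour_or_time_rel` and `_on_p_rel` come from the entries above; the parent types and all other relation names are hypothetical, invented for illustration.

```python
# Toy relation hierarchy, child -> parent.
# ASSUMPTION: apart from hour_or_time_rel and _on_p_rel, every name here
# is hypothetical and does not claim to match the real ERG hierarchy.
PARENT = {
    "hour_or_time_rel": "temp_rel",            # hypothetical parent type
    "numbered_hour_rel": "hour_or_time_rel",   # hypothetical: "3am"
    "_noon_n_rel": "hour_or_time_rel",         # hypothetical: "noon"
    "_lunch_n_rel": "hour_or_time_rel",        # hypothetical: meals
    "_monday_n_rel": "temp_rel",               # hypothetical: days of the week
    "_on_p_rel": "prep_rel",                   # hypothetical parent type
    "_in_p_rel": "prep_rel",                   # hypothetical sibling
}


def subsumes(general, specific):
    """Return True if `general` is `specific` or one of its ancestors."""
    node = specific
    while node is not None:
        if node == general:
            return True
        node = PARENT.get(node)
    return False


def selects(compkey, complement_pred):
    """A head with this COMPKEY accepts a complement with this relation."""
    return subsumes(compkey, complement_pred)


# Semantic selection: temporal "at" accepts anything under hour_or_time_rel.
print(selects("hour_or_time_rel", "numbered_hour_rel"))  # at 3am
print(selects("hour_or_time_rel", "_monday_n_rel"))      # at Monday
# Lexical selection: a leaf relation is matched only by itself.
print(selects("_on_p_rel", "_on_p_rel"))
print(selects("_on_p_rel", "_in_p_rel"))
```

In this sketch the only difference between the two kinds of selection is how high in the hierarchy the selected relation sits.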
Hierarchy of relations

Semantic selection (continued)
- Semantic selection allows for an indefinitely large set of alternative phrases
  - compositionally constructed time expressions
  - productive with respect to new words, but exceptions allowable
    - approach wouldn't be falsified if e.g., *at tiffin
- ERG lexical selection is a special case of ERG semantic selection!
- could assume featural encoding of semantic properties (alternatively or in addition to hierarchy)
- TFS semantic selection is relatively limited practically (see later)
- also idiom mechanism in ERG

1(ii): Denotation, grammar engineering perspective
- Denotation is truth-conditional, logically formalisable (in principle), refers to 'real world' (extension)
- Not necessarily decomposable
- Naive physics, biology, etc
- Must interface with non-linguistic components
- Minimising lexical complexity in broad-coverage grammars is practically necessary
- Plausible input to generator: reasonable to expect real-world constraints to be obeyed (except in context)
  - the goat read the book
- Potential disambiguation is not a sufficient condition for lexical encoding
  - The vet treated the rabbit and the guinea pig with dietary Vitamin C deficiency

Denotation, continued
- Assume linkage to domain, richer knowledge representation language available
- TFS language for syntax etc, not intended for general inference
- Talmy example: the baguette lay across the road
  - across: Figure's length > Ground's width
  - identifying F and G and location for comparison in grammar?
  - coding average length of all nouns?
  - allowing for massive baguettes and tiny roads?
- But ... Trend in KR is towards description logics rather than richer languages.
- Need to think about the denotation to justify grammaticization (or otherwise)

Linguistic criteria: denotation versus grammaticization?
- if temporal in/on/at have the same denotation, a selectional account is required for their different distribution
- unreasonable to expect lexical choice for in/on/at in input to generator
- effect found cross-linguistically?
- predictable on basis of world knowledge?
- closed class vs open class
- Practical considerations about interfacing go along with linguistic criteria
  - non-linguists expect some information about word meaning!
  - allow generalisation over e.g., in/on/at in generator input, while keeping possibility of distinction

2(i) Collocation: assumptions
- Significant co-occurrences of words in syntactically interesting relationships
  - 'syntactically interesting': for examples in this talk, attributive adjectives and the nouns they immediately precede
  - 'significant': statistically significant (but on what assumptions about baseline?)
- Compositional, no idiosyncratic syntax etc (as opposed to multiword expression)
- About language rather than the real world

Collocation versus denotation
- Whether an unusually frequent word pair is a collocation or not depends on assumptions about denotation: fix denotation to investigate collocation
- Empirically: investigations using WordNet synsets (Pearce, 2001)
- Anti-collocation: words that might be expected to go together and tend not to
  - e.g., flawless behaviour (Cruse, 1986); big rain (unless explained by denotation)
- e.g., buy house is predictable on basis of denotation, shake fist is not

2(ii): Distribution of 'magnitude' adjectives
- some very frequent adjectives have magnitude-related meanings (e.g., heavy, high, big, large)
  - basic meaning with simple concrete entities
  - extended meaning with abstract nouns, non-concrete physical entities (high taxation, heavy rain)
  - extended uses more common than basic
- not all magnitude adjectives: e.g., tall
- nouns tend to occur with a limited subset of these extended adjectives
- some apparent semantic groupings of nouns which go with particular adjectives, but not easily specified

Some adjective-noun frequencies in the BNC

| | number | proportion | quality | problem | part | winds | rain |
|---|---|---|---|---|---|---|---|
| large | 1790 | 404 | 0 | 10 | 533 | 0 | 0 |
| high | 92 | 501 | 799 | 0 | 3 | 90 | 0 |
| big | 11 | 1 | 0 | 79 | 79 | 3 | 1 |
| heavy | 0 | 0 | 1 | 0 | 1 | 2 | 198 |

Grammaticality judgments

[Table: judgments (unmarked, ?, *) for large, high, heavy and big against number, proportion, quality, problem, part, winds and rain; cell layout not recoverable.]

More examples

| | importance | success | majority | number | proportion | quality | role | problem | part | winds | support | rain |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| great | 310 | 360 | 382 | 172 | 9 | 11 | 3 | 44 | 71 | 0 | 22 | 0 |
| large | 1 | 1 | 112 | 1790 | 404 | 0 | 13 | 10 | 533 | 0 | 1 | 0 |
| high | 8 | 0 | 0 | 92 | 501 | 799 | 1 | 0 | 3 | 90 | 2 | 0 |
| major | 62 | 60 | 0 | 0 | 7 | 0 | 272 | 356 | 408 | 1 | 8 | 0 |
| big | 0 | 40 | 5 | 11 | 1 | 0 | 3 | 79 | 79 | 3 | 1 | 1 |
| strong | 0 | 0 | 2 | 0 | 0 | 1 | 8 | 0 | 3 | 132 | 147 | 0 |
| heavy | 0 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 1 | 2 | 4 | 198 |

Judgments

[Table: judgments (unmarked, ?, *) for great, large, high, major, big, strong and heavy against importance, success, majority, number, proportion, quality, role, problem, part, winds, support and rain; cell layout not recoverable.]

Distribution
- Investigated the distribution of heavy, high, big, large, strong, great, major with the most common co-occurring nouns in the BNC
- Nouns tend to occur with up to three of these adjectives with high frequency and low or zero frequency with the rest
- My intuitive grammaticality judgments correlate but allow for some unseen combinations and disallow a few observed but very infrequent ones
- big, major and great are grammatical with many nouns (but not frequent with most), strong and heavy are ungrammatical with most nouns, high and large intermediate

heavy: groupings?
- magnitude:
  - dew, rainstorm, downpour, rain, rainfall, snowfall, fall, snow, shower
  - frost, spindrift
  - clouds, mist, fog
  - flow, flooding, bleeding, period, traffic
  - demands, reliance, workload, responsibility, emphasis, dependence
  - irony, sarcasm, criticism
  - infestation, soiling
  - loss, price, cost, expenditure, taxation, fine, penalty, damages, investment
  - punishment, sentence
  - fire, bombardment, casualties, defeat, fighting
  - burden, load, weight, pressure
  - crop
  - advertising
  - use, drinking
- magnitude of verb: drinker, smoker
- magnitude related?
  - odour, perfume, scent, smell, whiff
  - lunch
  - sea, surf, swell

high: groupings?
- magnitude:
  - esteem, status, regard, reputation, standing, calibre, value, priority
  - grade, quality, level
  - proportion, degree, incidence, frequency, number, prevalence, percentage
  - volume, speed, voltage, pressure, concentration, density, performance, temperature, energy, resolution, dose, wind
  - risk, cost, price, rate, inflation, tax, taxation, mortality, turnover, wage, income, productivity, unemployment, demand
- magnitude of verb: earner

heavy and high
- 50 nouns in BNC with the extended magnitude use of heavy with frequency 10 or more
- 160 such nouns with high
- Only 9 such nouns with both adjectives: price, pressure, investment, demand, rainfall, cost, costs, concentration, taxation

2(iii): Possible empirical accounts of distribution
1. Difference in denotation between 'extended' uses of adjectives
2. Grammaticized selectional restrictions/preferences
3. Lexical selection
   - stipulate Magn function with nouns (Meaning-Text Theory)
4. Semi-productivity / collocation
   - plus semantic back-off

1 - Denotation account of distribution
- Denotation of adjective simply prevents it being possible with the noun.
- Implies that heavy and high have different denotations

      heavy'(x) => MF(x) > norm(MF, type(x), c) & (precipitation(x) or cost(x) or flow(x) or consumption(x) ...)
(where rain(x) -> precipitation(x) and so on)
- But: messy disjunction or multiple senses, open-ended, unlikely to be tractable.
  - e.g., heavy shower only for rain sense, not bathroom sense
- Not falsifiable, but no motivation other than distribution.
- Dictionary definitions can be seen as doing this (informally), but none account for observed distribution.
- Input to generator?

2 - Selectional restrictions and distribution
- Assume the adjectives have the same denotation
- Distribution via features in the lexicon
  - e.g., literal high selects for [ANIMATE false ]
  - cf. approach used in the ERG for in/on/at in temporal expressions
  - grammaticized, so doesn't need to be determined by denotation (though assume consistency)
  - could utilise qualia structure
- Problem: can't find a reasonable set of cross-cutting features!
- Stipulative approach possible, but unattractive.

3 - Lexical selection
- MTT approach
  - noun specifies its Magn adjective
  - in Mel'čuk and Polguère (1987), Magn is a function, but could modify to make it a set, or vary meanings
- could also make adjective specify set of nouns, though not directly in LKB logic
- stipulative: if we're going to do this, why not use a corpus directly?

4 - Collocational account of distribution
- all the adjectives share a denotation corresponding to magnitude, distribution differences due to collocation, soft rather than hard constraints
- linguistically:
  - adjective-noun combination is semi-productive
  - denotation and syntax allow heavy esteem etc, but speakers are sensitive to frequencies, prefer more frequent phrases with same meaning
  - cf. morphology and sense extension: Briscoe and Copestake (1999).
- Blocking (but weaker than with morphology)
- anti-collocations as reflection of semi-productivity

Collocational account of distribution (continued)
- computationally, fits with some current practice:
  - filter adjective-noun realisations according to n-grams (statistical generation, e.g., Langkilde and Knight, recent experiments with ERG)
  - use of co-occurrences in WSD
- back-off techniques require an approach to clustering
- semantic spaces acquired from corpora
  - generally, collect vectors of words which co-occur with the target
  - best known is LSA: often used in psycholinguistics
  - more sophisticated models incorporate syntactic relationships
  - currently sexy, but severe limitations!

| | dog | bark | house | cat |
|---|---|---|---|---|
| dog | - | 1 | 0 | 0 |
| bark | 1 | - | 0 | 0 |

Back-off and analogy
- back-off: decision for infrequent noun with no corpus evidence for specific magnitude adjective
  - should be partly based on productivity of adjective: number of nouns it occurs with
  - default to big
- back-off also sensitive to word clusters
  - e.g., heavy spindrift because spindrift is semantically similar to snow
  - semantic space models: i.e., group according to distribution with other words
  - hence, adjective has some correlation with semantics of the noun

Metaphor
- Different metaphors for different nouns (cf. Lakoff et al)
  - 'high' nouns measured with an upright scale: e.g., temperature: temperature is rising
  - 'heavy' nouns metaphorically like burden: e.g., workload: her workload is weighing on her
- Doesn't lead to an empirical account of distribution, since we can't predict classes.
- Assumption of literal denotation followed by coercion is implausible.
- But: extended metaphor idea is consistent with idea that clusters for back-off are based on semantic space

Collocation and linguistic theory
- Collocation plus semantic space clusters may account for some of the 'messy' bits, at least for some speakers.
- in/on transport: in the car, on the bus
  - Talmy: presence of walkway, 'ragged lower end of hierarchy'
  - but trains without walkway, caravans with walkway?
- in/on choice perhaps collocational, not real exception to language-independent schema elements
- Potential to simplify linguistic theories considerably.
- Success of n-grams, LSA models of priming.
- Practically testable: assume same denotation of heavy/high or in/on, see if we can account for distribution in corpus.

Alternative for temporal in/on/at?
- Experiments with machine learning temporal in/on/at (Mei Lin, MPhil thesis, 2004): very successful at predicting distribution, but used lots of Treebank-derived features.

Summary
- Selection in ERG
  - Other aspects of ERG selection not described here: multiword expressions and idioms
- Collocational models as adjunct to TFS encoding
- Role of denotation is crucial
- Practical considerations about grammar usability

Final remarks
- Grammar usability: a good broad-coverage grammar should have an account of denotation of closed-class words at least, but probably not within TFS encoding.
- Can we use semantic web languages for non-domain-specific encoding?
- Collocational techniques require much further investigation
- Can semantic space models be related to denotation (e.g., somehow excluding collocational component)?

Idioms
- Idiom entry:

      stand+guard := v_nbar_idiom &
        [ SYNSEM.LOCAL.CONT.RELS <! [ PRED "_stand_v_i_rel" ],
                                    [ PRED "_guard_n_i_rel" ] !> ].

- Idiomatic lexical entries:

      guard_n1_i := n_intr_nospr_le &
        [ STEM < "guard" >,
          SYNSEM [ LKEYS.KEYREL.PRED "_guard_n_i_rel" ] ].

      stand_v1_i := v_np_non_trans_idiom_le &
        [ STEM < "stand" >,
          SYNSEM [ LKEYS.KEYREL.PRED "_stand_v_i_rel" ] ].

Idioms in ERG/LKB
- Account based on Wasow et al (1982), Nunberg et al (1994).
- Idiom entry specifies a set of coindexed MRS relations (coindexation specified by idiom type, e.g., v_nbar_idiom)
- Relations may correspond to idiomatic lexical entries (but may be literal uses: e.g., cat out of the bag, with literal out of the).
- Idiom is recognised if some phrase matches the idiom entry.
- Allows for modification: e.g., stand watchful guard

Messy examples
- among: requires group or plural or ?
  - among the family (BNC)
  - among the chaos (BNC)
- between: requires plural denoting two objects, but not group (?)
  - fudge sandwiched between sponge (BNC)
  - between each tendon (BNC)
  - ? the actor threw a dart between the couple
  - *the actor threw a dart between the audience (even if only two people in the audience)
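
Returning to the collocational account of magnitude adjectives (account 4 in 2(iii)), the combination of corpus frequencies with back-off might be sketched as follows. This is an illustrative sketch, not a description of any ERG or LKB component. The counts are copied from the BNC frequency table in the talk; the neighbour table standing in for a semantic space model is hypothetical, as are the function and variable names. The default to big follows the back-off slide.

```python
# Adjective-noun counts copied from the BNC table in the talk (four nouns).
COUNTS = {
    "rain": {"great": 0, "large": 0, "high": 0, "major": 0,
             "big": 1, "strong": 0, "heavy": 198},
    "winds": {"great": 0, "large": 0, "high": 90, "major": 1,
              "big": 3, "strong": 132, "heavy": 2},
    "number": {"great": 172, "large": 1790, "high": 92, "major": 0,
               "big": 11, "strong": 0, "heavy": 0},
    "problem": {"great": 44, "large": 10, "high": 0, "major": 356,
                "big": 79, "strong": 0, "heavy": 0},
}

# ASSUMPTION: a hand-written stand-in for semantic-space clustering.
# These neighbour pairs are hypothetical, not derived from any corpus.
NEAREST_SEEN_NOUN = {"drizzle": "rain", "gales": "winds"}

DEFAULT_ADJECTIVE = "big"  # the talk suggests defaulting to "big"


def choose_adjective(noun):
    """Pick a magnitude adjective: corpus counts, then cluster, then default."""
    counts = COUNTS.get(noun)
    if counts is None:
        # Back off to a semantically similar noun with corpus evidence.
        counts = COUNTS.get(NEAREST_SEEN_NOUN.get(noun))
    if counts is None or max(counts.values()) == 0:
        return DEFAULT_ADJECTIVE
    # Soft constraint: prefer the most frequent adjective for this noun.
    return max(counts, key=counts.get)


for noun in ["rain", "winds", "number", "drizzle", "widget"]:
    print(noun, "->", choose_adjective(noun))
```

Taking the single most frequent adjective is the crudest possible choice; a generator would more plausibly rank candidate realisations by frequency rather than exclude the others outright, in line with the soft-constraint view.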