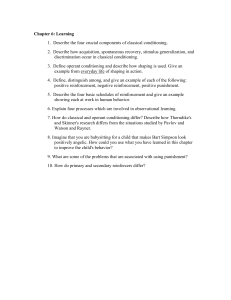

Chapter 8 Notes

advertisement

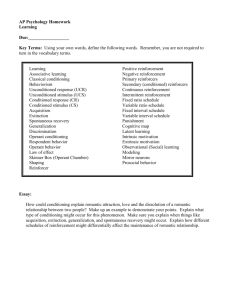

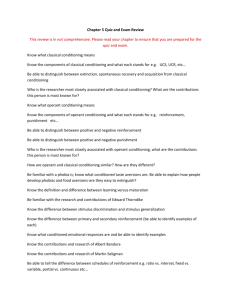

Unit 6, LEARNING notes, pp. 215-253 (Updated for 2011; goes with Ch. 8 in the 2007 book)

Adaptability: the capacity to learn new behaviors that help us cope with changing circumstances
Learning: a relatively permanent change in behavior based on experience
Habituation: an organism’s decreasing response to a stimulus with repeated exposure to it
Associative learning: forming an association between events that occur together, such as a cause and its effect
Example: associating the sight of lightning with the sound of thunder that follows
Conditioning: the process of learning associations
Operant conditioning: learning to associate a response with its consequence
Observational learning: learning from the examples of others
Classical conditioning: learning to associate two stimuli and so anticipate events
Behaviorism: Watson’s view that psychology should be an objective science based on observable behavior

I. Classical conditioning
IVAN PAVLOV, JOHN WATSON (p. 218)
What is classical conditioning and how did Pavlov’s work influence behaviorism?

Developed by Ivan Pavlov using dog salivation experiments
A stimulus becomes associated with an involuntary response
Respondent behavior: an automatic response to certain stimuli
Unconditioned response (UCR or UR): the normal, unlearned response; in Pavlov’s experiment it was the dog’s salivation to food
Unconditioned stimulus (UCS or US): the stimulus that triggers the normal response; in Pavlov’s experiment it was the food
Conditioned response (CR): the response that is learned; in Pavlov’s experiment it was salivation to a metronome, tuning fork, or other sound
Note: the UR and CR are almost always the same behavior.
Conditioned stimulus (CS): an originally neutral stimulus (NS) that, after being paired with the US, triggers the learned response; in Pavlov’s experiment it was the sound
The CS should precede the US by about ½ second.

Acquisition (p. 220)
What are the five major processes in classical conditioning (CC)?
1. Acquisition: the initial formation of the association between the NS and the US, so that the NS becomes a CS that triggers the CR
   Higher-order conditioning: the CS from one experience is paired with a new NS, creating a second CS. Ex: an animal that has learned that a tone predicts food may learn that a light signals the tone.
2. Extinction: if the CS is repeatedly presented without the UCS, the CR gradually diminishes
3. Spontaneous recovery: after a pause, the extinguished CR reappears in weakened form when the CS is presented again
4. Generalization: the tendency to respond similarly to stimuli that resemble the CS
5. Discrimination: the ability to distinguish between the CS and other stimuli

Updating Pavlov (p. 223)
Do cognitive processes and biological constraints affect classical conditioning?
Later research shows that behaviorist principles of learning cannot be freely generalized from one response to another, nor from one species to another.

Cognitive processes
1. Rescorla and Wagner (1972): animals learn to “expect” an unconditioned stimulus; this shows cognition at work: the animal learns how well the first event predicts the second
2. Conditioning an alcoholic with a nauseating drink might not work because they are “aware” of what causes the nausea: the nauseating drug, not the alcohol
Martin Seligman found that dogs given repeated shocks with no opportunity to avoid them developed a passive resignation called learned helplessness. Some dogs just never gave up. He developed the idea of learned optimism and now teaches people to be more optimistic and less helpless.

Biological predispositions
Garcia and Koelling:
1. Animals can learn to avoid a drink that will make them sick, but not when the sickness is announced by a noise; so Pavlov was wrong in claiming that any stimulus could serve as a conditioned stimulus.
2. We are biologically prepared to learn certain associations and not others: we learn to fear snakes, but not flowers
3. Taste aversions result from biology.
The smell and taste of a nauseating food become the CS for sickness.
4. Secondary disgust: an aversion to something that reminds a person of something considered disgusting in its own right. Ex: you won’t eat a piece of chocolate shaped like dog feces.
The biopsychosocial influences on learning (see fig. 6.9, p. 225): genetic predispositions, previous experiences, predictability of associations, culturally learned preferences, and motivations all influence learning.
Many phobias are learned through classical conditioning. Systematic desensitization can be used to unlearn these fears (see pp. 611-612).

Pavlov’s legacy (p. 226)
Virtually all organisms learn by classical conditioning.
Pavlov showed how the process can be studied objectively.

Practical applications
What have been some applications of classical conditioning?
1. Treating drug addiction
2. Treating emotional illness
3. Influencing the immune system’s responsiveness (Robert Ader’s rat experiment: rats were inadvertently conditioned to suppress their immune response after saccharin-sweetened water was paired with a drug that made them nauseous and suppressed immune functioning)
4. Pavlov’s work paved the way for John Watson’s Little Albert study: he conditioned Albert to fear (CR) a white rat (NS, later the CS) by pairing the rat’s presence with a loud noise (UCS). It took only seven pairings. Five days later Albert was also afraid of other furry white animals (generalization). Watson showed how emotions could be conditioned. An opposite effect to the Albert experiment can be created: you can learn not to fear things. Watson later worked in advertising and helped popularize the “coffee break”.

II. Operant conditioning
B. F. SKINNER (p. 228)
What is operant conditioning, and how does it differ from classical conditioning?

Developed by B. F. Skinner; subjects voluntarily respond in a certain way depending on the consequences (reward or punishment)
Respondent behavior: occurs as an automatic response to some stimulus
Operant behavior: behavior that acts upon a situation and produces consequences
Ex: eating in your parents’ living room makes them mad; your eating behavior may change depending on the kind of consequences (yelling or not) you want the situation to have

Skinner’s experiments (p. 229)
Law of effect: E. L. Thorndike’s idea that behavior that is rewarded is more likely to recur (one of psychology’s few laws)
Operant chamber: the Skinner box, used to reward animal behavior; this behavioral technology revealed the principles of behavior control
Shaping: reinforcers guide behavior by gradually rewarding responses as they approach the desired target; important because organisms rarely perform the desired behavior at first
Successive approximations: rewarding responses that come ever closer to the target behavior
Discriminative stimulus: a stimulus that elicits a response after association with reinforcement; these are used to help animals form concepts

Types of reinforcers (p. 230)
What are the basic types of reinforcers?
Reinforcers: anything that increases the chance of a behavior occurring again
1. Positive: presenting things the organism desires. Ex: rewards like praise, money, and food
2. Negative: the removal (-) of aversive events. Ex: freeing someone from prison; taking a medicine to relieve a cold
3. Primary: things that satisfy inborn biological needs. Ex: food, water, warmth
4. Secondary (also called “conditioned”): learned things that are strengthened by association with primary reinforcers
   Ex: money that can be used to buy the primary reinforcer, food
   Ex: praise, high grades, and smiles, which can lead to the primary emotion, happiness

Immediate and delayed (p. 231)
Unlike rats, humans do respond to delayed reinforcement
Four-year-olds have been shown to be able to delay gratification (example: Walter Mischel’s marshmallow experiment)
But drug users succumb to immediate gratification; the later ill effects are often not enough to prevent addiction
Teens fall for the immediate gratification of sex over safe or saved sex

Schedules of reinforcement (pp. 232-233)
How do different reinforcement schedules affect behavior?
a. Continuous: the desired behavior is reinforced every time; learning is quick, but extinction can also happen quickly once reinforcement stops. Ex: a teacher awarding points for every assignment
b. Partial: the behavior is reinforced only part of the time; acquisition is slower, but the behavior is more resistant to extinction
c. Fixed ratio: reinforcement after a fixed number of responses. Ex: getting candy after every third floor washing
d. Variable ratio: reinforcement after an unpredictable number of responses. Ex: getting candy after the third floor washing, then the 2nd, then the 5th
Ratio schedules produce high rates of responding, because reinforcers increase as the number of responses increases.
e. Fixed interval: reinforcement for the first response after a fixed amount of time. Ex: getting candy for the first floor washing after three hours have passed
f. Variable interval: reinforcement for the first response after an unpredictable amount of time. Ex: getting candy for a floor washing after two hours have passed, then 3, then 5
Variable schedules produce more consistent responding.

Punishment (p. 234)
How does punishment affect behavior?
The opposite of reinforcement: the goal is to decrease behavior, not to increase it
John Darley: swift and sure punishment is the most effective
Positive punishment: administering something the subject doesn’t like. Example: spanking; a parking ticket
Negative punishment: withdrawing something desirable. Example: timeout (the child can get out of the timeout if they agree to comply with parent requests); revoking privileges

Punishment pros and cons
1. It successfully stops behavior
2. Drawback: the behavior is only suppressed and may reappear later when it seems safe
3. Punishment increases aggression because it models aggression as a way to cope
4. It also teaches discrimination
5. It can teach fear
6. If you use it, pair it with reinforcement: notice the individual doing what you want and reward them (like the Norski Nod!)

Extending Skinner (p. 235)
Do cognitive processes and biological constraints affect operant conditioning?
Just before his death, in his last speech, Skinner maintained that behavior, not cognition, is the key in psychology. But research has shown that cognitive processes are much more important than he allowed, even in conditioning.
Latent learning: learning that is demonstrated only when it is needed; Tolman and Honzik did the research. Ex: rats that had explored a maze without reward showed how well they knew it by going directly to the food once it was placed there
Cognitive map: a mental image of your surroundings; the rats in Tolman’s studies developed one; he showed there is more to learning than just association; cognition is also at work

Insight learning (p. 236)
The solution suddenly appears in the mind.

Intrinsic motivation (p. 236)
Performing an activity for its own sake
Edward Deci showed that rewards can undermine motivation: if you already like doing something and are then rewarded for the activity, you may lose interest and only perform it for the reward.
Extrinsic motivation: behaving in a certain way to receive external rewards
Overjustification effect: giving a reward for something the organism already likes to do; here, the extrinsic motivator overrides intrinsic interest in the task
This concept is related to cognitive dissonance (Leon Festinger’s concept, p. 648): to reduce the difference between an attitude and an action, people will often change their attitude to support their action.
The effect is unfavorable because the organism will lose interest in the activity. Ex: paying someone to do a puzzle they already like doing
Some researchers claim rewards can raise performance and spark creativity. Don’t use them as bribes or to control, but to signal a good performance.

Biological predispositions (p. 237)
Skinner’s students Keller Breland and Marian Breland studied animals. Animals can be “taught” certain human-like behaviors using operant conditioning, but they then revert to their normal ways; this is known as “instinctive drift”: trained animals act naturally despite their training.
Superstitions are behaviors that are accidentally reinforced.

Practical applications of operant conditioning: Skinner’s legacy (p. 238)
1. Improving student performance: computers can stand in for teachers to reinforce learned behavior properly
2. Improving work productivity
   Make reinforcement immediate
   Set well-defined goals that are achievable
3. Helping shape child behaviors
   Reinforce good behavior
   Ignore whining
   Explain misbehaviors and give timeouts
4. Improving athletic skills
5. Self-improvement: specify goals, monitor, reinforce, reduce rewards. Neal Miller’s concept of biofeedback can be used here; it is a strategy for monitoring changes in physiological responses.

Contrasting classical and operant conditioning
See table 6.4, p. 241

III. Learning by observation
ALBERT BANDURA (p. 242)
What is observational learning and how is it enabled by mirror neurons?
Observational learning (also called social learning): researched by Albert Bandura from the 1960s to the present
Modeling: watching specific behaviors of others and imitating them; we tend to mimic models who are similar to us
His famous experiment was the Bobo Doll experiment. Children acted aggressively after watching violent models, including models shown on film.

Self-efficacy and learning
1. Albert Bandura: self-efficacy is your belief about your ability to learn and to make changes in your life.
2. People high in self-efficacy have more control over their lives.
3. They take information from the environment and use it to improve their lives.
4. Summary: if you believe you can learn to do something, you are more likely to achieve it.

Mirrors in the brain (p. 243)
Mirror neurons: frontal lobe neurons that fire when performing certain actions or when watching others perform them; they allow for imitation, language learning, and empathy; discovered by Giacomo Rizzolatti
We have a mirror neuron system that supports empathy (emotional understanding of others) and imitation.
These neurons help us infer the mental states of others and give rise to a theory of mind. People with autism show impairment in this ability (see pp. 422-423).
Mirror neurons underlie our social behavior.

Bandura’s experiments (p. 244)
Bandura was looking at vicarious learning: learning from seeing someone else rewarded for a behavior.
In one version of the Bobo Doll experiment, the adult model was reinforced for aggressive behavior, and the children who saw this were far more likely to act aggressively themselves. This was in comparison to other groups of children who saw the adult punished in another ending, or saw no consequence in a third version. The effects were long lasting.

Applications
Behavior modeling: used by businesses to train employees

Positive observational learning
Prosocial behaviors: actions that are beneficial, constructive, and not violent.
These behaviors can prompt similar behaviors in others; thus, prosocial.
Research experiments show that children often do what their models (usually parents) do.
Hypocritical parents say one thing and do another. Their children will say what they say and do what they do.

Antisocial effects (p. 247)
Antisocial models can have antisocial effects
Abusive parents might have aggressive children

Television and observational learning
George Gerbner’s studies: television does not reflect the real world
Correlational studies link violence viewing with violent behavior (Craig Anderson’s research), but correlation does not mean causation.
Leonard Eron’s studies: the more hours of television children watch, the more they are at risk for aggression and crime
Myers asserts that the consensus is that violence on TV leads to aggressive behavior among children and teens. But this study is from 1982. The Supreme Court, in 2011, found a much more mixed picture in rejecting this claim.
Myers cites two factors:
One factor is imitation: kids copy what they see on TV.
The other is desensitization: exposure to violence desensitizes the viewer, and watching cruelty promotes indifference.

Key terms: pp. 251-252 (see study guide)
AP Quiz: pp. 252-253