A Tale of Market Efficiency

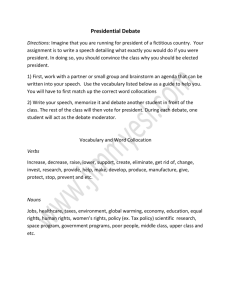
