Chapter 6 Reliability


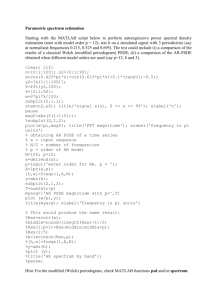

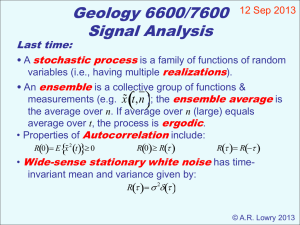

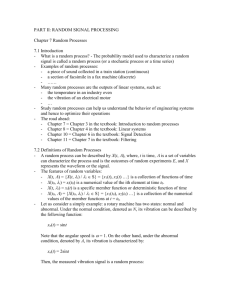

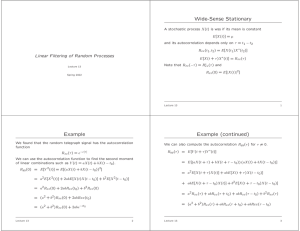

Charles Spearman - a British psychologist who first recognized the need for test "reliability."

3 types of reliability analysis: 1. test-retest, 2. alternate forms, 3. internal consistency.

1. Test-Retest - one group takes the same test at two different times. They should score similarly both times relative to each other.
2. Alternate Forms - like test-retest, BUT the questions are slightly different to eliminate "Carryover Effects" (having taken the test once affects scores on the second administration).
3. Internal Consistency - one administration only; the test is split into two halves. If all items are drawn from the same domain, then the two halves should correlate highly with each other.

"X = T + E" - True Score (Reliability) Theory. Any raw score (X) is made up of a True Score or "stable characteristics" (T) plus some Error (E).

"T / X" - Proportion of the test score representing differences in "stable characteristics."

"E / X" - Proportion of the test score representing "error."

We will never know the exact proportion for any one person, BUT we can calculate it in general for a group of people.

Reliability Coefficient ($r_{xx}$) - most simply, the test correlated with itself using Pearson's r. Use meaningful subscripts like $r_{x_1 x_2}$ to keep things clear.

If the above is true, then for a group of scores the variances add up the same way:

$$\sigma^2_X = \sigma^2_T + \sigma^2_E \qquad \text{and} \qquad r_{xx} = \frac{\sigma^2_T}{\sigma^2_X}$$

Example with a reliable test: $10 = 8 + 2$, so $r_{xx} = 8 / 10 = .8$.

Factors Affecting Reliability (actual or apparent)

A. Inconsistent Performance - (with test-retest or alternate forms) makes the test "appear" less reliable than it is.

   1. True Score Change - somehow actual knowledge has changed at the second testing (carryover effect).
   2. Error Score Change - something about the test situation (noisy?) or the person (tired?) is different at the second testing.

B. Sampling Factors (item) - the number and nature of the items affect reliability.

   1. Test Length - as the test gets longer, it gets more reliable.
   2. Item Representativeness - the more completely the items cover (capture) the domain, and the fewer items that don't really belong to the domain, the higher the reliability.

C. Statistical Factors - characteristics of your samples (people).

   1. Range Restriction - using a sample more "restricted" than the target audience of the test leads to an "underestimation" of the actual reliability.
   2. Range Extension (Expansion) - using a sample "more varied" than the target audience of the test leads to an "overestimation" of the actual reliability.

Types of Reliability Analysis

Using Two Sets of Scores

1. Test-Retest Reliability - the same group takes the same test at two times (1 & 2); the two sets of scores are correlated using Pearson's r (means and SD formula).
2. Alternate Forms Reliability - the same group takes two different but equivalent versions of the same test at two times (1 & 2); the two sets of scores are correlated using Pearson's r (means and SD formula).

Using One Set of Scores

3. Internal Consistency Reliability - tells us something different: the extent to which all items tap the same domain.

A. Split Half Reliability - simplest method. The test (e.g., 100 items) is split into two halves (50 items each, often odd-even). The two halves are correlated using Pearson's r. When doing odd-even split half, set up the table differently than in the book on pg. 195: have the names going across the top (not down the side).

NOTE: because each half is only 50 items long (not 100), the obtained r describes a half-length test and needs to be "CORRECTED."

Spearman Brown Prophecy Formula - when the "correction factor" (N) is set at "2," it corrects the split half reliability coefficient.

$$\text{Corrected } r_{oe} = \frac{(2)(r)}{1 + (2 - 1)(r)}$$

B. Guttman Formula - use of Pearson's r for split half reliability assumes the variances of the two halves are about equal. If they are not, do not use it. The Guttman formula can be used instead, where $\sigma^2_O$ and $\sigma^2_E$ are the variances of the odd and even half scores and $\sigma^2_X$ is the variance of the total scores.

$$\text{Guttman } r_{oe} = 2\left[1 - \frac{\sigma^2_O + \sigma^2_E}{\sigma^2_X}\right]$$

C. Cronbach's Alpha (and the two KR formulas) - "compare all possible split halves." Item variances ($\sigma^2_i$) are summed. This is for LIKERT (continuous) items. K = number of test items.

$$\alpha \; r_{xx} = \frac{K}{K - 1}\left(1 - \frac{\sum \sigma^2_i}{\sigma^2_X}\right)$$

D. Kuder Richardson 20 (KR-20) - this is for DICHOTOMOUS (yes-no) items. The proportion of persons correct / endorsing each item "p" and the proportion incorrect / not endorsing each item "q" are multiplied, and the products are summed.

$$\text{KR-20 } r_{xx} = \frac{K}{K - 1}\left(\frac{\sigma^2_X - \sum pq}{\sigma^2_X}\right)$$

E. Kuder Richardson 21 (KR-21) - this is for DICHOTOMOUS (yes-no) items ONLY IF ITEMS ARE OF EQUAL DIFFICULTY. Easy, as it uses only the mean ($\bar{X}$) and the total score variance.

$$\text{KR-21 } r_{xx} = 1 - \frac{\bar{X}(K - \bar{X})}{K(\sigma^2_X)}$$

The "alpha" formulas require NO split half CORRECTION.

Negative Reliabilities - occasionally result when using one of the "alpha" formulas. It means the domain is not as well defined as it should be. If this happens, use regular split half reliability. Even more rarely, this happens with regular split half; then the domain is really not well defined and $r_{xx}$ cannot be calculated.

Which Reliability Formula to Use? - If we are measuring a single, well focused domain such as a personality trait (shyness), then one of the "alpha" formulas will likely be superior. HOWEVER, if we are measuring a broader domain (a cumulative final exam), then simple split half may be the better way to go.

Standard Error of Measurement and Confidence Intervals - tell us how close we think we have come (and with what level of confidence) to identifying the person's "true score."

Standard Error of Measurement - the standard deviation of the hypothetical distribution of possible X scores a person might have obtained on a given testing.

$$\text{SEM} = \sigma_X \sqrt{1 - r_{xx}}$$

Confidence Interval - two values (upper and lower bounds) define an interval (centered on the score X). It tells us with what degree of certainty (e.g., 95%) we think the person's "true score" lies between the upper and lower bound values. Most IQ tests are reported in terms of this interval.
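As a minimal sketch of the SEM formula above, the snippet below computes the standard error of measurement from a test's standard deviation and its reliability. The function name `standard_error_of_measurement` and the example values (an SD of 15 and a reliability of .91) are illustrative assumptions, not figures from these notes.

```python
import math


def standard_error_of_measurement(sd, rxx):
    """Return SEM = SD * sqrt(1 - rxx) for a test with the given SD and reliability."""
    if not 0.0 <= rxx <= 1.0:
        raise ValueError("rxx must be between 0 and 1")
    return sd * math.sqrt(1.0 - rxx)


# Hypothetical example: SD = 15, reliability = .91
sem = standard_error_of_measurement(sd=15.0, rxx=0.91)
print(round(sem, 2))
```

With these assumed values the SEM comes out to about 4.5 score points; a perfectly reliable test (reliability of 1) would give an SEM of 0.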
$$68\%\ \text{CI} = X \pm 1(\text{SEM}) \qquad 95\%\ \text{CI} = X \pm 1.96(\text{SEM})$$

Factors Affecting CI Width:

1. We want our CIs to be "Narrow."
2. Higher levels of confidence (e.g., 95% vs. 90%) mean a wider interval.
3. Lower reliability means a wider interval.
4. More variability (SD; look back at the SEM formula) means a wider interval.

Other Uses for the Spearman Brown Formula - Increasing Reliability

1. What will the new reliability be if we make our test (N) times longer? We choose (N).

$$\text{new } r_{xx} = \frac{(N)(\text{current } r_{xx})}{1 + (N - 1)(\text{current } r_{xx})}$$

2. How many times longer (N) do we need to make the test to achieve a desired reliability? We choose the desired $r_{xx}$.

$$N = \frac{(\text{desired } r_{xx})(1 - \text{current } r_{xx})}{(\text{current } r_{xx})(1 - \text{desired } r_{xx})}$$
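The confidence interval and Spearman Brown formulas above can be sketched in a few lines. This is only an illustration: the function names, the obtained score of 100, the SEM of 4.5, and the reliabilities of .70 and .90 are all assumed values chosen for the example.

```python
def confidence_interval(score, sem, z=1.96):
    """Return (lower, upper) bounds centered on the obtained score X.

    z = 1.0 gives the 68% interval, z = 1.96 the 95% interval.
    """
    margin = z * sem
    return score - margin, score + margin


def spearman_brown(current_rxx, n):
    """Predicted reliability when the test is made n times longer."""
    return (n * current_rxx) / (1.0 + (n - 1.0) * current_rxx)


def lengthening_factor(current_rxx, desired_rxx):
    """How many times longer the test must be to reach desired_rxx."""
    return (desired_rxx * (1.0 - current_rxx)) / (current_rxx * (1.0 - desired_rxx))


# Hypothetical values for illustration only
lower, upper = confidence_interval(score=100.0, sem=4.5)            # 95% CI
corrected = spearman_brown(current_rxx=0.70, n=2)                   # split half correction
n_needed = lengthening_factor(current_rxx=0.70, desired_rxx=0.90)   # target .90

print(round(lower, 2), round(upper, 2))
print(round(corrected, 2), round(n_needed, 2))
```

With these assumed inputs the 95% interval runs from about 91.18 to 108.82, doubling a test with a reliability of .70 predicts about .82, and reaching .90 would take a test roughly 3.86 times as long. Feeding `n_needed` back into `spearman_brown` returns the desired .90, which is a quick check that the two formulas are inverses of each other.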
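The internal consistency formulas described earlier (Cronbach's alpha and KR-20) can also be sketched in code. The data set below is made up (5 people, 4 yes-no items), the function names are hypothetical, and population variances (dividing by the number of people) are an assumption made here so that each item's variance equals p times q; the notes do not say which variance to use.

```python
from statistics import pvariance


def cronbach_alpha(scores):
    """Alpha from a list of rows (one row per person, one column per item)."""
    k = len(scores[0])
    total_var = pvariance([sum(row) for row in scores])
    if k < 2 or total_var == 0:
        raise ValueError("need at least 2 items and non-zero total score variance")
    # Sum of the item variances (population variance, as assumed above)
    item_var_sum = sum(pvariance([row[i] for row in scores]) for i in range(k))
    return (k / (k - 1)) * (1.0 - item_var_sum / total_var)


def kr20(scores):
    """KR-20 from rows of 0/1 (dichotomous) item responses."""
    n = len(scores)
    k = len(scores[0])
    total_var = pvariance([sum(row) for row in scores])
    if k < 2 or total_var == 0:
        raise ValueError("need at least 2 items and non-zero total score variance")
    pq_sum = 0.0
    for i in range(k):
        p = sum(row[i] for row in scores) / n   # proportion correct / endorsing
        pq_sum += p * (1.0 - p)                 # q = 1 - p
    return (k / (k - 1)) * ((total_var - pq_sum) / total_var)


# Made-up responses: 5 people (rows) by 4 dichotomous items (columns)
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]

alpha = cronbach_alpha(responses)
kr = kr20(responses)
print(round(alpha, 2), round(kr, 2))
```

For this made-up data both functions give about .80. They agree because, for 0/1 items, the sum of p times q is the sum of the item variances, so KR-20 is Cronbach's alpha applied to dichotomous items.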