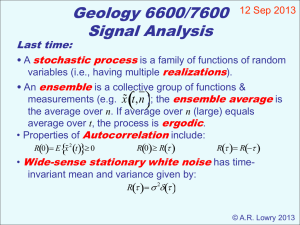

Random Processes


PART II: RANDOM SIGNAL PROCESSING

Chapter 7 Random Processes

7.1 Introduction

- What is a random process? The probability model used to characterize a random signal is called a random process (or a stochastic process or a time series).

- Examples of random processes:

- a piece of sound collected in a train station (continuous)

- a section of facsimile in a fax machine (discrete)

- ……

- Many random processes are the outputs of linear systems, such as:

- the temperature in an industrial oven

- the vibration of an electrical motor

- …

- Studying random processes helps us understand the behavior of engineering systems and hence optimize their operation.

- The road ahead:

- Chapter 7 = Chapter 3 in the textbook: Introduction to random processes

- Chapter 8 = Chapter 4 in the textbook: Linear systems

- Chapter 10 = Chapter 6 in the textbook: Signal Detection

- Chapter 11 = Chapter 7 in the textbook: Filtering

7.2 Definitions of Random Processes

- A random process can be described by $X(t, \lambda)$, where $t$ is time, $\lambda$ is the outcome of a random experiment $E$ (a set of variables that characterize the process), and $X$ represents the waveform or the signal.

- The features of a random process:

- $X(t, \lambda) = \{X(t, \lambda_i) \mid \lambda_i \in S\} = \{x_1(t), x_2(t), \ldots\}$ is a collection of functions of time.

- $X(t_0, \lambda_i) = x_i(t_0)$ is the numerical value of the $i$th member function at time $t_0$.

- $X(t, \lambda_i) = x_i(t)$ is a specific member function, i.e., a deterministic function of time.

- $X(t_0, \lambda) = \{X(t_0, \lambda_i) \mid \lambda_i \in S\} = \{x_1(t_0), x_2(t_0), \ldots\}$ is the collection of the numerical values of the member functions at $t = t_0$.

- Let us consider a simple example: a rotary machine has two states, normal and abnormal. Under the normal condition, denoted N, its vibration can be described by the following function:

$$x_n(t) = \sin t$$

Note that the angular speed is $\omega = 1$. On the other hand, under the abnormal condition, denoted A, its vibration is characterized by:

$$x_a(t) = 2\sin t$$

Then, the measured vibration signal is a random process:

$$X(t, \lambda) = \{X(t, \lambda_i) \mid \lambda_i \in \{N, A\}\} = \{x_n(t), x_a(t)\} = \{\sin t, 2\sin t\}$$

In particular:

$$X(t, N) = \sin t, \qquad X(t, A) = 2\sin t$$

$$X(0, \lambda) = \{0, 0\}, \qquad X(\pi/2, \lambda) = \{1, 2\}$$

$$X(0, N) = 0, \quad X(0, A) = 0, \quad X(\pi/2, N) = 1, \quad X(\pi/2, A) = 2$$
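The ensemble view of this example can be sketched in a few lines of Python. This is a minimal illustration, not part of the original notes: the dictionary `MEMBERS`, the state labels `"N"` and `"A"`, and the function `X` are names chosen here. Calling `X(t)` without a state returns the values of all member functions at time t, i.e., the possible values of the random variable X(t).

```python
import math

# Member functions of the two-state vibration example (angular speed 1).
MEMBERS = {
    "N": lambda t: math.sin(t),      # normal condition:   x_n(t) = sin t
    "A": lambda t: 2 * math.sin(t),  # abnormal condition: x_a(t) = 2 sin t
}


def X(t, state=None):
    """Evaluate X(t, state) for the two-state example.

    With a state, return one number: the value of that member function at t.
    Without a state, return the values of all member functions at t,
    i.e. the possible values of the random variable X(t).
    """
    if state is not None:
        return MEMBERS[state](t)
    return {s: f(t) for s, f in MEMBERS.items()}


print(X(0))                 # both member functions are 0 at t = 0
print(X(math.pi / 2))       # 1 for state N and 2 for state A
print(X(math.pi / 2, "A"))  # a single numerical value
```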

- Note that at each specific time, the random process becomes a random variable. Hence, we can only calculate its probability. For example, if the machine is equally likely to be in the normal and abnormal states, then:

$$P(X(\pi/2) = 1) = \tfrac{1}{2}$$

- This is called the probabilistic structure of the random process. Similar to the (informal) definition of probability, let $n$ be the total number of trials at $t = t_0$, and $k$ be the number of trials that result in $X(t_0, \lambda) = a$; then:

$$P(X(t_0, \lambda) = a) = \lim_{n \to \infty} \frac{k}{n}$$
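The relative-frequency definition can be checked by simulation. The sketch below assumes the two states are equally likely and that each trial draws one state independently; `estimate_probability`, its parameters, and the fixed seed are hypothetical choices for illustration. As n grows, k/n for the event X(π/2) = 1 should settle near 1/2.

```python
import math
import random


def estimate_probability(t0, a, n_trials, p_normal=0.5, seed=0):
    """Relative-frequency estimate k/n of P(X(t0) = a) for the two-state
    machine, assuming state N occurs with probability p_normal."""
    rng = random.Random(seed)
    k = 0
    for _ in range(n_trials):
        amplitude = 1.0 if rng.random() < p_normal else 2.0  # N -> 1, A -> 2
        value = amplitude * math.sin(t0)                      # X(t0) for this trial
        if math.isclose(value, a, abs_tol=1e-12):             # tolerant comparison
            k += 1
    return k / n_trials


for n in (100, 10_000, 100_000):
    print(n, estimate_probability(math.pi / 2, 1.0, n))
```

At t0 = 0 every trial gives X(0) = 0, so the estimate of P(X(0) = 0) is exactly 1 for any n.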

- We can define the joint and conditional probabilities in a similar way.

The formal definition of random processes is based on probability: let $S$ be the sample space of a random experiment and $t$ be a variable that can take values in the set $\Gamma \subseteq R^1$ (the real line). A real-valued random process $X(t)$, $t \in \Gamma$, is then a measurable function on $\Gamma \times S$ that maps $\Gamma \times S$ onto $R^1$. It can be described by its ($n$th-order) distribution function:

$$F_{X(t_1), X(t_2), \ldots, X(t_n)}(x_1, x_2, \ldots, x_n) = P[X(t_1) \le x_1, \ldots, X(t_n) \le x_n] \quad \text{for all } n \text{ and } t_1, \ldots, t_n \in \Gamma$$

- Random processes can be classified according to the characteristics of $t$ and of the random variable $X(t)$ at time $t$:

| | $t$ is continuous | $t$ is discrete |
|---|---|---|
| $X(t)$ is continuous | Continuous random process | Continuous random sequence |
| $X(t)$ is discrete | Discrete random process | Discrete random sequence |

- Random processes can also be classified based on their probabilistic structure. If the probability distribution of a random process does not depend on time $t$, the process is called stationary; otherwise, it is called nonstationary. That is:

if $P(X(t) \le x) = P(X \le x)$ for all $t$, then $X(t)$ is stationary; else $X(t)$ is nonstationary.

This will be discussed further in the subsequent sections.

Another important type of random process is the Markov process. Given a random process $X(t)$, if

$$P[X(t_k) \mid X(t_{k-1}), \ldots, X(t_1)] = P[X(t_k) \mid X(t_{k-1})] \quad \text{for all } t_k > t_{k-1} > \cdots > t_1,$$

then it is a Markov process. The most important feature of a Markov process is that its current probability depends only on the immediate past.

For the example above, the random process is a continuous random process; it is nonstationary but Markov.
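To see why the two-state example is nonstationary, one can compute its exact first-order distribution at two different times. This sketch assumes equal state probabilities, as in the text; `first_order_pmf` is a hypothetical helper, and values are rounded only so that equal floating-point values share one key.

```python
import math


def first_order_pmf(t, p_normal=0.5):
    """Exact first-order distribution of X(t) for the two-state machine,
    returned as {value: probability}."""
    pmf = {}
    outcomes = ((math.sin(t), p_normal),          # state N: sin t
                (2 * math.sin(t), 1 - p_normal))  # state A: 2 sin t
    for value, prob in outcomes:
        key = round(value, 12)
        pmf[key] = pmf.get(key, 0.0) + prob
    return pmf


print(first_order_pmf(0.0))          # a single value, 0, with probability 1
print(first_order_pmf(math.pi / 2))  # values 1 and 2, probability 1/2 each
```

Because the two distributions differ, P(X(t) ≤ x) depends on t, which is exactly the nonstationarity condition.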

7.3 Representation of Random Processes

- Random processes can be described by their probability structures. There are other ways to describe random processes, and these descriptions may be easier to use when solving certain problems.

- The first alternative is the joint probability distribution. In general:

- The first order: $P(X(t_1) \le a_1)$ represents the instantaneous amplitude.

- The second order: $P(X(t_1) \le a_1, X(t_2) \le a_2)$ represents the structure of the signal (which is related to the spectrum discussed in subsequent sections).

- The higher ($k$th) order: $P(X(t_1) \le a_1, X(t_2) \le a_2, \ldots, X(t_k) \le a_k)$ represents the details of the signal.

- The joint probability representation is easy to use. However, two different processes may have the same joint probability distributions.

- The other alternative is the analytical form. For example:

$$X(t) = A\sin(t + \Theta)$$

where $A$ follows a normal distribution and $\Theta$ follows a uniform distribution. In general, the analytical-form representation is unique, but it is not always possible to find an analytical form.

When two or more random processes are involved, say X(t) and Y(t), the following

concepts are important:

- The joint distribution:

$$P[X(t_1) < x_1, \ldots, X(t_n) < x_n, Y(t'_1) < y_1, \ldots, Y(t'_n) < y_n]$$

- The cross-correlation function:

$$R_{XY}(t_1, t_2) = E\{X^*(t_1)\,Y(t_2)\}$$

- The cross-covariance function:

$$C_{XY}(t_1, t_2) = R_{XY}(t_1, t_2) - \mu_X^*(t_1)\,\mu_Y(t_2)$$

- The correlation coefficient:

$$r_{XY}(t_1, t_2) = \frac{C_{XY}(t_1, t_2)}{\sqrt{C_{XX}(t_1, t_1)\,C_{YY}(t_2, t_2)}}$$
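The cross-correlation, cross-covariance, and correlation coefficient are ensemble averages, so they can be estimated from many realizations, pairing X(t1) and Y(t2) taken from the same realization. The sketch below assumes real-valued data (so the conjugate has no effect) and a hypothetical joint model in which Y(t2) = 0.8 X(t1) + 0.6 W with X(t1) and W independent N(0, 1), which gives a true correlation coefficient of 0.8. The helper `cross_stats` is a name chosen for illustration.

```python
import math
import random


def cross_stats(xs, ys):
    """Ensemble-average estimates of R_XY(t1, t2), C_XY(t1, t2) and r_XY(t1, t2)
    from paired samples xs[i] = X(t1), ys[i] = Y(t2) of realization i.
    Real-valued data is assumed, so the complex conjugate is omitted."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    r_xy = sum(x * y for x, y in zip(xs, ys)) / n   # E{X(t1) Y(t2)}
    c_xy = r_xy - mx * my                           # subtract the product of means
    c_xx = sum(x * x for x in xs) / n - mx * mx     # C_XX(t1, t1)
    c_yy = sum(y * y for y in ys) / n - my * my     # C_YY(t2, t2)
    return r_xy, c_xy, c_xy / math.sqrt(c_xx * c_yy)


# Hypothetical ensemble: Y(t2) = 0.8 X(t1) + 0.6 W, with X(t1), W ~ N(0, 1).
rng = random.Random(1)
xs = [rng.gauss(0.0, 1.0) for _ in range(200_000)]
ys = [0.8 * x + 0.6 * rng.gauss(0.0, 1.0) for x in xs]
print(cross_stats(xs, ys))
```

Since both means are zero in this model, the estimates of R_XY and C_XY nearly coincide; with nonzero means they would differ by the product of the means.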

Equality: X(t) and Y(t) are equal if their respective member functions are identical

for each outcome of S.

Uncorrelated: $X(t)$ and $Y(t)$ are uncorrelated if $C_{XY}(t_1, t_2) = 0$ for all $t_1, t_2$.

Orthogonal: $X(t)$ and $Y(t)$ are orthogonal if $R_{XY}(t_1, t_2) = 0$ for all $t_1, t_2$.

Independent: $X(t)$ and $Y(t)$ are independent if

$$P[X(t_1) < x_1, \ldots, X(t_n) < x_n, Y(t'_1) < y_1, \ldots, Y(t'_n) < y_n] = P[X(t_1) < x_1, \ldots, X(t_n) < x_n]\; P[Y(t'_1) < y_1, \ldots, Y(t'_n) < y_n]$$

for all $t_1, t_2, \ldots$ and $t'_1, t'_2, \ldots$.

7.4 Some Special Random Processes

(1) Gaussian processes

- The most commonly used random process is the Gaussian process. A Gaussian process $X(t)$, $t \in \Gamma$, can be described by its probability density function:

$$f_X(x) = \frac{1}{(2\pi)^{n/2}\,|\Sigma_X|^{1/2}} \exp\left[-\frac{1}{2}(x - \mu_X)^T \Sigma_X^{-1} (x - \mu_X)\right]$$

where $t_1, t_2, \ldots, t_n \in \Gamma$, $x = [x_1, x_2, \ldots, x_n]^T$, and $x_i = X(t_i)$. In addition, $\mu_X$ is the mean vector and $\Sigma_X$ is the covariance matrix.
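The density formula can be evaluated directly. This standard-library sketch uses a Cholesky factorization Σ_X = L Lᵀ, a choice made here (not in the notes) because it yields both |Σ_X| (from the diagonal of L) and the quadratic form (by forward substitution) without forming Σ_X⁻¹ explicitly. The helpers `cholesky` and `gaussian_pdf` are hypothetical names, and Σ_X is assumed symmetric positive definite.

```python
import math


def cholesky(S):
    """Lower-triangular L with S = L L^T (S symmetric positive definite)."""
    n = len(S)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = S[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return L


def gaussian_pdf(x, mu, S):
    """Multivariate Gaussian density f_X(x) with mean vector mu and
    covariance matrix S."""
    n = len(x)
    L = cholesky(S)
    # Forward substitution: solve L z = x - mu, so that
    # z . z = (x - mu)^T S^{-1} (x - mu).
    z = []
    for i in range(n):
        z.append((x[i] - mu[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    quad = sum(zi * zi for zi in z)
    log_det = 2.0 * sum(math.log(L[i][i]) for i in range(n))  # log |S|
    return math.exp(-0.5 * quad - 0.5 * log_det) / (2 * math.pi) ** (n / 2)


# Density at the mean of a hypothetical correlated pair.
print(gaussian_pdf([0.0, 1.0], [0.0, 1.0], [[1.0, 0.5], [0.5, 1.0]]))
```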

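Gaussian processes have several important features. Let $X$ be partitioned at $k$:

$$X = \begin{bmatrix} X_1 \\ X_2 \end{bmatrix}, \quad X_1 = \begin{bmatrix} x_1 \\ \vdots \\ x_k \end{bmatrix}, \quad X_2 = \begin{bmatrix} x_{k+1} \\ \vdots \\ x_n \end{bmatrix}, \quad \mu_X = \begin{bmatrix} \mu_{X_1} \\ \mu_{X_2} \end{bmatrix}, \quad \Sigma_X = \begin{bmatrix} \sigma_{11} & \cdots & \sigma_{1n} \\ \vdots & \ddots & \vdots \\ \sigma_{n1} & \cdots & \sigma_{nn} \end{bmatrix} = \begin{bmatrix} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{bmatrix}$$

where $\Sigma_{11}$ is $k \times k$ and $\Sigma_{22}$ is $(n-k) \times (n-k)$. Then: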

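(a) Independence: if $\sigma_{ij} = 0$ for all $i \ne j$, then the components of $X$ are mutually independent.

(b) Partition at $k$: $X_1$ has a $k$-dimensional multivariate Gaussian distribution with mean $\mu_{X_1}$ and covariance $\Sigma_{11}$.

(c) Reduction: if $A$ is a $k \times n$ matrix of rank $k$, then $Y = AX$ has a $k$-variate Gaussian distribution with

$$\mu_Y = A\mu_X, \qquad \Sigma_Y = A\Sigma_X A^T$$

(d) Conditional probability: the conditional probability density function of $X_1$ given $X_2 = x_2$ is a $k$-dimensional multivariate Gaussian distribution with the mean and covariance defined below:

$$\mu_{X_1 \mid X_2} = E\{X_1 \mid X_2 = x_2\} = \mu_{X_1} + \Sigma_{12}\Sigma_{22}^{-1}(x_2 - \mu_{X_2})$$

$$\Sigma_{X_1 \mid X_2} = \Sigma_{11} - \Sigma_{12}\Sigma_{22}^{-1}\Sigma_{21}$$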
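Properties (c) and (d) are plain matrix formulas, so they translate directly into code. The sketch below uses only the standard library; the helpers `matmul`, `transpose`, `inverse` (Gauss-Jordan elimination with partial pivoting), `reduce_gaussian`, and `condition_gaussian` are hypothetical names, and the numbers in the usage lines (μ = [0, 1], Σ with off-diagonal 0.5, observed x2 = 2) are illustrative values, not taken from the notes. Σ22 is assumed nonsingular.

```python
def matmul(A, B):
    """Matrix product of lists-of-rows A (m x p) and B (p x q)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def inverse(A):
    """Gauss-Jordan inverse with partial pivoting (A must be nonsingular)."""
    n = len(A)
    M = [list(map(float, row)) + [float(i == j) for j in range(n)] for i, row in enumerate(A)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))  # pivot row
        M[c], M[p] = M[p], M[c]
        piv = M[c][c]
        M[c] = [v / piv for v in M[c]]
        for r in range(n):
            if r != c:
                f = M[r][c]
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[c])]
    return [row[n:] for row in M]


def reduce_gaussian(A, mu, S):
    """Property (c): mean A mu and covariance A S A^T of Y = A X."""
    mu_y = [row[0] for row in matmul(A, [[m] for m in mu])]
    return mu_y, matmul(matmul(A, S), transpose(A))


def condition_gaussian(mu, S, k, x2):
    """Property (d): mean and covariance of X1 = X[:k] given X2 = X[k:] = x2."""
    S11 = [row[:k] for row in S[:k]]
    S12 = [row[k:] for row in S[:k]]
    S21 = [row[:k] for row in S[k:]]
    S22 = [row[k:] for row in S[k:]]
    G = matmul(S12, inverse(S22))                      # Sigma_12 Sigma_22^{-1}
    d = [[xi - mi] for xi, mi in zip(x2, mu[k:])]      # x2 - mu_X2 as a column
    mean = [m + g[0] for m, g in zip(mu[:k], matmul(G, d))]
    GS21 = matmul(G, S21)
    cov = [[a - b for a, b in zip(r1, r2)] for r1, r2 in zip(S11, GS21)]
    return mean, cov


mu = [0.0, 1.0]
S = [[1.0, 0.5], [0.5, 1.0]]
print(condition_gaussian(mu, S, 1, [2.0]))     # mean 0.5, variance 0.75
print(reduce_gaussian([[1.0, 1.0]], mu, S))    # Y = X1 + X2: mean 1, variance 3
```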

- An example: a workstation sends out either a signal (1) or no signal (0). Because of noise disturbance, it is found that the signal can be described by a Gaussian random process:

$$X(t) = [x_1(t), x_2(t)]^T$$

where $x_1(t) \sim N(0, 1)$, $x_2(t) \sim N(1, 1)$, and

$$\Sigma_X = \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix}$$

(a covariance matrix is symmetric, so $\Sigma_{21} = \Sigma_{12} = 1$). Note that the components of $X$ are not independent. The conditional probability of $X_1$ given $X_2 = 2$ is Gaussian with:

$$\mu_{X_1 \mid X_2} = 0 + (1)(1)^{-1}(2 - 1) = 1$$

$$\Sigma_{X_1 \mid X_2} = 1 - (1)(1)^{-1}(1) = 0$$

By the way, since the probability distribution does not depend on time, the signal is stationary. Is it also Markov? (Yes)

(2) Another important type of random process is the binary random process (random binary waveform). It is often used in data communication systems.

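- Illustration of a binary random process:

[Figure: a sample binary waveform $X(t)$ switching between $+1$ and $-1$, with pulse duration $T$ and random start delay $D$.]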

- The properties of the binary random process:

- Each pulse has a rectangular shape with a fixed duration $T$ and a random amplitude $\pm 1$.

- Pulse amplitudes are equally likely to be $+1$ or $-1$ (these can be converted to 0 and 1).

- All pulse amplitudes are statistically independent.

- The start time of the pulse sequence is arbitrary and is equally likely to lie anywhere between 0 and $T$.

- The mathematical expression:

$$X(t) = \sum_{k} A_k\, p(t - kT - D)$$

where $p(t)$ is a unit-amplitude pulse of duration $T$, $A_k$ is a binary random variable representing the $k$th pulse, and $D$ is the random start time, uniformly distributed in the interval $[0, T]$.

- An example of a binary random process (the sample waveform in the figure above): $\{\ldots, 1, -1, 1, -1, -1, \ldots\}$

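- The mathematical expectations:

- $E\{X(t)\} = 0$

- $E\{X^2(t)\} = 1$

- The autocorrelation function:

$$R_{XX}(t_1, t_2) = \begin{cases} 1 - \dfrac{|t_1 - t_2|}{T}, & |t_1 - t_2| < T \\ 0, & \text{otherwise} \end{cases}$$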
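The triangular autocorrelation can be checked by Monte Carlo simulation over an ensemble of waveforms. In this sketch each realization draws its own start delay D ~ Uniform[0, T) and draws the independent ±1 amplitudes lazily, only for the pulses actually evaluated. The function names, the choice T = 1, the number of realizations, and the seed are all assumptions made for illustration.

```python
import random


def binary_waveform_sampler(T, rng):
    """One realization of X(t) = sum_k A_k p(t - kT - D): independent amplitudes
    A_k = +/-1 with equal probability and start delay D ~ Uniform[0, T)."""
    D = rng.uniform(0.0, T)
    amplitudes = {}

    def x(t):
        k = int((t - D) // T)          # index of the pulse that covers time t
        if k not in amplitudes:        # draw A_k once, the first time it is needed
            amplitudes[k] = rng.choice((-1, 1))
        return amplitudes[k]

    return x


def estimate_R(t1, t2, T=1.0, n_realizations=50_000, seed=2):
    """Ensemble-average estimate of R_XX(t1, t2) = E{X(t1) X(t2)}."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_realizations):
        x = binary_waveform_sampler(T, rng)
        total += x(t1) * x(t2)
    return total / n_realizations


def r_xx_theory(t1, t2, T=1.0):
    lag = abs(t1 - t2)
    return 1.0 - lag / T if lag < T else 0.0


for t1, t2 in [(0.3, 0.55), (2.0, 2.25), (0.0, 1.5)]:
    print(t1, t2, round(estimate_R(t1, t2), 3), round(r_xx_theory(t1, t2), 3))
```

The pairs (0.3, 0.55) and (2.0, 2.25) have the same lag and should give nearly the same estimate, which anticipates the wide-sense stationarity of this process.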

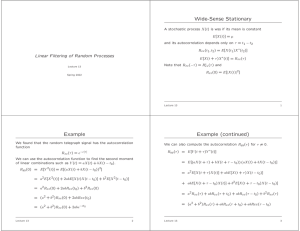

3.5. Stationarity

(1) Stationarity is one of the most important concepts in random signal processing. There are two kinds of stationarity: strict-sense stationarity (SSS) and wide-sense stationarity (WSS).

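(2) Strict-sense stationarity

- Definition: a random process $X(t)$ is SSS if, for all $t_1, t_2, \ldots, t_k, t_1 + \varepsilon, t_2 + \varepsilon, \ldots, t_k + \varepsilon \in \Gamma$ and $k = 1, 2, \ldots$,

$$P[X(t_1) \le x_1, X(t_2) \le x_2, \ldots, X(t_k) \le x_k] = P[X(t_1 + \varepsilon) \le x_1, X(t_2 + \varepsilon) \le x_2, \ldots, X(t_k + \varepsilon) \le x_k]$$

- If the foregoing definition holds for all $k$th-order distribution functions, $k = 1, 2, \ldots, N$, but not necessarily for $k > N$, then the process is said to be $N$th-order stationary.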

As a special case, first-order SSS means:

$$P[X(t) \le x] = P[X(t + \varepsilon) \le x]$$

- That is, the probability distribution is independent of time $t$.

For a first-order SSS random process:

$$E\{X(t)\} = \mu_X \ \text{(a constant)}$$

Note that the converse is not true in general. That is, even if this equation holds, the corresponding random process may not be SSS.

(3) Wide-sense stationarity

- WSS is much more widely used.

- Definition: a random process $X(t)$ is WSS if the following conditions hold:

$$E\{X(t)\} = \mu_X \ \text{(a constant)}$$

$$E\{X^*(t)\,X(t + \tau)\} = R_{XX}(\tau)$$

where $X^*(t)$ is the complex conjugate of $X(t)$.

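Example 1: Is the binary random process WSS? From the results for the binary random process in Section 7.4, we know that:

$$E\{X(t)\} = 0, \qquad R_{XX}(t_1, t_2) = 1 - \frac{|t_1 - t_2|}{T} \quad (|t_1 - t_2| < T;\ 0 \text{ otherwise})$$

Let $t_2 = t_1 + \tau$; we have:

$$R_{XX}(t_1, t_1 + \tau) = 1 - \frac{|t_1 - (t_1 + \tau)|}{T} = 1 - \frac{|\tau|}{T} \quad (|\tau| < T;\ 0 \text{ otherwise})$$

which depends only on $\tau$, not on $t_1$. Therefore, it is WSS.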

Stationarity of multivariate random processes can be defined in a similar manner.

3.6. Autocorrelation and Power Spectrum

(1) Autocorrelation and power spectrum are very important in signal processing. Interestingly, there are different ways to introduce the power spectrum; however, they all lead to the same result.

(2) Autocorrelation function

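- The autocorrelation function of a real-valued WSS random process is defined as

$$R_{XX}(\tau) = E\{X(t)\,X(t + \tau)\}$$

- The basic properties:

- It is related to the energy: $R_{XX}(0) = E\{X^2(t)\}$ = average power (note that $R_{XX}(0) \ge 0$).

- It is an even function of $\tau$: $R_{XX}(\tau) = R_{XX}(-\tau)$.

- It is bounded by $R_{XX}(0)$: $|R_{XX}(\tau)| \le R_{XX}(0)$.

- If $X(t)$ contains a periodic component, then $R_{XX}(\tau)$ will also contain a periodic component.

- If $\lim_{|\tau| \to \infty} R_{XX}(\tau) = C$, then $C = \mu_X^2$.

- If $R_{XX}(T_0) = R_{XX}(0)$ for some $T_0 \ne 0$, then $R_{XX}$ is periodic with period $T_0$.

- If $R_{XX}(0) < \infty$ and $R_{XX}(\tau)$ is continuous at $\tau = 0$, then it is continuous for every $\tau$.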
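Several of these properties can be observed numerically on a random-phase sinusoid X(t) = cos(2πF0 t + Θ) with Θ uniform on [0, 2π), whose autocorrelation is known to be R_XX(τ) = ½cos(2πF0 τ). The process, the value F0 = 2, the reference time t, the sample size, and the seed are hypothetical choices for this sketch; reusing one seed means every τ is evaluated on the same ensemble of realizations.

```python
import math
import random

F0 = 2.0       # hypothetical frequency of the sinusoid
T0 = 1 / F0    # its period


def estimate_R_sinusoid(tau, t=0.37, n=50_000, seed=3):
    """Ensemble estimate of R_XX(tau) = E{X(t) X(t + tau)} for
    X(t) = cos(2*pi*F0*t + Theta), Theta ~ U[0, 2*pi).
    The same seed is reused so that every tau sees the same ensemble."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(n):
        theta = rng.uniform(0.0, 2 * math.pi)
        x_t = math.cos(2 * math.pi * F0 * t + theta)
        x_t_tau = math.cos(2 * math.pi * F0 * (t + tau) + theta)
        acc += x_t * x_t_tau
    return acc / n


R0 = estimate_R_sinusoid(0.0)
print("R(0) ~", round(R0, 3), "(exact 0.5 = average power)")
for tau in (0.1, -0.1, 0.3, T0):
    exact = 0.5 * math.cos(2 * math.pi * F0 * tau)
    print(tau, round(estimate_R_sinusoid(tau), 3), round(exact, 3))
```

The output illustrates evenness (τ = ±0.1), the bound |R_XX(τ)| ≤ R_XX(0), and periodicity: R_XX(T0) equals R_XX(0), so R_XX repeats with period T0.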

(3) Cross-correlation function and its properties

- The cross-correlation function is defined as follows:

$$R_{XY}(\tau) = E\{X(t)\,Y(t + \tau)\}$$

- The cross-correlation function has the following properties:

- $R_{XY}(\tau) = R_{YX}(-\tau)$

- $|R_{XY}(\tau)| \le \sqrt{R_{XX}(0)\,R_{YY}(0)} \le \frac{1}{2}\left[R_{XX}(0) + R_{YY}(0)\right]$

- $R_{XY}(\tau) = 0$ if the processes are orthogonal, and

- $R_{XY}(\tau) = \mu_X \mu_Y$ if the processes are independent.
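The symmetry R_XY(τ) = R_YX(−τ) and the two bounds can be checked on a hypothetical jointly WSS pair sharing one random phase: X(t) = cos(2πF0 t + Θ) and Y(t) = 2 sin(2πF0 t + Θ), for which R_XX(0) = 0.5, R_YY(0) = 2, and R_XY(τ) = sin(2πF0 τ). The pair, F0 = 1, and the helper names are assumptions for this sketch. At τ = 1/4 the geometric-mean bound is reached, while the arithmetic-mean bound is looser.

```python
import math
import random

F0 = 1.0  # hypothetical frequency


def x_member(s, theta):
    """One realization of X(s) = cos(2*pi*F0*s + theta)."""
    return math.cos(2 * math.pi * F0 * s + theta)


def y_member(s, theta):
    """One realization of Y(s) = 2 sin(2*pi*F0*s + theta)."""
    return 2.0 * math.sin(2 * math.pi * F0 * s + theta)


def cross_corr(tau, first, second, t=0.2, n=50_000, seed=4):
    """Ensemble estimate of E{first(t) second(t + tau)} with a shared phase
    Theta ~ U[0, 2*pi). cross_corr(tau, x_member, y_member) estimates
    R_XY(tau); swapping the two functions estimates R_YX(tau)."""
    rng = random.Random(seed)
    acc = 0.0
    for _ in range(n):
        theta = rng.uniform(0.0, 2 * math.pi)
        acc += first(t, theta) * second(t + tau, theta)
    return acc / n


R_xx0, R_yy0 = 0.5, 2.0                  # exact average powers of X and Y
geo_bound = math.sqrt(R_xx0 * R_yy0)     # sqrt(R_XX(0) R_YY(0)) = 1
arith_bound = 0.5 * (R_xx0 + R_yy0)      # (R_XX(0) + R_YY(0)) / 2 = 1.25
print("bounds:", geo_bound, arith_bound)
for tau in (0.1, 0.25, 0.4):
    r_xy = cross_corr(tau, x_member, y_member)
    r_yx = cross_corr(-tau, y_member, x_member)
    print(tau, round(r_xy, 3), round(r_yx, 3))
```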

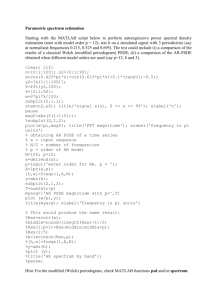

3.7. Power Spectrum

(1) Definition and properties

- Based on the autocorrelation function, the power spectrum (Wiener-Khinchine) can be defined as follows:

$$S_{XX}(f) = \mathcal{F}\{R_{XX}(\tau)\} = \int_{-\infty}^{\infty} R_{XX}(\tau)\exp(-j2\pi f\tau)\,d\tau$$

- Note:

- This definition is applicable to all WSS random processes. What about nonstationary random processes? (It does not make sense to calculate their power spectrum.)

- $S_{XX}(f)$ is called the power spectral density function (psd).

- Given the power spectral density function $S_{XX}(f)$, the autocorrelation function can be obtained:

$$R_{XX}(\tau) = \mathcal{F}^{-1}\{S_{XX}(f)\} = \int_{-\infty}^{\infty} S_{XX}(f)\exp(j2\pi f\tau)\,df$$
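For the binary random process, whose autocorrelation is the triangle 1 − |τ|/T for |τ| < T (0 otherwise), the Fourier integral above has the known closed form S_XX(f) = T sinc²(fT), with sinc(x) = sin(πx)/(πx). The sketch below evaluates the integral numerically with the midpoint rule and compares it with that closed form. The value T = 1, the grid size, and the function names are assumptions; because R_XX is real and even, the complex exponential reduces to a cosine integral over [0, T].

```python
import math

T = 1.0  # pulse duration (hypothetical value)


def R_binary(tau):
    """Triangular autocorrelation of the random binary waveform."""
    return max(0.0, 1.0 - abs(tau) / T)


def psd_numeric(f, n=20_000):
    """S_XX(f) = integral of R_XX(tau) exp(-j 2 pi f tau) d tau, midpoint rule.
    R_XX is real, even and zero for |tau| >= T, so the integral equals
    2 * integral_0^T R_XX(tau) cos(2 pi f tau) d tau."""
    h = T / n
    total = sum(R_binary((i + 0.5) * h) * math.cos(2 * math.pi * f * (i + 0.5) * h)
                for i in range(n))
    return 2.0 * h * total


def psd_closed_form(f):
    """T * sinc^2(f T), with sinc(x) = sin(pi x) / (pi x)."""
    x = math.pi * f * T
    return T if x == 0 else T * (math.sin(x) / x) ** 2


for f in (0.0, 0.25, 0.5, 1.0, 1.5):
    print(f, round(psd_numeric(f), 6), round(psd_closed_form(f), 6))
```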

- The properties of the power spectrum:

- $S_{XX}(f)$ is real and nonnegative.

- The average power in $X(t)$ is given by: $E\{X^2(t)\} = R_{XX}(0) = \int_{-\infty}^{\infty} S_{XX}(f)\,df$

- If $X(t)$ is real, $R_{XX}(\tau)$ will be even. Hence, $S_{XX}(f)$ will be even.

- If $X(t)$ has periodic components, then $S_{XX}(f)$ will have impulses.

- We can define the cross-power spectral density function in a similar manner and find its properties.

(3) Lowpass and bandpass processes

- Definition: if the psd of a random process is zero for $|f| > B$ (the bandwidth of the process), then the process is called a lowpass process with bandwidth $B$. A bandpass process is defined similarly.

[Figure: $S_{XX}(f)$ of a lowpass process, nonzero for $-B \le f \le B$, and of a bandpass process, centered at $f_c$ with its upper edge at $f_c + B/2$.]

The power in a given frequency band, $0 \le f_1 < f_2$, is as follows:

$$P_X[f_1, f_2] = 2\int_{f_1}^{f_2} S_{XX}(f)\,df$$

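- Some random processes may have psd functions with nonzero but insignificant values over a large frequency band; hence, the effective bandwidth $B_{\text{eff}}$ is defined as:

$$B_{\text{eff}} = \frac{1}{2}\,\frac{\int_{-\infty}^{\infty} S_{XX}(f)\,df}{\max_f S_{XX}(f)}$$

This is illustrated as follows:

[Figure: a rectangle of height $\max[S_{XX}(f)]$ and width $2B_{\text{eff}}$ has the same (equal) area as $S_{XX}(f)$.]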
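Both the band-power formula and the effective bandwidth can be worked out for the binary random process, whose psd is T sinc²(fT) and whose total power is R_XX(0) = 1. The sketch assumes T = 1 and uses the midpoint rule; `S_binary` and `band_power` are hypothetical helper names. The factor 2 in `band_power` accounts for the mirror band at negative frequencies. Using ∫S_XX(f)df = R_XX(0) avoids integrating the slowly decaying sinc² tails, and since the maximum of sinc² is at f = 0, B_eff = 1/(2T).

```python
import math

T = 1.0  # pulse duration (hypothetical value)


def S_binary(f):
    """PSD T * sinc^2(f T) of the random binary waveform."""
    x = math.pi * f * T
    return T if x == 0 else T * (math.sin(x) / x) ** 2


def band_power(f1, f2, n=20_000):
    """P_X[f1, f2] = 2 * integral_{f1}^{f2} S_XX(f) df for 0 <= f1 < f2,
    evaluated with the midpoint rule."""
    h = (f2 - f1) / n
    return 2.0 * h * sum(S_binary(f1 + (i + 0.5) * h) for i in range(n))


total_power = 1.0                       # integral of S_XX over all f = R_XX(0)
main_lobe = band_power(0.0, 1.0 / T)    # power inside the first spectral null
print("fraction of power in |f| < 1/T:", round(main_lobe / total_power, 4))

# Effective bandwidth: integral of S_XX / (2 max S_XX); the maximum is S_XX(0) = T.
B_eff = total_power / (2.0 * S_binary(0.0))
print("B_eff =", B_eff)
```

About 90% of the power of this process lies in |f| < 1/T, even though its psd is nonzero at almost every frequency.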

(4) Power spectral density function of random sequences

- We can define the power spectral density function for random sequences by means of summation (instead of integration).
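A minimal sketch of the summation form, assuming the usual definition S_XX(f) = Σ_k R_XX(k) exp(−j2πfk) for |f| < 1/2, truncated here at |k| ≤ K. The autocorrelation R_XX(k) = a^|k| with a = 0.6 is a hypothetical example chosen because its psd has the known closed form (1 − a²)/(1 − 2a cos 2πf + a²), which makes the sum easy to check. The function names are illustrative.

```python
import cmath
import math

A = 0.6  # hypothetical correlation parameter


def R_seq(k):
    """Hypothetical autocorrelation R_XX(k) = A^|k| of a random sequence."""
    return A ** abs(k)


def psd_sequence(R, f, K):
    """S_XX(f) = sum_{k=-K}^{K} R_XX(k) exp(-j 2 pi f k): the summation
    counterpart of the Fourier integral, truncated at |k| <= K.
    R_XX is real and even here, so the result is real."""
    s = sum(R(k) * cmath.exp(-2j * math.pi * f * k) for k in range(-K, K + 1))
    return s.real


def psd_exact(f):
    return (1 - A**2) / (1 - 2 * A * math.cos(2 * math.pi * f) + A**2)


for f in (0.0, 0.1, 0.25, 0.5):
    print(f, round(psd_sequence(R_seq, f, K=60), 6), round(psd_exact(f), 6))
```

The truncation error is tiny because the neglected terms decay like A^|k|; a slowly decaying R_XX(k) would need a larger K.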

(5) Examples.