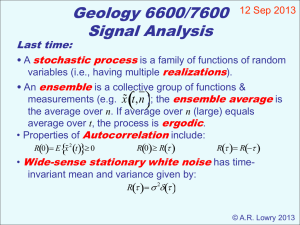

Linear Systems & Random Signals: Stationary Processes & Correlation


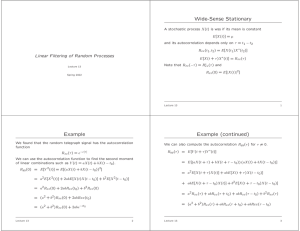

Response of Linear Systems to Random Signals

Stationary and Weakly Stationary Systems

Introduction: One of the most important applications of probability theory is the

analysis of random signals and systems. Here we will introduce the basic concept of

analyzing the response of an LTI system if the input is a random process.

Stationary processes: A process is stationary iff its statistical properties remain

unchanged under any time shift (time-invariant).

The requirement for a process to be stationary is rather stringent, which often renders the study of such processes impractical. To relax the requirement so that the results can be applied to a wider range of physical systems and processes, we define a weaker class called weakly stationary (or second-order stationary) processes.

Weakly stationary processes: A process {X(t), t ≥ 0} is weakly stationary if its first two moments remain unchanged under any time shift (time-invariant) and the covariance between X(t) and X(s) depends only on |t – s|.

Ergodic Process

For an ergodic process, all statistical averages are equal to their time-average

equivalents.
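As a rough numerical illustration of the ergodic property, the sketch below compares ensemble averages (many realizations at one fixed instant) with time averages (one realization over a long record). The random-phase sine wave, the amplitude `A`, the frequency `f0`, and the sample counts are assumptions chosen for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
A, f0 = 2.0, 5.0          # assumed amplitude and frequency (Hz)
dt = 0.001                # assumed sampling interval (s)

# Ensemble averages: many realizations observed at one fixed instant.
theta = rng.uniform(0.0, 2.0 * np.pi, 20000)
ensemble = A * np.sin(2.0 * np.pi * f0 * 1.234 + theta)
ens_mean = ensemble.mean()
ens_msq = (ensemble ** 2).mean()

# Time averages: a single realization over exactly 50 periods.
t = np.arange(10000) * dt
x = A * np.sin(2.0 * np.pi * f0 * t + theta[0])
time_mean = x.mean()
time_msq = (x ** 2).mean()

print(ens_mean, time_mean)   # both should be close to 0
print(ens_msq, time_msq)     # both should be close to A**2 / 2
```

For this assumed process the two kinds of average agree up to sampling error, which is the behavior the definition describes.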

Properties of Random Signals

Introduction: Since random signals are defined in terms of probability, one way to

characterize a random signal is to analyze the long-term observation of the process.

The following analysis assumes the (weakly) stationary/ergodic property.

The four basic properties of random signals:

o Autocorrelation function – A measure of the degree of randomness of a signal.

o Cross-correlation function – A measure of correlation between two signals.

o Autospectral density function – A measure of the frequency distribution of a

signal.

o Cross-spectral density function – A measure of the frequency distribution of

one signal (output) due to another signal (input).

Correlation functions:

o Autocorrelation function: The autocorrelation function $R_{xx}(\tau)$ of a random signal X(t) is a measure of how well the future values of X(t) can be predicted based on past measurements. It contains no phase information of the signal.

$$R_{xx}(\tau) = E[X(t)\,X(t+\tau)] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x_1 x_2\, P(x_1, x_2)\, dx_1\, dx_2$$

where $x_1 = X(t)$, $x_2 = X(t+\tau)$, and $P(x_1, x_2)$ is the joint PDF of $x_1$ and $x_2$.

o Cross-correlation function: The cross-correlation function $R_{xy}(\tau)$ is a measure of how well the future values of one signal can be predicted based on past measurements of another signal.

$$R_{xy}(\tau) = E[X(t)\,Y(t+\tau)]$$

o Properties of correlation functions:

$R_{xx}(-\tau) = R_{xx}(\tau)$ (even function)

$R_{xy}(-\tau) = R_{yx}(\tau)$

$R_{xx}(0) = \sigma_x^2 + \mu_x^2$ = mean square value of x(t)

$R_{xx}(\infty) = \mu_x^2$

The cross-correlation inequality: $|R_{xy}(\tau)|^2 \le R_{xx}(0)\, R_{yy}(0)$

o Example:

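$R_{xx}(\tau)$ of a sine wave process:

$$X(t) = A\sin(2\pi f t + \theta), \quad \text{where } P(\theta) = \begin{cases} \dfrac{1}{2\pi} & 0 \le \theta \le 2\pi \\ 0 & \text{elsewhere} \end{cases}$$

Let $x_1(\theta) = A\sin[2\pi f t + \theta]$ and $x_2(\theta) = A\sin[2\pi f (t+\tau) + \theta]$. Then

$$R_{xx}(\tau) = E[x_1(\theta)\,x_2(\theta)] = \frac{A^2}{2\pi}\int_0^{2\pi} \sin[2\pi f t+\theta]\,\sin[2\pi f(t+\tau)+\theta]\,d\theta = \frac{A^2}{2}\cos(2\pi f\tau)$$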

[Figure: R_xx(t) of the sine wave process plotted against t, for t from 0 to 0.005.]

Since the envelope of the autocorrelation function remains constant as $\tau \to \infty$, we can conclude that any future value of a sine wave process can be accurately predicted based on past measurements.
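The sine wave result can be checked numerically. The sketch below estimates the autocorrelation by averaging over many random phases and compares it with $(A^2/2)\cos(2\pi f\tau)$. The values of `A`, `f`, the observation time `t`, and the ensemble size are assumptions picked only for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
A, f, t = 1.5, 50.0, 0.3                 # assumed amplitude, frequency (Hz), time (s)
tau = np.linspace(0.0, 0.04, 81)         # lags covering two periods

# One uniformly distributed phase per realization (column vector for broadcasting).
theta = rng.uniform(0.0, 2.0 * np.pi, size=(20000, 1))

x1 = A * np.sin(2.0 * np.pi * f * t + theta)             # X(t)
x2 = A * np.sin(2.0 * np.pi * f * (t + tau) + theta)     # X(t + tau)

R_est = (x1 * x2).mean(axis=0)                           # ensemble average
R_theory = A ** 2 / 2.0 * np.cos(2.0 * np.pi * f * tau)  # closed-form result

max_err = np.max(np.abs(R_est - R_theory))
print(max_err)   # small compared with A**2 / 2
```

The estimate does not decay with the lag, which matches the constant envelope noted above.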

A time-delay system:

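[Block diagram: x(t) passes through a delay element (z^-1) and is summed with noise n(t) to produce y(t).]

$$R_{xy}(\tau) = E[x(t)\,y(t+\tau)], \qquad y(t) = x(t - t_0) + n(t)$$

$$R_{xy}(\tau) = E\{x(t)\,[x(t+\tau-t_0) + n(t+\tau)]\} = E[x(t)\,x(t+\tau-t_0)]$$

where the noise term drops out because n(t) has zero mean and is taken to be uncorrelated with x(t). Therefore

$$R_{xy}(\tau) = R_{xx}(\tau - t_0), \qquad R_{yy}(\tau) = E[y(t)\,y(t+\tau)] = R_{xx}(\tau) + R_{nn}(\tau)$$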
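Because the cross-correlation of the time-delay system is the input autocorrelation shifted by the delay, its peak location gives an estimate of the delay. The sketch below is a minimal discrete-time illustration, assuming a white Gaussian input, a circular delay of `delay` samples, and an arbitrary noise level; none of these values come from the notes.

```python
import numpy as np

rng = np.random.default_rng(2)
n_samples, delay = 4096, 25              # assumed record length and delay (samples)

x = rng.standard_normal(n_samples)       # zero-mean input
noise = 0.5 * rng.standard_normal(n_samples)
y = np.roll(x, delay) + noise            # y[n] = x[n - delay] + n[n] (circular shift)

# Circular cross-correlation R_xy[k] = mean over n of x[n] * y[n + k], via the FFT.
R_xy = np.fft.ifft(np.conj(np.fft.fft(x)) * np.fft.fft(y)).real / n_samples

est_delay = int(np.argmax(R_xy))         # lag of the cross-correlation peak
R_yy0 = np.mean(y ** 2)                  # compare with R_xx(0) + R_nn(0)

print(est_delay)
print(R_yy0, np.mean(x ** 2) + np.mean(noise ** 2))
```

The peak sits at the delay because the added noise is uncorrelated with the input and so contributes only small fluctuations to the cross-correlation.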

Spectral density functions:

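o Autospectral density function (autospectrum): The autospectral density function $S_{xx}(f)$ of a random signal X(t) is a measure of its frequency distribution. It is the Fourier transform of the autocorrelation function.

$$S_{xx}(f) = \int_{-\infty}^{\infty} R_{xx}(\tau)\, e^{-j 2\pi f \tau}\, d\tau = 2\int_{0}^{\infty} R_{xx}(\tau) \cos(2\pi f \tau)\, d\tau$$

$$R_{xx}(\tau) = \int_{-\infty}^{\infty} S_{xx}(f)\, e^{j 2\pi f \tau}\, df = 2\int_{0}^{\infty} S_{xx}(f) \cos(2\pi f \tau)\, df$$

The cosine forms follow because both functions are real and even.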

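o Cross-spectral density function (cross-spectrum): The cross-spectral density function $S_{xy}(f)$ is a measure of the portion of the frequency distribution of one signal that is a result of another signal. It is the Fourier transform of the cross-correlation function.

$$S_{xy}(f) = \int_{-\infty}^{\infty} R_{xy}(\tau)\, e^{-j 2\pi f \tau}\, d\tau$$

$$R_{xy}(\tau) = \int_{-\infty}^{\infty} S_{xy}(f)\, e^{j 2\pi f \tau}\, df = 2\int_{0}^{\infty} \mathrm{Re}\!\left[S_{xy}(f)\, e^{j 2\pi f \tau}\right] df$$

Because $S_{xy}(f)$ is complex in general, the one-sided form reduces to a pure cosine integral only when $R_{xy}(\tau)$ is even.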

o Symmetry: Sxx(-f) = Sxx(f) (even function); Sxy(-f) = Sxy*(f) = Syx(f)

o Example:

White noise: The autocorrelation function of a white noise process is an impulse function. The implication is that white noise is totally unpredictable, since its autocorrelation function is zero at every lag other than zero. Of course, this is what we expect to see in a white noise process, a random process with infinite energy.

Low-pass white noise: Assume the low-pass white noise has a magnitude of 1 and a variable bandwidth. The autocorrelation function is computed using the IFFT.

[Figure: three panel pairs, each showing a low-pass white-noise spectrum S_nn(f) = 1 if f ≤ cutoff, 0 otherwise (f from 0 to 400) and its autocorrelation R_nn = ifft(S_nn) (t from 0 to 100), for cutoffs of 200, 100, and 50.]

The autocorrelation function of a low-pass white noise process is a

sinc [sin(x)/x] function. The implication here is that the predictability

of a low-pass white noise process is inversely proportional to the

bandwidth of the noise. Since the noise energy is proportional to the

bandwidth, this conclusion is consistent with common sense.
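The bandwidth dependence can be reproduced with a few lines of NumPy. The sketch below builds a two-sided ideal low-pass spectrum on an FFT grid, takes the inverse FFT, and reports the lag of the first zero crossing as a crude measure of how long the process stays predictable. The grid size `n`, the sampling rate `fs`, and the helper names are assumptions for illustration; the cutoffs 200, 100, and 50 follow the figure.

```python
import numpy as np

def lowpass_autocorr(fc, n=1024, fs=1024.0):
    """Autocorrelation of ideal low-pass noise with unit magnitude up to fc."""
    freqs = np.fft.fftfreq(n, d=1.0 / fs)            # two-sided frequency grid
    S_nn = (np.abs(freqs) <= fc).astype(float)       # 1 inside the band, 0 outside
    # fs * ifft approximates the integral of S_nn(f) * exp(j 2 pi f tau) df.
    R_nn = np.fft.ifft(S_nn).real * fs
    tau = np.arange(n) / fs
    return tau, R_nn

def first_zero_lag(tau, R):
    """Lag of the first sample where the autocorrelation is no longer positive."""
    return tau[np.argmax(R <= 0)]

widths = {fc: first_zero_lag(*lowpass_autocorr(fc)) for fc in (200, 100, 50)}
for fc, width in widths.items():
    print(fc, width)   # the width grows as the cutoff shrinks, roughly as 1 / (2 fc)
```

The first zero of the sinc-shaped result moves outward as the bandwidth is reduced, and the zero-lag value (the total noise energy) shrinks in proportion to the bandwidth.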

Band-pass white noise: Assume the band-pass white noise has a magnitude of 1 and a variable bandwidth. The autocorrelation function is computed using the IFFT.

[Figure: three panel pairs, each showing a band-pass white-noise spectrum S_nn(f) = 1 if (f ≥ 100) and (f ≤ upper limit), 0 otherwise (f from 0 to 400) and its autocorrelation R_nn = ifft(S_nn) (t from 0 to 100), for upper limits of 200, 110, and 101.]

The autocorrelation function of a band-pass white noise process has a similar property: the predictability is inversely proportional to the bandwidth of the noise. In addition, the autocorrelation function approaches that of the sine wave process as the bandwidth decreases, a conclusion that can easily be drawn using LTI system theory for deterministic systems.
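The narrowing-band behavior can be sketched in the same way. The code below computes the normalized autocorrelation for the three bands shown in the figure and measures how much of the oscillation survives in a window of lags away from zero. The grid size, sampling rate, lag window, and function names are assumptions chosen for illustration.

```python
import numpy as np

def bandpass_autocorr(f_lo, f_hi, n=8192, fs=1024.0):
    """Normalized autocorrelation of ideal band-pass noise between f_lo and f_hi."""
    freqs = np.fft.fftfreq(n, d=1.0 / fs)
    in_band = (np.abs(freqs) >= f_lo) & (np.abs(freqs) <= f_hi)
    R_nn = np.fft.ifft(in_band.astype(float)).real
    tau = np.arange(n) / fs
    return tau, R_nn / R_nn[0]           # scale so that the zero-lag value is 1

def peak_in_window(tau, rho, t_min=0.05, t_max=0.10):
    """Largest |rho| inside a lag window away from zero (assumed window)."""
    mask = (tau >= t_min) & (tau <= t_max)
    return np.max(np.abs(rho[mask]))

bands = [(100, 200), (100, 110), (100, 101)]
peaks = {band: peak_in_window(*bandpass_autocorr(*band)) for band in bands}
for band, peak in peaks.items():
    print(band, peak)   # approaches 1 as the band narrows
```

For the narrowest band the normalized autocorrelation is nearly an undamped cosine over this window, which is the sine wave limit described above.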

LTI Systems

Introduction: LTI systems are analyzed using the correlation/spectral technique. The inputs are assumed to be stationary/ergodic random signals with zero mean.

Ideal system:

[Block diagram: input x(t) → system H(f), h(t) → output y(t)]

$$y(t) = \int_{0}^{t} x(\alpha)\, h(t-\alpha)\, d\alpha$$

$$Y(f) = X(f)\, H(f)$$

Ideal model:

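o Correlation and spectral relationships:

$$R_{yy}(\tau) = \int_{0}^{\infty}\!\!\int_{0}^{\infty} h(\alpha)\, h(\beta)\, R_{xx}(\tau + \alpha - \beta)\, d\alpha\, d\beta$$

$$R_{xy}(\tau) = \int_{0}^{\infty} h(\alpha)\, R_{xx}(\tau - \alpha)\, d\alpha$$

$$S_{yy}(f) = |H(f)|^{2}\, S_{xx}(f)$$

$$S_{xy}(f) = H(f)\, S_{xx}(f)$$

Total output noise energy: $\sigma_y^{2} = \int_{-\infty}^{\infty} S_{yy}(f)\, df$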

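o Example: LPF to white noise

$$H(f) = \frac{1}{1 + j 2\pi f K} = |H(f)|\, e^{-j\phi(f)}, \qquad K = RC \quad \text{(LPF)}$$

$$h(t) = \frac{1}{K}\, e^{-t/K}\, u(t), \qquad |H(f)| = \frac{1}{\sqrt{1 + (2\pi f K)^{2}}}, \qquad \phi(f) = \tan^{-1}(2\pi f K)$$

For white noise, $S_{xx}(f) = A$, so

$$S_{yy}(f) = |H(f)|^{2}\, S_{xx}(f) = \frac{A}{1 + (2\pi f K)^{2}}$$

and

$$R_{yy}(\tau) = \int_{-\infty}^{\infty} S_{yy}(f)\, e^{j 2\pi f \tau}\, df = \frac{A}{2K}\, e^{-|\tau|/K}$$

$$\sigma_y^{2} = \int_{-\infty}^{\infty} S_{yy}(f)\, df = 2A\int_{0}^{\infty} \frac{1}{1 + (2\pi f K)^{2}}\, df = \frac{A}{2K}, \quad \text{or}$$

$$\sigma_y^{2} = A\int_{0}^{\infty} h^{2}(t)\, dt = A\int_{0}^{\infty} \frac{1}{K^{2}}\, e^{-2t/K}\, dt = \frac{A}{2K}$$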
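The two routes to the output variance of the low-pass filter driven by white noise can be compared numerically. The sketch below integrates the output spectrum over frequency and the squared impulse response over time, and checks both against A/(2K). The values of `A` and `K`, the integration ranges, and the step sizes are assumptions for illustration.

```python
import numpy as np

A, K = 2.0, 0.01                         # assumed noise level and time constant RC (s)

# Frequency-domain route: integrate S_yy(f) = A / (1 + (2 pi f K)^2) over all f.
df = 1.0
f = np.arange(-200000, 200001) * df      # wide band so the truncated tails are small
S_yy = A / (1.0 + (2.0 * np.pi * f * K) ** 2)
var_freq = np.sum(S_yy) * df

# Time-domain route: A times the integral of h(t)^2 with h(t) = exp(-t/K) / K.
dt = 1.0e-5
t = (np.arange(20000) + 0.5) * dt        # midpoint rule on 0 .. 0.2 s
h = np.exp(-t / K) / K
var_time = A * np.sum(h ** 2) * dt

var_theory = A / (2.0 * K)
print(var_freq, var_time, var_theory)    # all three should nearly agree
```

The small shortfall in the frequency-domain value comes from truncating the integration range, not from the formula.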

One-sided spectrum: G(f) = 2 S(f) for f ≥ 0

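o Example: LPF to a sine process

$$H(f) = \frac{1}{1 + j 2\pi f K} = |H(f)|\, e^{-j\phi(f)}, \qquad K = RC \quad \text{(LPF)}$$

$$h(t) = \begin{cases} \dfrac{1}{K}\, e^{-t/K} & t \ge 0 \\ 0 & t < 0 \end{cases}, \qquad |H(f)| = \frac{1}{\sqrt{1 + (2\pi f K)^{2}}}, \qquad \phi(f) = \tan^{-1}(2\pi f K)$$

For a sine wave, $G_{xx}(f) = \dfrac{A^{2}}{2}\, \delta(f - f_0)$, so

$$G_{yy}(f) = |H(f)|^{2}\, G_{xx}(f) = \frac{A^{2}\, \delta(f - f_0)}{2\,[1 + (2\pi f K)^{2}]}$$

and

$$R_{yy}(\tau) = \int_{0}^{\infty} G_{yy}(f) \cos(2\pi f \tau)\, df = \frac{A^{2}}{2\,[1 + (2\pi f_0 K)^{2}]} \cos(2\pi f_0 \tau)$$

$$\sigma_y^{2} = \int_{0}^{\infty} G_{yy}(f)\, df = \int_{0}^{\infty} \frac{A^{2}\, \delta(f - f_0)}{2\,[1 + (2\pi f K)^{2}]}\, df = \frac{A^{2}}{2\,[1 + (2\pi f_0 K)^{2}]}$$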
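The sine-input result can be checked with a short time-domain simulation. The sketch below convolves one realization of the sine process with a sampled version of h(t) and compares the steady-state mean-square output with $A^2 / (2[1 + (2\pi f_0 K)^2])$. The values of `A`, `f0`, `K`, the phase, and the step size are assumptions, and the rectangular-rule convolution is only an approximation of the continuous filter.

```python
import numpy as np

A, f0, K = 1.0, 10.0, 0.02               # assumed amplitude, frequency (Hz), RC (s)
dt = 1.0e-4                              # assumed simulation step (s)

t = np.arange(20000) * dt                # 2 s record
x = A * np.sin(2.0 * np.pi * f0 * t + 0.7)    # one realization, arbitrary phase

# Sampled impulse response h(t) = exp(-t/K) / K, truncated after 10 time constants.
h = np.exp(-np.arange(2000) * dt / K) / K
y = np.convolve(x, h)[: t.size] * dt     # rectangular-rule approximation of x * h

steady = y[10000:]                       # last 1 s: exactly 10 periods, transient gone
var_sim = np.mean(steady ** 2)
var_theory = A ** 2 / (2.0 * (1.0 + (2.0 * np.pi * f0 * K) ** 2))

print(var_sim, var_theory)               # should agree to within about 1 percent
```

A smaller `dt` tightens the agreement, since the residual difference comes from discretizing the convolution integral.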

Model with uncorrelated input and output noise: Gmn(f) = Gum(f) = Gvn(f) = 0

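[Block diagram: u(t) passes through H(f), h(t) to give v(t); noise m(t) is added to u(t) to form the measured input x(t), and noise n(t) is added to v(t) to form the measured output y(t).]

$$x(t) = u(t) + m(t), \qquad y(t) = v(t) + n(t)$$

$$G_{xx}(f) = G_{uu}(f) + G_{mm}(f), \qquad G_{yy}(f) = G_{vv}(f) + G_{nn}(f)$$

$$G_{xy}(f) = G_{uv}(f) = H(f)\, G_{uu}(f), \qquad G_{vv}(f) = |H(f)|^{2}\, G_{uu}(f)$$

$$H(f) = \frac{G_{xy}(f)}{G_{uu}(f)} = \frac{G_{xy}(f)}{G_{xx}(f) - G_{mm}(f)}$$

$$|H(f)|^{2} = \frac{G_{vv}(f)}{G_{uu}(f)} = \frac{G_{yy}(f) - G_{nn}(f)}{G_{xx}(f) - G_{mm}(f)}$$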
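The effect of input noise on the frequency response estimate can be illustrated with a small simulation. The sketch below averages spectra over many independent segments and compares the uncorrected ratio G_xy/G_xx with the corrected ratio G_xy/(G_xx − G_mm). The segment counts, noise levels, the RC filter used as H(f), the circular (FFT-based) filtering, and the assumption that G_mm is known exactly are all illustrative choices, not part of the notes.

```python
import numpy as np

rng = np.random.default_rng(3)
n_seg, seg_len, fs, K = 2000, 256, 1000.0, 0.001   # assumed simulation settings
sigma_m, sigma_n = 0.5, 0.2                        # assumed input/output noise levels

f = np.fft.rfftfreq(seg_len, d=1.0 / fs)
H = 1.0 / (1.0 + 1j * 2.0 * np.pi * f * K)         # true response (RC low-pass)

u = rng.standard_normal((n_seg, seg_len))          # true input, unit variance
v = np.fft.irfft(H * np.fft.rfft(u, axis=1), n=seg_len, axis=1)  # true output
x = u + sigma_m * rng.standard_normal(u.shape)     # measured input  x = u + m
y = v + sigma_n * rng.standard_normal(u.shape)     # measured output y = v + n

X = np.fft.rfft(x, axis=1)
Y = np.fft.rfft(y, axis=1)
G_xx = np.mean(np.abs(X) ** 2, axis=0) / seg_len   # averaged autospectrum
G_xy = np.mean(np.conj(X) * Y, axis=0) / seg_len   # averaged cross-spectrum
G_mm = sigma_m ** 2                                # input-noise spectrum, assumed known

H_naive = G_xy / G_xx                              # biased low by G_uu / (G_uu + G_mm)
H_corrected = G_xy / (G_xx - G_mm)                 # uses G_uu = G_xx - G_mm

band = slice(1, -1)                                # skip the DC and Nyquist bins
naive_ratio = np.mean(np.abs(H_naive[band]) / np.abs(H[band]))
corrected_err = np.mean(np.abs(H_corrected[band] - H[band]) / np.abs(H[band]))
print(naive_ratio, corrected_err)
```

With these settings the uncorrected estimate is scaled by roughly G_uu/(G_uu + G_mm) = 0.8, while the corrected estimate tracks H(f) up to statistical scatter. The output noise n(t) does not bias either estimate because it is uncorrelated with the input.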