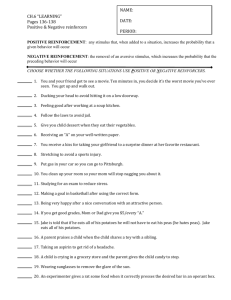

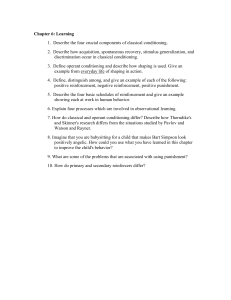

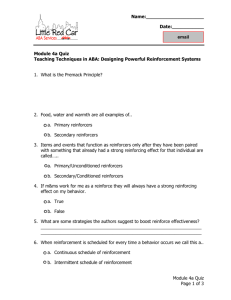

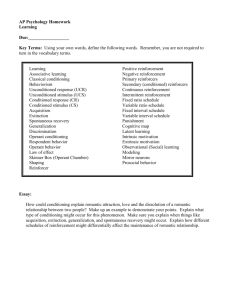

4. Chapter 4
