A New Discounting Model of Reinforcement

advertisement

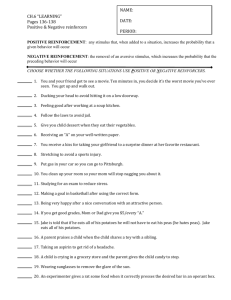

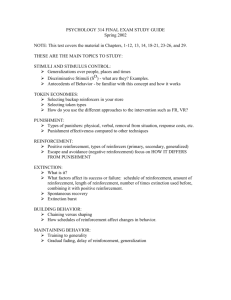

1 A New Discounting Model of Reinforcement Michael Lamport Commons and Alexander Pekker Harvard Medical School and University of Texas This paper presents a new model of additive discounting of reinforcement that follow Fantino’s (Squires & Fantino, 1971), Commons, Woodford and Ducheny’s (1982) Linear Noise Model and Mazur’s (1984; 2001) Hyperbolic Value Addition Model. Results indicate that present models do not account for situations when individual probe trials are included with lower rates of reinforcement. The new model accounts for such situations, fits existing data, and fits those data. The present model accounts for the contribution to value of three variables: the number of reinforcers, the relative rate of occurrence of those reinforcers that is often expressed as delay of reinforcement, and a new variable: the change in the relative delay of reinforcement. Without this new variable, there is no way to know the change in response rate if there is a change in value. The present model may enable the connection between micro and molar processes to be made. Introduction A fundamental question has been how is the value of reinforcers discounted and aggregated? This is important in both free operant situations and in discrete choice ones. The effectiveness of a reinforcement contingency in controlling behavior depends to a certain extent on how long the reinforcing events are ‘remembered’ by an organism and what conditions within situations affect memory for reinforcers. The long term goal has been to provide a mechanism to account for some portion of how animals make choices among, for example, various schedules of reinforcement in the steady state. To understand such adaptive organisms, the dynamic situations need to be understood, in addition to steady states: the sensitivity to change and reinforcement. This involves melioration. Although there is much work on static schedules of reinforcement, there is less on melioration and other forms of sensitivity to local reinforcement. This new model supplies the previously missing calculation in local reinforcement equations. Locality is well established in Vaughn (1981). Here we add an additional goal and a new model to satisfy it: that of understanding what mechanism underlies the shift in value given to reinforcement schedules when their density of reinforcement is altered. This step is essential to connect two processes that the quantitative analysis of behavior has treated only separately: the micro and the molar. The new model presented here will make possible that connection in future research. First the reasons based on previous data for the new model will be presented. This is followed by presenting the arguments showing previous static models failed to account for some of the data models and why. Then the model itself will be presented and how it fits the rest of the data. Finally, we discuss implications and directions for further research. Motivation for Developing the New Model We found that the static models such as those proposed by Commons (Commons, Woodford & Ducheny, 1982) and Mazur (1987) account for static data rather well. In static situations, samples from schedule are presented over long periods of time, in a preference situation; samples from a schedule are presented as consequences. Also Commons, Woodford and Ducheny showed that sample value were equivalent in preference and discrimination situations. In discrimination situations, the samples are presented as stimuli to be discriminated. This paper presents evidence from discrimination studies that show that when individual probe trials are included that contain lower rates of reinforcers, none of the current static accounts hold. This finding raises questions as to whether the previous models, which have become well-accepted, can in fact fully account for what is happening in this situation. The inclusion of relative delay enables a new dynamic model that fits existing data and accounts for all of the data, which existing models fail to do. Previous theories tried to account for reinforcer delay and resulting value decrementation. Mazur’s (1987) model is described by the equation [1] above. Vaughan (1976) suggested a dynamic a mechanism of melioration to account for choice on concurrent schedule. The insufficiency of the models is their confinement to static situations and inability to account for the actual dynamics raised by Vaughan. 2 Commons’ Discrimination procedure Commons (1973, 1979, 1981) ran four White Carneaux pigeons in one 256-trial session per day. Trials consisted of a stimulus period followed by a choice period. The procedure is diagrammed in Figure 2. The stimulus period: During the stimulus period, a sample consisting of four cycles was presented, as shown to the left of Figure 2. In this study, three different standard cycle lengths were used: 2 seconds, 3 seconds and 4 seconds, so that the entire stimulus period might last for 8 seconds, 12 seconds or 16 seconds. The sample could have come from a rich schedule with a 0.75 probability of a lighted center-key peck being reinforced at the end of a cycle, or from a lean schedule with a 0.25 probability of the lighted center-key peck being reinforced at the end of a cycle. The probability distributions for the two schedules are shown in are shown in Figure 3. The samples resembled a T schedule (Schoenfeld & Cole, 1972), but the reinforcers were delivered only at the end of the cycle. A total of 16 such samples, called substimuli, were generated. The cycles, ci were numbered so that c4, occurred at the beginning of the stimulus period and was the furthest from choice, and c1 occurred at the end of the stimulus period, just before the choice period. At the beginning of each cycle, the center key was illuminated. The first center-key peck in each of the four cycles darkened the center key and was reinforced probabilistically as describe above. The value of reinforcement on a cycle was represented by vi. Although the reinforcement probability was either .25 or .75 across all the cycles within a trial, the reinforcement probability was either 0 or 1 on a particular cycle i. That is, a center-key peck had a reinforcement value of (vi = 1) or (vi = 0) on each of the cycles. Only the first center-key peck in a cycle was reinforced at the end of the cycle. With the binary-numeral notation for substimulus, 0111 means v4 = 0, v3 = 1, v2 = 1, v1 = 1. In addition to the standard cycle lengths, and after the birds had stabilized, the standard cycle length would remain at the standard length for 224 trials, would be doubled on 16 probe trials, or would be tripled on another 16 probe trials. The position of the probe trials within a session was randomly distributed. The choice period: As shown on the right side of Figure 2, At the onset of the choice period the left red and right green side keys were illuminated; the center key stayed dark or was darkened if no key peck occurred in the last cycle of the stimulus period. Duration of the choice period was always twice the standard or base length cycle. The first side-key peck, whether correct or not, darkened both keys, and no further pecks were counted. If a substimulus sample from the rich schedule had been presented on the center key, the first left-key peck was reinforced; a right was not reinforced. If a sample from the lean schedule had been presented on the center key, the first right-key peck was reinforced; a left was not reinforced (Commons, 1983). Results Using the Discrimination Procedure In Figure 5, the decision rule is represented by the psychophysical relation between the perceived reinforcement density, zp(L), (value = v) and the actual reinforcement density. The decision rules for each bird are graphed with respect to cycle length, which was manipulated in two different ways. In one manipulation, the base or standard cycle length was changed and then maintained for a number sessions. In the second manipulation, the cycle length was changed by doubling or tripling it on probe trials. In column 1, the psychophysical relation is shown for all trials together. As can be seen, in z form, the mean perceived density was 0 for the mean actual density of 2 (density 2 substimuli) as it should have been: This means that these substimuli were seen as coming equally from either distribution. The perceived values were symmetrically distributed about 0 with perceived density ranging from a value of -2.2 for density 0 substimuli to +2.2 for density 4 substimuli. The points in Column 1 and in Column 2 were well described by the regression lines fit by the median method (Mosteller & Tukey, 1977) with r2 values ranging from .98 to .99. The rest of points also fall on the lines but regression coefficients of flat lines are close to 0. In column 2, the psychophysical relation is shown for the standard 3-second cycle length. When only these “standard” cycle lengths are examined, functions relating perceived density to number of reinforcers were steeper than those for the combined trials seen in Column 1. This suggests that the aggregated graph does not accurately represent the decision rules that pigeons may have used in each of the individual situations. The difference between Column 1 and 2 may occur because the aggregated data shown in Column 1 includes probe trial data. The Affects of Lengthening Delay on Probes Trials 3 Columns 3 and 4 show the effects of doubling and tripling the cycle length, respectively, in probe trials. As can be seen, probe trials flattened the slope of the overall data slightly, more so for some birds than others. Finally, Column 5 shows the effect of increasing the number of cycles to 6. Whereas these slopes are a little flatter than the standard 4 cycle situation, they nevertheless are quite similar to the overall situation graphed in Column 1. The relations shown within the figure rejected a number of ways that pigeons might scale reinforcement density in samples, while supporting others. One way to see how density was scaled is by examining the role played by two ways of lengthening cycles: a) changing the standard cycle length over an extended period of time, and b) changing cycle length on selected trials by doubling or tripling base cycle length. Static Models fail to account for all the discrimination data The Commons, Woodford and Ducheny (1982) and the Mazur model (1984; 1987) seemed to solve a number of problems with earlier models, and do account for the steady state discrimination situation. But they do not work in the discrimination situation when the times between reinforcers are changed even though the number of reinforcers remains constant. In probe trials, the time between possible reinforcers changed from 3 to 4 or 5 seconds. Consider a number of possible variables that could control value but do not at least singly: 1) In the static or dynamic situation, if absolute numbers of reinforcers were the only underlying variable to which the pigeons were sensitive, changing the timing would not make a difference. Any model that suggests that the birds responded simply on the basis of number of reinforcers, independently of context or time, must predict that the momentary changes in cycle length should have no effect on perceived value. Any model that depends on the number of reinforcers alone is rejected by the fact that there were changes in slope with increased cycle lengths, indicating that time was indeed important. The standard cycle slopes were 1.0, 1.2, 1.2 at 2, 3, and 4 seconds respectively; doubling slopes were .49, .42 and .41 (clearly lower); and the tripling slopes were lower still, at .24, .21 and .15. 2) In the static situation, another possibility is that longer probe cycles result in memory of a large number of nonreinforcements (without truncation of time period over which memory occurs). But when the number of cycles was changed from 4 to 6, the slopes only minimally change. This shows that absolute time was not the only controlling variable. 3) A model based on simple rate of reinforcement would predict that there should be more decrementation of the value of reinforcers that occurred further away from choice than the ones that were more immediate. This loss is value should even be greater when the base cycle times are greater. As Figure 5 shows, only one pigeon behaved in a way that would be predicted by the Commons-Woodford/Mazur models without a change in parameters. Any model that proposes that the birds responded on the basis of the relative time between reinforcers or rate of reinforcement alone must predict that perceived value should be inversely proportional to momentary cycle length. If the standard cycle is doubled on a series of trials, the perceived value should be halved. Likewise, the ratio of the slopes of functions relating perceived density to actual density should be halved. Doubling and tripling standard cycle lengths decreased the perceived density more than predicted by time- or simple relative rate of reinforcementaveraging models. The ratio of the slopes, double to standard and trip to standard would be 2:1 and 3:1, respectively, if the weighted average rate or weighted average time model were true in its simplest form. Instead, the ratios of the slopes for the average of the four birds are 2.0, 2.9, and 2.9 for 2-, 3- and 4-second cycle lengths for doubling and 4.2, 5.7, and 8.0 for tripling. Whereas these slope changes are in the right direction, they clearly deviate from ratios predicted by time or rate averaging, especially as standard cycle length increases. There may be an interaction between standard cycle length and probe value: At least for Birds 84 and 995, tripling the 4-second standard had a larger decremental effect on the slope than tripling the 2-second standard. These findings are not surprising. The birds do not compensate for the fact that the probe substimuli start much earlier than the standard. The decrement in perceived density is greater than would be the case if those earlier events in the substimuli were not there. Slow change eliminates the relative time variable, leaves only the delay itself and number of reinforcers. The higher the rate of change in delay, the more severe the effect. 4 4) In the dynamic situation, the other three pigeons do not fit the Commons/Mazur model. When probe trials were inserted, as shown in Figure 4 column panels 3 and 4, the models do not really provide a reasonable account. As suggested above, a model that includes a notion of weighted relative rate or weighted relative time would work better at accounting for both the static situation and the situation with probes. Hence, a model is needed that is not static because is includes just a fixed delay but also is dynamic because it includes changes in delay. It was also important to make these relative to the static delay. Hence the new model is a relativistic dynamic model whereas the former models were static and not relativistic. What the New Model Has To Do Another alternative that addresses the dynamic situation is that the birds perceived something like a weighted average rate or weighted average time between reinforcers. Such a model should include a term for the interaction between standard cycle length and ratio of probe length to standard. We suggest the term should be change in delay (cycle length) divided by delay (the base cycle length). Such a model could help identify how organisms actually make decisions as to how much reinforcement they are receiving. If simply average reinforcement rate determined perceived density, reinforcers occurring more cycles away from choice would not be weighted less. The proposed new model introduced below make it possible to see how perceived value of individual reinforcers decrease farther from choice. It allow for three versions of weighting have to be considered (Commons, Woodford & Trudeau, 1991). One would hypothesize a negative power function decay alone is important. A second would hypothesize that changes in relative time (the inverse of relative rate) must also be considered. Third, a model that combines the first two should be considered. Proposed New Model For presentation purposes, we omit the history of models’ development and our calculations that showed their algebraic near-equivalence; both history and that near-equivalence enabled the present model. The approach we take here simply builds upon Mazur’s (1987) equation which is equivalent to Commons et al (1982). [1] n Ai v pi i 1 1 kdi where pi is the probability of a reinforcer being delivered, di is the delay, Ai is the value of the reinforcer if delivered immediately, k is sensitivity to delay, and i is the instance. We omit the exponent that represents a generalization of the hyperbolic form to a power function because it did not improve the fits here. The equation we propose is as follows: [2] f (d i d i , i ) Ai 1 k1 d i k 2 d i di where Ai is the sensitivity to reinforcement (the value of the reinforcer if delivered immediately), k1 is sensitivity to delay, and i is the instance or cycle, di is the delay (i.e., cycle length), di is the change in delay relative to the base di, k2 is the sensitivity to changes in delay. Note that when there is no change in the delay, k2Δdi = 0 this new equation just becomes just equation [1]. The new term k2Δdi is in the denominator. With respect to Figure 4, especially columns 3 and 4, when Δdi goes to infinity, the value of p(L) goes to 0. Likewise, as di becomes very large, p(L) also goes to 0. Explanation of the New Model’s Fit to the Data 5 Equation [2] describes the Columns 3 and 4 of Figure 4 accounting for the flattening of the slopes. Note that the lines though the points fit relatively well. The significance in the increase in delay, di, in the probe trials is very much relative to the delay in the trials without probes, di itself. This is relative delay, d i . di Essentially, this says that the significance of the change in delay, di is proportional to the original delay di, which seems fairly logical. If the delay is already very long (i.e., di is large) and we increase it by a little amount d, the d i is small and increases the denominator little) compared to the case if the di d i original delay di is small or even comparable to di (i.e., is large and increases the denominator a lot). di Note that equation [2] ensures that f d i d i , i f d i , i because extra delay results in less valuing and effect should be relatively small (i.e., therefore lower zp(L), as more or less seen in figure 4. This is what the previous models could not do. The change of d from 3 to 4 or 5 seconds is large compared to the base d of 3 seconds. Hence zp(L) is much smaller or even 0 as is seen for pigeons 30 and 102 on panel 4. The parameter k2 is the birds’ sensitivity to changes in relative delay. When k2 is small, they are insensitive to changes in delay as was the case with pigeon 995 and to some extent, pigeon 84. When k2 is large as was the case with pigeons 30 and 102, changes in delay affect their performance dramatically. With the inclusion of the term d i , the dynamics of inserting probes that changed delay di momentarily were well accounted for by equation 2, the new model. Conclusions Models of choice need to take into account not only the static decrementation of value caused by delay but also the dynamic way as well. The static way means in preference situations, as reinforcers that come further after choice, they have less effect. The dynamic way addresses the situation when the rate of reinforcement changes. The new model accounts for the change in the relative delay between reinforcements. When there is a shift to greater delays on probe trials, the samples are less valued when posed with such choice. It was also found that how organisms discriminate sample value, reflects their actual experience in near the past as well as how much time has past. Our new model suggests that pigeons use changes in relative time between reinforcers as well as absolute time to determine value in the first place. Changes in relative time is consistent with the fundamental assumptions of Herrnstein and Vaughan (1980; Herrnstein, 1982) theory of melioration. Vaughan (1981) suggests that reinforcer value is always found in context of some background reinforcement rate. It is the change in local rate of reinforcement to which the organisms are sensitive. But there never was an explicit equation show how to determine what was local, something the new model does. Because of melioration, there is always a comparison of IRTs or (inter-reinforcement times) or local rate of reinforcement to this background rate of reinforcement. The second is consistent with “forgetting” models of Commons, Woodford and Ducheny (1982) and Mazur (1987). This suggests that absolute time plays a role in discounting or the decrementation of value. One might speculate then that there are three mechanisms surrounding reinforcement: 1) the static elicitations as in behavioral momentum (Nevin, 1992) and immediate value of a reinforcer which is a respondent process; 2) the absolute time to an instance of reinforcement or absolute rate of reinforcement which is a memorial function; and 3) the change in relative rate or relative time of reinforcement, which suggest there are mechanisms to keep track of the acceleration or deceleration of reinforcement rate. Unanswered Questions Would the pigeons move from using relative time between reinforcers to estimating the number of reinforcers in a sample if they were run to steady state on variation of cycle lengths occurred during each session with about equal lengths used. Other questions might also be answered with some variations in the procedure. 6 1. All the reinforcers that would normally be delivered were non-contingently delivered. That would answer the question as to what extent do responses contribute to the remembering of reinforcers. 2. If all the reinforcers that would normally be delivered over 4 cycles were delivered in just 1 cycle. This would test if number could be trained as a variable at all or whether there the effective stimulus characteristics really have to do with rate at which the reinforcers come. 3. If the range of times for a cycle were varied from single, to 1.5, double, 2.5 to triple and quad tripled. This would measure to what degree time alone, or rate where controlling. 4. The number of cycle would be varied, 1, 2, 3 4, 5, 6, 7, 8 or more cycles. This is another variable. It says that number of possible reinforcement positions is also controlling. One would use even more cycles with humans. One might set a constant highest possible d’. 5. One might also look at .1 versus .6, an equal difference in probability as .25 and .75, as well as .4 and .9. This would allow one to see if symmetry around chance plays any role. In all the above cases the dependent variable would be the slope of the probit form of the decision curves. 6. To see if the new model might throw light on concurrent schedules, one might take the t-tau form of VI and see if one can determine its overall value from the model. 7. One also might see if one could see the melioration effects from using the model to estimate the change in value when the analog schedules reinforcement rate was altered. Where does this leave us with respect to whole schedules such as VI and choices between schedules such as concurrent schedules? Even without these answers, we might be able to explain choice between schedules using molecular data and the new model. The decrementation of value is a joint function of time and change in relative time and together they explain why melioration works the way it does. Out new model specifies at the micro level the processes that are taking place. Melioration explains some of matching. With schedules such as VI, there would still be problems, not the least being that what the organism does effects the timing and rate of reinforcement. Performance on schedules such as VI are under determined and there is drift (Pear, Rector & Legris, 1982). There could be analogs to those schedules based on t and tau as Schoenfeld and Cole (1972) suggested that might be easier to model using the present findings. The present model solves half of the problem of creating a event to event process model of melioration and therefore matching. It solves how the value changes with changes in schedules. What is missing is how value changes rate and choice. 7 References Commons, M. L. (1973). Decision rules and isosensitivity curves for the discrimination of reinforcement density using a choice procedure. In partial fulfillment of the requirements for the degree of Doctor of Philosophy, Department of Psychology, Faculty of Pure Science, Columbia University, New York City, New York. Commons, M. L. (1978). How time between reinforcement opportunities in reinforcement schedule samples affects their discriminability and perceived value. Paper presented at the First Harvard Symposium on Quantitative Analyses of Behavior: Schedules as Discriminative Stimuli, Harvard University, Cambridge. Commons, M. L. (1979). Decision rules and signal detectability in a reinforcement density discrimination. Journal of the Experimental Analysis of Behavior, 32, 67-74. Commons, M. L. (1981). How reinforcement density is discriminated and scaled. In M. L. Commons & J. A. Nevin (Eds.), Quantitative analyses of behavior: Vol. 1. Discriminative properties of reinforcement schedules (pp. 51-85). Cambridge, MA: Ballinger. Commons, M. L., & Ducheny, J. R. (1979). The relationship between perceived density of reinforcement in a schedule sample and its reinforcement value. Paper presented at the Second Harvard Symposium on Quantitative Analyses of Behavior: Matching and Maximizing Accounts, Harvard University, Cambridge. June.] Commons, M. L., Woodford, M., & Ducheny, J. R. (1982). The relationship between perceived density of reinforcement in a schedule sample and its reinforcing value. In M. L. Commons, R. J. Herrnstein, & H. Rachlin (Eds.), Quantitative analyses of behavior: Vol. 2. Matching and maximizing accounts (pp. 25-78). Cambridge, MA: Ballinger. Commons, M. L., Woodford, M., & Trudeau, E. J. (1991). How each reinforcer contributes to value: "Noise" must reduce reinforcer value hyperbolically. In M. L. Commons, M. C. Davison, J. A. Nevin (Eds), Signal detection: Vol. 11. Quantitative analyses of behavior (pp. 139-168). Hillsdale, NJ: Lawrence Erlbaum Associates. Davison, M. (1988). Delay of reinforcers in a concurrent-chain schedule: An extension of the hyperbolic-decay model. Journal of Experimental Analysis Behavior; 50(2): 219–236. Dickinson, A. (1980). Contemporary animal learning theory. Cambridge, England: Cambridge University Press. Dunn, R., & Fantino, E. (1982). Choice and the relative immediacy of reinforcement. Journal Experimental Analysis of Behavior, 38(3):321–326. Egan, J. P. (1975). Signal detection theory and ROC analysis. New York, NY: Academic Press. Fantino, E. J., Abarca, N. & Dunn, R. M. (1987). The delay-Reduction hypothesis: Extensions to Foraging and Three-Alternative Choice Quantitative analyses of behavior: Vol. 5. The effect of delay and of intervening events on reinforcement value. (pp. ). Hillsdale, NJ: Lawrence Erlbaum Associates Grace, R. C., & McLean, A. P. (in press). Integrated versus segregated accounting and the magnitude effect in temporal discounting. Psychonomic Bulletin and Review. Hall, G., & Pearce, J. M. (1982). Changes in stimulus associability during conditioning: Implications for theories of acquisition. In M. L. Commons, R. J. Herrnstein, & A. R. Wagner (Eds.), Quantitative analyses of behavior: Vol. 3. Acquisition (pp. 221-240). Cambridge, MA: Ballinger. Herrnstein, R. J. (1981). Self control as response strength. In C. M. Bradshaw, C. P. Lowe & F. Szabadi (Eds.) Recent developments in the quantification of steady-state operant behavior. (pp. 3–20). Elsevier/North-Holland; Amsterdam. 8 Herrnstein, R. J. (1982). Melioration as behavioral dynamism. In M. L. Commons, R. J. Herrnstein, & H. Rachlin (Eds.), Quantitative analyses of behavior: Vol. 2. Matching and maximizing accounts (pp. 433-458). Cambridge, MA: Ballinger. Herrnstein, R. J., & Vaughan, W., Jr. (1980). Melioration and behavioral allocation. In J. E. R. Staddon (Ed.), Limits to action: The allocation of individual behavior (pp. 143-176). New York: Academic Press Mazur, J. E. (1981). Optimization theory fails to predict performance of pigeons in a two-response situation Science 13: 823-825. Mazur, J. E. (1984). Tests of an equivalence rule for fixed and variable reinforcer delays.. Journal of Experimental Psychology: Animal Behavior Processes, 10: 426-436. Mazur, J. E. (1987). An Adjusting procedure for studying delayed reinforcement. In M. L. Commons, J. E. Mazur, J. A Nevin, & H. Rachlin, H. (Eds.). Quantitative analyses of behavior: Vol. 5. Effect of delay and intervening events on value. (55 - 73). Hillsdale, NJ: Lawrence Erlbaum Associates. Mazur, J. E. (2001). Hyperbolic value addition and general models of animal choice. Psychological Review, 108, 96-112. Mosteller, F. & Tukey, J. W. (1977). Data analysis and regression: A second course in statistics, Reading, Massachusettes.Addison-Wesley. Nevin, J. A. (1992). An integrative model for the study of behavioral momentum. Journal of the Experimental Analysis of Behavior, 57, 301-316. Pear, J. J., Rector, B.L., & Legris, A. (1982). Towards analyzing the continuity of behavior. In M. L. Commons, R. J. Herrnstein, & H. Rachlin (Eds.), Quantitative analyses of behavior: Vol. 2. Matching and maximizing accounts (pp. 3-24). Cambridge, MA: Ballinger. Pearce, J. M., Kaye, H., & Hall, G. (1982). Predictive accuracy and stimulus associability: Development of a model for Pavlovian learning. In M. L. Commons, R. J. Herrnstein, & A. R. Wagner (Eds.), Quantitative analyses of behavior: Vol. 3. Acquisition (pp. 241-256). Cambridge, MA: Ballinger. Raiffa, H. (1968). Decision analysis: Introductory lectures on choices under uncertainty. Reading, MA: AddisonWesley. Raiffa, H., & Schlaifer, R. O. (1961). Applied statistical decision theory. Boston: Division of Research, The Harvard Business School. Schoenfeld, W. N., & Cole, B. K. (1972). Stimulus schedules: the t-tau systems. New York: Harper and Row. Squires, N., & Fantino, E. (1971). A model for choice in simple concurrent and concurrent-chains schedules. Journal of the Experimental Analysis of Behavior; 15(1): 27–38. Stubbs, D. A,, Pliskoff, S. S.,& Reid, H. M. (1977). Concurrent schedules: A quantitative relation between changeover behavior and its consequences. Journal of the Experimental Analysis of Behavior. 27:85–96. Williams, B. (1982). Blocking the response-reinforcer association. In M. L. Commons, R. J. Herrnstein, & A. R. Wagner (Eds.), Quantitative analyses of behavior: Vol. 3. Acquisition (pp. 427-448). Cambridge, MA: Ballinger. Vaughan, W., Jr. (1976). Optimization and reinforcement. Unpublished doctoral thesis, Harvard University. 9 Vaughan, W.., Jr. (1981). Melioration, matching, and maximization. Journal of the Experimental Analysis of Behavior, 36(2): 141–149. Vaughan, W., Jr., & Herrnstein, R. J. (1987). Stability, melioration, and natural selection. In L. Green, & J.H. Kagel (Eds.), Advances in Behavioral Economics, Vol. 1 (pp. 185-215). Norwood, NJ: Ablex. 10 Chung and Herrnstein (1967) 1 Commons (1973, 1978 ....................................................................................................................................................2 Commons (1978 ..............................................................................................................................................................1 Commons (1978) .............................................................................................................................................................1 Commons and Ducheny ..................................................................................................................................................1 Commons, 1978, 1981 .....................................................................................................................................................4 Commons, 1983 ...............................................................................................................................................................2 Commons, et al (1982 ......................................................................................................................................................2 Commons, M. L., & Ducheny, J. R. (1979 .....................................................................................................................8 Commons, Woodford & Ducheny, 1982 .........................................................................................................................4 Commons, Woodford & Trudeau, 1991 ..........................................................................................................................4 Commons, Woodford and Ducheny (1982) ................................................................................................................. 1, 4 Commons, Woodford and Ducheny (1982), ....................................................................................................................1 Commons, Woodford and Ducheny, 1982) .....................................................................................................................4 Commons, Woodford and Ducheny’s (1982) ..................................................................................................................1 Commons, Woodford, & Ducheny, 1982; .......................................................................................................................6 Commons, Woodford, & Trudeau, 141 ...........................................................................................................................6 Dickenson, 1980 ..............................................................................................................................................................6 Egan, 1975 .......................................................................................................................................................................6 Gibbon's (1977) ...............................................................................................................................................................4 Hall & Pearce, 1982.........................................................................................................................................................6 Herrnstein (1981).............................................................................................................................................................1 Herrnstein and Vaughn (1980 ..........................................................................................................................................6 Herrnstein and Vaughn (1980, Herrnstein, 1982 .............................................................................................................6 Herrnstein, 1982 ..............................................................................................................................................................6 Herrnstein's (1981) ..........................................................................................................................................................4 Mazur (198 ......................................................................................................................................................................1 Mazur (1981) ...................................................................................................................................................................3 Mazur (1984, 1987 ...................................................................................................................................................... 2, 4 Mazur (1984, 1987) .........................................................................................................................................................5 Mazur and Hastie (1978 ..................................................................................................................................................1 Mazur and Hastie (1978) .................................................................................................................................................5 Mazur and Hastie’s (1978) ..............................................................................................................................................4 Mazur, 1987 .....................................................................................................................................................................6 Mazur’s (1981) ................................................................................................................................................................3 Mazur’s (2001) ...............................................................................................................................................................1 Mazur’s 1987 ...................................................................................................................................................................3 McCarthy & White, 1987 ................................................................................................................................................1 Pear, Rector & Legris, 1982 ............................................................................................................................................7 Pearce, Kaye & Hall, 1982 ..............................................................................................................................................6 Raiffa & Schlaifer, 1961 ..................................................................................................................................................6 Raiffa, 1968 .....................................................................................................................................................................6 Schoenfeld & Cole, 1972) ...............................................................................................................................................2 Schoenfeld and Cole (1972) ............................................................................................................................................7 Squires & Fantino, 1971 ..................................................................................................................................................1 Steele-Feldman (2006) ....................................................................................................................................................4 Steele€Feldman, 2006 .....................................................................................................................................................2 Stubbs, Pliskoff,& Reid, 1977) .......................................................................................................................................3 Vaughn, 1981 ..................................................................................................................................................................6 Williams, 1982 ................................................................................................................................................................6 11 Figure Captions Figure 1: The top two panels show the fits of perceived value to data for 4-cycle and 6-cycle discrimination trials. The bottom panel shows preferred value data from the preference situation. Reproduced from Commons, Woodford and Ducheny (1982) with permission of Erlbaum Associates and Harper and Row. Figure 2: Diagram of stimulus period and choice period during a discrimination trial. Reproduced from Commons, Woodford and Ducheny (1982) with permission of Erlbaum Associates and Harper and Row. Figure 3 Overall probability distribution during the lean schedule (p = .25) and the rich schedule (p =.75), for the 4 cycle situation (left panel) and the 6 cycle situation (right panel). Reproduced from Commons, Woodford and Ducheny (1982) with permission of Erlbaum Associates and Harper and Row. Figure 4: Data from discrimination schedule study. On the y-axis is perceived value. The left column is of the overall data with the regular and probe trials combined. The send column shows the regular trial. The third column shows the doubling of cycle length probe trials. The fourth column shows the tripling of cycle length probe trials. The six column shows six cycles rather than four cycles. Reproduced from Commons, Woodford and Ducheny (1982) with permission of Erlbaum Associates and Harper and Row 12 13 DISCRIMINATION TRIAL FR 1 C4 C3 SR L RICH C2 C1 R FR 1 NO SR T SCHEDULE SAMPLE FR 1 C4 C3 NO SR L LEAN C2 C1 R FR 1 SR T SCHEDULE SAMPLE STIMULUS PERIOD CHOICE PERIOD 4X CYCLE LENGTH IN SECONDS 2X CYCLE LENGTH IN SECONDS DISCRIMINATION TRIAL FR 1 RICH C4 C3 C2 SR L C1 R FR 1 NO SR T SCHEDULE SAMPLE FR 1 LEAN C4 C3 C2 NO SR L C1 R T SCHEDULE SAMPLE FR 1 SR STIMULUS PERIOD CHOICE PERIOD 4X CYCLE LENGTH IN SECONDS 2X CYCLE LENGTH IN SECONDS 14 15 16