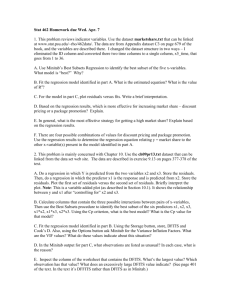

The following partial MINITAB regression output
