Machine Learning

HW1 Linear Regression

Due April 7, 2014

In this exercise, you will practice linear regression for a surface fitting problem by

using the Maximum Likelihood (ML) approach, the Maximum A Posteriori (MAP)

approach, and Bayesian linear regression. You will also practice cross-validation

to select model parameters for the surface fitting problem. Please write MATLAB

programs to solve the following problems.

Data:

In this exercise, the input data are the measured

relative humidity across the United States.

(http://graphical.weather.gov/sectors/conus.php)

You are given one Training_data_hw1.mat file and one

Testing_data_hw1.mat file.

In the Training_data_hw1.mat file,

X_train is a 400x3 matrix, whose first column and second column record the

vertical position x1 and horizontal position x2 of the 400 training points,

respectively. The third column records the “sea index” x3: for each data point,

x3 = 1 for sea and x3 = 0 for land.

T_train is a 400x1 vector, which records the relative humidity (target values) of

the training points.

In the Testing_data_hw1.mat file,

X_test is a 53200x3 matrix, whose first column and second column record the

vertical position and horizontal position of 53200 testing points, respectively.

The third column records the “sea index”.

T_test is a 53200x1 vector, which records the relative humidity (target values) of

the testing points.

x1_bound is a vector recording the min/max values of the vertical index.

X2_bound is a vector recording the min/max values of the horizontal index.

Feature Vector:

The feature vector is defined as

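$$\boldsymbol{\phi}(\mathbf{x}) = \left[ \phi_1(\mathbf{x}), \ldots, \phi_k(\mathbf{x}), \ldots, \phi_P(\mathbf{x}), \phi_{P+1}(\mathbf{x}), \phi_{P+2}(\mathbf{x}) \right]$$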

To define the feature vector, we uniformly place P Gaussian basis functions over the

spatial domain, with P = O1 × O2. Here, O1 and O2 denote the number of locations

along the x1 and x2 directions, respectively. For 1 ≤ k ≤ P, we define

the Gaussian basis functions as

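$$\phi_k(\mathbf{x}) = \exp\left\{ -\frac{(x_1 - \mu_i)^2}{2 s_1^2} - \frac{(x_2 - \mu_j)^2}{2 s_2^2} \right\}, \quad \text{for } 1 \le i \le O_1,\ 1 \le j \le O_2,$$

where

$$k = O_2 (i - 1) + j,$$

$$\mu_i = \frac{x_{1\_\mathrm{max}} - x_{1\_\mathrm{min}}}{O_1 - 1}\,(i - 1), \qquad \mu_j = \frac{x_{2\_\mathrm{max}} - x_{2\_\mathrm{min}}}{O_2 - 1}\,(j - 1),$$

$$s_1 = \frac{x_{1\_\mathrm{max}} - x_{1\_\mathrm{min}}}{O_1 - 1}, \qquad s_2 = \frac{x_{2\_\mathrm{max}} - x_{2\_\mathrm{min}}}{O_2 - 1}.$$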

Besides the P Gaussian basis functions, we define the last two components of the

feature vector as $\phi_{P+1}(\mathbf{x}) = x_3$ (sea index) and $\phi_{P+2}(\mathbf{x}) = 1$ (bias).
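The feature definition above can be sketched in MATLAB as follows. This is only an illustration under stated assumptions: the five data rows and the bounds 0..30 and 0..60 are made-up stand-ins (the handout supplies the real bounds in the .mat file), and the variable names `c1`, `c2`, `G`, and `Phi` are hypothetical.

```matlab
% Hypothetical stand-in for a few rows of X_train: columns are [x1, x2, x3].
X = [ 0  0 0;
     10 40 1;
     30 60 0;
     15 30 1;
      5 55 0];
x1_min = 0; x1_max = 30;    % assumed bounds for x1 (vertical position)
x2_min = 0; x2_max = 60;    % assumed bounds for x2 (horizontal position)
O1 = 4; O2 = 4;             % number of basis centres along x1 and x2
P  = O1*O2;

% Widths and centres, following the definitions in the text.
s1  = (x1_max - x1_min)/(O1 - 1);
s2  = (x2_max - x2_min)/(O2 - 1);
mu1 = s1*(0:O1-1);          % mu_i for i = 1..O1
mu2 = s2*(0:O2-1);          % mu_j for j = 1..O2

% Order the centres so that column k = O2*(i-1) + j (j varies fastest).
c1 = kron(mu1, ones(1, O2));
c2 = repmat(mu2, 1, O1);

% N-by-P block of Gaussian basis values.
G = exp(-bsxfun(@minus, X(:,1), c1).^2/(2*s1^2) ...
        - bsxfun(@minus, X(:,2), c2).^2/(2*s2^2));

% Append the sea index (phi_{P+1}) and the bias (phi_{P+2}).
Phi = [G, X(:,3), ones(size(X,1), 1)];
disp(size(Phi));
```

Each row of `Phi` is the feature vector of one data point. A point that sits exactly on a centre, such as the second row here (i = 2, j = 3, so k = 7), gets the value 1 in the matching column.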

Linear Regression:

Please fit the data by using the linear prediction form

$$y(\mathbf{x}, \mathbf{w}) = \sum_{j=1}^{P+2} w_j\, \phi_j(\mathbf{x})$$

and minimizing the mean square error function:

$$E(\mathbf{w}) = \frac{1}{2N} \sum_{i=1}^{N} \left\{ y(\mathbf{x}^{(i)}, \mathbf{w}) - t^{(i)} \right\}^2 .$$

I. Maximum Likelihood (ML) approach

Here, we ask you to estimate the coefficient vector $\mathbf{w}_{\mathrm{ML}}$ that minimizes the

mean square error. Solve the following questions for (O1, O2) = (4,4), (8,8),

(12,12), (16,16) and (20,20), respectively.

1. Compute $\mathbf{w}_{\mathrm{ML}}$ with the training data X_train and T_train.

2. Use the testing data X_test to predict the output value for each point and

plot the distribution Y(x1, x2) of the predicted relative humidity over the

spatial region x1_min ≤ x1 ≤ x1_max and x2_min ≤ x2 ≤ x2_max.

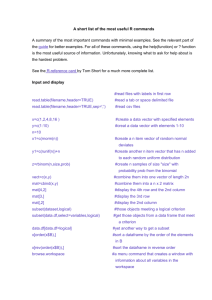

Example

3. Plot the squared error distribution $\left( y(x_1, x_2) - t(x_1, x_2) \right)^2$ over the spatial

region x1_min ≤ x1 ≤ x1_max and x2_min ≤ x2 ≤ x2_max. Please also plot the training

data on the distribution map.

Example (red points are training data)
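One possible way to approach the ML questions is a least-squares solve on the design matrix. The sketch below uses synthetic grid data as a stand-in for X_train and T_train (the real data would come from `load('Training_data_hw1.mat')`). The bounds, the synthetic target, and all variable names are assumptions for illustration. It uses `pinv` rather than the normal equations, which is a choice made here and not something the handout prescribes.

```matlab
% Hypothetical stand-in data on a regular grid; columns of X are [x1, x2, x3].
[X2g, X1g] = meshgrid(linspace(0, 60, 20), linspace(0, 30, 15));
X = [X1g(:), X2g(:), double(X2g(:) > 40)];
t = 50 + 20*sin(X(:,1)/10) + 5*cos(X(:,2)/15) + 10*X(:,3);  % synthetic humidity
N = size(X, 1);
O1 = 4; O2 = 4;

% Design matrix: Gaussian bases, then sea index, then bias.
s1 = 30/(O1 - 1);                       % assumed x1 range of 0..30
s2 = 60/(O2 - 1);                       % assumed x2 range of 0..60
c1 = kron(s1*(0:O1-1), ones(1, O2));    % centre mu_i for column k = O2*(i-1)+j
c2 = repmat(s2*(0:O2-1), 1, O1);        % centre mu_j for the same column
G  = exp(-bsxfun(@minus, X(:,1), c1).^2/(2*s1^2) ...
         - bsxfun(@minus, X(:,2), c2).^2/(2*s2^2));
Phi = [G, X(:,3), ones(N, 1)];

% Least-squares weights via the pseudo-inverse (minimum-norm solution).
w_ml = pinv(Phi)*t;

% Predictions, pointwise squared error, and E(w) as defined in the text.
y      = Phi*w_ml;
sq_err = (y - t).^2;
E      = sum(sq_err)/(2*N);
fprintf('training E(w_ML) = %g\n', E);
```

For the testing data, the same construction would be applied to X_test to obtain its design matrix, and the predictions could be displayed with, for example, `scatter(X(:,2), X(:,1), 8, y, 'filled')`. Note that for (O1, O2) = (20, 20) there are 402 features but only 400 training points, so $\Phi^T \Phi$ is singular. This is why the sketch avoids inverting it directly.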

II. Maximum A Posteriori (MAP) approach

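Please apply a Gaussian model for the prior $p(\mathbf{w}) = \mathcal{N}(\mathbf{w} \mid \mathbf{m}_0 = \mathbf{0}, \mathbf{S}_0^{-1} = \mathbf{I})$. The

precision $\beta$ of the model $p(\mathbf{t} \mid \mathbf{X}, \mathbf{w}, \beta)$ is chosen such that $\beta^{-1} = 0.1$.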

Solve the following questions for (O1, O2) = (4,4), (8,8), (12,12), (16,16) and

(20,20), respectively.

1. Compute $\mathbf{w}_{\mathrm{MAP}}$ based on the training data.

2. Use the testing data X_test to predict the output value for each point and

plot the distribution Y(x1, x2) of the predicted relative humidity over the

spatial region x1_min ≤ x1 ≤ x1_max and x2_min ≤ x2 ≤ x2_max.

3. Plot the squared error distribution $\left( y(x_1, x_2) - t(x_1, x_2) \right)^2$ over the spatial

region x1_min ≤ x1 ≤ x1_max and x2_min ≤ x2 ≤ x2_max. Please also plot the training

data on the distribution map.
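With a zero-mean Gaussian prior and Gaussian noise, the MAP weights solve a regularized linear system, $(\mathbf{S}_0^{-1} + \beta \Phi^T \Phi)\,\mathbf{w} = \beta \Phi^T \mathbf{t}$. The sketch below illustrates this on synthetic stand-in data. It assumes an identity prior precision and β = 10, which is one reading of the values stated in this section, so substitute the handout's values if they differ. The data, bounds, and variable names are hypothetical.

```matlab
% Hypothetical stand-in data on a regular grid; columns of X are [x1, x2, x3].
[X2g, X1g] = meshgrid(linspace(0, 60, 20), linspace(0, 30, 15));
X = [X1g(:), X2g(:), double(X2g(:) > 40)];
t = 50 + 20*sin(X(:,1)/10) + 5*cos(X(:,2)/15) + 10*X(:,3);  % synthetic humidity
N = size(X, 1);
O1 = 8; O2 = 8;

% Design matrix: Gaussian bases, then sea index, then bias.
s1 = 30/(O1 - 1);                       % assumed x1 range of 0..30
s2 = 60/(O2 - 1);                       % assumed x2 range of 0..60
c1 = kron(s1*(0:O1-1), ones(1, O2));
c2 = repmat(s2*(0:O2-1), 1, O1);
G  = exp(-bsxfun(@minus, X(:,1), c1).^2/(2*s1^2) ...
         - bsxfun(@minus, X(:,2), c2).^2/(2*s2^2));
Phi = [G, X(:,3), ones(N, 1)];
M   = size(Phi, 2);

beta   = 10;          % assumed noise precision (beta^-1 = 0.1)
S0_inv = eye(M);      % assumed prior precision (identity)

% Posterior precision and MAP weights (the prior mean m0 is zero).
A     = S0_inv + beta*(Phi'*Phi);
b     = beta*(Phi'*t);
w_map = A \ b;

E = sum((Phi*w_map - t).^2)/(2*N);
fprintf('training E(w_MAP) = %g\n', E);
```

Because the prior precision is added to $\beta \Phi^T \Phi$, the system stays well posed even when the number of features exceeds the number of training points.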

III. Bayesian approach

Apply the likelihood model and the prior model which you have used in the

previous MAP problem. Compute the mean $\mathbf{m}_N$ and the covariance matrix

$\mathbf{S}_N$ for the posterior distribution $p(\mathbf{w} \mid D) = \mathcal{N}(\mathbf{w} \mid \mathbf{m}_N, \mathbf{S}_N)$. Solve the

following questions for (O1, O2) = (4,4), (8,8), (12,12), (16,16) and (20,20),

respectively.

1. By applying the posterior mean $\mathbf{m}_N$, please plot the predicted surface

$y(x_1, x_2)$ versus x1 and x2.

2. Assume the deduced predictive distribution is expressed as

$p(t \mid \mathbf{x}, D) = \mathcal{N}(t \mid \mathbf{m}_N^T \boldsymbol{\phi}(\mathbf{x}), \sigma_N^2(\mathbf{x}))$. Please plot the distribution $\sigma_N^2(x_1, x_2)$ of

all testing data over the spatial range x1_min ≤ x1 ≤ x1_max and x2_min ≤ x2 ≤ x2_max.

Please also plot the training data on the distribution map.

Example (red points are training data)

3. Please generate three sets of linear coefficients from the parameter

posterior distribution and plot the corresponding surfaces $y(x_1, x_2)$ for

each set.
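The three Bayesian tasks can be sketched together. For a zero-mean Gaussian prior, the standard results are $\mathbf{S}_N^{-1} = \mathbf{S}_0^{-1} + \beta \Phi^T \Phi$, $\mathbf{m}_N = \beta \mathbf{S}_N \Phi^T \mathbf{t}$, and $\sigma_N^2(\mathbf{x}) = \beta^{-1} + \boldsymbol{\phi}(\mathbf{x})^T \mathbf{S}_N \boldsymbol{\phi}(\mathbf{x})$. The code below is a minimal illustration on synthetic stand-in data, assuming an identity prior precision and β = 10. The data, bounds, and variable names are hypothetical, and sampling through a Cholesky factor is one option among several.

```matlab
% Hypothetical stand-in data on a regular grid; columns of X are [x1, x2, x3].
[X2g, X1g] = meshgrid(linspace(0, 60, 20), linspace(0, 30, 15));
X = [X1g(:), X2g(:), double(X2g(:) > 40)];
t = 50 + 20*sin(X(:,1)/10) + 5*cos(X(:,2)/15) + 10*X(:,3);  % synthetic humidity
N = size(X, 1);
O1 = 4; O2 = 4;

% Design matrix: Gaussian bases, then sea index, then bias.
s1 = 30/(O1 - 1);                       % assumed x1 range of 0..30
s2 = 60/(O2 - 1);                       % assumed x2 range of 0..60
c1 = kron(s1*(0:O1-1), ones(1, O2));
c2 = repmat(s2*(0:O2-1), 1, O1);
G  = exp(-bsxfun(@minus, X(:,1), c1).^2/(2*s1^2) ...
         - bsxfun(@minus, X(:,2), c2).^2/(2*s2^2));
Phi = [G, X(:,3), ones(N, 1)];
M   = size(Phi, 2);

beta = 10;                              % assumed noise precision
A    = eye(M) + beta*(Phi'*Phi);        % posterior precision, assuming S0 = I

% Posterior covariance and mean.
S_N = A \ eye(M);
S_N = (S_N + S_N')/2;                   % remove round-off asymmetry
m_N = beta*(S_N*(Phi'*t));

% Predictive variance at every row of Phi.
sigma2 = 1/beta + sum((Phi*S_N).*Phi, 2);

% Three weight vectors drawn from N(m_N, S_N), and their surfaces.
L = chol(S_N, 'lower');
W = bsxfun(@plus, m_N, L*randn(M, 3));
Y = Phi*W;                              % column c is the surface for sample c
```

Here the predictive variance is evaluated at the stand-in training points. For the assignment, the same expression would be applied to the design matrix built from X_test.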

IV. Cross validation

Revisit the MAP approach. Apply 5-fold cross-validation in your training stage to

select the best (O1, O2) from the candidate set {(O1, O2) = (1+k, 1+k)}, where k ∈

{3, 4, …, 19}.

Please plot the averaged validation MSE and the testing MSE versus k.
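A 5-fold cross-validation loop over k might look like the sketch below. It runs on synthetic stand-in data and assumes an identity prior precision and β = 10 for the MAP fit. The random fold assignment, the bounds, and all variable names are assumptions for illustration. The validation error uses the same $1/(2N)$ scaling as $E(\mathbf{w})$.

```matlab
% Hypothetical stand-in data on a regular grid; columns of X are [x1, x2, x3].
[X2g, X1g] = meshgrid(linspace(0, 60, 20), linspace(0, 30, 15));
X = [X1g(:), X2g(:), double(X2g(:) > 40)];
t = 50 + 20*sin(X(:,1)/10) + 5*cos(X(:,2)/15) + 10*X(:,3);  % synthetic humidity
N = size(X, 1);

beta = 10;                          % assumed noise precision
K    = 5;                           % number of folds
ks   = 3:19;                        % candidate k, with O1 = O2 = 1 + k
fold = mod(randperm(N)', K) + 1;    % random fold label 1..K for each point

cv_mse = zeros(size(ks));
for a = 1:numel(ks)
    O  = ks(a) + 1;
    s1 = 30/(O - 1);                % assumed x1 range of 0..30
    s2 = 60/(O - 1);                % assumed x2 range of 0..60
    c1 = kron(s1*(0:O-1), ones(1, O));
    c2 = repmat(s2*(0:O-1), 1, O);
    G  = exp(-bsxfun(@minus, X(:,1), c1).^2/(2*s1^2) ...
             - bsxfun(@minus, X(:,2), c2).^2/(2*s2^2));
    Phi = [G, X(:,3), ones(N, 1)];
    M   = size(Phi, 2);

    err = zeros(1, K);
    for f = 1:K
        va = (fold == f);           % validation rows for this fold
        tr = ~va;                   % remaining rows are used for fitting
        w  = (eye(M) + beta*(Phi(tr,:)'*Phi(tr,:))) \ (beta*(Phi(tr,:)'*t(tr)));
        err(f) = sum((Phi(va,:)*w - t(va)).^2)/(2*sum(va));
    end
    cv_mse(a) = mean(err);          % averaged validation error for this k
end

[~, best] = min(cv_mse);
best_k = ks(best);
fprintf('selected k = %d, (O1, O2) = (%d, %d)\n', best_k, best_k + 1, best_k + 1);
```

The testing MSE for each k could be obtained in the same loop by refitting on all training points and evaluating on the test set. Both curves could then be drawn with `plot(ks, cv_mse)`.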

V. Bonus

You are encouraged to design and to discuss your own regression method to

obtain more accurate fitting results.