Notes week 3: Comparing samples

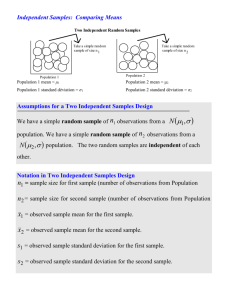

6. Testing for differences between 2 independent samples

The tests of section 5 considered paired observations on the same subject or related subjects. Here we consider tests for differences between 2 independent samples from different populations. These are the kind of tests that would be applied if your data consisted of measurements on a treatment group and an independent, unrelated control group. As with the case of paired data, there are parametric methods that can be used when we can assume that the population distributions are normal or the sample sizes are large. We will also discuss non-parametric, or distribution-free, methods that can be applied when these assumptions are violated. We begin with the parametric approach.

6.1 Independent samples T-test

Suppose we have 2 random samples of observations from 2 populations A and B. Let $n_A$ and $n_B$ denote the sample sizes. Suppose that the sample means and variances from the two populations are $\bar{x}_A, s_A^2$ and $\bar{x}_B, s_B^2$. We assume that the population distributions of the 2 populations are $N(\mu_A, \sigma_A^2)$ and $N(\mu_B, \sigma_B^2)$ and we wish to test for any difference in $\mu_A$ and $\mu_B$. Clearly we should base our test on the difference between the sample means $\bar{x}_A$ and $\bar{x}_B$, taking account of the sample sizes $n_A$ and $n_B$ and also how variable the populations appear to be (as measured by $s_A^2$ and $s_B^2$). Let us suppose that the population variances $\sigma_A^2$ and $\sigma_B^2$ are equal (the common variance assumption). Then in this case we can carry out a t-test of the hypothesis

$$H_0: \mu_A = \mu_B$$

via the following 2 steps.

1. First compute the pooled sample variance

$$s_p^2 = \frac{(n_A - 1)s_A^2 + (n_B - 1)s_B^2}{n_A + n_B - 2}.$$

2. Then compute the t-statistic

$$t = \frac{\bar{x}_A - \bar{x}_B}{s_p\sqrt{\dfrac{1}{n_A} + \dfrac{1}{n_B}}},$$

which, under $H_0$, has a t-distribution on $n_A + n_B - 2$ degrees of freedom.

Then for a 2-sided test, where we test $H_0$ against the general alternative $\mu_A \neq \mu_B$, the p-value is $2P(t_{n_A+n_B-2} \ge |t|)$. For a 1-sided test, e.g. where we test against the alternative hypothesis that $\mu_A > \mu_B$, we compute $P(t_{n_A+n_B-2} \ge t)$.

This is essentially the calculation carried out by SPSS.
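The two steps can be sketched in a few lines of Python. This is only an illustration using the standard library, not the SPSS implementation; the function name `pooled_t` and the variable names are assumptions made for this sketch. The data are the anxiety scores from the drug-trial example later in this section.

```python
from math import sqrt
from statistics import mean, variance

def pooled_t(sample_a, sample_b):
    """Return (t, df, pooled variance) for the equal-variance two-sample t-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    # statistics.variance uses the n - 1 divisor, i.e. the sample variances
    s2_a, s2_b = variance(sample_a), variance(sample_b)
    df = n_a + n_b - 2
    # Step 1: pooled sample variance
    s2_p = ((n_a - 1) * s2_a + (n_b - 1) * s2_b) / df
    # Step 2: t-statistic
    t = (mean(sample_a) - mean(sample_b)) / (sqrt(s2_p) * sqrt(1 / n_a + 1 / n_b))
    return t, df, s2_p

placebo = [63, 75, 82, 66, 59, 62]        # group 1
drug = [72, 48, 59, 65, 53, 61, 57, 73]   # group 2
t, df, s2_p = pooled_t(placebo, drug)
print(f"t = {t:.3f} on {df} df, pooled variance = {s2_p:.2f}")
# prints: t = 1.441 on 12 df, pooled variance = 77.07
```

The p-value itself needs the t-distribution, which the standard library does not provide. If SciPy is available, `scipy.stats.ttest_ind(placebo, drug, equal_var=True)` should return the same statistic together with the 2-sided p-value.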

Unfortunately there may be evidence that the variances $\sigma_A^2$ and $\sigma_B^2$ are not equal, in which case an alternative version of the 2-sample t-test should be used. This is done automatically by SPSS and involves a somewhat different test which does not assume equality of variances. The results of this test should be quoted whenever the built-in test of equality of variances returns a low p-value.
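The notes do not give the formula for the unequal-variance test. The sketch below assumes it is the usual Welch form, with the Welch-Satterthwaite approximation for the degrees of freedom. That assumption appears reasonable, because it reproduces the 'Equal variances not assumed' row of the SPSS output in the example that follows. The function name `welch_t` is hypothetical.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(sample_a, sample_b):
    """Return (t, df) for the unequal-variance (Welch) two-sample t-test."""
    n_a, n_b = len(sample_a), len(sample_b)
    # Squared standard error of each sample mean, estimated separately
    v_a = variance(sample_a) / n_a
    v_b = variance(sample_b) / n_b
    t = (mean(sample_a) - mean(sample_b)) / sqrt(v_a + v_b)
    # Welch-Satterthwaite approximation to the degrees of freedom (assumed form)
    df = (v_a + v_b) ** 2 / (v_a ** 2 / (n_a - 1) + v_b ** 2 / (n_b - 1))
    return t, df

placebo = [63, 75, 82, 66, 59, 62]        # group 1
drug = [72, 48, 59, 65, 53, 61, 57, 73]   # group 2
t_welch, df_welch = welch_t(placebo, drug)
print(f"t = {t_welch:.3f} on {df_welch:.3f} df")
# prints: t = 1.439 on 10.847 df
```

Note that the degrees of freedom are no longer a whole number, which is why SPSS reports a fractional df for this row.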

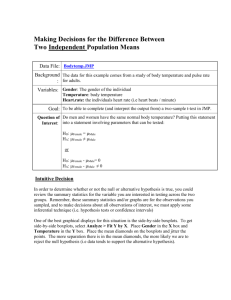

Example: In a trial to test the efficacy of an anti-anxiety drug, self-reported anxiety

scores of a number of patients were recorded. Six of the patients (group 1) had been

treated for the previous month with a placebo, whilst the remaining 8 (group 2) were

treated with an anti-anxiety drug. The data are shown in the following table.

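| Patient No. | Score | Group |
|---|---|---|
| 1 | 63.00 | 1 |
| 2 | 75.00 | 1 |
| 3 | 82.00 | 1 |
| 4 | 66.00 | 1 |
| 5 | 59.00 | 1 |
| 6 | 62.00 | 1 |
| 7 | 72.00 | 2 |
| 8 | 48.00 | 2 |
| 9 | 59.00 | 2 |
| 10 | 65.00 | 2 |
| 11 | 53.00 | 2 |
| 12 | 61.00 | 2 |
| 13 | 57.00 | 2 |
| 14 | 73.00 | 2 |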

To test for differences in the population mean score for the two treatments, go to

Analyze -> Compare Means -> Independent Samples T-test.

Place 'Score' in the test variable window and Group(1,2) as the grouping variable.

In the output window you should see the following.

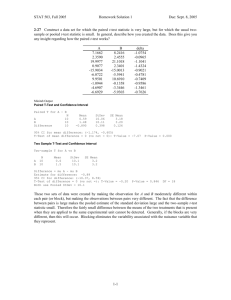

Group Statistics

| | group | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|---|
| score | 1 | 6 | 67.8333 | 8.84119 | 3.60940 |
| | 2 | 8 | 61.0000 | 8.73417 | 3.08800 |

From this we see that the two sample standard deviations are very close. We should

expect that any test of equality of variances should not reject the hypothesis that they

are equal.

Independent Samples Test

The first two columns (F, Sig.) are Levene's Test for Equality of Variances; the remaining columns are the t-test for Equality of Means.

| score | F | Sig. | t | df | Sig. (2-tailed) | Mean Difference | Std. Error Difference | 95% CI of the Difference: Lower | 95% CI of the Difference: Upper |
|---|---|---|---|---|---|---|---|---|---|
| Equal variances assumed | .021 | .887 | 1.441 | 12 | .175 | 6.83333 | 4.74116 | -3.49676 | 17.16343 |
| Equal variances not assumed | | | 1.439 | 10.847 | .178 | 6.83333 | 4.75010 | -3.63957 | 17.30623 |

We find that this is the case: Levene's test for equality of variances returns a large p-value (0.887), so we could justifiably make the common variance assumption and quote the significance in the upper row (0.175) for the 2-tailed test. It would make little difference if we used the test in the lower row (0.178). The message is the same: there is no significant evidence of a difference in mean score between the two treatments (placebo and drug). This is also reflected in the wide confidence interval for the mean difference, which includes zero.

Make sure you indicate which test you have selected when you quote the p-value.
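As a rough check on the confidence interval in the 'Equal variances assumed' row, the interval can be rebuilt as the mean difference plus or minus a t critical value times the standard error of the difference. This is only a sketch: the critical value 2.1788 (the upper 2.5% point of the t-distribution on 12 df) is taken from standard t tables and hard-coded as an assumption.

```python
from math import sqrt
from statistics import mean, variance

placebo = [63, 75, 82, 66, 59, 62]        # group 1
drug = [72, 48, 59, 65, 53, 61, 57, 73]   # group 2

n_a, n_b = len(placebo), len(drug)
df = n_a + n_b - 2
s2_p = ((n_a - 1) * variance(placebo) + (n_b - 1) * variance(drug)) / df
std_err = sqrt(s2_p * (1 / n_a + 1 / n_b))   # standard error of the difference
diff = mean(placebo) - mean(drug)

# Assumed value: upper 2.5% point of t on 12 df, read from standard t tables
T_CRIT_12DF = 2.1788

lower = diff - T_CRIT_12DF * std_err
upper = diff + T_CRIT_12DF * std_err
print(f"difference = {diff:.2f}, 95% CI = ({lower:.2f}, {upper:.2f})")
# prints: difference = 6.83, 95% CI = (-3.50, 17.16)
```

The interval contains zero, which is the same message as the 2-tailed p-value of 0.175.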

We now consider a non-parametric approach to comparing populations on the basis

of independent samples from each.

6.2 Mann-Whitney U-test / Wilcoxon rank-sum test (See Howell p. 647)

This is a very well known test for detecting differences in the distributions of two

populations from two independent random samples. It is the distribution-free analogue

of the t-test for two independent samples. It is in fact a very intuitive test that

people probably use informally when making judgements or comparison between

populations.

Example: Suppose that 5 golfers from the US and 5 golfers from the UK compete in

a stroke-play competition. The nationalities of the golfers in 1st to last position are as

follows:

| Rank | Country | Score |
|---|---|---|
| 1st | US | 66 |
| 2nd | US | 67 |
| 3rd | US | 69 |
| 4th= | US, UK | 72, 72 |
| 6th | UK | 74 |
| 7th | US | 75 |
| 8th | UK | 78 |
| 9th | UK | 79 |
| 10th | UK | 81 |

Your American friend claims that this result is incontrovertible proof that American

golfers are better than UK golfers. Does he/she have a point?

It seems unlikely that golf scores will be normally distributed and the sample sizes

here are small (5 from each population). The parametric t-test does not seem to be

overly sensible. How can we test for differences without assuming any particular

distribution for golf scores?

Your friend's hypothesis is based on the fact that most of the US golfers appear in the

top part of the ranking (with obviously a majority of the UK in the bottom part). But

is this disparity significant? How often would a more extreme 'division' occur if the

distribution of ability were identical between UK and US golfers?

A natural way to measure the degree of 'extremeness' is to count up the rankings for

the American golfers (with appropriate resolution of the tied ranks). The Mann-Whitney U-test is one way of measuring how 'extreme' the disparity in the ranks

actually is. It is based on the logic that, if there were no difference in the distribution

of ability (the null hypothesis H0), then the ordering of the golfers should be a

completely random ordering of the 10. We present the test for general situations and

then apply it to the above data.

The Mann-Whitney U-test

Suppose we have two independent groups (1 and 2) of individuals with $n_1$ and $n_2$ in each (where $n_1 \le n_2$). Suppose that a measurement is taken on each of the $(n_1 + n_2)$ individuals and these are ranked from smallest to largest. Let $R_1$ denote the sum of the ranks for population 1, and let

$$U_1 = R_1 - \frac{n_1(n_1 + 1)}{2}.$$

U1 is a measure of the extent to which R1 exceeds its minimum possible value. Now

when we observe a particular value for U1 we must determine the frequency with

which a value that is more extreme than that observed will occur by chance when the

ranking is a random ordering of the (n1 + n2) individuals. How this is done depends

on whether we are conducting a 1 or 2-sided test.

We also calculate $U_2 = R_2 - \dfrac{n_2(n_2 + 1)}{2}$, which we can get directly from $U_1$, since it turns out that

$$U_2 = n_1 n_2 - U_1.$$

Moreover, it can be shown that under H0, U1 and U2 have exactly the same

distribution.
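The definitions of U1 and U2 can be sketched as follows, using the golf scores from the example above. This is a minimal illustration rather than a library routine: the helper names `midranks` and `mann_whitney_u` are made up for this sketch, and tied scores are given their average rank.

```python
def midranks(values):
    """Rank values from smallest to largest, giving tied values their average rank."""
    ordered = sorted(values)
    rank_of = {}
    for v in set(ordered):
        first = ordered.index(v) + 1          # lowest rank occupied by v
        last = first + ordered.count(v) - 1   # highest rank occupied by v
        rank_of[v] = (first + last) / 2
    return [rank_of[v] for v in values]

def mann_whitney_u(group1, group2):
    """Return (U1, U2) computed from the rank sums R1 and R2."""
    n1, n2 = len(group1), len(group2)
    ranks = midranks(list(group1) + list(group2))
    r1, r2 = sum(ranks[:n1]), sum(ranks[n1:])
    u1 = r1 - n1 * (n1 + 1) / 2
    u2 = r2 - n2 * (n2 + 1) / 2
    return u1, u2

us = [66, 67, 69, 72, 75]   # group 1
uk = [72, 74, 78, 79, 81]   # group 2
u1, u2 = mann_whitney_u(us, uk)
print(u1, u2, u1 + u2 == len(us) * len(uk))
# prints: 2.5 22.5 True
```

The final `True` confirms the identity U2 = n1n2 - U1 for these data.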

1-sided tests: Suppose our alternative hypothesis is such that we believe the ranks in group 1 will typically be lower than in group 2. Then, if $U_1 = U$ and this is the lower of the values $U_1$ and $U_2$, we would report as our p-value the probability $P(U_1 \le U \mid H_0)$. If $U_2$ were the smaller value then there would be no evidence against $H_0$.

2-sided tests: For the 2-sided test we will reject for values of $U_1$ that are too small and also if we get a value of $U_2$ that is too small. In this case we take the minimum of $U_1$ and $U_2$, $U = \min\{U_1, U_2\}$, and report $2P(U_1 \le U)$ as the p-value.

Tied ranks: If we have one or more tied scores then we can either assign an average

rank to each of the tied ranks, or break the tie in such a way that it's harder to reject

H0.

For the golfing example let group 1 be the US team and group 2 the UK team. We have $n_1 = n_2 = 5$. Suppose we resolve the tied ranks by giving them both the value 4.5 (the average of the tied ranks 4 and 5). Now

$$U_1 = R_1 - \frac{n_1(n_1 + 1)}{2} = (1 + 2 + 3 + 4.5 + 7) - \frac{5 \times 6}{2} = 17.5 - 15 = 2.5,$$

$$U_2 = 37.5 - 15 = 22.5 \quad (\text{also} = 25 - U_1).$$

For a 1-sided test we compute $P(U_1 \le 2.5)$.

An idea of this 1-sided probability can be obtained from Table 21 (p. 66) of the blue

tables.

Look for the row of entries for $n_1 = 5$, $n_2 = 5$. These give you the largest integer value for $U_1$ for which the 1-sided p-value is less than 5%, 2.5%, 1%, 0.5% or 0.1%. Our value of 2.5 corresponds to a p-value (1-sided) which is less than 5% and perhaps also less than 2.5%, with a 2-sided p-value certainly less than 10% and perhaps less than 5%. There appears to be some evidence that there is a difference in ability.
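Because the samples are so small, the tail probability bounded from the tables can also be found exactly by brute force. Under H0 every choice of 5 of the 10 rank positions for the US golfers is equally likely, so we can list all of them and count how many give a value of U1 no larger than the one observed. The sketch below assumes the tied scores keep their average rank of 4.5; the variable names are illustrative only.

```python
from itertools import combinations

# Midranks for the ten golfers; the two tied scores of 72 share rank 4.5
all_ranks = [1, 2, 3, 4.5, 4.5, 6, 7, 8, 9, 10]
n1 = 5
observed_u1 = 2.5

def u_from_ranks(ranks):
    """U1 for a given set of group-1 ranks."""
    return sum(ranks) - n1 * (n1 + 1) / 2

# combinations() picks positions, so the two 4.5s count as distinct golfers
u_values = [u_from_ranks(c) for c in combinations(all_ranks, n1)]
total = len(u_values)
lower_tail = sum(u <= observed_u1 for u in u_values)

p_one_sided = lower_tail / total
p_two_sided = 2 * p_one_sided
print(f"{lower_tail} of {total} orderings give U1 <= {observed_u1}")
print(f"1-sided p = {p_one_sided:.3f}, 2-sided p = {p_two_sided:.3f}")
# prints: 5 of 252 orderings give U1 <= 2.5
# prints: 1-sided p = 0.020, 2-sided p = 0.040
```

These values are consistent with the bounds read from the tables (1-sided p below 2.5%, 2-sided p below 5%).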

Fortunately, if you're using SPSS you don't have to use the tables. SPSS computes an

exact p-value for the 1- and 2-sided tests.

After typing in scores:

| Country | Score |
|---|---|
| 1 | 66 |
| 1 | 67 |
| 1 | 69 |
| 1 | 72 |
| 1 | 75 |
| 2 | 72 |
| 2 | 74 |
| 2 | 78 |
| 2 | 79 |
| 2 | 81 |

Go to Analyze -> Nonparametric tests -> 2 Independent samples

Then fill in the test and grouping variable, click the 'Exact' button, and check 'Exact'

in the dialogue box.

Mann-Whitney Test

Ranks

| | Country | N | Mean Rank | Sum of Ranks |
|---|---|---|---|---|
| Score | 1 | 5 | 3.50 | 17.50 |
| | 2 | 5 | 7.50 | 37.50 |
| | Total | 10 | | |

Test Statistics (b)

| | Score |
|---|---|
| Mann-Whitney U | 2.500 |
| Wilcoxon W | 17.500 |
| Z | -2.095 |
| Asymp. Sig. (2-tailed) | .036 |
| Exact Sig. [2*(1-tailed Sig.)] | .032 (a) |
| Exact Sig. (2-tailed) | .040 |
| Exact Sig. (1-tailed) | .020 |
| Point Probability | .008 |

a. Not corrected for ties.

b. Grouping Variable: Country

You will see that the test returns not only the Mann-Whitney U, but also the Wilcoxon W statistic. The latter is an alternative statistic which is closely related to the Mann-Whitney U and is obtained directly from the sum of the ranks. We will not use the Wilcoxon form of the test in this course.

The test also returns a 'Z-score'. This calculation is based on the assumption that for larger sample sizes (say > 10 in each group) the distribution of $U_1$ under $H_0$ is approximately normal with mean $\dfrac{n_1 n_2}{2}$ and variance $\dfrac{n_1 n_2 (n_1 + n_2 + 1)}{12}$. In our case $U_1 \sim N(12.5, 22.9)$ (approx) and the quoted Z is approximately

$$Z = \frac{2.5 - 12.5}{\sqrt{22.9}} = -2.09.$$

The asymptotic 2-tailed significance is $2\Pr(Z \le -2.09)$ where $Z \sim N(0, 1)$.
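The normal approximation is easy to reproduce with Python's standard library, as in the sketch below. The first part follows the formulas above. The second part adds a tie correction to the variance, which is not stated in these notes: it is the usual textbook form and is included only as an assumption, because it appears to account for the small gap between -2.09 and the -2.095 that SPSS reports.

```python
from math import sqrt
from statistics import NormalDist

n1, n2 = 5, 5
u1 = 2.5

# Normal approximation as described in the notes
mean_u = n1 * n2 / 2
var_u = n1 * n2 * (n1 + n2 + 1) / 12
z = (u1 - mean_u) / sqrt(var_u)
p_two_sided = 2 * NormalDist().cdf(z)

# Assumed tie correction (standard textbook form, not from these notes):
# each group of t tied observations reduces the variance slightly.
n = n1 + n2
tie_sizes = [2]   # the two golfers tied on a score of 72
tie_term = sum(t ** 3 - t for t in tie_sizes) / (n * (n - 1))
var_u_ties = n1 * n2 / 12 * ((n + 1) - tie_term)
z_ties = (u1 - mean_u) / sqrt(var_u_ties)
p_two_sided_ties = 2 * NormalDist().cdf(z_ties)

print(f"uncorrected:   Z = {z:.3f}, 2-tailed p = {p_two_sided:.3f}")
print(f"tie-corrected: Z = {z_ties:.3f}, 2-tailed p = {p_two_sided_ties:.3f}")
```

With only 5 golfers in each group the approximation is being used well below the suggested sample size, so the exact significance values are the ones to quote here.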

Exercise: Apply the Mann-Whitney U-test to analyse the anti-anxiety drug trial data

in Section 6.1. Compare your results with the t-test analysis.