Case GARCH – Conditionally Heteroscedastic Models

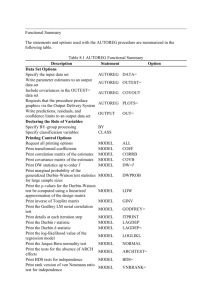

Case GARCH: Modeling Volatility Dynamics

A. Conditional Heteroscedasticity

This lesson introduces you to a recent development in forecasting asset returns. An ARMA forecasting model, which includes a random process as a special case, tracks the level of a variable, defined by its mean at each time period t given the information available at time t. However, asset returns are also subject to variability around the mean, which is measured by the variance of the variable at time t. ARMA models assume that this variance is constant, i.e., homoscedasticity. High-frequency asset returns, however, are not homoscedastic.

Figure 1A plots 1268 daily returns of CISCO stock (CSCO) from June 25, 1996 to July 5, 2001. There is no obvious upward or downward trend, i.e., the mean at t appears constant. However, the variability is not uniform: it comes in chunks mixed with occasional spikes.

Let $e_t$ (the residual at time t) = return at time t - sample mean. Then the squared residual $e_t^2$ estimates the variance of the return at time t. The plot of the squared residuals does not appear to follow a random process: similar values of squared residuals come in chunks. See Figure 1B.

[Figure 1A: The daily return, 6/25/96 to 7/5/01 - CSCO]

[Figure 1B: The squared daily return, 6/25/96 to 7/5/01 - CSCO]

The chunkiness of the squared residuals results from the dependence of the variance of the return at time t on the variances at preceding periods. We can confirm this by computing the correlogram of the squared residuals. See Figure 1C. The standard error of a sample autocorrelation for 1268 observations from a random process is approximately:

$$\frac{1}{\sqrt{1268}} \approx 0.028$$

Comparing the ACF values in Figure 1C with this standard error, we conclude that the autocorrelations are significant at all lags shown, and highly so at most of them. The Q-statistics are also highly significant.
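The correlogram check described above can be sketched in a few lines of Python. This is a minimal illustration, not the EViews routine: the function name `squared_residual_acf` is hypothetical, and the data are synthetic i.i.d. normal returns standing in for the CSCO series, which is not reproduced here.

```python
import numpy as np

def squared_residual_acf(returns, max_lag=10):
    """Sample autocorrelations of the squared residuals e_t^2 for lags 1..max_lag."""
    r = np.asarray(returns, dtype=float)
    e2 = (r - r.mean()) ** 2            # squared residual around the sample mean
    d = e2 - e2.mean()                  # demeaned squared residuals
    denom = np.sum(d ** 2)
    acf = np.array([np.sum(d[k:] * d[:-k]) / denom
                    for k in range(1, max_lag + 1)])
    std_err = 1.0 / np.sqrt(len(r))     # approximate s.e. under a random process
    return acf, std_err

# Synthetic stand-in data (an assumption, NOT the CSCO returns).
rng = np.random.default_rng(0)
demo_returns = rng.normal(0.0, 0.035, size=1268)

acf, std_err = squared_residual_acf(demo_returns)
print(round(std_err, 3))                # 0.028 for T = 1268
print(np.abs(acf) > 2 * std_err)        # flags lags beyond two standard errors
```

For homoscedastic data such as this synthetic series, few if any lags should be flagged; for the daily CSCO returns, the text reports significant autocorrelations at every lag up to 10.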
This dependence of the variance at time t on the variances of preceding periods is called conditional heteroscedasticity. Conditional heteroscedasticity is quite common in high-frequency asset return data.

Figure 1C: Correlogram of the Squared Daily Returns, 6/25/96 to 7/5/01 - CSCO

Sample: 6/25/1996 7/05/2001; Included observations: 1268

| Lag | AC | PAC | Q-Stat | Prob |
|---|---|---|---|---|
| 1 | 0.180 | 0.180 | 41.268 | 0.000 |
| 2 | 0.175 | 0.147 | 80.204 | 0.000 |
| 3 | 0.105 | 0.054 | 94.164 | 0.000 |
| 4 | 0.083 | 0.036 | 103.04 | 0.000 |
| 5 | 0.057 | 0.017 | 107.20 | 0.000 |
| 6 | 0.129 | 0.101 | 128.28 | 0.000 |
| 7 | 0.114 | 0.069 | 144.96 | 0.000 |
| 8 | 0.185 | 0.131 | 188.82 | 0.000 |
| 9 | 0.079 | -0.003 | 196.87 | 0.000 |
| 10 | 0.191 | 0.131 | 243.77 | 0.000 |

We should also note that the spikes in Figure 1A or 1B most likely do not reflect conditional heteroscedasticity. They could be caused by sudden high variances or shifts of the mean in those periods.

Conditional heteroscedasticity may be present in daily returns but absent in monthly returns. As an example, Figures 2A through 2C show the results for the monthly return of CISCO stock for the corresponding time range. The correlogram of the squared residuals does not indicate serial correlation.

[Figure 2A: The monthly return, 96:7 to 00:6 - CSCO]

[Figure 2B: The squared monthly returns, 96:7 to 00:6 - CSCO]

Figure 2C: Correlogram of the squared monthly return - CSCO

Sample: 1996:07 2000:06; Included observations: 48

| Lag | AC | PAC | Q-Stat | Prob |
|---|---|---|---|---|
| 1 | -0.030 | -0.030 | 0.0467 | 0.829 |
| 2 | -0.118 | -0.119 | 0.7718 | 0.680 |
| 3 | -0.058 | -0.066 | 0.9498 | 0.813 |
| 4 | -0.104 | -0.125 | 1.5373 | 0.820 |
| 5 | -0.159 | -0.191 | 2.9436 | 0.709 |
| 6 | 0.118 | 0.068 | 3.7386 | 0.712 |
| 7 | -0.009 | -0.066 | 3.7435 | 0.809 |
| 8 | 0.103 | 0.093 | 4.3818 | 0.821 |
| 9 | 0.026 | 0.002 | 4.4238 | 0.881 |
| 10 | -0.142 | -0.137 | 5.6970 | 0.840 |

B. Modeling Conditional Heteroscedasticity

Forecasting the conditional standard deviation is important because volatility affects the asset price. In 1982, the economist Robert Engle developed a statistical model for conditional heteroscedasticity. Below we introduce the model in the context of forecasting stock returns.

Consider the following formulation for the return of a stock:

$$r_t = \mu + \varepsilon_t$$

Here $r_t$ is the return at time (say, day) t and $\varepsilon_t$ is an independent observation from $N(0, \sigma_t^2)$. Under a random walk hypothesis,

$$\sigma_t^2 = \sigma^2 \quad \text{for all } t,$$

i.e., the variance of $\varepsilon_t$ is constant for all t. As noted earlier, we then say that the return $r_t$ is homoscedastic.

As Figures 1A through 1C show, the variance of the daily return of CISCO stock is not homoscedastic but depends on the variances of preceding periods. One way to model this serial correlation of the variance is:

$$\sigma_t^2 = \alpha_0 + \alpha_1 \varepsilon_{t-1}^2 \tag{1}$$

This formulation is called an Autoregressive Conditional Heteroscedastic (ARCH) model. It is called autoregressive because $\varepsilon_t^2$ is an unbiased estimate of $\sigma_t^2$, i.e., $E[\varepsilon_t^2] = \sigma_t^2$, so that, defining the estimation error $\nu_t = \varepsilon_t^2 - \sigma_t^2$, we can rewrite (1) in terms of $\varepsilon_t^2$ as follows:

$$\varepsilon_t^2 = \sigma_t^2 + \nu_t = \alpha_0 + \alpha_1 \varepsilon_{t-1}^2 + \nu_t \tag{2}$$

Equation (2) is an AR(1) specification for the squared residual. Unlike the AR(1) model for the mean, the variance of the error term $\nu_t$ is not constant. We could thus say that (2) is a heteroscedastic AR(1). One can generalize (2) to include squared residuals at lags 2, 3 and so on as explanatory variables. Following the standard notation for AR models, it is convenient to denote (2) as ARCH(1).
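To make the AR(1) reading of ARCH(1) concrete, the sketch below simulates a series whose variance equals a constant plus a coefficient times the previous squared residual, then regresses each squared residual on its own lag. The function name `simulate_arch1` and the parameter values (0.0009 and 0.3) are illustrative assumptions, not estimates from the CSCO data.

```python
import numpy as np

def simulate_arch1(alpha0, alpha1, n, seed=0):
    """Simulate eps_t ~ N(0, sigma2_t) with sigma2_t = alpha0 + alpha1 * eps_{t-1}^2."""
    rng = np.random.default_rng(seed)
    eps = np.empty(n)
    sigma2 = np.empty(n)
    sigma2[0] = alpha0 / (1.0 - alpha1)      # start at the unconditional variance
    eps[0] = np.sqrt(sigma2[0]) * rng.standard_normal()
    for t in range(1, n):
        sigma2[t] = alpha0 + alpha1 * eps[t - 1] ** 2
        eps[t] = np.sqrt(sigma2[t]) * rng.standard_normal()
    return eps, sigma2

# Hypothetical parameter values chosen only for illustration.
eps, sigma2 = simulate_arch1(alpha0=0.0009, alpha1=0.3, n=20000)

# AR(1) regression of the squared residual on its own first lag.
y, x = eps[1:] ** 2, eps[:-1] ** 2
slope, intercept = np.polyfit(x, y, 1)       # returns [slope, intercept] for degree 1
print(slope, intercept)                      # slope should land near alpha1
```

Because the regression error is itself heteroscedastic, the least-squares slope is a rough check rather than an efficient estimate, which is one reason the model is fitted by maximum likelihood in practice.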
Let $\alpha(L)$ be a p-th order polynomial in the lag operator L, i.e.,

$$\alpha(L) = \alpha_1 L + \cdots + \alpha_p L^p$$

Then ARCH(p) is:

$$\sigma_t^2 = \alpha_0 + \alpha(L)\,\varepsilon_t^2$$

As we learned in ARMA modeling for the mean, it is not wise to keep adding lagged squared residual terms to explain the serial dependence of the variance. Instead, a useful parsimonious generalization of (1) is to include the $\sigma_{t-1}^2$ term as an explanatory variable, i.e.:

$$\sigma_t^2 = \alpha_0 + \alpha_1 \varepsilon_{t-1}^2 + \beta_1 \sigma_{t-1}^2 \tag{3}$$

This generalization was proposed by Bollerslev in 1986 and is called GARCH (Generalized ARCH). It can be shown that (3) is a heteroscedastic ARMA(1,1) specification for the squared residual. With $\nu_t = \varepsilon_t^2 - \sigma_t^2$ as before, (3) gives:

$$
\begin{aligned}
\varepsilon_t^2 &= \sigma_t^2 + \nu_t \\
&= \alpha_0 + \alpha_1 \varepsilon_{t-1}^2 + \beta_1 \sigma_{t-1}^2 + \nu_t \\
&= \alpha_0 + \alpha_1 \varepsilon_{t-1}^2 + \beta_1 \left(\varepsilon_{t-1}^2 - \nu_{t-1}\right) + \nu_t \\
&= \alpha_0 + (\alpha_1 + \beta_1)\,\varepsilon_{t-1}^2 + \nu_t - \beta_1 \nu_{t-1}
\end{aligned}
$$

It is heteroscedastic because the variance of $\nu_t$ is not constant. Following ARCH(1), (3) is denoted as GARCH(1,1). Let $\beta(L)$ be a q-th order polynomial in L, i.e.,

$$\beta(L) = \beta_1 L + \cdots + \beta_q L^q$$

Then GARCH(p, q) is:

$$\sigma_t^2 = \alpha_0 + \alpha(L)\,\varepsilon_t^2 + \beta(L)\,\sigma_t^2$$

C. Model Fitting Using EViews

EViews offers routines that are especially suited to testing for conditional heteroscedasticity in the residual and then fitting an ARCH or a GARCH model. We illustrate these features below, using the daily return of CSCO stock as our data. We begin by fitting a homoscedastic model:

$$\text{csco\_r}_t = \mu + \varepsilon_t$$

Select Quick/Estimate Equation to open the equation specification panel. Specify the model for the mean in the upper space. Note that the Method window indicates LS – Least Squares, which is the best estimation method for homoscedastic data. Click OK and the output is generated.

Select View/Residual Tests to open the menu. A variety of diagnostic tests on the residual appears. A useful test for the presence of conditional heteroscedasticity in the residual is Correlogram – Squared Residuals, which we have seen before in Figure 1C. Another useful test is the ARCH LM test, where LM stands for Lagrange multiplier. The LM test uses an autoregression of the squared residuals. Selecting the test opens a window asking for the number of lags; if ARCH(1) is suspected, we enter 1.
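The idea behind the LM test can also be sketched outside EViews: regress the squared residuals on a constant and their own lags, then multiply the number of observations in that regression by its R-squared. The code below is a minimal sketch under that description; `arch_lm_test` is a hypothetical helper, not an EViews or library function, and the data are synthetic.

```python
import numpy as np

def arch_lm_test(resid, lags=1):
    """Return (T * R^2, R^2) from regressing e_t^2 on a constant and its first `lags` lags."""
    e2 = np.asarray(resid, dtype=float) ** 2
    y = e2[lags:]
    # Column k holds e_{t-k}^2 aligned with y_t = e_t^2.
    lagged = [e2[lags - k:len(e2) - k] for k in range(1, lags + 1)]
    X = np.column_stack([np.ones(len(y))] + lagged)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    ssr = np.sum((y - X @ beta) ** 2)
    sst = np.sum((y - y.mean()) ** 2)
    r2 = 1.0 - ssr / sst
    return len(y) * r2, r2

# Synthetic homoscedastic stand-in data (an assumption, NOT the CSCO returns).
rng = np.random.default_rng(1)
white = rng.normal(0.0, 0.035, size=1268)
stat, r2 = arch_lm_test(white - white.mean(), lags=1)
print(stat, stat > 3.84)    # 3.84 is the 5% critical value of chi-square with 1 d.f.
```

With one lag, the statistic is compared with the chi-square distribution with 1 degree of freedom; with more lags, the degrees of freedom equal the number of lags.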
The EViews output of the test is as follows.

ARCH Test:

| Statistic | Value | Probability |
|---|---|---|
| F-statistic | 45.18800 | 0.000000 |
| Obs*R-squared | 43.69846 | 0.000000 |

Test Equation: Dependent Variable: RESID^2; Method: Least Squares; Sample(adjusted): 6/27/1996 5/04/2001; Included observations: 1267 after adjusting endpoints

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | 0.001039 | 8.70E-05 | 11.95071 | 0.0000 |
| RESID^2(-1) | 0.186028 | 0.027674 | 6.722202 | 0.0000 |

| Statistic | Value |
|---|---|
| R-squared | 0.034490 |
| Adjusted R-squared | 0.033726 |
| S.E. of regression | 0.002831 |
| Sum squared resid | 0.010138 |
| Log likelihood | 5636.875 |
| Durbin-Watson stat | 2.053261 |
| Mean dependent var | 0.001275 |
| S.D. dependent var | 0.002880 |
| Akaike info criterion | -8.894830 |
| Schwarz criterion | -8.886710 |
| F-statistic | 45.18800 |
| Prob(F-statistic) | 0.000000 |

The test statistic is the number of observations T times the $R^2$ of the autoregression, i.e., $T R^2$. Under the null hypothesis of no ARCH(1), the coefficient of $e_{t-1}^2$ is zero, and $T R^2$ should follow a $\chi^2$ distribution with 1 degree of freedom, so that $T R^2$ exceeds 3.84 (from a table of the $\chi^2$ distribution) only 5% of the time. Here $T R^2 = 1267 \times 0.034490 \approx 43.70$, which is so high that we do not hesitate to reject the null hypothesis.

We now proceed to fit an ARCH(1) model to the data. Going back to the beginning of this section, select Quick/Estimate Equation again, but this time open the pull-down menu for Method and select ARCH – Autoregressive Conditional Heteroscedasticity. The panel that opens indicates that it is ready to fit a GARCH(1,1). To fit ARCH(1), replace the 1 in the GARCH window by 0 and click OK. Here are the results for ARCH(1).

Table 1: Fitting ARCH(1) for daily returns of CSCO stocks

Dependent Variable: CSCO_R; Method: ML - ARCH; Sample(adjusted): 6/26/1996 5/04/2001; Included observations: 1268 after adjusting endpoints; Convergence achieved after 13 iterations

| Variable | Coefficient | Std. Error | z-Statistic | Prob. |
|---|---|---|---|---|
| C | 0.001883 | 0.000879 | 2.141806 | 0.0322 |
| Variance Equation | | | | |
| C | 0.000884 | 3.90E-05 | 22.65828 | 0.0000 |
| ARCH(1) | 0.331873 | 0.042354 | 7.835731 | 0.0000 |

| Statistic | Value |
|---|---|
| R-squared | -0.000129 |
| Adjusted R-squared | -0.001710 |
| S.E. of regression | 0.035747 |
| Sum squared resid | 1.616493 |
| Log likelihood | 2475.264 |
| Mean dependent var | 0.001478 |
| S.D. dependent var | 0.035717 |
| Akaike info criterion | -3.899471 |
| Schwarz criterion | -3.887298 |
| Durbin-Watson stat | 2.104299 |

The main results can be summarized as:

$$
\begin{aligned}
\text{csco\_r}_t &= 0.001883 + \varepsilon_t, \qquad \varepsilon_t \sim N(0, \sigma_t^2) \\
\sigma_t^2 &= 0.000884 + 0.331873\,\varepsilon_{t-1}^2
\end{aligned}
\tag{4}
$$

Next, we fit GARCH(1,1) to the same data.

Sample(adjusted): 6/26/1996 5/04/2001; Included observations: 1268 after adjusting endpoints; Convergence achieved after 28 iterations

| Variable | Coefficient | Std. Error | z-Statistic | Prob. |
|---|---|---|---|---|
| C | 0.003038 | 0.000821 | 3.700891 | 0.0002 |
| Variance Equation | | | | |
| C | 1.69E-05 | 5.69E-06 | 2.972380 | 0.0030 |
| ARCH(1) | 0.083822 | 0.012601 | 6.651751 | 0.0000 |
| GARCH(1) | 0.904858 | 0.014280 | 63.36457 | 0.0000 |

| Statistic | Value |
|---|---|
| R-squared | -0.001909 |
| Adjusted R-squared | -0.004287 |
| S.E. of regression | 0.035793 |
| Sum squared resid | 1.619371 |
| Log likelihood | 2565.158 |
| Mean dependent var | 0.001478 |
| S.D. dependent var | 0.035717 |
| Akaike info criterion | -4.039682 |
| Schwarz criterion | -4.023451 |
| Durbin-Watson stat | 2.100559 |

The estimated model for the daily return of CSCO stock is:

$$
\begin{aligned}
r_t &= 0.003038 + \varepsilon_t, \qquad \varepsilon_t \sim N(0, \sigma_t^2) \\
\sigma_t^2 &= 0.0000169 + 0.083822\,\varepsilon_{t-1}^2 + 0.904858\,\sigma_{t-1}^2
\end{aligned}
\tag{5}
$$

Which model, ARCH(1) or GARCH(1,1), fits the data better? First, the coefficient of the GARCH(1) term, i.e., of $\sigma_{t-1}^2$, is highly significant. Second, both models are estimated by maximum likelihood, and GARCH has the higher log likelihood (2565.158 versus 2475.264), which means that the data are more likely to have come from the GARCH process than from the ARCH process. Third, GARCH uses one more parameter than ARCH. Both the Akaike information criterion and the Schwarz criterion adjust the likelihood for the number of parameters; the lower the value of these criteria, the better the fit. GARCH has lower values than ARCH on both criteria (-4.039682 versus -3.899471 for Akaike, -4.023451 versus -3.887298 for Schwarz). On these grounds, we opt for GARCH over ARCH.
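The two information criteria in this comparison can be recomputed from the reported log likelihoods. The sketch below assumes the per-observation form (minus twice the log likelihood plus a penalty, all divided by the number of observations); that form is an assumption made here because it reproduces the reported values, and the function name is hypothetical. Parameter counts are 3 for ARCH(1) (mean constant, variance constant, ARCH term) and 4 for GARCH(1,1).

```python
import math

def per_observation_criteria(loglik, n_params, n_obs):
    """Akaike and Schwarz criteria scaled by the number of observations."""
    aic = -2.0 * loglik / n_obs + 2.0 * n_params / n_obs
    sc = -2.0 * loglik / n_obs + n_params * math.log(n_obs) / n_obs
    return aic, sc

# Log likelihoods and sample size taken from the two estimation outputs.
arch_aic, arch_sc = per_observation_criteria(2475.264, n_params=3, n_obs=1268)
garch_aic, garch_sc = per_observation_criteria(2565.158, n_params=4, n_obs=1268)

print(round(arch_aic, 4), round(arch_sc, 4))     # -3.8995 -3.8873
print(round(garch_aic, 4), round(garch_sc, 4))   # -4.0397 -4.0235
```

The gain in log likelihood from the extra GARCH parameter far outweighs the penalty either criterion charges for it, which is why both criteria favor GARCH(1,1).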
Is there room for improvement? To answer the question, select View/Residual Tests/Correlogram – Squared Residuals in the GARCH output. EViews computes the correlogram for the standardized residual:

$$z_t = \frac{e_t}{\sigma_t}$$

We can also select View/Residual Tests/ARCH LM Test and get the following result (only a part of the output is shown).

ARCH Test:

| Statistic | Value | Probability |
|---|---|---|
| F-statistic | 4.324190 | 0.037775 |
| Obs*R-squared | 4.316272 | 0.037750 |

Test Equation: Dependent Variable: STD_RESID^2; Method: Least Squares; Sample(adjusted): 6/27/1996 5/04/2001; Included observations: 1267 after adjusting endpoints

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | 0.942968 | 0.055291 | 17.05459 | 0.0000 |
| STD_RESID^2(-1) | 0.058457 | 0.028111 | 2.079469 | 0.0378 |

These tests indicate that the conditional heteroscedasticity is mostly eliminated in the standardized residual. (The autocorrelations are still significant, but their values are small.) Therefore, we conclude that the daily return of CISCO stock follows GARCH(1,1) as summarized in (5).

It is instructive to compute the correlogram for the standardized squared residual. The autocorrelations are highly significant after lag 1, which indicates there is still significant conditional heteroscedasticity left in the standardized residual.

How does the estimated model track the heteroscedasticity in the data? In the GARCH(1,1) estimation output, open the View/Conditional SD menu. The graph of the conditional standard deviation, as computed by the variance equation in (5), then appears.

[Figure: Conditional standard deviation of the daily CSCO return from the GARCH(1,1) fit]

D. Forecasting Using GARCH

Here is the GARCH(1,1) model estimated for the daily return of CSCO stock shown earlier.

$$
\begin{aligned}
r_t &= 0.003038 + \varepsilon_t, \qquad \varepsilon_t \sim N(0, \sigma_t^2) \\
\sigma_t^2 &= 0.0000169 + 0.083822\,\varepsilon_{t-1}^2 + 0.904858\,\sigma_{t-1}^2
\end{aligned}
\tag{5}
$$

How can we use this model for forecasting purposes? First, in constructing an interval forecast of the return, we can account for the changing standard deviation by applying the second equation in (5) for $\sigma_t^2$.
Second, forecasting the future value of the variance itself is important for assessing the risk of investing in CSCO stock. These forecasting advantages of the model apply in the short run. For a long-run forecast of the variance, the MA effect quickly disappears and the AR effect converges to the constant of the variance equation divided by one minus the sum of the ARCH and GARCH coefficients:

$$\frac{0.0000169}{1 - 0.083822 - 0.904858} \approx 0.001493$$

which is close to the sample variance of the data ($0.035717^2 \approx 0.001276$). That is, for a long-run forecast, the sample mean and the sample variance are approximately the best forecasts of the expected return and the risk.
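Both uses of the model can be sketched with the estimates reported in (5). In the code below, the one-step variance applies the fitted variance equation, and later steps use the sum of the ARCH and GARCH coefficients as the persistence. The function name, the last residual (0.08), the last conditional variance (0.002), and the 1.96 multiplier for a 95% normal interval are illustrative assumptions, not values from the source.

```python
import numpy as np

# Estimates reported in equation (5).
MU, ALPHA0, ALPHA1, BETA1 = 0.003038, 0.0000169, 0.083822, 0.904858

def garch11_variance_forecast(last_eps, last_sigma2, horizon):
    """Forecast the conditional variance for 1..horizon steps ahead."""
    path = np.empty(horizon)
    # One step ahead: apply the variance equation directly.
    path[0] = ALPHA0 + ALPHA1 * last_eps ** 2 + BETA1 * last_sigma2
    # Further ahead: the expected squared residual equals the forecast variance.
    for h in range(1, horizon):
        path[h] = ALPHA0 + (ALPHA1 + BETA1) * path[h - 1]
    return path

long_run = ALPHA0 / (1.0 - ALPHA1 - BETA1)
print(round(long_run, 6))                         # 0.001493

# Hypothetical starting point: a large shock on a volatile day.
path = garch11_variance_forecast(last_eps=0.08, last_sigma2=0.002, horizon=1000)

# One-step 95% interval forecast of the return under normality.
half_width = 1.96 * np.sqrt(path[0])
print(MU - half_width, MU + half_width)
print(path[0], path[-1])                          # the far forecast approaches long_run
```

Because the persistence is about 0.989, the forecast variance decays toward its long-run level slowly in calendar terms, so the short-run advantage of the model can span several weeks of daily data.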