Vegetation Modeling I (5.3 MB ppt)

advertisement

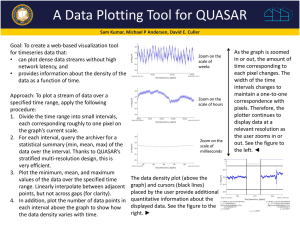

Vegetation Modeling

1

Outline

Model types

Predictive models

Predictor data

Predictive model types (parametric/nonparametric)

Model Example (Tree-based/Random Forests)

Modeling dataset

• Response/Predictor data

• Discussion – scale of predictors

• Predictor data extraction

Data exploration

•

•

•

•

Summary statistics/NA values

attaching data frame

Predictor variables

Response variables (binary, continuous)

Model generation

• Tree-based models

• Random Forests (classification/regression trees)

• Variable importance/proximity

Model prediction

• Polygon data

• Predictor data (clipping, stacking)

• Apply model and display map

Modelmap

• Build model

• Model diagnostics

• Make map

2

Vegetation Modeling

## In general, there are 3 reasons we model vegetation:

# 1. Explanatory: to explain why something is happening… find a pattern or a cause.

# 2. Descriptive: to see an association between variables, not aimed at prediction.

# 3. Predictive: to predict an occurrence based on known attributes.

## Examples:

Is the height of a tree related to its diameter?

Did the last 20 years of drought affect tree mortality rates?

Are forest disturbances changing the carbon cycle?

Can we predict the distribution of vegetation 100 years from now based on climate models?

Can we predict the current distribution of vegetation across the landscape from the spectral

signature of remotely-sensed data?

## An article on different reasons for modeling.

Shmueli, G. 2010. To Explain or to Predict? Statistical Science 25(3):289-310.

3

Predictive Vegetation Models

## Predicting the distribution of vegetation across the landscape from available maps of

environmental variables, such as geology, topography, and climate and spectral data

from remotely-sensed imagery or products.

## Statistical models are built by finding relationships between vegetation data and the

available digital data and then applied across given digital landscapes.

Integrate forest inventory data . .

. .with available digital data . .

• Satellite imagery

• DEMs

• Soils

. .and generate maps of forest attributes.

y = f(x1 + x2 + xn)

. .using flexible statistical

models and GIS tools . .

4

Predictor Data

## Digital environmental data and remotely-sensed products are becoming increasingly

more available at many different scales.

Remotely-sensed data and derived products

•

•

Snapshot of what is on the ground

Caution: represents current vegetation distributions based on reflectance patterns, but does not

explain potential occurrences of vegetation.

Topography variables (elevation, aspect, slope)

•

•

No direct physiological influence on vegetation

Caution: these variables are local to the model domain, be careful when extrapolating over space

or time.

Climate variables (temperature, precipitation)

•

•

•

Directly related to physiological responses of vegetation

Surrogates for topographical variables

Caution: these variables are better for extrapolating over space and time, but recognize other

limitations, such as current location and dispersal range, and species competition may also

change through time..

Other surrogates (geology, soils, soil moisture availability, solar radiation)

•

•

Resources directly used by plants for growth

Caution: similar to climate variables

5

Predictive Model Types

Model Objective:

Find a relationship between vegetation data and predictor data for prediction purposes.

The model, in its simplest form, looks like the following:

y ~ f (x1 + x2 + x3 + …) + ε,

where y is a response variable, the x's are predictor variables, and ε is the associated error.

There are many different types of models

Parametric models – make assumptions about the underlying distribution of the data.

• Maximum likelihood classification

• Discriminant analysis

• General linear models (ex. linear regression)

• ...

Nonparametric models – make no assumptions about the underlying data distributions.

• Generalized additive models

• Machine-learning models

• Artificial neural networks

• ...

6

Predictive Model Types

Parametric Models

Parametric models – make assumptions about the underlying distribution of the data

Assumptions:

• The shape of the distribution of the underlying population is bell-shaped (normal).

• Errors are independent.

• Errors are normally distributed with mean of 0 and a constant variance.

Advantages:

• Easy to interpret

• High power with low sample sizes

Disadvantages:

• If sample data are not from a normally-distributed population, may lead to incorrect

conclusions.

Examples:

• Maximum likelihood classification

• Discriminant Analysis

• Linear Regression

• Multiple linear regression

• Generalized linear models (parametric/nonparametric)

7

Predictive Model Types

Parametric Models – Regression Example

Previous example: Using regression to fill in missing values.

## Import data and subset for sp19 only

path <- "C:/Peru/Rworkshop" # Where workshop material is

setwd(path)

tree <- read.csv("PlotData/tree.csv", stringsAsFactors=FALSE)

sp19 <- tree[( tree$SPCD==19 & !is.na(tree$DIA) ),]

# Start off with a scatter plot

par(mfrow=c(1,2))

plot(sp19$DIA, sp19$HT, xlab ="Diameter", ylab ="Height",

main = "Subalpine Fir")

abline(lm(sp19$HT ~ sp19$DIA))

# We saw some heteroscedasticity (unequal variance), and transformed data to log scale.

plot(log(sp19$DIA), log(sp19$HT), xlab ="Diameter", ylab ="Height",

main = "Subalpine Fir, log scale")

abline(lm(log(sp19$HT) ~ log(sp19$DIA)))

# We looked at summary of models and saw lower residual error and higher R2 values.

r.mod <- lm(HT~DIA,data=sp19)

summary(r.mod)

r.mod.log.ht.dia <- lm(log(HT)~log(DIA),data=sp19)

summary(r.mod.log.ht.dia)

8

Predictive Model Types

Parametric Models – Regression Example

Previous example cont..

# Then we looked at the residuals versus the fitted values and normal QQ-plots.

par(mfrow=c(2,2))

plot(r.mod$fitted,r.mod.log.ht.dia$residuals, xlab="Fitted",ylab="Residuals",

main ="Fitted versus Residuals")

abline(h=0)

qqnorm(r.mod$residuals, main="Normal Q-Q Plot")

qqline(r.mod$residuals)

plot(r.mod.log.ht.dia$fitted,r.mod.log.ht.dia$residuals,

xlab="Fitted",ylab="Residuals",

main ="Fitted versus Residuals, log scale")

abline(h=0)

qqnorm(r.mod.log.ht.dia$residuals, main="Normal Q-Q Plot, log scale")

qqline(r.mod.log.ht.dia$residuals)

# NOTE:

# Using transformations, we were able to work with data that was nonlinear, with unequal

# variance structure using a parametric model.

9

Predictive Model Types

Nonparametric Models

Nonparametric models – make no assumptions about the underlying distribution of

the data.

Note: Vegetation data typically are not normally distributed across the landscape,

therefore it is most often better to use a nonparametric model.

## Advantages:

# If sample data are not from a normally-distributed population, using a parametric may

lead to incorrect conclusions.

## Disadvantages:

# Need larger sample sizes to have the same power as parametric statistics

# Harder to interpret

# Can overfit data

## Examples:

# Generalized additive models

# Classification and regression trees (i.e. CART)

# Artificial neural networks (ANN)

# Multivariate adaptive regression splines (MARS)

# Ensemble modeling (i.e. Random Forests)

10

Random Forests

Random Forests (Breiman, 2001)

Generates a series of classification

and regression tree models..

.. sampling, with replacement, from

training data (bootstrap)

.. selecting predictor variables at

random for each node

.. outputting the class that most

frequently results

.. and calculating an out-of-bag error

estimate

.. and measuring variable importance

through permutation

randomForest – Liaw & Wiener

ModelMap – Freeman & Frescino

Modeling Example

12

Model Example

Objective:

Using Random Forests to find relationships between forest inventory data and six

spatial predictor layers. The models will be used to make predictions across a

continuous, pixel-based surface.

80 TM B3

9200’ Elev

95° Aspect

20% Slope

60 TM B3

8000’ Elev

160° Aspect

15% Slope

120 TM B3

10500’ Elev

10° Aspect

12 % Slope

Landsat TM

Elevation

Aspect

Slope

10 %

cover

35 %

cover

Extract data from each

layer at each sample plot

location.

Landsat TM

Elevation

Aspect

Slope

80 %

cover

Prediction

(% Tree crown

cover)

Generate spatially explicit maps of

forest attributes based on cell by cell

predictions.

Model Example

The model form:

y ~ f (x1 + x2 + x3 + x4 + x5 + x6) + ε,

where y is forest inventory data, and the x's are the predictor variables listed below,

including satellite spectral data and topographic variables.

## For this example,

# We will look at 2 responses:

# Presence of aspen

Binary response of 0 and 1 values (1=presence)

# Total carbon

Continuous response

# With 5 predictor variables:

# Landsat Thematic Mapper, band 5

# Landsat Thematic Mapper, NDVI

# Classified forest/nonforest map

# Elevation

# Slope

# Aspect

30-m spectral data, band 5

30-m spectral data, NDVI

250-m classified MODIS, resampled to 30 m

30-m DEM

30-m DEM –derived

30-m DEM - derived

14

Study Area

Utah, USA

15

Study Area

Model data set:

Uinta Mountains, Utah,USA

Highest East-West oriented

mountain range in the

contiguous U.S. - up to

13,528 ft (4,123 m)

Apply model to:

High Uinta Wilderness

## Vegetation

5 different life zones:

1. shrub-montane

2. aspen

3. lodgepole pine

4. spruce-fir

5. alpine

16

Modeling Dataset

17

Data for Modeling

# Load libraries

library(rgdal)

library(raster)

library(rpart)

library(car)

library(randomForest)

library(PresenceAbsence)

library(ModelMap)

#

#

#

#

#

#

#

GDAL operations for spatial data

Analyzing gridded spatial data

Recursive partitioning and regression trees

For book (An R Companion to Applied Regression)

Generates Random Forest models

Evaluates results of presence-absence models

Generates and applies Random Forest models

18

Response Data

# Forest Inventory data (Model response)

# We have compiled this data before, let's review.

options(scipen=6)

plt <- read.csv("PlotData/plt.csv", stringsAsFactors=FALSE)

tree <- read.csv("PlotData/tree.csv", stringsAsFactors=FALSE)

ref <- read.csv("PlotData/ref_SPCD.csv", stringsAsFactors=FALSE)

## The plt table contains plot-level data, where there is 1 record (row) for each plot. This

table has the coordinates (fuzzed) of each plot, which we will need later.

dim(plt)

head(plt)

## Total number of plots

## Display first six plot records.

## The tree table contains tree-level data, where there is 1 record (row) for each tree on a

plot. We need to summarize the tree data to plot-level for modeling.

dim(tree)

head(tree)

## Total number of trees

## Display first six tree records.

# First, let's add the species names to the table.

# Merge species names to table using a reference table of species codes

tree <- merge(tree, ref, by="SPCD")

head(tree)

19

Response Data

# Forest Inventory data (Model response)

## We have 2 responses:

# Presence of aspen

# Total carbon

Binary response of 0 and 1 values (1=presence)

Continuous response

## Let's compile presence of aspen and append to plot table.

# First, create a table of counts by species and plot.

spcnt <- table(tree[,c("PLT_CN", "SPNM")])

head(spcnt)

# For this exercise, we don't care about how many trees per species, we just want presence

# or absence, therefore, we need to change all values greater than 1 to 1.

spcnt[spcnt > 1] <- 1

# We are only interested in aspen presence, so let's join the aspen column to the plot table.

spcntdf <- data.frame(PLT_CN=row.names(spcnt), ASPEN=spcnt[,"aspen"])

plt2 <- merge(plt, spcntdf, by.x="CN", by.y="PLT_CN")

dim(plt)

dim(plt2)

# Notice there are fewer records (plots with no trees)

plt2 <- merge(plt, spcntdf, by.x="CN", by.y="PLT_CN", all.x=TRUE)

dim(plt2)

20

Response Data

## Forest Inventory data (Model response)

## Now, let's compile total carbon by plot and append to plot table.

# First, create a table of counts by plot.

pcarbon <- aggregate(tree$CARBON_AG, list(tree$PLT_CN), sum)

names(pcarbon) <- c("PLT_CN", "CARBON_AG")

# Carbon is stored in FIA database with units of pounds. Let's add a new variable,

CARBON_KG, with conversion to kg.

pcarbon$CARBON_KG <- round(pcarbon$CARBON_AG * 0.453592)

# Now we can join this column to the plot table (plt2).

plt2 <- merge(plt2, pcarbon, by.x="CN", by.y="PLT_CN", all.x=TRUE)

dim(plt2)

head(plt2)

# Change NA values to 0 values.

plt2[is.na(plt2[,"ASPEN"]), "ASPEN"] <- 0

plt2[is.na(plt2[,"CARBON_KG"]), "CARBON_KG"] <- 0

plt2$CARBON_AG <- NULL

head(plt2)

21

Response Data

# We need to extract data from spatial layers, so let's convert the plot table to a

SpatialPoints object in R.

## We know the projection information, so we can add it to the SpatialPoints object.

prj4str <- "+proj=longlat +ellps=GRS80 +datum=NAD83 +no_defs"

ptshp <- SpatialPointsDataFrame(plt[,c("LON","LAT")], plt,

proj4string = CRS(prj4str))

## Display the points

plot(ptshp)

22

Predictor Data

## Predictor variables:

# Landsat Thematic Mapper, band 5

# Landsat Thematic Mapper, NDVI

# Classified forest/nonforest map

# Elevation

# Slope

# Aspect

30-m spectral data, band 5, resampled to 90 m

30-m spectral data, NDVI, resampled to 90 m

250-m classified MODIS, resampled to 90 m

30-m DEM, resampled to 90 m

90-m DEM – derived in a following slides

90-m DEM – derived in a following slides

## Set file names

b5fn <- "SpatialData/uintaN_TMB5.img"

ndvifn <- "SpatialData/uintaN_TMndvi.img"

fnffn <- "SpatialData/uintaN_fnfrcl.img"

elevfn <- "SpatialData/uintaN_elevm.img"

#

#

#

#

Landsat TM–Band5

Landsat TM–NDVI

Forest type map (reclassed)

Elevation (meters)

Note: If you don't have uintaN_fnfrcl.img, follow steps on last slide of this presentation (Appendix 1)

## Check rasters

rastfnlst <- c(b5fn, ndvifn, fnffn, elevfn)

rastfnlst

sapply(rastfnlst, raster)

23

Predictor Data

# TM Band 5 (uintaN_TMB5.img)

# TM NDVI (uintaN_TMndvi.img)

24

Predictor Data

# Forest/Nonforest map (uintaN_fnf.img)

# DEM (uintaN_elevm.img)

25

Predictor Data

## Now, let's generate slope from DEM. Save it to your SpatialData folder.

help(terrain)

help(writeRaster)

slpfn <- "SpatialData/uintaN_slp.img"

slope <- terrain(raster(elevfn), opt=c('slope'), unit='degrees',

filename=slpfn, datatype='INT1U', overwrite=TRUE)

plot(slope, col=topo.colors(6))

# Add slope file name to rastfnlst

rastfnlst <- c(rastfnlst, slpfn)

rastfnlst

26

Predictor Data

## We can also generate aspect from DEM. Save it to your SpatialData folder.

help(terrain)

## This is an intermediate step, so we are not going to save it.

aspectr <- terrain(raster(elevfn), opt=c('aspect'), unit='radians')

aspectr

# Note: Make sure to use radians, not degrees

plot(aspectr, col=terrain.colors(6))

27

Predictor Data

## Aspect is a circular variable. There are a couple ways to deal with this:

## 1. Convert the values to a categorical variable (ex. North, South, West, East)

## We derived aspect in radians. First convert radians to degrees.

aspectd <- round(aspectr * 180/pi)

aspectd

## Now, create a look-up table of reclass values.

help(reclassify)

frommat <- matrix(c(0,45, 45,135, 135,225, 225,315, 315,361), 5, 2)

frommat

frommat <- matrix(c(0,45, 45,135, 135,225, 225,315, 315,361), 5, 2, byrow=TRUE)

frommat

tovect <- c(1, 2, 3, 4, 1)

rclmat <- cbind(frommat, tovect)

rclmat

## Reclassify raster to new values.

aspcl <- reclassify(x=aspectd, rclmat, include.lowest=TRUE)

aspcl

unique(aspcl)

bks <- c(0,sort(unique(aspcl)))

# Break points

cols <- c("dark green", "wheat", "yellow", "blue")

# Colors

labs <- c("North", "East", "South", "West")

# Labels

lab.pts <- bks[-1]-diff(bks)/2

# Label position

plot(aspcl, col=cols, axis.args=list(at=lab.pts, labels=labs), breaks=bks)

28

Predictor Data

## 2. Convert to a linear variable (ex. solar radiation index; Roberts and Cooper 1989)

aspval <- (1 + cos(aspectr+30))/2 ## Roberts and Cooper 1989

aspval

plot(aspval)

## Let's multiply by 100 and round so it will be an integer (less memory)

aspval <- round(aspval * 100)

aspval

plot(aspval)

# Save this layer to file

aspvalfn <- "SpatialData/uintaN_aspval.img"

writeRaster(aspval, filename=aspvalfn, datatype='INT1U', overwrite=TRUE)

# Add aspval to rastfnlst

rastfnlst <- c(rastfnlst, aspvalfn)

## Converts aspect into solar radiation equivalents, with a correction of 30 degrees to reflect

## the relative heat of the atmosphere at the time the peak radiation is received.

## Max value is 1.0, occurring at 30 degrees aspect; min value is 0, at 210 degrees aspect.

## Roberts, D.W., and S. V. Cooper. 1989. Concepts and techniques in vegetation mapping. In Land

classifications based on vegetation: applications for resource management. D. Ferguson, P. Morgan, and F.

D. Johnson, editors. USDA Forest Service General Technical Report INT-257, Ogden, Utah, USA.

29

Discussion - Scale of Predictors

## Tools in R for handling scale issues. See help on functions for further details.

# focal - applies a moving window function across pixels without changing the resolution.

# Note: It works but takes a lot of time on large rasters.

# aggregate - aggregates pixels to lower resolution.

# resample – resamples pixels to match extent or pixel size of another raster.

Predictor Data Extraction

## The next step is to extract the values of each raster at each sample point location.

80 TM B3

9200’ Elev

95° Aspect

20% Slope

60 TM B3

8000’ Elev

160° Aspect

15% Slope

120 TM B3

10500’ Elev

10° Aspect

12 % Slope

Landsat TM

Elevation

Aspect

Slope

10 %

cover

35 %

cover

Extract data from each

layer at each sample plot

location.

80 %

cover

Predictor Data Extraction

## We need to check the projections of the rasters. If the projections are different,

## reproject the points to the projection of the rasters, it is much faster.

## We will use the plt2 table with LON/LAT coordinates and the response data attached.

head(plt2)

## We know the LON/LAT coordinates have the following projection:

prj4str <- "+proj=longlat +ellps=GRS80 +datum=NAD83 +no_defs"

# Check projections of each raster..

sapply(rastfnlst, function(x){ projection(raster(x)) })

## Reproject SpatialPoints object to match raster projections.

help(project)

rast.prj <- projection(raster(rastfnlst[1]))

xy <- cbind(plt$LON, plt$LAT)

xyprj <- project(xy, proj=rast.prj)

32

Predictor Data Extraction

## Extract values (raster package)

help(extract)

# Let's extract values from 1 layer.

tmp <- extract(raster(elevfn), xyprj)

head(tmp)

# Now, let's create a function to extract, so we can extract from all the rasters at the same time.

extract.fun <- function(rast, xy){ extract(raster(rast), xy) }

# Now, apply this function to the vector list of raster file names.

rastext <- sapply(rastfnlst, extract.fun, xyprj)

# Look at the output and check the class.

head(rastext)

class(rastext)

33

Predictor Data Extraction

## Extract values (raster package) cont.. change names

# Let's make the column names shorter.

colnames(rastext)

# Use the rastfnlst vector of file names to get new column names.

# First, get the base name of each raster, without the extension.

cnames <- unlist(strsplit(basename(rastfnlst), ".img"))

cnames

# We could stop here, but let's make the names even shorter and remove

# 'uintaN_' from each name.

cnames2 <- substr(cnames, 8, nchar(cnames))

cnames2

# Now, add names to matrix. Because the output is a matrix, we will use colnames.

colnames(rastext) <- cnames2

head(rastext)

34

Predictor Data Extraction

# Now, let's append this matrix to the plot table with the response data (plt2).

head(plt2)

# We just want the response variables, so let's extract these columns along with the unique

identifier of the table (CN, ASPEN, CARBON_KG).

modeldat <- cbind(plt2[,c("CN", "ASPEN", "CARBON_KG")], rastext)

head(modeldat)

# Check if this is a data frame

is.data.frame(modeldat)

dim(modeldat)

# Let's also append the projected xy coordinates for overlaying with raster layers.

modeldat <- cbind(xyprj, modeldat)

head(modeldat)

colnames(modeldat)[1:2] <- c("X", "Y")

head(modeldat)

35

Data Exploration

36

Model Data Exploration

## What to look for:

# NA values

# Outliers

# Correlations

# Non-normal distributions

# Changes in variability

# Clustering

# Non-linear data structures

37

Model Data Exploration

Summary statistics

## Summary statistics

str(modeldat)

summary(modeldat)

head(modeldat)

dim(modeldat)

# We need to convert categorical variables to factors

modeldat$ASPEN <- factor(modeldat$ASPEN)

modeldat$fnfrcl <- factor(modeldat$fnfrcl)

## Now, display summary statistics again and notice changes for ASPEN and fnfrcl

str(modeldat)

summary(modeldat)

head(modeldat) # notice head does not show which variables are factors

38

Model Data Exploration

NA Values

## Check for NA values.

modeldat[!complete.cases(modeldat),]

modeldat.NA <- modeldat[!complete.cases(modeldat),]

dim(modeldat.NA)

modeldat.NA

# We can overlay plots with NA values on raster.

plot(raster(aspvalfn))

points(modeldat.NA, pch=20)

# Most R model functions will handle or remove NA values, but for this example, let's

remove the plots with NA values from our dataset now.

modeldat <- modeldat[complete.cases(modeldat),]

dim(modeldat)

39

Model Data Exploration

attach data frame

## Attaching a data frame. This is useful if you are exclusively working with 1 data frame.

## Caution: data frame variable names must be unique to data frame.

## Let's save modeldat object and clean up before we attach the data frame.

save(modeldat, file="Outfolder/modeldat.Rda")

# Now, remove all objects except modeldat.

ls()[ls() != "modeldat"]

rm(list = ls()[ls() != "modeldat"])

ls()

# .. and attach modeldat

attach(modeldat)

head(modeldat)

ASPEN

# Display column vector without using $

# Notes:

# To load the saved model object

# load(file="Outfolder/modeldat.Rda")

# Make sure to detach data frame when done using it..

# detach(modeldat)

40

Model Data Exploration

Predictors

## Check for outliers and correlations among predictors.

## Let's look at an example using 2 predictors (elevm, TMB5)

preds <- cbind(elevm, TMB5)

## Correlation between predictor variables, to determine strength of relationship

cor(preds)

round(cor(preds),4)

## Scatterplots

plot(preds)

## Add a regression line

abline(lm(TMB5 ~ elevm))

## Now let's add a smoother line for more information about data trend

lines(loess.smooth(elevm, TMB5), col="red")

## Another way

library(car)

scatterplot(TMB5 ~ elevm)

help(scatterplot)

41

Model Data Exploration

Predictors

## Correlation cont..

# We can now see a non-linear trend in the data where, TMB5 spectral values decrease as

elevations rise up to around 3000 meters, then begins to increase in value as elevations

continue to rise.

# This suggests a non-parametric relationship.

help(cor)

# The default uses pearson correlation coefficient, but spearman may be a better choice for

nonlinear data structures.

round(cor(preds, method="spearman"),4)

## Let's look at all predictors at once

names(modeldat)

preds <- modeldat[-c(1:5)]

head(preds)

## Correlation between predictor variables (to minimize number of predictors)

cor(preds)

str(preds)

## Note Error : 'x' must be numeric (remove factor variable from analysis)

round(cor(preds[,-3], method="spearman"),4)

42

Model Data Exploration

Predictors

## Scatterplots

pairs(preds)

help(pairs) #select Scatterplot Matrices from graphics package

## In help doc, scroll down to Examples and copy and paste the 2 functions:

# panel.hist

# panel.cor

pairs(preds, lower.panel = panel.smooth, upper.panel = panel.cor)

## Change cor function to spearman within panel.cor

panel.cor <- function(x, y, digits = 2, prefix = "", cex.cor, ...)

{ usr <- par("usr"); on.exit(par(usr))

par(usr = c(0, 1, 0, 1))

r <- abs(cor(x, y, method="spearman"))

txt <- format(c(r, 0.123456789), digits = digits)[1]

txt <- paste0(prefix, txt)

if(missing(cex.cor)) cex.cor <- 0.8/strwidth(txt)

text(0.5, 0.5, txt, cex = cex.cor * r)

}

pairs(preds, lower.panel = panel.smooth, upper.panel = panel.cor)

## Another way

scatterplotMatrix(preds[,-3])

43

Model Data Exploration

Predictors

## Check for outliers

plot(TMB5, TMndvi)

identify(TMB5, TMndvi)

# Click on outliers and press esc key to escape

## Display the outliers

modeldat[c(58,60),]

plot(raster("SpatialData/uintaN_TMB5.img"))

points(modeldat[c(58,60),], pch=20)

## Let's remove these outliers from our dataset

modeldat <- modeldat[-c(58,60),]

dim(modeldat)

length(TMB5)

## Detach and reattach data frame

detach(modeldat)

attach(modeldat)

dim(modeldat)

length(TMB5)

save(modeldat, file="Outfolder/modeldat.Rda") ## Resave modeldat object

# load(file="Outfolder/modeldat.Rda")

## Check scatterplot again

plot(TMB5, TMndvi)

## ..and for all predictors

preds <- modeldat[-c(1:5)]

pairs(preds, lower.panel = panel.smooth, upper.panel = panel.cor)

44

Model Data Exploration

Predictors

## We can also look at the distribution of each predictor across its range of values and its

diversion from normality using the cumulative density function we looked at earlier.

## This is only meaningful for continuous predictors

plot(sort(elevm))

lines(c(1, length(elevm)), range(elevm), col=2)

## Now, let's make a little function to look at the rest of the continuous predictors

pdistn <- function(x){

plot(sort(x))

lines(c(1, length(x)), range(x), col=2)

}

par(ask=TRUE)

# Press enter to go to next display

pdistn(elevm)

pdistn(slp)

pdistn(TMB5)

pdistn(TMndvi)

pdistn(aspval)

par(ask=FALSE)

## For categorical predictors, let's use table to look at the distribution of samples by value.

table(fnfrcl)

45

Model Data Exploration

Response

## Let's look at the distribution and amount of variability of the sample response data.

## Check for normality (bell-shaped curve)

## Histograms and density functions

par(mfrow=c(2,1))

hist(CARBON_KG)

hist(CARBON_KG, breaks=5)

## Overlay density function (smoothed) on the histogram with continuous response.

hist(CARBON_KG, breaks=10, prob=TRUE)

lines(density(CARBON_KG))

## Data with lots of 0 values tend to have this shape. This is not a normal distribution

## Therefore, using a nonparametric model, such as Random Forests is a good idea.

## Let's look at the distribution if there were no 0 values (plots without trees)

hist(CARBON_KG[CARBON_KG>0], breaks=10, prob=TRUE)

lines(density(CARBON_KG[CARBON_KG>0]))

## The shape of the distribution is similar, with much more higher values than lower values.

par(mfrow=c(1,1))

46

Model Data Exploration

Data distributions – Binomial response

## Let's look at the distribution of the sample response data with a couple predictors.

## We will start with presence/absence of aspen. We know this is a binomial distribution

with 0 and 1 values.

## Let's explore a little more.

## Plot elevation as a function of ASPEN, bar representing median value.

boxplot(elevm ~ ASPEN, main="Aspen Presence/Absence")

## Add names

boxplot(elevm ~ ASPEN, main="Aspen Presence/Absence", names=c("Absence",

"Presence"))

# Note: the bold line is median value

## Add points with mean values

means <- tapply(elevm, ASPEN, mean)

points(means, col="red", pch=18)

# Note: overall, presence of aspen tends to be at the lower elevations of the sample plots.

# The distributions are slightly skewed with the median elevm values higher than mean

elevm values.

47

Model Data Exploration

Data distributions – Binomial response

## Other ways to explore relationships between the response and predictors.

## We can look at the differences of presence vs absence with 2 predictors.

plot(elevm, ASPEN)

## We can look at the differences of presence vs absence with 2 predictors (elevation and slope).

par(mfrow=c(1,2))

plot(elevm[ASPEN==1], slp[ASPEN==1])

plot(elevm[ASPEN==0], slp[ASPEN==0])

## Now, let's make it more meaningful by adding labels and using the same scale for each.

xlab <- "Elevation"

ylab <- "Slope"

xlim <- c(2000, 4000)

ylim <- c(0,40)

plot(elevm[ASPEN==1], slp[ASPEN==1], xlab=xlab, ylab=ylab, xlim=xlim, ylim=ylim,

main="Aspen Present")

plot(elevm[ASPEN==0], slp[ASPEN==0], xlab=xlab, ylab=ylab, xlim=xlim, ylim=ylim,

main="No Aspen")

par(mfrow=c(1,1))

48

Model Data Exploration

Data distributions – Binomial response cont.

## Other ways to explore relationships between the response and predictors (1 plot)

## We can also color the points based on a factor (ASPEN)

plot(slp, elevm, col=ASPEN, pch=20, xlab="Slope", ylab="Elevation")

## Add a legend (using default colors)

legend(x=35, y=2200, legend=levels(ASPEN), col=1:length(ASPEN), pch=20)

help(legend)

## Now, do it again using your own color choice

palette(c("blue", "green"))

# Change color palette first

plot(slp, elevm, col=ASPEN, pch=20, xlab="Slope", ylab="Elevation")

legend(x=35, y=2200, legend=levels(ASPEN), col=1:length(ASPEN), pch=20)

palette("default")

# Change color palette back to default colors

## Or..

plot(slp, elevm, col=c("red", "blue"), pch=20, xlab="Slope", ylab="Elevation")

legend(x=35, y=2200, legend=levels(ASPEN), col=c("red", "blue"), pch=20)

## Another way:

scatterplot(slp ~ elevm|ASPEN, data=modeldat)

## For categorical predictors, use table function again

table(ASPEN, fnfrcl)

49

Model Data Exploration

Data distributions – Continuous response

## Other ways to explore relationships between the response and predictors.

## We can look at relationship between elevation and CARBON_KG

plot(elevm, CARBON_KG, xlab="Elevation", ylab="Carbon(kg)")

## Without 0 values

plot(elevm[CARBON_KG>0], CARBON_KG[CARBON_KG>0], xlab="Elevation",

ylab="Carbon (kg)")

## Add regression and smoother lines.

par(mfrow=c(2,1))

## With 0 values

plot(elevm, CARBON_KG, xlab="Elevation", ylab="Carbon(kg)")

line.lm <- lm(CARBON_KG ~ elevm)

abline(line.lm, col="red")

line.sm <- lowess(elevm, CARBON_KG)

lines(line.sm, col="blue")

## Without 0 values

plot(elevm[CARBON_KG>0], CARBON_KG[CARBON_KG>0],xlab="Elevation",ylab="Carbon(kg)")

line.lm.w0 <- lm(CARBON_KG[CARBON_KG>0] ~ elevm[CARBON_KG>0])

abline(line.lm.w0, col="red")

line.sm <- lowess(elevm[CARBON_KG>0], CARBON_KG[CARBON_KG>0])

lines(line.sm, col="blue")

par(mfrow=c(1,1))

50

Model Generation

51

Model Generation

y ~ f (x1 + x2 + x3 + x4 + x5 + x6) + ε

80 TM B3

9200’ Elev

95° Aspect

20% Slope

60 TM B3

8000’ Elev

160° Aspect

15% Slope

120 TM B3

10500’ Elev

10° Aspect

12 % Slope

Landsat TM

Elevation

Aspect

Slope

10 %

cover

35 %

cover

Extract data from each

layer at each sample plot

location.

80 %

cover

Tree-based Models

## Strengths of Tree-based models

# Easy to interpret

# Adaptable for handling missing values

# Handles correlated predictor variables

# Predictor variable interactions are automatically included

# Handles categorical or continuous predictor variables

## Weaknesses of Tree-based models, such as Random Forests

# Optimization is based on each split, not necessary the overall tree model

# Continuous predictors are treated as categorical, thus inefficient

# Nonparametric, thus loses some the power of parametric statistics

# Tendency to overfit data

53

Tree-based Models

## What does this mean, in simple form..

# Using our example dataset,

load("Outfolder/modeldat.Rda")

library(rpart)

## Classification tree

asp.tree <- rpart(ASPEN ~ TMB5 + TMndvi + fnfrcl + elevm + slp + aspval,

data=modeldat, method="class")

plot(asp.tree)

text(asp.tree, cex=0.75)

## Regression tree

carb.tree <- rpart(CARBON_KG ~ TMB5 + TMndvi + fnfrcl + elevm + slp + aspval,

data=modeldat)

plot(carb.tree)

text(carb.tree, cex=0.75)

54

Random Forests

Random Forests (Breiman, 2001)

Generates a series of classification and

regression tree models..

.. sampling, with replacement, from

training data (bootstrap)

.. selecting predictor variables at random

for each node

.. outputting the class that most

frequently results

.. and calculating an out-of-bag error

estimate

.. and measuring variable importance

through permutation

randomForest – Liaw & Wiener

ModelMap – Freeman & Frescino

Breiman, L. (2001). Random forests. Machine Learning J. 45 5- 32.

Liaw, A.; Wiener, M. 2002. Classification and Regression by randomForest. ISSN 2/3:18-22.

Freeman, E.A. et al. 2012. ModelMap: an R package for Model Creation and Map Production. CRAN R vignette.

Random Forests Model

## Strengths Random Forests (Breiman 2001)

# Bootstrap sample – A random selection of plots used to construct one tree.

# Boosting – Successive trees are constructed using information from previous trees, and a

weighted vote is used for prediction.

# Bagging – Each tree is independently constructed, where the majority (or average) vote is

used for prediction. Breiman's Random Forests uses bagging.

# Predictor selection - Each node is split using a randomly selected subset of predictors. In

standard trees, all variables are used. This difference is more robust against overfitting.

# Two main parameters

# 1. Number of trees (bootstrap samples) to generate (ntree)

# 2. Number of variables in the random subset of predictors at each node (mtry)

# Predictions – Aggregate predictions of n trees

# For categorical response (Classification trees) – majority votes

# For continuous response (Regression trees) – average of regression

# Error rate – based on training data ('out-of-bag', or OOB)

# For each tree (bootstrap sample)

# For continuous response (Regression trees) – average of regression

56

Random Forests Model

classification tree

## Now, let's use the randomForests package – Classification tree

library(randomForest)

help(randomForest)

## Let's try with ASPEN binary, categorical response (presence/absence)

set.seed(66)

asp.mod <- randomForest(ASPEN ~ TMB5 + TMndvi + fnfrcl + elevm + slp + aspval,

data=modeldat, importance = TRUE)

## Default parameters:

# ntree = 500

# mtry = sqrt(p)

# nodesize = 1

# replace = TRUE

# Number of trees

# Number of predictors (p) randomly sampled at each node

# Minimum size of terminal nodes

# Bootstrap samples are selected with replacement

## Look at results

asp.mod

summary(asp.mod)

names(asp.mod)

57

Random Forests Model

Classification tree

## Classification tree - Output

names(asp.mod)

err <- asp.mod$err.rate

head(err)

tail(err)

mat <- asp.mod$confusion

mat

# Out-Of-Bag (OOB) error rate (of i-th element)

# Confusion matrix

58

Random Forests Model

Classification tree

## Classification tree - Output

# Plot the number of trees by the error rate

plot(1:500, err[,"OOB"], xlab="Number of trees", ylab="Error rate")

# Note: how many trees needed to stabilize prediction

## Calculate the percent correctly classified from confusion (error) matrix

mat

pcc <- sum(diag(mat[,1:2]))/sum(mat) * 100

pcc

pcc <- round(pcc, 2)

## Round to nearest 2 decimals

pcc

library(PresenceAbsence)

pcc(mat[,1:2], st.dev=TRUE)

Kappa(mat[,1:2], st.dev=TRUE)

## The Kappa statistic summarizes all the available information in the confusion matrix.

## Kappa measures the proportion of correctly classified units after accounting for the probability of

chance agreement.

59

Random Forests Model

## Now, let's use the randomForests package – regression tree

## Now, let's try with the continuous, CARBON_KG response

set.seed(66)

carb.mod <- randomForest(CARBON_KG ~ TMB5 + TMndvi + elevm + slp + aspval,

data=modeldat, importance = TRUE)

## Default parameters:

# ntree = 500

# mtry = p/3

# nodesize = 5

# replace = TRUE

# Number of trees

# Number of predictors (p) randomly sampled at each node

# Minimum size of terminal nodes

# Bootstrap samples are selected with replacement

## Look at results

carb.mod

summary(carb.mod)

names(carb.mod)

60

Random Forests Model

Regression tree

## Regression tree - Output

names(carb.mod)

mse <- carb.mod$mse

rsq <- carb.mod$rsq

# Mean square error (of i-th element)

# Pseudo R-squared (1-mse/Var(y))(of i-th element)

head(mse)

tail(mse)

tail(rsq)

61

Random Forests Model

Regression tree

## Regression tree - Output

# Plot the number of trees by the mse (Mean Square Error)

plot(1:500, mse, xlab="Number of trees", ylab="Mean Square Error rate")

# Note: how many trees needed to stabilize prediction

# Similarly, plot the number of trees by the rsq (R-Squared)

plot(1:500, mse, xlab="Number of trees", ylab="R-Squared")

# Again: how many trees needed to stabilize prediction

62

Random Forests Model

Variable Importance

## Other information from RandomForest model (importance=TRUE)

# Variable importance (Breiman 2002)

# Estimated by how much the prediction error increases when the OOB data for that variable is

permuted while all others are left unchanged.

# randomForests computes different measures of variable importance

# 1. Computed from OOB data, averaged over all trees and normalized by the standard

deviation of the differences.

Classification trees – error rate (Mean Decrease Accuracy)

Regression trees – Mean Square Error (%IncMSE)

# 2. The total decrease in node impurities from splitting on the variable, averaged over all trees.

Classification trees – measured by Gini index (Mean Decrease Gini)

Regression trees – measured by residual sum of squares (IncNodePurity)

63

Random Forests Model

Variable Importance - Classification

## Variable importance – Classification tree

## Get importance table

asp.imp <- abs(asp.mod$importance)

asp.imp

## Get the number of measures (columns) and number of predictors

ncols <- ncol(asp.imp)

numpred <- nrow(asp.imp)

## Number of measures

## Get number of predictors

## Plot the measures of variable importance for ASPEN presence/absence

par(mfrow=c(2,2))

for(i in 1:ncols){

## Loop thru the different importance measures

ivect <- sort(asp.imp[,i], dec=TRUE)

## Get 1st measure, descending order

iname <- colnames(asp.imp)[i]

## Get name of measure

# Generate histogram plot (type='h') with no x axis (xaxt='n')

plot(ivect, type = "h", main = paste("Measure", iname), xaxt="n",

xlab = "Predictors", ylab = "", ylim=c(0,max(ivect)))

# Add x axis with associated labels

axis(1, at=1:numpred, lab=names(ivect))

}

64

Random Forests Model

Variable Importance - Regression

## Let’s make a function and plot importance values for CARBON_KG model.

plotimp <- function(itab){

ncols <- ncol(itab)

numpred <- nrow(itab)

## Number of measures

## Get number of predictors

## Plot the measures of variable importance

par(mfrow = c(ncols/2,2))

for(i in 1:ncols){ ## Loop thru the different importance measures

ivect <- sort(itab[,i], dec=TRUE) ## Get 1st measure, sorted decreasing

iname <- colnames(itab)[i]

## Get name of measure

# Generate histogram plot (type='h') with no x axis (xaxt='n')

plot(ivect, type = "h", main = paste("Measure", iname), xaxt="n",

xlab = "Predictors", ylab = "", ylim=c(0,max(ivect)))

# Add x axis with associated labels

axis(1, at=1:numpred, lab=names(ivect)) }

}

## Check function with ASPEN model

plotimp(asp.imp)

## Now, run funtion with CARBON_KG model

plotimp(carb.mod$importance)

65

Random Forests Model

Proximity

## Other information from RandomForest model (proximity=TRUE)

# Measure of internal structure (Proximity measure)

# - The fraction of trees in which each plot falls in the same terminal node.

# - Similarity measure - in theory, similar data points will end up in the same terminal node.

## Let's try adding proximity to CARBON_KG model

set.seed(66)

carb.mod <- randomForest(CARBON_KG ~ TMB5 + TMndvi + elevm + slp + aspval,

data=modeldat, importance = TRUE, proximity = TRUE)

names(carb.mod)

carb.prox <- carb.mod$proximity

head(carb.prox)

66

Model Prediction

67

Study Area

Model data set:

Uinta Mountains, Utah,USA

Highest East-West oriented

mountain range in the

contiguous U.S. - up to

13,528 ft (4,123 m)

Apply model to:

High Uinta Wilderness

## Vegetation

5 different life zones:

1. shrub-montane

2. aspen

3. lodgepole pine

4. spruce-fir

5. alpine

68

Polygon Data

## Let's import and display the 2 polygon layers as well.

## Set dsn and polygon layer names

dsn <- "SpatialData"

aoinm <- "uintaN_aoi"

wildnm <- "uintaN_wild"

# AOI boundary

# Wilderness boundary (mapping AOI)

## Import polygon shapefiles

bndpoly <- readOGR(dsn=dsn, layer=aoinm, stringsAsFactors=FALSE)

wildpoly <- readOGR(dsn=dsn, layer=wildnm, stringsAsFactors=FALSE)

## Check projections of all 3 layers to see if we can display them together.

sapply(c(bndpoly, wildpoly), projection)

## Now we can display all 3 layers

par(mfrow=c(1,1))

plot(bndpoly, border="black", lwd=3)

plot(wildpoly, add=TRUE, border="red",

lwd=2)

69

Predictor Data

## Now, we need to clip the raster predictor layers to the extent of the wilderness

polygon

## Set file names

b5fn <- "SpatialData/uintaN_TMB5.img"

ndvifn <- "SpatialData/uintaN_TMndvi.img"

fnffn <- "SpatialData/uintaN_fnfrcl.img"

elevfn <- "SpatialData/uintaN_elevm.img"

slpfn <- "SpatialData/uintaN_slp.img"

(degrees)

aspfn <- "SpatialData/uintaN_aspval.img"

#

#

#

#

#

Landsat TM–Band5

Landsat TM–NDVI

Forest type map

Elevation (meters)

Derived slope

# Derived aspect value

## Check rasters

rastfnlst <- c(b5fn, ndvifn, fnffn, elevfn, slpfn, aspfn)

sapply(rastfnlst, raster)

## Compare projections of rasters with projection of wilderness polygon

projection(wildpoly)

70

Predictor Data

Clip layers

## Clip raster layers

## Let's clip the elevm raster using crop function

help(crop)

elevclip <- crop(raster(elevfn), extent(wildpoly))

## Now display the new raster.

plot(elevclip)

## Note: Notice it didn't clip to boundary, it clipped to extent of boundary

## Add polygon layer to display

plot(wildpoly, add=TRUE)

## We need to crop further by applying a mask with the polygon layer.

elevclip <- mask(elevclip, wildpoly)

plot(elevclip)

71

Predictor Data

Clip layers

## Create a function to clip the all the layers, saving to the working directory. Use

rastfnlst for raster names and build new names from the list. Return the new name

of the raster.

cliprast <- function(rastfn, poly){

## Create new name from rastfn

rastname <- strsplit(basename(rastfn), ".img")[[1]]

newname <- substr(rastname, 8, nchar(rastname))

newname <- paste("Outfolder/",newname, "_clip.img", sep="")

## Crop raster

rastclip <- crop(raster(rastfn), extent(poly))

## Mask raster and save to working directory with newname

rastclip <- mask(rastclip, poly, filename=newname, overwrite=TRUE)

print(paste("finished clipping",rastname))

flush.console()

return(newname)

}

clipfnlst <- {}

for(rastfn in rastfnlst){

clipfn <- cliprast(rastfn, wildpoly)

clipfnlst <- c(clipfnlst, clipfn)

}

72

Predictor Data

Create Raster Stack

## Check clipped rasters.

sapply(clipfnlst, raster)

## For prediction, the predictor layers must be consistent. Check the following:

# dimensions – all layers must have the same number of rows and columns

# resolution – all layers must have the same resolution

# extent – all layers must have the same extent

# projection – all layers must have same projection

## Create a stack of all the predictor layers

clipstack <- stack(clipfnlst)

clipstack

## Add names to stack layers

stacknames <- unlist(strsplit(basename(clipfnlst), "_clip.img"))

names(clipstack) <- stacknames

clipstack

73

Apply Model

y ~ f (x1 + x2 + x3 + x4 + x5 + x6) + ε

80 TM B3

9200’ Elev

95° Aspect

20% Slope

60 TM B3

8000’ Elev

160° Aspect

15% Slope

10 %

cover

120 TM B3

10500’ Elev

10° Aspect

12 % Slope

Landsat TM

Landsat TM

Elevation

Elevation

Aspect

Aspect

Slope

Slope

80 %

cover

35 %

cover

Extract data from each

layer at each sample plot

location.

Prediction

(% Tree crown

cover)

Generate spatially explicit maps of

forest attributes based on cell by cell

predictions.

Apply Model & Display Map

ASPEN

## Predict across stack pixels.

asp.predict <- predict(clipstack, asp.mod)

asp.predict

plot(asp.predict)

# Plot with color breaks

cols <- c("dark grey", "green")

plot(asp.predict, col=cols, breaks=c(0,0.5,1))

colors()

# Or, a little fancier.. create function to color categorical raster classes

colclasses <- function(rast, cols, labs){

nc <- length(cols)

minval <- cellStats(rast, 'min')

maxval <- cellStats(rast, 'max')

bks <- seq(minval,maxval,length.out=nc+1)

#

#

#

#

Number of classes

minimum value of raster

maximum value of raster

break points

lab.pts <- bks[-1]-diff(bks)/2

# label points

plot(rast, col=cols, axis.args=list(at=lab.pts, labels=labs),

breaks=bks)

}

colclasses(asp.predict, cols, labs=c("Absence", "Presence"))

par()

help(par)

par(omi=c(0,0,0,0.5))

# Changes outside margins

colclasses(asp.predict, cols, labs=c("Absence", "Presence"))

75

Apply Model & Display Map

CARBON_KG

## Predict across stack pixels.

carb.predict <- predict(clipstack, carb.mod)

## Plot with heat color ramp

plot(carb.predict, heat.colors(10))

## Plot with grey scale

plot(carb.predict, col=grey(256:0/256))

## Plot with green scale

my.colors <- colorRampPalette(c("white", "dark green"))

plot(carb.predict, col=my.colors(10))

76

ModelMap

Build Model

library(ModelMap)

help(package=ModelMap)

predList <- stacknames

#predList <- c("elevm", "TMndvi")

## Build random forest model

asp.mod2 <- model.build(

model.type = "RF",

qdata.trainfn = modeldat,

folder = "Outfolder",

predList = predList,

predFactor = "fnfrcl",

response.name = "ASPEN",

response.type = "categorical",

unique.rowname = "CN",

seed = 66)

asp.mod2

ModelMap

Model diagnostics

asp.mod2d <- model.diagnostics(

model.obj = asp.mod2,

qdata.trainfn = modeldat,

folder = "Outfolder",

response.name = "ASPEN",

prediction.type = "OOB",

unique.rowname = "CN")

ModelMap

Make Map

# Add full path to beginning of each name in predList

predPath <- sapply(predList,

function(x){paste("SpatialData/uintaN_", x, ".img", sep="")})

predPath

# Generate rastLUTfn. See help(model.mapmake) for details.

numpreds <- length(predList)

rastLUTfn <- data.frame(matrix(c(predPath, predList, rep(1,numpreds)),

numpreds, 3), stringsAsFactors=FALSE)

rastLUTfn

## Check rasters

sapply(rastfnlst, raster)

## Make Map

a <- model.mapmake(

model.obj = asp.mod2,

folder = "Outfolder",

rastLUTfn = rastLUTfn,

make.img = TRUE,

na.action = "na.omit")

Exercise

## 1. Create a map of presence of lodgepole within the Uinta Wilderness area, using a Random

Forests model and the predictors from modeldat. Hint: Load the plt and tree table again and use

code from slide #20 to create a binary response variable for lodgepole presence.

Also, set a seed to 66 so you can recreate the model.

## 2. How many plots in model data set have presence of lodgepole pine?

## 3. Display 1 scatterplot of the relationship of elevation and NDVI values with lodgepole pine

presence and absence as different colors. Hint: see slide #49. Make sure to label plots and add

a legend.

## 4. What is the variable with the highest importance value based on the Gini index?

## 5. What percentage of the total area is lodgepole pine? How does this compare with the

percentage of aspen?

Appendix 1

## Reclass raster layer to 2 categories

fnf <- raster("SpatialData/uintaN_fnf.img")

## Create raster look-up table

fromvect <- c(0,1,2,3)

tovect <- c(2,1,2,2)

rclmat <- matrix(c(fromvect, tovect), 4, 2)

## Generate raster and save to SpatialData folder

fnfrcl <- reclassify(x=fnf, rclmat, datatype='INT2U',

filename="SpatialData/uintaN_fnfrcl.img", overwrite=TRUE)