Defining Quantiles for Functional Data


The University of Melbourne

Department of Mathematics and Statistics

Defining Quantiles for Functional Data

with an Application to the Reversal of Stock Price Decreases

Simon Walter

Supervisor: Peter Hall

Honours Thesis

October 28, 2011

Preface

This thesis introduces and motivates a surrogate to quantiles for functional data defined on a

closed interval of the real line. The central results are Theorems 3.6 and 3.9, which show that the

quantiles introduced characterise the distribution of a functional random variable under relatively

weak conditions. Three applications of these quantiles are also suggested: describing functional

datasets, detecting outliers, and constructing functional qq-plots.

In univariate data analysis, statistics based on quantiles are versatile and popular: the median

measures central tendency; inter-quartile range measures dispersion; quantile-based statistics

can measure skewness and kurtosis; and qq-plots are used to assess the fidelity of a sample to a

specified distribution or the equality of the distribution of two samples. It is therefore natural

to try to extend the definition of quantiles to multi-dimensional data. Quantiles are defined by

ordering values of a random variable; and since there is no natural order for R^n when n ≥ 2, there

is no trivially obvious extension. Nonetheless, substantial progress has been made: the definition

of multivariate quantiles given by Chaudhuri (1996) is perhaps the most successful, but several

approaches are reviewed in Serfling (2002). Easton & McCulloch (1990) and Liang & Ng (2009)

show that plots with characteristics similar to those of the univariate qq-plot can be constructed for

multivariate distributions. More recently, Fraiman & Pateiro-López (2011) have suggested a

definition of quantiles tailored for use in functional data analysis. Fraiman & Pateiro-López

(2011) suggest a similar definition to the multivariate quantiles described in Kong & Mizera

(2008).

This thesis takes a different approach; we exploit the fact that functional data are defined on

a continuum and appeal directly to the definition of univariate quantiles. There is no analogue

to this approach for finite-dimensional multivariate distributions. We will see that functional

quantiles defined in this way share many (but not all) of the characteristics of univariate quantiles.

These quantiles are also substantially easier to compute and analyse than the quantiles proposed

by Fraiman & Pateiro-López (2011) and Chaudhuri (1996).

The first part of this thesis is a literature review; it does not contain any original material.

Chapter 1 is a selective introduction to functional data analysis; Chapter 2 describes existing

methods for extending quantiles to multivariate distributions. The original contribution of this

thesis is contained entirely in the second part: Chapter 3 describes a generalisation of quantiles for

functional data; proves theoretical characteristics of the quantiles defined and suggests methods

to estimate them; Chapter 4 describes applications of the quantiles defined; and Chapter 5

highlights deficiencies in the quantiles developed and suggests further avenues of fruitful enquiry.

The functional quantiles introduced are demonstrated by analysing the historical stock prices

of NASDAQ listed companies to determine whether large single-day declines are disproportionately followed by a partial reversal in subsequent trading. The dataset used is described in

Section 1.5.

The following conventions will be followed: ‘⊂’ will be used in its wide interpretation, that

is A ⊂ B includes A = B; a superscript ‘c’ will be used to denote complementation, so A^c = Ω\A; and ‘| · |’

will be used to denote the cardinality of a set. ‘⊥⊥’ will be used to denote independence, so

if X and Y are random variables then X ⊥⊥ Y means X and Y are independent; ‘∼’ will be used to

denote equality of distribution, so X ∼ Y iff X and Y have the same distribution; similarly, if

X ≁ Y then X and Y do not have the same distribution; 1{condition} will be used to denote the

indicator function so 1{condition} = 1 if the condition is true and 0 if it is not; I will be used

to denote an arbitrary closed interval of the real line [a, b] where a, b ∈ R and a < b; and ∂ will

be used to denote the partial derivative of a function with respect to t. Important definitions,

theorems and lemmas will be framed. All other symbols used have standardised meanings.

I would like to acknowledge my supervisor, Peter Hall, for his generosity with both his time

and ideas. All inaccuracies and any infelicities of language are, however, mine and mine alone.

This work was supported by the Maurice H. Belz Scholarship.

Contents

I Literature Review

1 Functional data analysis
1.1 Introduction
1.2 Formal definitions
1.2.1 Preliminary definitions
1.2.2 Defining functional random variables
1.2.3 Defining functional datasets
1.3 Constructing functional datasets
1.3.1 Assumptions
1.3.2 Basis smoothing
1.3.3 Kernel smoothing
1.4 Registration
1.5 A sample dataset

2 Multivariate quantiles
2.1 The failure of the ordinary approach
2.2 Assessing the quality of multivariate quantiles
2.3 Methods for constructing multivariate quantiles
2.3.1 Norm minimisation
2.3.2 Functional quantiles
2.4 Room for improvement?

II Quantiles for Functional Data

3 Defining functional quantiles
3.1 Functional quantiles
3.1.1 Defining functional quantiles
3.1.2 Properties of functional quantiles
3.1.3 Characterising a functional random variable
3.2 Empirical functional quantiles
3.2.1 Defining empirical quantiles
3.2.2 Properties of empirical quantiles
3.2.3 Missing data

4 Applications
4.1 Describing functional datasets
4.1.1 Location
4.1.2 Dispersion
4.1.3 Skewness
4.1.4 Alternatives
4.2 Detecting outliers
4.2.1 The classification process
4.2.2 An example
4.2.3 Alternatives
4.3 QQ-plots
4.3.1 Univariate qq-plots
4.3.2 Functional qq-plots
4.3.3 Alternatives

5 Epilogue
5.1 Further refinements
5.1.1 Characterising a functional random variable
5.1.2 More robust measures of dispersion and skewness
5.1.3 Better identification of outliers
5.1.4 Problems with functional qq-plots
5.2 Future research directions
5.2.1 Using quantiles to construct formal tests
5.2.2 A goodness-of-fit test based on functional qq-plots

Bibliography

Part I

Literature Review

Chapter 1

Functional data analysis

This chapter reviews the areas of functional data analysis required to apply the original methods

developed in this thesis. In Section 1.2 we examine the definitions at the core of functional data

analysis; in Section 1.3 methods for constructing functional datasets from a finite number of

points are described; and in Section 1.4 popular methods for registering or aligning functional

data to remove uninformative phase variation are illustrated.

There is a large and rapidly expanding literature on functional data analysis. A complete

introduction is available in Ramsay & Silverman (1997, 2005) and Ferraty & Vieu (2006); a

description of the state of the art is available in Ferraty (2010, 2011) and Ferraty & Romain

(2011).

1.1 Introduction

Functional data analysis is the analysis of data that may be modelled as being generated by a

random function varying over a continuum. The most common objects of study are continuous

time series, but general random curves, random surfaces and higher dimensional analogues are

also studied. The quantiles developed in this thesis are only immediately applicable to random

variables defined on a set of injective functions all with domain I where I is a closed interval of

R; so this introduction will focus primarily on the statistical analysis of such random variables.

To provide a feel for some typical analyses, Figure 1.1 plots examples of four canonical

datasets from Ramsay & Silverman (2005): the top right chart shows selected observations from

data collected by Jones & Bayley (1941) in the Berkeley Growth Study. This study measured the

heights of 61 children born in 1928 or 1929 at regular intervals from birth to the age of 18. Top

left shows annual temperature variation for a selection of Canadian weather stations. Bottom

left shows the US non-durable goods index and bottom right shows twenty recordings of the

force exerted between the thumb and index finger of a subject during a brief impulse where the

subject aimed to achieve a 10N force. The aspect of these datasets that makes them amenable to

functional data analysis is that it is reasonable to suggest that, although measurements are only

obtained at discrete intervals, measurements could, in theory, be obtained at any time during the

period of analysis. It is therefore natural to analyse the data as if it were defined in continuous

time.

Functional data analysis is assuming increasing importance in statistics because of the growing

use of technology to collect very high-dimensional datasets that are often best analysed by

assuming the data vary over some continuum. Typical applications include: the detection of

forged signatures in Geenens (2011), the analysis of crime statistics in Ramsay & Silverman

(2002), and the interpretation of economic and financial time series in Cai (2011). Note, however,

that functional data analysis is not a viable option for every very high dimensional dataset; for

example, DNA microarray data must usually be treated as discrete data, and so should not be

modelled by a random function varying over a continuum.

1.2 Formal definitions

The informal definition given in the introduction can be recast to say that functional data analysis

is the analysis of data generated by a functional random variable. Defining functional random

variables concretely requires some preliminary definitions.

1.2.1 Preliminary definitions

Definition 1.1 (Metric spaces) The pair (M, d) is a metric space iff M is a set and d is a

function mapping M × M → R such that for each x, y, z ∈ M :

1. d(x, y) ≥ 0 (non-negativity)

2. d(x, y) = 0 iff y = x (identity of indiscernibles)

3. d(x, y) = d(y, x) (symmetry); and

4. d(x, z) ≤ d(x, y) + d(y, z) (triangle inequality)

The abbreviated notation M will be used to denote the metric space (M, d).

Metric spaces are too general for the methods developed later so we identify certain metric spaces

with useful characteristics.

Definition 1.2 (Compactness and connectedness)

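1. A metric space M is compact iff for each collection of open sets $\{U_\alpha\}_{\alpha \in A}$ satisfying $U_\alpha \subset M$ for every $\alpha \in A$ and $M = \bigcup_{\alpha \in A} U_\alpha$ there is some finite set $B \subset A$ such that $M = \bigcup_{\alpha \in B} U_\alpha$.

2. A metric space M is connected iff there are no non-empty open sets $U_1$ and $U_2$ such that $U_1 \cap U_2 = \emptyset$ and $U_1 \cup U_2 = M$.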

[Figure 1.1 has four panels: Berkeley Growth Data (height in cm against age); Canadian Weather Station Data (temperature in °C against month, for Pr. Rupert, Montreal, Edmonton and Resolute); The US Nondurable Goods Index (index against time, 1920 to 2000); and Pinch Force Data (force in N against seconds).]

Figure 1.1: Examples of functional datasets frequently used to describe new and existing techniques; the data and the constructed plots are drawn from Ramsay & Silverman (2005). The plots were reconstructed by Ramsay et al. (2010).

The domain of each function in the set on which a functional random variable is defined is a

continuum:

Definition 1.3 (Continuums)

1. A continuum is a compact connected metric space.

2. A continuum M is non-degenerate iff |M | > 1.

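The standard examples of continuums are closed bounded intervals of R and their finite products (R and R^n themselves are connected but not compact). Most functional data analysis

involves data defined on I_1 or I_1 × . . . × I_n where each I_i, i ∈ {1, . . . , n}, is a closed interval of R.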

One important characteristic of functional data is that they are uncountably infinite-dimensional;

it suffices to show any non-degenerate continuum is uncountably infinite to establish this characteristic.

Theorem 1.1 If M is a non-degenerate continuum then M is uncountable.

Proof Since |M| > 1 by Definition 1.3, there must exist two points x, y ∈ M such that x ≠ y. Now define the function f : M → R such that f(z) = d(z, x) and define r = d(x, y). Since x ≠ y, Definition 1.1(2) implies r > 0. Since (0, r) is uncountable it is sufficient to show that every value in (0, r) is attained by f. Pick r_1 ∈ (0, r). Suppose, to get a contradiction, that there is no point p ∈ M such that f(p) = r_1. Then there exists a partition of M into the sets {m ∈ M : f(m) > r_1} and {m ∈ M : f(m) < r_1}. Both sets are open because f is continuous, and both are non-empty because f(y) = r > r_1 and f(x) = 0 < r_1. This contradicts Definition 1.2(2), so every r_1 ∈ (0, r) is attained by f and we conclude that M is uncountable.

Some further preliminary definitions are required to define random variables precisely.

Definition 1.4 (σ-algebras) Let Ω be a set and let F be a subset of $2^\Omega$. F is a σ-algebra on Ω iff three conditions are satisfied: (i) ∅ ∈ F; (ii) if the set A ∈ F then $A^c \in F$; and (iii) if the sets $A_1, A_2, \ldots \in F$ then $\bigcup_{i=1}^{\infty} A_i \in F$.

Definition 1.5 (Probability spaces) (Ω, F, P) is a probability space iff

1. The sample space, Ω, is an arbitrary set;

2. F is a σ-algebra on Ω.

3. The probability measure, P is a function mapping F → [0, 1] satisfying:

(i) if A ∈ F then P(A) ≥ 0 (non-negativity);

(ii) P(Ω) = 1 (unitarity); and

(iii) if $\{A_1, A_2, \ldots\}$ is a disjoint collection of elements of F then $P\left(\bigcup_{i=1}^{\infty} A_i\right) = \sum_{i=1}^{\infty} P(A_i)$ (countable additivity).

Definition 1.6 (Random variables) Let (Ω, F, P) be a probability space, let E be a set and let $\mathcal{E}$ be a σ-algebra on E. A random variable is a function $X : (\Omega, F) \to (E, \mathcal{E})$ such that if $B \in \mathcal{E}$ then the pre-image of B, $X^{-1}(B) = \{\omega \in \Omega : X(\omega) \in B\}$, belongs to F.

The abbreviated notation X : Ω → E will frequently be used to denote $X : (\Omega, F) \to (E, \mathcal{E})$.

Figure 1.2: Examples of realisations of functional random variables. The chart on the left plots

three realisations of a standard Wiener process, the chart on the right plots three realisations of

a standard Poisson process.

1.2.2 Defining functional random variables

Now we have sufficient background to formulate a precise definition of functional random variables:

Definition 1.7 (Functional random variables) A random variable X : Ω → E is a

functional random variable iff every χ ∈ E is a function χ : M → F for some non-degenerate

continuum M.

X is a real functional random variable iff every χ ∈ E is a function mapping I → R

where I = [a, b] for a, b ∈ R and a < b.

In practice, many problems in functional data analysis choose E ⊂ C^n, where C^n is the set

of all n-times continuously differentiable functions with domain I. Examples of realisations of functional

random variables are shown in Figure 1.2.
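The two processes named in Figure 1.2 can be simulated on a grid to get a feel for what a realisation of a functional random variable looks like. The following is a minimal sketch, assuming NumPy is available; the function names, the grid sizes and the time horizons are illustrative choices and are not taken from the thesis.

```python
import numpy as np

def simulate_wiener(n_steps, t_max=1.0, rng=None):
    """Approximate a standard Wiener process on [0, t_max] on a regular grid."""
    rng = np.random.default_rng() if rng is None else rng
    dt = t_max / n_steps
    # Independent N(0, dt) increments, cumulated, starting from W(0) = 0.
    increments = rng.normal(0.0, np.sqrt(dt), size=n_steps)
    t = np.linspace(0.0, t_max, n_steps + 1)
    w = np.concatenate(([0.0], np.cumsum(increments)))
    return t, w

def simulate_poisson_jumps(t_max, rate=1.0, rng=None):
    """Sorted jump times of a Poisson process with the given rate on [0, t_max]."""
    rng = np.random.default_rng() if rng is None else rng
    n_jumps = rng.poisson(rate * t_max)
    # Conditional on their number, the jump times are i.i.d. uniform on [0, t_max].
    return np.sort(rng.uniform(0.0, t_max, size=n_jumps))

def poisson_path(jump_times, t):
    """Value of the counting process at t: the number of jumps at or before t."""
    return np.searchsorted(jump_times, t, side="right")

rng = np.random.default_rng(0)            # fixed seed, for reproducibility only
t, w = simulate_wiener(1000, t_max=1.0, rng=rng)
jumps = simulate_poisson_jumps(7.0, rate=1.0, rng=rng)
counts = poisson_path(jumps, np.linspace(0.0, 7.0, 8))
```

The Wiener path is only an approximation on a finite grid, whereas the Poisson path is exact, since it is fully determined by its finitely many jump times.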

1.2.3 Defining functional datasets

A dataset generated by a functional random variable is a functional dataset; this is defined as a

straightforward extension of the univariate and multivariate dataset.

Definition 1.8 (Functional dataset) A set {X1 , X2 , . . . , Xn } is a functional dataset iff

for each i, j ∈ {1, . . . , n}, Xi ∼ X , for some functional random variable X ; and, Xi ⊥⊥ Xj if

i ≠ j.

An element of a functional dataset is called a functional datum. {χ1 , χ2 , . . . , χn } is

used to denote the non-random observation of a functional dataset.

So functional data analysis consists of making inferences and predictions about the functional

random variable X from the functional dataset {X1 , X2 , . . . , Xn }. The natural question that

arises at this point is how do we observe an uncountably infinite dimensional functional random

variable? The answer is that we do not; instead, we construct a functional datum from a finite

sample.

1.3 Constructing functional datasets

This thesis illustrates the construction of functional datasets only for the case of real functional

random variables; that is, functional random variables defined on a set of functions all with

domain I. The problem of constructing a functional dataset is usually solved using a two step

procedure. First, take a dense but finite sample of a single observation of a functional random

variable (in the context of the Berkeley Growth Study, this means we should identify a single

child and measure their height at frequent intervals). Second, using the dense sample reconstruct

the behaviour of the functional datum over its whole domain. The problem of data collection is

usually easily solved, so the focus of this section is the second step of this procedure. The data

we are able to observe is a finitely observed functional dataset:

Definition 1.9 (Finitely observed functional dataset)

The set $\{\{X_1(t_{1,1}), X_1(t_{1,2}), \ldots, X_1(t_{1,m_1})\}, \ldots, \{X_n(t_{n,1}), X_n(t_{n,2}), \ldots, X_n(t_{n,m_n})\}\}$ is a finitely observed functional dataset iff $\{X_1, \ldots, X_n\}$ is a functional dataset generated by a real functional random variable; and for each $i \in \{1, \ldots, n\}$, $j \in \{1, \ldots, m_i\}$ we have $t_{i,j} \in I$ and $X_i(t_{i,j})$ is the value of $X_i$ at $t_{i,j}$.

Now the task is to construct the functional dataset using only information contained in the

finitely observed functional dataset. To have any hope of performing this construction we must

make assumptions about the behaviour of the functional random variable. To see why, consider

white Gaussian noise, $\{X(t)\}_{t \in [0,1]}$, and suppose we observe a single realisation, $\{\chi(t)\}_{t \in [0,1]}$, at only a finite number of points $\{\chi(t_1), \chi(t_2), \ldots, \chi(t_n)\}$ (see Figure 1.3). Now by construction, X(t) ⊥⊥ X(s) if s ≠ t, so information about the value of χ at $\{t_1, t_2, \ldots, t_n\}$ provides no information at all about its behaviour at other times. It is, therefore, impossible to use the sample to

conclude anything about the values of χ at unobserved times, and impossible to reconstruct the

infinite dimensional functional datum χ.
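The contrast between white noise and a process with continuous paths can be checked numerically. The sketch below, which assumes NumPy and uses an illustrative helper name, compares the sample correlation between neighbouring grid values for discretised white Gaussian noise and for an approximate Wiener path: for the noise, a value says almost nothing about its neighbour, while for the Wiener path neighbouring values are nearly identical.

```python
import numpy as np

def lag1_autocorrelation(x):
    """Sample correlation between consecutive values of a sequence."""
    x = np.asarray(x, dtype=float)
    return float(np.corrcoef(x[:-1], x[1:])[0, 1])

rng = np.random.default_rng(1)     # fixed seed, for reproducibility only
n = 2000

# Discretised white Gaussian noise: independent values at every grid point.
white_noise = rng.normal(size=n)

# Approximate Wiener path on [0, 1]: cumulated independent increments.
wiener = np.cumsum(rng.normal(size=n)) / np.sqrt(n)

r_noise = lag1_autocorrelation(white_noise)    # close to zero
r_wiener = lag1_autocorrelation(wiener)        # close to one
```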

Figure 1.3: White Gaussian noise. A functional datum, χ, is shown in blue; the finite sequence

of points observed, $\{\chi(t_1), \chi(t_2), \ldots, \chi(t_n)\}$, is shown in red.

1.3.1 Assumptions

Since the data analysed in statistics is almost always generated by natural phenomena it is

prudent to make assumptions that are usually satisfied by natural phenomena. In functional data

analysis, the standard approach is to make assumptions about the continuity and smoothness

of the functional random variable. Common choices are that a functional random variable is

continuous or that it is continuously differentiable:

Definition 1.10 Let X : Ω → E be a functional random variable.

1. X is continuous iff E ⊂ C^0.

2. X is continuously differentiable iff E ⊂ C^1.

The capacity for change of many natural systems is limited by the availability of energy

capable of effecting that change. Since the supply of such energy is usually gradual, many

systems can be accurately modelled with continuously differentiable functions. Even financial

markets, which are often modelled by non-differentiable stochastic processes, are controlled by the

waxing and waning of supply and demand, so some aspects of financial markets can be analysed

with continuously differentiable functional random variables; see, for instance, Cai (2011) and

Aneiros et al. (2011).

Alternatively, if we wish to construct a functional dataset not well approximated by continuously differentiable functions, we can adopt a different approach. Bugni et al. (2009), for example, analysed a

discontinuous non-differentiable functional random variable, assuming instead

that the data were piecewise constant and that all points of discontinuity were observed. In other

situations, if the functional data are sampled very densely and there is very little noise in the

data, accurate reconstruction at small time intervals may not be especially important and we

may analyse the data after connecting the points with lines of constant gradient. This is the

technique that will be used for the analysis of the phenomenon of stock price reversal. We will

briefly review two of the most popular methods for constructing functional datasets: (1) basis

functions; and (2) kernel smoothing. A complete description is provided by Ramsay & Silverman

(2005).
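The simplest construction mentioned above, connecting densely sampled points with lines of constant gradient, amounts to linear interpolation. A minimal sketch, assuming NumPy; the helper name and the price values are hypothetical and serve only as an illustration.

```python
import numpy as np

def linear_datum(t_obs, x_obs):
    """Return a function that linearly interpolates the observed points."""
    t_obs = np.asarray(t_obs, dtype=float)
    x_obs = np.asarray(x_obs, dtype=float)
    order = np.argsort(t_obs)            # np.interp needs increasing abscissae
    t_sorted, x_sorted = t_obs[order], x_obs[order]

    def chi_hat(t):
        # Outside the observed range np.interp returns the end values.
        return np.interp(t, t_sorted, x_sorted)

    return chi_hat

# Hypothetical daily prices, rescaled so that the price on day 0 is 1.
days = np.array([0, 1, 2, 3, 4])
prices = np.array([1.00, 0.98, 1.01, 1.03, 1.02])
chi_hat = linear_datum(days, prices)
midday_value = chi_hat(0.5)              # halfway between 1.00 and 0.98
```

The resulting datum is continuous but not differentiable at the observation times, which is acceptable when behaviour between observations is not of particular interest.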

1.3.2 Basis smoothing

The idea motivating this approach is that many functions can be approximated arbitrarily closely

by a linear combination of a set of suitably chosen basis functions: {φ1 , φ2 , . . .}. The basic choice

for {φ1 , φ2 , . . .} is the monomial basis:

{1, t, t^2, . . .}

If the data show evidence of periodicity a better choice may be the Fourier basis:

{1, sin(ωt), cos(ωt), sin(2ωt), cos(2ωt), . . .}

More exotic bases are occasionally used, including B-spline, I-spline and wavelet bases. Occasionally simple bases may suffice, including the constant basis, or the step function basis. See

Ramsay & Silverman (2005) for further information.

Once a suitable basis has been chosen the goal is to estimate the infinite vector $\{c_1, c_2, \ldots\}$, using information contained in the finitely observed functional dataset so that:

$$\sum_{j=1}^{\infty} \hat{c}_j \phi_j(t)$$

is an accurate approximation of the functional datum. In practice, we do not ordinarily estimate an infinite vector; instead we truncate the basis after a finite number of terms, K, and then estimate $\{c_1, c_2, \ldots, c_K\}$ so that the sum:

$$\hat{\chi}(t) = \sum_{j=1}^{K} \hat{c}_j \phi_j(t)$$

is an accurate approximation of the functional datum. Various methods have been developed for

choosing K; the most convincing approach is to balance the bias of estimates caused by a small

K against the greater variance of estimates caused by a large K.

A common method for estimating $\{c_1, c_2, \ldots, c_K\}$ is to use techniques developed for constructing least squares estimates to minimise:

$$\mathrm{SMSSE}(\{\chi(t_1), \chi(t_2), \ldots, \chi(t_n)\} \mid c) = \sum_{i=1}^{n} \left[ \chi(t_i) - \sum_{j=1}^{K} c_j \phi_j(t_i) \right]^2$$

Ordinary least squares estimation assumes that the residuals for the χ(t_i) are independent and

identically distributed across the t_i. Often this is an unrealistic assumption because measurement error may

depend on the precise value of t at which the observation is made or the level of the function

observed; approximation errors may also exhibit autocorrelation. To account for these complications, weights can be added to each term to allow for unequal variances and covariances of

residuals.
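The least squares fit described in this section can be sketched with a truncated Fourier basis. The code below is an illustration under stated assumptions rather than the procedure used in the thesis: it assumes NumPy, the function names are hypothetical, the period is taken as known, and the optional weights are diagonal only, so they allow for unequal variances but not for correlated residuals.

```python
import numpy as np

def fourier_basis(t, n_basis, period):
    """Evaluate the first n_basis Fourier basis functions at the points t.

    Columns are 1, sin(wt), cos(wt), sin(2wt), cos(2wt), ... with w = 2*pi/period.
    """
    t = np.asarray(t, dtype=float)
    omega = 2.0 * np.pi / period
    columns = [np.ones_like(t)]
    k = 1
    while len(columns) < n_basis:
        columns.append(np.sin(k * omega * t))
        if len(columns) < n_basis:
            columns.append(np.cos(k * omega * t))
        k += 1
    return np.column_stack(columns)

def fit_basis(t_obs, x_obs, n_basis, period, weights=None):
    """(Weighted) least squares estimate of the basis coefficients."""
    phi = fourier_basis(t_obs, n_basis, period)
    x_obs = np.asarray(x_obs, dtype=float)
    if weights is not None:
        # Minimising sum_i w_i * residual_i^2 is ordinary least squares
        # after scaling row i of the design and the response by sqrt(w_i).
        root_w = np.sqrt(np.asarray(weights, dtype=float))
        phi, x_obs = phi * root_w[:, None], x_obs * root_w
    c_hat, *_ = np.linalg.lstsq(phi, x_obs, rcond=None)
    return c_hat

def evaluate(c_hat, t, period):
    """Evaluate the fitted functional datum at the points t."""
    return fourier_basis(t, len(c_hat), period) @ c_hat

rng = np.random.default_rng(2)     # fixed seed, for reproducibility only
t_obs = np.linspace(0.0, 1.0, 50, endpoint=False)
truth = 2.0 + np.sin(2 * np.pi * t_obs) - 0.5 * np.cos(4 * np.pi * t_obs)
x_obs = truth + rng.normal(scale=0.05, size=t_obs.size)
c_hat = fit_basis(t_obs, x_obs, n_basis=5, period=1.0)
chi_hat = evaluate(c_hat, t_obs, period=1.0)
```

In this synthetic example the true coefficients are (2, 1, 0, 0, -0.5), so the estimate can be compared with them directly. Increasing `n_basis` reduces bias at the cost of higher variance, which is the trade-off described above for the choice of K.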

1.3.3 Kernel smoothing

Kernel smoothing is closely related to the use of basis functions; it is best applied when it is

reasonable to assume that functional data are continuously differentiable. The tacit assumption in

fitting continuously differentiable functions to finitely observed functional datasets is that values

of a function at a point are close to values of the function at nearby points. Kernel smoothing

makes this assumption explicit. This sacrifices some of the generality of basis smoothing but

more accurate estimates are often obtainable with smaller datasets.

The estimated functional datum χ̂ is expressed as a linear combination of all observed values:

$$\hat{\chi}(t) = \sum_{j=1}^{n} w_j(t) \chi(t_j)$$

The weight, wj , of each observed χ(tj ) is expressed in terms of a kernel function. To assist in the

development of theoretical properties, kernel functions are assumed to be symmetric, continuous

probability density functions. Common choices are the uniform kernel, the Epanechnikov kernel

and the Gaussian kernel, respectively:

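$$K(u) = \begin{cases} \frac{1}{2} & \text{if } |u| \le 1 \\ 0 & \text{elsewhere} \end{cases}$$

$$K(u) = \begin{cases} \frac{3}{4}\left(1 - u^2\right) & \text{if } |u| \le 1 \\ 0 & \text{elsewhere} \end{cases}$$

$$K(u) = \frac{1}{\sqrt{2\pi}} \exp\left(-\frac{u^2}{2}\right)$$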
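The three kernels can be written down directly. The following sketch assumes NumPy and uses illustrative function names; it also checks numerically, with a simple Riemann sum, that each kernel integrates to approximately one, as a probability density should.

```python
import numpy as np

def uniform_kernel(u):
    """K(u) = 1/2 on [-1, 1] and 0 elsewhere."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, 0.5, 0.0)

def epanechnikov_kernel(u):
    """K(u) = (3/4)(1 - u^2) on [-1, 1] and 0 elsewhere."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, 0.75 * (1.0 - u**2), 0.0)

def gaussian_kernel(u):
    """K(u) = exp(-u^2 / 2) / sqrt(2 * pi)."""
    u = np.asarray(u, dtype=float)
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)

# Riemann-sum approximation of the integral of each kernel over [-8, 8].
u = np.linspace(-8.0, 8.0, 160001)
du = u[1] - u[0]
areas = {
    kernel.__name__: float(np.sum(kernel(u)) * du)
    for kernel in (uniform_kernel, epanechnikov_kernel, gaussian_kernel)
}
```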

Figure 1.4: Phase and amplitude variation. The chart on the left shows functional data differing

only in phase and the chart on the right shows functional data differing only in amplitude.

Then a common choice for the weight function is the Nadaraya–Watson weight, after Nadaraya (1964) and Watson (1964):

$$w_j(t) = \frac{K\left(\frac{t - t_j}{h}\right)}{\sum_{i=1}^{n} K\left(\frac{t - t_i}{h}\right)}$$

where h is called the bandwidth of the kernel. A large bandwidth means more observations are

practically incorporated in the imputation of each point and the result is a smoother curve; a

small bandwidth means fewer values are incorporated and the result is a curve that is not as

smooth.
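The effect of the bandwidth can be seen in a small numerical experiment. This is a minimal sketch of a Nadaraya–Watson smoother, assuming NumPy and a Gaussian kernel; the function names, the synthetic sine curve and the two bandwidths are illustrative assumptions.

```python
import numpy as np

def gaussian_kernel(u):
    return np.exp(-0.5 * u**2) / np.sqrt(2.0 * np.pi)

def nadaraya_watson(t_eval, t_obs, x_obs, h, kernel=gaussian_kernel):
    """Kernel estimate of the functional datum at the points t_eval."""
    t_eval = np.atleast_1d(np.asarray(t_eval, dtype=float))
    t_obs = np.asarray(t_obs, dtype=float)
    x_obs = np.asarray(x_obs, dtype=float)
    # k[m, j] = K((t_eval[m] - t_obs[j]) / h)
    k = kernel((t_eval[:, None] - t_obs[None, :]) / h)
    # Normalise so that the weights for each evaluation point sum to one.
    # With a compactly supported kernel a row sum could be zero when no
    # observation lies within h; that case is not handled in this sketch.
    weights = k / k.sum(axis=1, keepdims=True)
    return weights @ x_obs

rng = np.random.default_rng(3)     # fixed seed, for reproducibility only
t_obs = np.linspace(0.0, 1.0, 200)
x_obs = np.sin(2 * np.pi * t_obs) + rng.normal(scale=0.2, size=t_obs.size)
t_grid = np.linspace(0.0, 1.0, 101)

rough = nadaraya_watson(t_grid, t_obs, x_obs, h=0.005)   # small bandwidth
smooth = nadaraya_watson(t_grid, t_obs, x_obs, h=0.1)    # large bandwidth

def roughness(x):
    """Sum of squared second differences: larger means a wigglier curve."""
    return float(np.sum(np.diff(x, 2) ** 2))
```

Comparing `roughness(rough)` with `roughness(smooth)` gives a crude numerical counterpart to the statement that a larger bandwidth yields a smoother curve.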

In general the bandwidth is selected by a similar compromise to the selection of the degree at

which the basis is truncated in Section 1.3.2 above: a small bandwidth gives an estimator that

is nearly unbiased but with high variance; a large bandwidth gives a biased estimator with low

variance. So h is chosen to balance the variance and bias of the estimator.
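One common way to strike this balance in practice is leave-one-out cross-validation, which is named here as an illustrative technique and is not necessarily the method used in the thesis. The sketch assumes NumPy, a Gaussian kernel and a hypothetical grid of candidate bandwidths.

```python
import numpy as np

def loo_cv_score(t_obs, x_obs, h):
    """Leave-one-out mean squared prediction error of a Gaussian-kernel
    Nadaraya-Watson smoother with bandwidth h."""
    t_obs = np.asarray(t_obs, dtype=float)
    x_obs = np.asarray(x_obs, dtype=float)
    k = np.exp(-0.5 * ((t_obs[:, None] - t_obs[None, :]) / h) ** 2)
    np.fill_diagonal(k, 0.0)       # exclude each point from its own prediction
    predictions = (k @ x_obs) / k.sum(axis=1)
    return float(np.mean((x_obs - predictions) ** 2))

def select_bandwidth(t_obs, x_obs, candidates):
    """Return the candidate bandwidth with the smallest score, and all scores."""
    scores = [loo_cv_score(t_obs, x_obs, h) for h in candidates]
    return candidates[int(np.argmin(scores))], scores

rng = np.random.default_rng(4)     # fixed seed, for reproducibility only
t_obs = np.linspace(0.0, 1.0, 200)
x_obs = np.sin(2 * np.pi * t_obs) + rng.normal(scale=0.2, size=t_obs.size)
candidates = [0.002, 0.01, 0.03, 0.1, 0.5]
h_best, scores = select_bandwidth(t_obs, x_obs, candidates)
```

The smallest candidate tracks the noise (high variance) and the largest flattens the sine curve (high bias), so the selected bandwidth is expected to lie between the two extremes.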

1.4 Registration

Once a functional dataset has been constructed the next step is to inspect the dataset for phase

and amplitude variation (see Figure 1.4). Often phase variation is not of primary interest and

many techniques in functional data analysis are designed only to account for amplitude variation;

so phase variation is usually removed using a procedure called curve registration. Phase variation

may arise because biological systems experience ‘local’ times that pass at slightly different rates

to the ‘clock’ time. For example a plot of the derivatives of the growth of children in the Berkeley

Growth study, shown in Figure 1.5, suggests that some children experience growth spurts earlier

than others and for some the growth spurt is longer; this means that computing statistics of the

untransformed data can give results that are not representative. Only a brief description of the

procedure is provided here; a thorough account is available in Ramsay (2011).

Figure 1.5: Landmark Registration. This chart shows the first derivative of the heights of the children plotted in Figure 1.1 (derivative of height against time). It is adapted from the work of Ramsay et al. (2010).

There are essentially three kinds of registration: (1) shift registration; (2) landmark registration; and (3) continuous registration. Shift registration is the simplest: each curve is shifted to the left or right and no warping is required to ensure that key features align; this is the procedure that would be applied to the synthetic data shown in the first panel of Figure 1.4. Landmark registration requires both shifting and warping the data so that key features coincide across all curves. This technique could be used for the data shown in Figure 1.5: the data could be shifted and warped so that zero-crossings of all curves coincide. Continuous registration is the most advanced approach: it uses the entire curve to align the data rather than simply the location of landmarks. It is described in detail in Ramsay (2011).
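Shift registration, the simplest of the three, can be sketched as a grid search for the horizontal shift that best aligns a curve with a reference curve. The code below is an illustration only: it assumes NumPy, a known target curve (in practice the target is often a cross-sectional mean that is updated iteratively), a squared-error criterion and hypothetical function names.

```python
import numpy as np

def shift_cost(delta, t, curve, target):
    """Mean squared difference between curve(t + delta) and target(t),
    computed only where t + delta stays inside the observed range."""
    shifted_t = t + delta
    inside = (shifted_t >= t[0]) & (shifted_t <= t[-1])
    shifted = np.interp(shifted_t[inside], t, curve)
    return float(np.mean((shifted - target[inside]) ** 2))

def best_shift(t, curve, target, candidate_shifts):
    """Candidate shift with the smallest alignment cost."""
    costs = [shift_cost(d, t, curve, target) for d in candidate_shifts]
    return float(candidate_shifts[int(np.argmin(costs))])

# Two synthetic curves that differ only in phase, as in the first panel of Figure 1.4.
t = np.linspace(0.0, 2.0 * np.pi, 400)
target = np.sin(t)
curve = np.sin(t - 0.4)            # the same shape, delayed by 0.4

delta_hat = best_shift(t, curve, target, np.linspace(-1.0, 1.0, 201))

# Registered curve; np.interp holds the end value constant beyond t[-1].
registered = np.interp(t + delta_hat, t, curve)
```

Restricting the cost to the overlapping region avoids penalising the extrapolated end values; landmark and continuous registration replace the single shift with a warping function and are not covered by this sketch.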

1.5 A sample dataset

This thesis will illustrate the application and efficacy of the new techniques defined by examining

the phenomenon of stock market overreaction. The precise hypothesis investigated is whether

very large single-day declines in stock prices are more likely to be followed by a period of

sustained price increase than are all other trading days. Early

progress on this topic was made by De Bondt & Thaler (1985) and Bremer & Sweeney (1991);

recent progress has been made by Cressy & Farag (2011) and Mazouz et al. (2009). In particular,

Cressy & Farag (2011) suggest the phenomenon may be exploited to construct profitable trading

algorithms.

The reversal of sudden stock price decreases was amongst the first substantial evidence that

stock markets are not Markovian. This finding suggests that Markovian models cannot capture

all aspects of the behaviour of stock prices. Since popular models for stock price evolution assume

the Markov property, including Black & Scholes (1973) and Heston (1993), this finding suggests

such models should only be applied cautiously.

Functional data analysis has frequently found application in econometrics; see, for example, Cai (2011), Aneiros et al. (2011) and Bugni et al. (2009). The application presented here may therefore be seen as a continuation of that work.

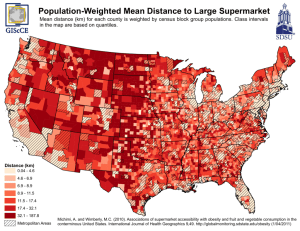

To examine the phenomenon of stock market overreaction, I collected historical prices from 1982 to 2011 for all companies currently listed on the NASDAQ Stock Market. I then assigned the data to two categories: (1) the 40-day price evolution of a stock immediately following a single-day decline of 10% or more; and (2) the 40-day price evolution of a stock after trading days where there was no decline of 10% or more. A subsample of these two categories is plotted in Figure 1.6. The goal now is to determine if there are statistically significant differences between the two categories and to provide descriptions of any such differences.
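The assignment of price histories to the two categories can be sketched as follows. This is only an illustration under stated assumptions: the input is a list of daily closing prices for one stock, each window is rescaled to start at 1, and the function name `split_windows` and its parameters are hypothetical rather than a record of the code actually used to build the dataset.

```python
def split_windows(prices, horizon=40, threshold=-0.10):
    """Return (after_decline, other): lists of price paths covering `horizon`
    trading days, each rescaled to start at 1. A path goes into
    `after_decline` when the single-day return on its starting day is at or
    below `threshold`, and into `other` otherwise."""
    after_decline, other = [], []
    for day in range(1, len(prices) - horizon):
        daily_return = prices[day] / prices[day - 1] - 1.0
        window = [p / prices[day] for p in prices[day:day + horizon + 1]]
        if daily_return <= threshold:
            after_decline.append(window)
        else:
            other.append(window)
    return after_decline, other


# Toy series with a 15% drop on the second day, using a 2-day horizon.
toy_prices = [100.0, 85.0, 90.0, 95.0, 100.0, 105.0]
declines, rest = split_windows(toy_prices, horizon=2)
```

Each returned path is one observed curve; pooling the paths over all stocks would give the two functional samples compared in the remainder of the thesis.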

There were some problems with the data. The most significant were that stocks no longer trading as of 2011 were systematically excluded, and that the price history was only of daily resolution. The analysis of this data should therefore only be seen as illustrative of the quantiles developed in this thesis. A more precise analysis of stock price reversal is available in Cressy & Farag (2011) or Mazouz et al. (2009), although they do not approach the problem through the prism of functional data analysis.

Based on Figure 1.6, we can draw some preliminary conclusions about the data. First, the

dispersion of stock prices is much higher after declines of 10% or more. Second, both datasets

seem to have a positive skew, with the skew being greater after the 10% decline. These findings

are in line with the prior work of Cressy & Farag (2011) and Mazouz et al. (2009).

Figure 1.6: The chart on the left shows a random selection of the 40-day trading history of NASDAQ stocks after trading days where there was no single-day decline of 10% or more, 1982–2011; the chart on the right shows a random selection of the 40-day trading history immediately following a single-day decline of 10% or more.

Chapter 2

Multivariate quantiles

The goal of this chapter is to identify the difficulties of constructing multivariate quantiles and

describe methods by which some of these difficulties have been conquered. All definitions of

multivariate quantiles, of which I am aware, sacrifice some of the utility of univariate quantiles.

2.1 The failure of the ordinary approach

In the univariate case, the quantiles of a random variable are defined by reference to its distribution function:

Definition 2.1 (Univariate quantiles) If $X : \Omega \to \mathbb{R}$ is a real random variable with distribution function $F_X$ and $\alpha \in (0, 1)$ then the $\alpha$-quantile of $X$ is:

$$Q_\alpha = \inf \{x \in \mathbb{R} : F_X(x) \ge \alpha\}$$

Where necessary the distribution of the random variable associated with each quantile will be included as a superscript: $Q^X_\alpha$.

So we could try to extend this definition to a bivariate distribution as follows:

Definition 2.2 (Potential bivariate quantiles) If $X : \Omega \to \mathbb{R}^2$ is a bivariate real random variable with distribution function $F_X(x, y)$ and $\alpha \in (0, 1)$ then the $\alpha$-quantile of $X$ may potentially be defined as:

$$Q_\alpha = \inf \{(x, y) \in \mathbb{R}^2 : F_X(x, y) \ge \alpha\}$$

However, for this definition to be meaningful we must be able to interpret the infimum of subsets of $\mathbb{R}^2$; that is, for some set $S \subset \mathbb{R}^2$ there must be some unique element $(x, y) \in \mathbb{R}^2$ so that $(x, y)$ is the largest element of $\mathbb{R}^2$ that is also less than or equal to every element of $S$. The existence of a unique infimum requires the existence of a total order:

Definition 2.3 (Total orders) The set X is totally ordered under the relation ≤ iff for every

a1 , a2 , a3 ∈ X the following conditions are satisfied:

1. If a1 ≤ a2 and a2 ≤ a1 then a1 = a2 (antisymmetry);

2. If a1 ≤ a2 and a2 ≤ a3 then a1 ≤ a3 (transitivity); and

3. Either a1 ≤ a2 or a2 ≤ a1 (totality).

The natural choice for ordering $\mathbb{R}^2$ is the Euclidean norm:

$$(x_1, y_1) \le (x_2, y_2) \iff \sqrt{x_1^2 + y_1^2} \le \sqrt{x_2^2 + y_2^2}$$

Unfortunately this is not a total order: $(1, 2) \le (2, 1)$ and $(2, 1) \le (1, 2)$ but $(1, 2) \ne (2, 1)$, which contradicts the antisymmetry property of Definition 2.3. It is possible to specify total orders for $\mathbb{R}^2$, for instance the lexicographical order:

$$(x_1, y_1) \le (x_2, y_2) \iff x_1 < x_2 \text{ or } (x_1 = x_2 \text{ and } y_1 \le y_2)$$

But such orders are not satisfactory in practice because they do not capture intuitive notions of size; for example, $(2, 0) \ge (1, 1000)$. So, in general, we cannot extend the standard definition of univariate quantiles to multivariate distributions in a way that permits the specification of unique quantiles and corresponding order statistics.
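Both observations are easy to check numerically. The short sketch below is only an illustration: `norm_leq` is a hypothetical helper that compares squared norms (which yields the same ordering as the Euclidean norm), and the lexicographical order is taken from Python's built-in tuple comparison.

```python
def norm_leq(p, q):
    """Order points of R^2 by Euclidean norm. Squared norms are compared,
    which gives the same ordering and keeps integer inputs exact."""
    return p[0] ** 2 + p[1] ** 2 <= q[0] ** 2 + q[1] ** 2


a, b = (1, 2), (2, 1)
# Both comparisons hold although a != b, so antisymmetry fails.
norm_breaks_antisymmetry = norm_leq(a, b) and norm_leq(b, a) and a != b

# Python compares tuples lexicographically, which is a total order on R^2 ...
lex_sorted = sorted([(2, 0), (1, 1000), (1, 2)])
# ... but it places (1, 1000) below (2, 0), against intuitive notions of size.
lex_counterintuitive = (1, 1000) < (2, 0)
```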

2.2 Assessing the quality of multivariate quantiles

Despite the difficulty of ordering the values of multivariate distributions, substantial progress has been made in defining multivariate quantiles. Before examining some of the definitions proposed, it is appropriate to identify the properties that good definitions of multivariate quantiles should possess. Serfling (2002) suggests there are five such properties. Ideally, multivariate quantiles should:

1. permit probabilistic interpretations of empirical and distribution quantiles;

2. permit the description of location through measures analogous to univariate medians,

trimmed means and order statistics;

3. permit the description of dispersion through measures analogous to univariate interquartile

range;

4. display affine equivariance and related equivariance properties;

5. permit the construction and interpretation of test statistics for natural hypotheses on key

characteristics of datasets.

These properties will be used to judge the quality of both the definitions of multivariate quantiles

reviewed and the functional quantiles defined in the second part of this thesis.

2.3 Methods for constructing multivariate quantiles

Two methods for constructing multivariate quantiles are reviewed: (1) quantiles based on minimising norms; and (2) the quantiles of Fraiman & Pateiro-López (2011) which are tailored for use

in functional data analysis. A more complete review of the variety of methods for constructing

multivariate quantiles is presented in Serfling (2002).

2.3.1 Norm minimisation

In this section we review the definition of quantiles proposed by Chaudhuri (1996). The method

proposed by Chaudhuri (1996) did not generalise Definition 2.1 but instead extended a separate

but equivalent definition of univariate quantiles based on norm minimisation:

Lemma 2.1 (Univariate quantiles alternative definition) If $X$ is a real random variable with quantiles $\{Q_\alpha\}_{\alpha \in (0,1)}$ then for each $\alpha \in (0, 1)$ we must have:

$$Q_\alpha = \arg\min_{x \in \mathbb{R}} E\left[|X - x| + (2\alpha - 1)(X - x)\right]$$

Proof The proof consists of replacing $|X - x|$ with $(X - x)\mathbf{1}\{X > x\} + (x - X)\mathbf{1}\{X \le x\}$ then differentiating with respect to $x$ and setting the resulting expression equal to 0.
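The lemma can be checked on a small sample by replacing the expectation with a sample mean. The sketch below is illustrative only: the function names are hypothetical, and the search is restricted to the sample points, which is enough here because the sample objective is piecewise linear and convex in $x$.

```python
import math


def check_objective(sample, x, alpha):
    """Sample analogue of E[|X - x| + (2*alpha - 1)(X - x)]."""
    return sum(abs(v - x) + (2 * alpha - 1) * (v - x) for v in sample) / len(sample)


def quantile_by_minimisation(sample, alpha):
    # The objective is piecewise linear and convex in x, so a minimiser can
    # be found among the sample points themselves.
    return min(sorted(sample), key=lambda x: check_objective(sample, x, alpha))


def quantile_by_definition(sample, alpha):
    """inf{x : F_n(x) >= alpha}, with F_n the empirical distribution function."""
    ordered = sorted(sample)
    return ordered[math.ceil(alpha * len(ordered)) - 1]


sample = [3.0, 1.0, 4.0, 1.5, 9.0]
agreement = [
    quantile_by_minimisation(sample, a) == quantile_by_definition(sample, a)
    for a in (0.3, 0.5, 0.9)
]
```

The values of $\alpha$ are chosen so that $n\alpha$ is not an integer; when it is, the minimiser of the sample objective is not unique and the two functions may return different points of the same minimising interval.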

This definition may be extended to multivariate distributions as follows:

Definition 2.4 (Chaudhuri's multivariate quantiles) Suppose $X : \Omega \to \mathbb{R}^d$ is a multivariate random variable with $d \ge 2$, then the $\beta$-quantile of $X$ is:

$$Q_\beta = \arg\min_{x \in \mathbb{R}^d} E\left[\Phi(\beta, X - x) - \Phi(\beta, X)\right]$$

where $\Phi(a, b) = \|b\| + \langle a, b \rangle$ for the Euclidean norm $\|\cdot\|$ and the dot product $\langle \cdot, \cdot \rangle$, and $\beta \in \{u \in \mathbb{R}^d : \|u\| < 1\}$ indexes the quantiles; $\beta$ is the analogue of $\alpha$ for univariate quantiles, playing the role of $2\alpha - 1$ in Lemma 2.1.

Chaudhuri (1996) also suggests a method to estimate these quantiles and establishes the consistency of such estimators. This definition does not ensure the existence of a unique quantile for each $\beta$, and Serfling (2002) shows that such quantiles only partially satisfy each of the desirable properties of quantiles described in Section 2.2; but they perform quite well compared to other definitions.

2.3.2 Functional quantiles

In this section we review the definition of quantiles given in Fraiman & Pateiro-López (2011).

The definition proposed in Fraiman & Pateiro-López (2011) is very general: it is applicable to

all univariate, multivariate and functional random variables defined on Hilbert spaces. We only

consider the definition applied to a real functional random variable defined on a space of square

integrable functions with a specific inner product.

Definition 2.5 (Fraiman & Pateiro-López's functional quantiles) Let $X : \Omega \to E$ be a real functional random variable such that $E \subset L^2$, the space of square integrable functions. Define the inner product for $\chi_1, \chi_2 \in E$:

$$\langle \chi_1, \chi_2 \rangle = \int_{t \in I} \chi_1(t)\chi_2(t)\,dt$$

And the corresponding norm:

$$\|\chi_1\| = \sqrt{\langle \chi_1, \chi_1 \rangle}$$

And the unit ball:

$$B = \{\chi \in E : \|\chi\| = 1\}$$

Then the $\alpha$-quantile in the direction of $\chi \in B$ is:

$$Q_{\alpha,\chi} = Q_\alpha^{\langle X - E(X), \chi \rangle} + E(X)$$

where $Q_\alpha^{\langle X - E(X), \chi \rangle}$ is the univariate quantile of Definition 2.1.

Fraiman & Pateiro-López (2011) suggest these quantiles display location equivariance, and equivariance under unitary operators and homogeneous scale transformations. Fraiman & Pateiro-López (2011) also suggest a consistent method of estimating these quantiles. They do not comment on whether the quantiles permit probabilistic interpretations or the description of location and dispersion of functional random variables; nor do they give methods for using these quantiles to construct hypothesis tests.

2.4 Room for improvement?

Fraiman & Pateiro-López (2011) present a convincing and theoretically sound method for defining and estimating quantiles for functional random variables. However, so far, these quantiles have not been shown to share many of the desirable characteristics outlined in Section 2.2. As their construction is quite complex, it is not immediately clear if it is possible to establish these characteristics; so an alternative construction of functional quantiles may succeed if it can be used more easily to describe and make inferences on functional datasets.

Part II

Quantiles for Functional Data

Chapter 3

Defining functional quantiles

The goal of this chapter is to introduce a definition of quantiles for real functional random

variables. A description of the properties of the quantiles introduced is provided and a method

to estimate them empirically is suggested. The quantiles developed in this chapter should only

be applied after the functional dataset has been constructed as described in Section 1.3 and

registered as described in Section 1.4.

3.1 Functional quantiles

First, we propose a reasonable definition of the quantiles of the distribution of a functional random variable. To overcome the fact that, in general, no natural order can be specified for an arbitrary set of functions defined on a closed interval of the real line, we will reconstitute the functions so that a natural order can be specified.

3.1.1 Defining functional quantiles

The univariate quantiles of Definition 2.1 can be extended to a real functional random variable,

{X (t)}t∈I , in a natural way by considering the marginal distribution at each t ∈ I. Since the

marginal distribution of X is a real random variable, Definition 2.1 can be applied directly:

Definition 3.1 (Functional quantiles) If $X : \Omega \to E$ is a real functional random variable with marginal distribution function $F_{X(t)}$ at $t \in I$ then the $\alpha$-quantile of $X$ is:

$$Q_\alpha(t) = \inf \{x \in \mathbb{R} : F_{X(t)}(x) \ge \alpha\} \quad \text{for } t \in I$$

Where necessary the distribution of the random variable associated with each quantile is incorporated via a superscript: $Q^X_\alpha$.

We can think of an α-quantile under this definition as a piecewise-defined function: at every t ∈ I its value coincides with the value of some χ ∈ E, although the χ involved may change from one t to the next. One immediate consequence of this definition is that functional quantiles are, appropriately, functions mapping I → R.
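To make the pointwise construction concrete, the sketch below applies Definition 2.1 separately at each point of a grid. It assumes a functional random variable that takes finitely many curves with equal probability, each sampled on the same grid; the function name `pointwise_quantile` is illustrative.

```python
import math


def pointwise_quantile(curves, alpha):
    """Apply inf{x : F(x) >= alpha} separately at each grid point, treating
    the curves as equally likely values of the functional random variable."""
    n = len(curves)
    rank = math.ceil(alpha * n) - 1  # index of the alpha-quantile among n sorted values
    return [sorted(values)[rank] for values in zip(*curves)]


# Three curves on the grid t = 0, 0.5, 1.
curves = [
    [0.0, 0.5, 1.0],  # chi(t) = t
    [1.0, 0.5, 0.0],  # chi(t) = 1 - t
    [0.2, 0.2, 0.2],  # constant curve
]
median_curve = pointwise_quantile(curves, 0.5)  # [0.2, 0.5, 0.2]
```

The resulting median follows the constant curve at the end points and one of the two sloping curves in the middle, so it is assembled piecewise from different curves in the way described above.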

In Chapter 2 we saw that univariate quantiles are defined most easily in terms of the distribution function of a random variable. We should therefore consider whether there is an extension

of the distribution function for functional random variables as that might permit an alternative

construction of functional quantiles. Bugni et al. (2009) suggest one extension for real functional

random variables:

Definition 3.2 (Distribution functionals) If $X : \Omega \to E$ is a real functional random variable then the distribution functional $F_X$ is given by:

$$F_X(\chi) = P\left[X(t) \le \chi(t) \text{ for all } t \in I\right] \tag{3.1}$$

Theorem 3.1 A distribution functional FX uniquely determines a functional random variable,

X.

Proof See the discussion in (Bugni et al. 2009, Section 2.1).

Now we could try to define the quantiles of $X$ as some function of the set of functions $\{\chi \in E : F_X(\chi) \ge \alpha\}$. Unfortunately, as for $\mathbb{R}^n$, no natural order can be specified for most function spaces, so it is not sensible to talk about the infimum of this set as we would for univariate quantiles. Ultimately, after considering variations on this approach for some time, I rejected the idea that quantiles could be defined using a distribution functional. It is not surprising that the standard approaches for univariate and finite-dimensional multivariate data analysis do not carry over well to the functional case; other authors have made similar findings. For instance, Delaigle & Hall (2010) show, amongst other things, that there is no direct analogue of the probability density function defined in terms of small-ball probabilities for functional random variables.

Often it is quite easy to find the quantiles of a functional random variable. By way of example, the derivation of the quantiles of the standard Wiener process is provided in Theorem 3.2; a plot of the quantiles derived and several simulated realisations of a Wiener process is shown in Figure 3.1.

Theorem 3.2 The standard Wiener process has quantiles $Q_\alpha(t) = \sqrt{t}\,\Phi^{-1}_{0,1}(\alpha)$ where $\Phi_{\mu,\sigma^2}$ denotes the distribution function of $N(\mu, \sigma^2)$, the normal distribution with mean $\mu$ and variance $\sigma^2$.

Proof Let $W$ be a Wiener process. Now by definition, $W(t) \sim N(0, t)$. So applying Definition 2.1, we see the $\alpha$-quantile of $W(t)$ is $\Phi^{-1}_{0,t}(\alpha) = \sqrt{t}\,\Phi^{-1}_{0,1}(\alpha)$.
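The formula of Theorem 3.2 is simple to evaluate. The sketch below uses `statistics.NormalDist` from the Python standard library; the function name `wiener_quantile` is an illustrative choice, and the cross-check computes the quantile of $N(0, t)$ directly, noting that `NormalDist` is parameterised by the standard deviation $\sqrt{t}$ rather than the variance.

```python
from math import sqrt
from statistics import NormalDist


def wiener_quantile(alpha, t):
    """alpha-quantile of W(t) ~ N(0, t) for t > 0, following Theorem 3.2."""
    return sqrt(t) * NormalDist().inv_cdf(alpha)


# Cross-check at t = 0.25: quantile of N(0, t) computed directly.
t = 0.25
direct = NormalDist(mu=0.0, sigma=sqrt(t)).inv_cdf(0.95)
via_theorem = wiener_quantile(0.95, t)

# Quartile curves on a grid of times, as might be drawn over simulated paths.
grid = [0.2, 0.4, 0.6, 0.8, 1.0]
upper_quartile = [wiener_quantile(0.75, s) for s in grid]
```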

Figure 3.1: 20 realisations of a standard Wiener process with selected quantiles (horizontal axis: time); quartiles are shown in red, and $Q^W_{0.05}(t)$ and $Q^W_{0.95}(t)$ (which estimate the minimum and maximum of the sample, respectively) are shown in green.

3.1.2 Properties of functional quantiles

The quantiles introduced in Section 3.1.1 mimic the behaviour of the functional random variable

to which they are applied reasonably closely: if a functional random variable is continuous then

so are its quantiles; if a functional random variable is differentiable then in many situations

its quantiles will be too. This section establishes several useful properties of quantiles. The

central result of this section is that quantiles do not, in general, characterise the distribution of

a functional random variable as shown in Theorem 3.4.

Theorem 3.3 If $X$ is a continuous functional random variable then the quantiles of $X$ are continuous.

Proof Let $X : \Omega \to E$ be a continuous functional random variable with quantiles $\{Q^X_\alpha\}_{\alpha \in (0,1)}$. Then by Definition 1.10, $E \subset C^0$. Now it suffices to show that for any $\epsilon > 0$ and $c \in I$ there exists some $\delta > 0$ such that if $|t - c| < \delta$ then $|Q^X_\alpha(t) - Q^X_\alpha(c)| < \epsilon$. Pick $\epsilon > 0$, $c \in I$ and $\alpha \in (0, 1)$. Now define $F = \{\chi_\gamma\}_{\gamma \in G}$ so that if $\gamma \in G$ then $\chi_\gamma \in E$ and $\chi_\gamma(c) = Q^X_\alpha(c)$. Now if $\{\chi_\gamma\}_{\gamma \in G}$ has only one element then by Definition 3.1, in a neighbourhood of $c$, $Q^X_\alpha = \chi_\gamma$, which is, by definition of $X$, a continuous function; so there must exist $\delta > 0$ such that if $|t - c| < \delta$ then $|Q^X_\alpha(t) - Q^X_\alpha(c)| < \epsilon$.

Now suppose $\{\chi_\gamma\}_{\gamma \in G}$ has more than one element. Because $X$ is continuous and $Q^X_\alpha(t) = \chi(t)$ for some $\chi \in E$, by Definition 3.1, there must exist some $\chi_1, \chi_2 \in \{\chi_\gamma\}_{\gamma \in G}$ such that there is some $\delta_1, \delta_2 > 0$ for which $Q^X_\alpha(t) = \chi_1(t)$ if $t \in (c - \delta_1, c)$ and $Q^X_\alpha(t) = \chi_2(t)$ if $t \in (c, c + \delta_2)$. Now since $\chi_1, \chi_2 \in E$, $\chi_1$ and $\chi_2$ are continuous functions, and since $\chi_1(c) = \chi_2(c)$, if we ensure $\delta \le \min\{\delta_1, \delta_2\}$, then we can find $\delta$ such that: if $|t - c| < \delta$ then $|Q^X_\alpha(t) - Q^X_\alpha(c)| < \epsilon$.

Theorem 3.4 Quantiles do not characterise the distribution of a functional random variable.

Proof It suffices to show there exist two functional random variables, $X$ and $Y$, such that $Q^X_\alpha(t) = Q^Y_\alpha(t)$ if $\alpha \in (0, 1)$ and $X \nsim Y$. Let $\chi_1$ and $\chi_2$ be non-random functions mapping $I \to \mathbb{R}$, such that for some $t_1 \in I$:

$$\chi_1(t_1) = \chi_2(t_1), \qquad \chi_1(t) < \chi_2(t) \text{ if } t < t_1, \qquad \chi_1(t) > \chi_2(t) \text{ if } t > t_1$$

Define $X$:

$$X(t) = \begin{cases} \chi_1(t) & \text{with probability } 0.5 \\ \chi_2(t) & \text{with probability } 0.5 \end{cases}$$

Then define $Y$:

$$Y(t) = \begin{cases} \max\{\chi_1(t), \chi_2(t)\} & \text{with probability } 0.5 \\ \min\{\chi_1(t), \chi_2(t)\} & \text{with probability } 0.5 \end{cases}$$

Now $P(X = \chi_1) = 0.5 \ne 0 = P(Y = \chi_1)$ so $X \nsim Y$, but applying Definition 3.1 we find the quantiles of $X$ and $Y$ are:

$$Q^X_\alpha(t) = Q^Y_\alpha(t) = \begin{cases} \max\{\chi_1(t), \chi_2(t)\} & \text{if } \alpha > 0.5 \\ \min\{\chi_1(t), \chi_2(t)\} & \text{if } \alpha \le 0.5 \end{cases}$$

A concrete example of a set of distinct functional random variables which share quantiles is shown in Figure 3.2.

Contemplation of this result and in particular contemplation of Figure 3.2 suggests that the

information lost when a functional random variable is reduced to its quantiles is knowledge about

which branch it takes at intersections of realisations of the functional random variable.
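The construction in the proof of Theorem 3.4 can be reproduced numerically. The sketch below is an illustration under stated assumptions: the two curves are taken to be $\chi_1(t) = t$ and $\chi_2(t) = -t$ on a grid over $[-1, 1]$, so that they cross at $t_1 = 0$, and `pointwise_quantile` is a hypothetical helper applying Definition 3.1 to equally likely curves.

```python
import math


def pointwise_quantile(curves, alpha):
    """Pointwise inf{x : F(x) >= alpha} for equally likely curves on a grid."""
    n = len(curves)
    rank = math.ceil(alpha * n) - 1
    return [sorted(values)[rank] for values in zip(*curves)]


grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
chi1 = [t for t in grid]    # chi1(t) = t
chi2 = [-t for t in grid]   # chi2(t) = -t, crossing chi1 at t = 0

# X takes the values chi1 and chi2; Y takes their pointwise max and min.
support_x = [chi1, chi2]
support_y = [
    [max(a, b) for a, b in zip(chi1, chi2)],
    [min(a, b) for a, b in zip(chi1, chi2)],
]

same_quantiles = all(
    pointwise_quantile(support_x, a) == pointwise_quantile(support_y, a)
    for a in (0.25, 0.5, 0.75)
)
chi1_is_a_value_of_y = chi1 in support_y
```

The quantiles agree for every level tested even though `chi1` is a possible value of X and not of Y, which is exactly the branch information that the quantiles discard.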

3.1.3 Characterising a functional random variable

Characterisation is a property of almost all definitions of quantiles. Univariate quantiles determine exactly the distribution function of a random variable and therefore characterise the

distribution. Similarly the multivariate quantiles of Chaudhuri (1996) and the functional quantiles of Fraiman & Pateiro-López (2011) characterise the distribution of the random variables to

which they are applicable. Our goal in this section is to prove that quantiles characterise the

distribution of a functional random variable if certain conditions are satisfied.

Standard assumptions in functional data analysis are continuity, differentiability and monotonicity. However we saw in Figure 3.2 that continuity is not a sufficient condition and we can

see in Figures 3.3 and 3.4 that monotonicity and differentiability are not sufficient either.

Figure 3.2: An example of a pair of functional random variables which reduce to the same quantiles. The left and middle charts show the equiprobable values of the functional random variables; the right chart shows the quantiles which are shared by both random variables.

Figure 3.3: The figure shows that monotonicity is not sufficient to ensure that a functional random variable is characterised by its quantiles. It follows the same schema as Figure 3.2.

Figure 3.4: This figure shows that differentiability is not sufficient to ensure that a functional random variable is characterised by its quantiles. It follows the same schema as Figure 3.2.

So what are sufficient conditions to ensure that quantiles characterise the distribution of a

functional random variable? We observed in the previous section that the information lost when

a functional random variable is reduced to its quantiles is knowledge about which path is taken

at branch points of different realisations; so if we make assumptions about the behaviour of the

functional random variable at all branch points we should be able to show that quantiles are

characteristic. First some definitions are required:

Definition 3.3 (Stacked, crossing and tangled) Let X : Ω → E be a real functional

random variable. And let χ1 , χ2 be arbitrary elements of E; so χ1 and χ2 are functions

mapping I → R.

1. X is stacked iff for every χ1 , χ2 ∈ E, either χ1 ≥ χ2 or χ1 ≤ χ2 . Where χ1 ≤ (≥)χ2

iff for every t ∈ I, χ1 (t) ≤ (≥)χ2 (t).

2. X is crossing iff for every χ1 , χ2 ∈ E and t ∈ I such that χ1 (t) = χ2 (t) there is some

δ > 0 such that:

(i) χ1 (x) ≤ (≥)χ2 (x) if x ∈ (t − δ, t);

(ii) There is at least one x ∈ (t − δ, t) such that χ1 (x) < (>)χ2 (x);

(iii) χ1 (x) ≥ (≤)χ2 (x) if x ∈ (t, t + δ); and

(iv) There is at least one x ∈ (t, t + δ) such that χ1 (x) > (<)χ2 (x)

3. X is tangled iff it is neither stacked nor crossing.

To provide intuition about this definition, examples of selected stacked, crossing and tangled

functional random variables are plotted in Figures 3.5 and 3.6. The central idea is that all the

values of a stacked functional random variable can be placed in a stack without having to push

one function through another. Crossing is a complementary concept: if two values of a crossing

functional random variable are equal at a point, then they must push through each other at the

point. Note that a functional random variable, X : Ω → E, does not need to be continuous for

either property to be satisfied, nor does E need to be finite; functional random variables can also

be both stacked and crossing.
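For a functional random variable taking finitely many values, the stacked property can be checked directly. The sketch below assumes every curve is sampled on a common grid, so it only verifies the ordering at the grid points and is a necessary check rather than a proof for the underlying functions; the name `is_stacked` is illustrative.

```python
def is_stacked(curves):
    """Return True if every pair of curves is ordered pointwise on the shared
    grid, that is, one curve of the pair never rises above the other."""
    for i, a in enumerate(curves):
        for b in curves[i + 1:]:
            below = all(x <= y for x, y in zip(a, b))
            above = all(x >= y for x, y in zip(a, b))
            if not (below or above):
                return False
    return True


stacked_example = [[0, 1, 2], [0, 2, 3], [1, 2, 4]]  # curves may touch but never swap order
crossing_example = [[0, 1, 2], [2, 1, 0]]            # the two curves swap order at the middle point
```

Here `is_stacked(stacked_example)` holds and `is_stacked(crossing_example)` does not. A comparable check of the crossing property would need to examine a neighbourhood of each point of equality, which a coarse grid cannot resolve reliably.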

First we consider a consequence of the definition of stacked functional random variables. This result is used as a stepping stone to show that all stacked functional random variables are characterised by their quantiles.

Figure 3.5: The chart on the left plots every χ ∈ E for a stacked functional random variable X : Ω → E; the chart on the right gives an example of a tangled functional random variable that is almost stacked; the points marked by arrows are the points at which the stacked property fails.

Figure 3.6: The figure follows a similar schema to that of Figure 3.5. Left shows every χ ∈ E for a crossing functional random variable and right shows a tangled functional random variable that is almost crossing.

Lemma 3.5 Let X : Ω → E be a stacked real functional random variable with quantiles,

{Qα }α∈(0,1) . Then E = {Qα }α∈(0,1) .

Proof It is enough to show (i) if α ∈ (0, 1) then Qα = χ for some χ ∈ E; and (ii) if χ ∈ E then

χ = Qα for some α ∈ (0, 1).

First we establish (i): pick $\alpha \in (0, 1)$. By Definition 3.1, $Q_\alpha(t) = \inf \{x \in \mathbb{R} : F_{X(t)}(x) \ge \alpha\}$. We need to show there is some $\chi \in E$ such that $\chi(t) = Q_\alpha(t)$ for every $t \in I$. Suppose, to get a contradiction, there is no such $\chi \in E$. Then there must exist $\chi_1, \chi_2 \in E$ such that $\chi_1(t) \ne \chi_2(t)$ if $t \in \{t_1, t_2\} \subset I$, $Q_\alpha(t_1) = \chi_1(t_1)$ and $Q_\alpha(t_2) = \chi_2(t_2)$. Assume without loss of generality that $\chi_1(t_1) > \chi_2(t_1)$. Then to ensure $\chi_2(t_2) = Q_\alpha(t_2)$ and $\chi_1(t_2) \ne \chi_2(t_2)$ we must have $\chi_1(t_2) < \chi_2(t_2)$. This is a contradiction of the assumption that $X$ is stacked, so our supposition that there was no $\chi \in E$ such that $\chi(t) = Q_\alpha(t)$ for every $t \in I$ was false.

Now we consider part (ii): we need to show that if $\chi \in E$ then there is some $\alpha \in (0, 1)$ such that $Q_\alpha = \chi$. Pick $\chi \in E$. Let $a_\gamma = \{\alpha \in (0, 1) : Q_\alpha(t_\gamma) = \chi(t_\gamma)\}$. Then define $a = \bigcap_{\gamma \in I} a_\gamma$. Then we claim $a \ne \emptyset$ and $Q_\alpha = \chi$ if $\alpha \in a$.

We now have sufficient knowledge to prove the first central theorem of this thesis: that all stacked functional random variables are characterised by their quantiles.

Theorem 3.6 (Quantile characterisation theorem – stacked) If $(\Omega, \mathcal{F}, P)$ is a probability space and $X : (\Omega, \mathcal{F}) \to (E, \mathcal{E})$ is a stacked real functional random variable, then it is uniquely determined by its quantiles: $\{Q_\alpha\}_{\alpha \in (0,1)}$.

Proof (nonconstructive) First we give a nonconstructive proof. Let $X$ and $Y$ be stacked functional random variables sharing the quantiles $\{Q_\alpha\}_{\alpha \in (0,1)}$. It suffices to show that $X \sim Y$.

Suppose, to get a contradiction, that $X \nsim Y$. Then there must exist some set $F \in \mathcal{E}$ such that $P(X \in F) \ne P(Y \in F)$. Let $F_{t_1} = \{\chi(t_1)\}_{\chi \in F}$ for some $t_1 \in I$. Now since $X$ and $Y$ are stacked, to ensure $P(X \in F) \ne P(Y \in F)$ there must exist $t_1$ such that $P(X(t_1) \in F_{t_1}) \ne P(Y(t_1) \in F_{t_1})$. But Definition 3.1 ensures the quantiles of a functional random variable determine its marginal distribution exactly; so the existence of such a $t_1$ is a contradiction of the assumption that $X$ and $Y$ share quantiles.

Proof (constructive) Next we give a constructive proof. This proof requires the additional assumption that functional random variables are continuous.

Let $X : \Omega \to E$ be a stacked continuous functional random variable with quantiles $\{Q_\alpha\}_{\alpha \in (0,1)}$. It is sufficient to show (i) we can recover the set $E$ and (ii) we can measure any $F \in \mathcal{E}$ with respect to $P$ using only information contained in $\{Q_\alpha\}_{\alpha \in (0,1)}$.

First, by Lemma 3.5, $\{Q_\alpha\}_{\alpha \in (0,1)}$ is identically $E$, so (i) is satisfied.

Now consider (ii). Pick $F \in \mathcal{E}$. Since $X$ is continuous, every $\chi_1, \chi_2 \in F$ can be distinguished by their values on the countable dense set $I_{\mathbb{Q}} = \{q \in \mathbb{Q} : q \in I\}$. So $P(X \in F)$ may be computed inductively from the marginal distributions of $X$ at each $t \in I_{\mathbb{Q}}$. To make this explicit, pick $t_0 \in I_{\mathbb{Q}}$ and consider the set $E_{t_0} = \{\chi(t_0)\}_{\chi \in E}$. A point $y \in E_{t_0}$ must satisfy one and only one of the following conditions:

1. there is some $\chi \in F$ such that $\chi(t_0) = y$ and there is some $\chi \in F^c$ such that $\chi(t_0) = y$;

2. there is some $\chi \in F$ such that $\chi(t_0) = y$ and there is no $\chi \in F^c$ such that $\chi(t_0) = y$; and

3. there is no $\chi \in F$ such that $\chi(t_0) = y$ and there is some $\chi \in F^c$ such that $\chi(t_0) = y$.

Let $y_1^0$, $y_2^0$ and $y_3^0$ be the sets of all $y \in E_{t_0}$ satisfying the first, second and third conditions respectively. Now $\{\chi \in E : \chi(t_0) \in y_2^0\} \subset F$ by definition of $y_2^0$ and $\{\chi \in E : \chi(t_0) \in y_3^0\} \subset F^c$ by definition of $y_3^0$, so let $P_0(F) = P(\chi(t_0) \in y_2^0)$, let $E_1 = E \setminus \{\chi \in E : \chi(t_0) \in y_2^0 \cup y_3^0\}$ and let $I_{\mathbb{Q}}^1 = I_{\mathbb{Q}} \setminus \{t_0\}$.

Now using induction, assume $P_n(F)$, $E_n$ and $I_{\mathbb{Q}}^n$ are known, then pick $t_{n+1} \in I_{\mathbb{Q}}^n$ so we can define corresponding sets to $y_1^0$, $y_2^0$ and $y_3^0$:

$$y_1^{n+1} = \{\{\chi(t_{n+1})\}_{\chi \in E_n} : \chi(t_{n+1}) \in (\{\chi(t_{n+1})\}_{\chi \in F} \cap \{\chi(t_{n+1})\}_{\chi \in F^c})\}$$

$$y_2^{n+1} = \{\{\chi(t_{n+1})\}_{\chi \in E_n} : \chi(t_{n+1}) \in \{\chi(t_{n+1})\}_{\chi \in F} \cap (\{\chi(t_{n+1})\}_{\chi \in F^c})^c\}$$

$$y_3^{n+1} = \{\{\chi(t_{n+1})\}_{\chi \in E_n} : \chi(t_{n+1}) \in (\{\chi(t_{n+1})\}_{\chi \in F})^c \cap \{\chi(t_{n+1})\}_{\chi \in F^c}\}$$

So $\{\chi \in E : \chi(t_{n+1}) \in y_2^{n+1}\} \subset F$ and $\{\chi \in E : \chi(t_{n+1}) \in y_3^{n+1}\} \subset F^c$. Now define $P_{n+1}(F) = P(\chi(t_{n+1}) \in y_2^{n+1})$, let $E_{n+1} = E_n \setminus \{\chi \in E_n : \chi(t_{n+1}) \in y_2^{n+1} \cup y_3^{n+1}\}$ and let $I_{\mathbb{Q}}^{n+1} = I_{\mathbb{Q}}^n \setminus \{t_{n+1}\}$.

So we can compute the sequence $\{P_0(F), P_1(F), \ldots\}$. Now the sum of this sequence is exactly:

$$P(X \in F) = \sum_{i=0}^{\infty} P_i(F)$$

Now we turn to the case of crossing functional random variables. Before a theorem can be

stated we need one more technical definition and two more lemmas.

Definition 3.4 (Smoothing quantiles) A real functional random variable $X : \Omega \to E$ with quantiles $\{Q_\alpha\}_{\alpha \in (0,1)}$ does not have smoothing quantiles iff for every $\alpha \in (0, 1)$ and $t_1 \in I$ such that $Q_\alpha$ is differentiable at $t_1$, there is some $\chi \in E$ and $\delta > 0$ such that $Q_\alpha(t) = \chi(t)$ if $t \in (t_1 - \delta, t_1 + \delta)$.

The functional random variables shown in Figure 3.4 have smoothing quantiles; all other examples of functional random variables depicted in this thesis do not.

Lemma 3.7 If a real functional random variable X : Ω → E is continuously differentiable and

there are no functions χ1 , χ2 ∈ E, and t ∈ I such that χ1 (t) = χ2 (t) and (∂χ1 )(t) = (∂χ2 )(t)

then X is crossing.

Proof Assume X : Ω → E is a continuously differentiable functional random variable and there

are no functions χ1 , χ2 ∈ E, and t ∈ I such that χ1 (t) = χ2 (t) and (∂χ1 )(t) = (∂χ2 )(t). Pick

χ1 , χ2 ∈ E and t ∈ I such that χ1 (t) = χ2 (t). It suffices to show that there is some δ > 0

satisfying the four limbs of Definition 3.3(2).

Now by assumption (∂χ1)(t) ≠ (∂χ2)(t), so assume without loss of generality that (∂χ1)(t) > (∂χ2)(t). Now since χ1 and χ2 are differentiable, χ1(t) = χ2(t) and (∂χ1)(t) > (∂χ2)(t), there must exist δ > 0 such that χ1(x) ≤ χ2(x) if x ∈ (t − δ, t) and χ1(x) ≥ χ2(x) if x ∈ (t, t + δ), so (i) and (iii) are satisfied. Now since χ1(t) = χ2(t) and (∂χ1)(t) > (∂χ2)(t) there must exist x ∈ (t − δ, t) such that χ1(x) < χ2(x) and x ∈ (t, t + δ) such that χ1(x) > χ2(x), so (ii) and (iv) are satisfied and the result follows.

Lemma 3.8 If X : Ω → E is a continuously differentiable functional random variable with

quantiles {Qα }α∈(0,1) , then for every α ∈ (0, 1), the function Qα is differentiable except for at

most countably many points.

Proof We will see that disjoint open intervals can be placed around every point of nondifferentiability. This suffices because every open interval contains a rational number and any

subset of the rationals is at most countable.

Let t1 and t2 be members of the set I such that t1 < t2 and Qα is non-differentiable at t1

and t2 . Now it suffices to show that for every such t1 , t2 we can find t such that t1 < t < t2 as

this permits the specification of the disjoint open intervals (·, t) and (t, ·) containing t1 and t2 .

Suppose, to get a contradiction, that there is no t such that t1 < t < t2 . Now by the assumption

that X is continuously differentiable, to ensure non-differentiability of Qα at t1 and t2 , there

must exist $\chi_1, \chi_2 \in E$ such that

$$\chi_1(t_1) = Q_\alpha(t_1), \qquad \chi_1(t_2) \ne Q_\alpha(t_2), \qquad \chi_2(t_2) = Q_\alpha(t_2), \qquad \chi_2(t_1) \ne Q_\alpha(t_1)$$

But since Qα is continuous (as a consequence of Theorem 3.3) this implies that χ1 and χ2

are discontinuous; but since X is continuous, χ1 and χ2 must be continuous and we have our

contradiction. So there must exist t such that t1 < t < t2 . Similar results to this lemma are

obtained in Goswami & Rao (2004) and Froda (1929).

Now we have sufficient knowledge to prove the second central theorem of this thesis: that

some crossing functional random variables are characterised by their quantiles.

Theorem 3.9 (Quantile characterisation theorem – crossing) If (Ω, F, P) is a probability space and X : (Ω, F) → (E, E) is a real functional random variable such that:

1. X is continuous

2. There are no χ1 , χ2 ∈ E and t ∈ I such that χ1 (t) = χ2 (t) and (∂χ1 )(t) = (∂χ2 )(t);

and

3. X does not have smoothing quantiles.

Then X is uniquely determined by its quantiles {Qα }α∈(0,1) . Note that conditions 1 and 2

imply X is crossing as a consequence of Lemma 3.7.

Proof It suffices to show that: (i) we can construct every $\chi \in E$; and (ii) we can measure any $F \in \mathcal{E}$ with respect to $P$. The proof of (ii) is identical to the constructive proof given for Theorem 3.6; so we will only prove (i).

Pick $\chi \in E$. Let $t_0 = \min(I)$. Now by Definition 3.1, $\chi(t_0) = Q_\alpha(t_0)$ for some $\alpha \in (0, 1)$. Let such an $\alpha$ be $\alpha_0$. Now let $t_1 = \sup\{s \in I : Q_{\alpha_0} \in C^1 \text{ on } [t_0, s)\}$. Then by the assumption that $X$ does not have smoothing quantiles, $\chi(t) = Q_{\alpha_0}(t)$ if $t \in [t_0, t_1]$. Now choose $\alpha_1 \in (0, 1)$ so that the function:

$$Q_{\alpha_0,\alpha_1}(t) = \begin{cases} Q_{\alpha_0}(t) & \text{if } t \in [t_0, t_1) \\ Q_{\alpha_1}(t) & \text{if } t \ge t_1 \end{cases}$$

is differentiable if t ∈ (t0 , t1 ]. Such an α1 must exist because X is continuously differentiable.

Define a1 as the set of all α1 satisfying this condition.

There is no guarantee that {Qα }α∈a1 contains only one function. However, for any α1 ∈ a1

there is some χ2 ∈ E such that Qα0 ,α1 (t) = χ2 (t) for t ∈ [t0 , t1 ], so we are simply

constructing multiple elements of E simultaneously; this is no barrier to the reconstruction

of E.

Now we apply a similar procedure to choose t2 and a2 and define:

\[
Q_{\alpha_0,\alpha_1,\alpha_2}(t) =
\begin{cases}
Q_{\alpha_0}(t) & \text{if } t \in [t_0, t_1) \\
Q_{\alpha_1}(t) & \text{if } t \in [t_1, t_2) \\
Q_{\alpha_2}(t) & \text{if } t \geq t_2
\end{cases}
\]

Then {χ(t)}t∈[t0 ,t2 ] ∈ {Qα0 ,α1 ,α2 (t)}α1 ∈a1 ,α2 ∈a2 ,t∈[t0 ,t2 ] again by the assumption that X

does not have smoothing quantiles. By Lemma 3.8, Qα has only countably many points

of non-differentiability for any α ∈ (0, 1), so we can apply this procedure inductively to

reconstruct χ over its entire domain.

3.2 Empirical functional quantiles

The goal of this section is to describe how the functional quantiles of Definition 3.1 can be

estimated empirically. A natural definition of empirical quantiles is given in Section 3.2.1; and

bias and consistency results for these empirical quantiles are obtained in Section 3.2.2.

3.2.1 Defining empirical quantiles

As before, we turn to the univariate case to find inspiration to define functional empirical quantiles. Unfortunately this is not entirely straightforward because there is a multiplicity of definitions for empirical univariate quantiles; in fact, the R Development Core Team (2011) suggest

there are at least nine ways to estimate univariate quantiles empirically. We will examine only

one simple method. First define the empirical distribution function:

Definition 3.5 Let {X1 , . . . , Xn } be a sample generated by the real random variable X. Then

the empirical distribution function of X is:

\[
\hat{F}_X(t) = \frac{1}{n} \sum_{i=1}^{n} \mathbf{1}\{X_i \leq t\}
\]

The empirical distribution function permits an elegant definition of empirical quantiles:

Definition 3.6 Let {X1 , X2 , . . . , Xn } be a sample generated by the real random variable X and

let F̂X (x) be the empirical distribution function of X based on {X1 , . . . , Xn }. Then the empirical

α-quantile of X is:

Q̂α = inf {x ∈ R : F̂X (x) ≥ α}
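Definitions 3.5 and 3.6 can be illustrated with a short Python sketch. The function names `ecdf` and `empirical_quantile` and the four sample values are assumptions made purely for illustration; they do not appear in the thesis.

```python
def ecdf(sample, x):
    """Empirical distribution function of Definition 3.5 evaluated at x."""
    return sum(1 for xi in sample if xi <= x) / len(sample)


def empirical_quantile(sample, alpha):
    """Empirical alpha-quantile of Definition 3.6: inf{x : ecdf(x) >= alpha}."""
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie in (0, 1)")
    # The empirical distribution function is a step function that only jumps
    # at sample points, so the infimum is attained at the smallest observation
    # whose empirical distribution value reaches alpha.
    for x in sorted(sample):
        if ecdf(sample, x) >= alpha:
            return x


sample = [0.9, 1.2, 1.0, 0.7]  # made-up observations
median = empirical_quantile(sample, 0.5)
```

With these four observations the empirical median under this definition is the second smallest value, 0.9, rather than the midpoint of the two central values that some of the other estimation methods would return.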

So the univariate empirical quantile can be constructed simply by replacing FX with F̂X in

Definition 2.1. A natural extension for functional quantiles is obtained by applying an identical

substitution to Definition 3.1:

Definition 3.7 (Empirical functional quantiles) Let X be a real functional random

variable and let {X1 , X2 , . . . , Xn } be a functional dataset generated by X . Let F̂_{X(t)} be the

marginal empirical distribution function at t ∈ I based on {X1 (t), X2 (t), . . . , Xn (t)}.

Then the empirical α-quantile of the sample {X1 , X2 , . . . , Xn } is:

Q̂α (t) = inf {x ∈ R : F̂_{X(t)} (x) ≥ α} for t ∈ I

Figure 3.7: The figure on the left shows a sample of 3 stocks after a large price decline and the

figure on the right superimposes the empirical functional median, Q̂0.5 (t), of Definition 3.7.

This definition is easier to work with if it is expressed slightly differently (although the

similarity of Definitions 3.1 and 3.7 is concealed):

Lemma 3.10 Let {X1 , X2 , . . . , Xn } be a functional dataset generated by a functional random variable with quantiles {Qα }α∈(0,1) and let {X(1) (t), X(2) (t), . . . , X(n) (t)} be the order statistics

of {X1 (t), X2 (t), . . . , Xn (t)}. Then Definition 3.7 is equivalent to:

Q̂α (t) = X(⌈nα⌉) (t) for t ∈ I

Proof This is an immediate consequence of Definition 3.5 and Definition 3.7.

An example of the application of this definition to a small functional dataset is shown in

Figure 3.7. In Figure 3.8 the definition is applied to the full NASDAQ stock price dataset

introduced in Section 1.5.
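The order-statistic form of Lemma 3.10 translates directly into a pointwise computation. The following is a minimal sketch, assuming each curve has been observed on a common finite grid of time points and is stored as a list of values. The function name `empirical_functional_quantile`, the small floating-point tolerance and the three toy curves are illustrative assumptions, not part of the thesis.

```python
import math


def empirical_functional_quantile(curves, alpha):
    """Pointwise empirical alpha-quantile: the ceil(n * alpha)-th order
    statistic of the curve values at each grid point (Lemma 3.10)."""
    if not 0 < alpha < 1:
        raise ValueError("alpha must lie in (0, 1)")
    n = len(curves)
    # The tolerance guards against floating-point products such as
    # 10 * 0.3 evaluating to slightly more than 3 (an assumption of this
    # sketch, not part of the definition).
    r = max(1, math.ceil(n * alpha - 1e-12))
    grid_size = len(curves[0])
    return [sorted(curve[j] for curve in curves)[r - 1]
            for j in range(grid_size)]


# Three made-up curves observed at three common time points.
curves = [
    [1.0, 0.9, 1.3],
    [1.0, 1.1, 0.7],
    [1.0, 0.8, 1.2],
]
median_curve = empirical_functional_quantile(curves, 0.5)
```

In this toy example the median curve takes its value from the first curve at the second grid point and from the third curve at the last grid point, so an empirical functional quantile need not coincide with any single curve in the sample.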

3.2.2 Properties of empirical quantiles

This section develops bias and consistency results for the empirical quantiles defined in Definition

3.7. We will see that if certain weak conditions are satisfied then {Q̂α }α∈(0,1) are consistent

estimates for the functional quantiles of Definition 3.1 although they are usually biased estimates

for finite sample sizes. The proofs presented in this section are a relatively straightforward

extension of results obtained for univariate quantiles and order statistics. David (1970) was the

reference consulted for the univariate results. Some continuity and differentiability properties

for functional quantiles are also established.

Figure 3.8: Empirical functional quantiles. This figure plots the quartiles of the NASDAQ stock

price data calculated according to Definition 3.7. This plot may be compared with Figure 1.6.

To compute bias results exactly, we must make assumptions about the marginal distribution

of the functional random variable. We consider a functional random variable with marginal

uniform distribution; these results will assist in establishing general asymptotic results.

Lemma 3.11 (Marginal uniform distribution bias) If {X1 , X2 , . . . , Xn } is a functional dataset

generated by a functional random variable with marginal uniform distribution (that is X (t) ∼

U[0, 1] for t ∈ I) then {Q̂α }α∈(0,1) are biased estimates of {Qα }α∈(0,1) .
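As a numerical companion to Lemma 3.11, the sketch below evaluates the expectation of the ⌈nα⌉-th order statistic of n independent U[0, 1] observations exactly, using the standard order-statistic density and the Beta integral. The function name `expected_uniform_quantile` and the choice n = 10, α = 1/2 are assumptions made for illustration only.

```python
from fractions import Fraction
from math import ceil, comb, factorial


def expected_uniform_quantile(n, alpha):
    """Exact E[X_(r)] with r = ceil(n * alpha) for n i.i.d. U[0, 1] draws."""
    alpha = Fraction(alpha)
    r = ceil(n * alpha)
    # Beta integral: int_0^1 x^r (1 - x)^(n - r) dx = r! (n - r)! / (n + 1)!
    beta_integral = Fraction(factorial(r) * factorial(n - r), factorial(n + 1))
    # Order-statistic density constant n * C(n - 1, r - 1) times the integral.
    return n * comb(n - 1, r - 1) * beta_integral


n, alpha = 10, Fraction(1, 2)            # illustrative choice
expectation = expected_uniform_quantile(n, alpha)
bias = expectation - alpha               # true marginal quantile is alpha
```

For this choice the expectation is 5/11 while the marginal quantile is 1/2, so the difference is nonzero; the same function can be used to tabulate the difference for other values of n and α.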

Proof It suffices to show E(Q̂α (t)) ≠ Qα (t). So pick α ∈ (0, 1) and t ∈ I. First define the

function f⌈nα⌉ as the density function of X(⌈nα⌉) (t). And compute:

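\begin{align*}
E\,\hat{Q}_\alpha(t) &= E\,X_{(\lceil n\alpha \rceil)}(t) \\
&= \int_{-\infty}^{\infty} x\, f_{\lceil n\alpha \rceil}(x)\,dx \\
&= n \binom{n-1}{\lceil n\alpha \rceil - 1} \int_{-\infty}^{\infty} x \left[F_{X(t)}(x)\right]^{\lceil n\alpha \rceil - 1} \left[1 - F_{X(t)}(x)\right]^{n - \lceil n\alpha \rceil} dx \\
&= n \binom{n-1}{\lceil n\alpha \rceil - 1} \int_{0}^{1} x\, x^{\lceil n\alpha \rceil - 1} [1 - x]^{n - \lceil n\alpha \rceil}\,dx \\
&= \frac{n \binom{n-1}{\lceil n\alpha \rceil - 1}}{(n+1) \binom{n}{\lceil n\alpha \rceil}} \\
&= \frac{\lceil n\alpha \rceil}{n+1} \\
&< \frac{(n+1)\alpha}{n+1} = \alpha
\end{align*}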

The first two equalities follow by definition; the third uses the following result: the probability

that an order statistic X(r) (t) falls within the small ball (x − dx, x + dx) is exactly equal to the
