Introduction to Nonparametric Statistics Stat 425


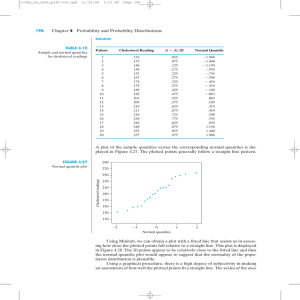

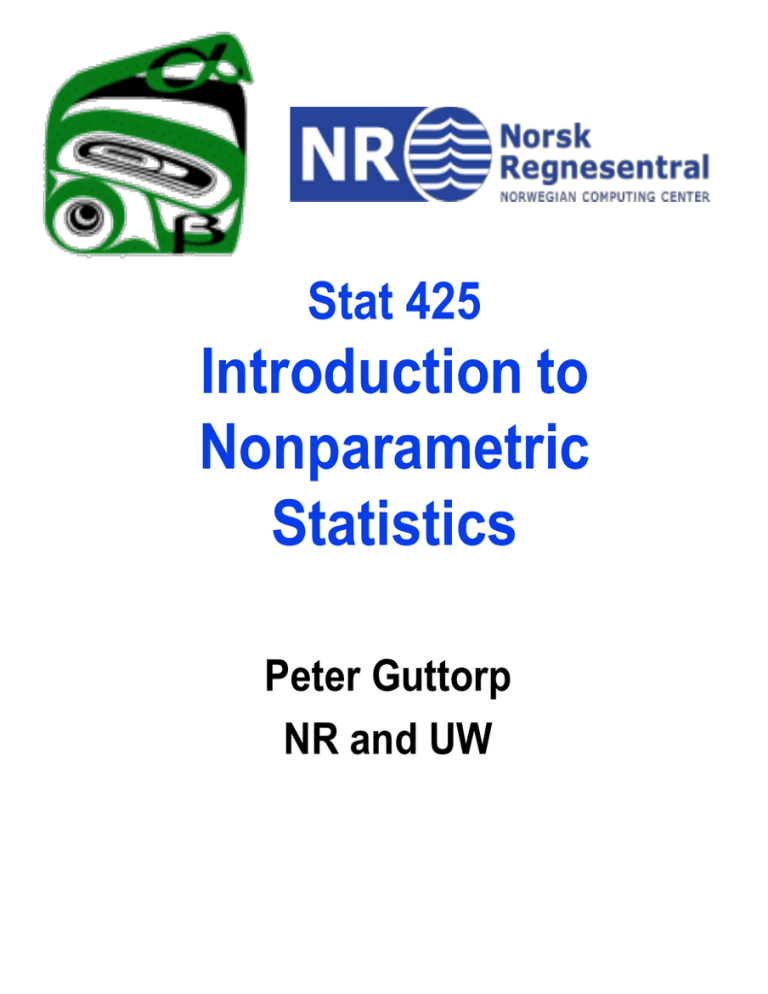

Stat 425 Introduction to Nonparametric Statistics
Peter Guttorp, NR and UW

Administrative issues
Web page: http://www.stat.washington.edu/peter/425/
Course grade based on participation (20%), homework (30%), midterm (25%) and final (25%). Both exams will be in class.
Computing corner on Tuesdays.
Homework due in class on Thursdays.
Office hour: Tu 1-2, CMU B023

What the course is (and is not) about
Parametric statistics: the data come from a family of distributions described (indexed) by a parameter θ.
Under some conditions we can determine good statistical procedures (tests, estimates).
How do you know if the conditions are met? What if they are not met?

An example
Test: t-test
CI:
Estimator:
Assumptions:

Topics
1. Checking distributional assumptions
Empirical distribution function
Kolmogorov-Smirnov statistic
QQ-plot
The importance of assumptions
Shift function
Density estimation
Testing for independence

Topics, cont.
2. Ranks and order statistics
When t-tests do not work
Confidence sets for the median
Comparing the location of two or more distributions
Nonparametric approaches to bivariate data

Nonparametric
Distribution-free

Is this an exponential sample?
[Figure: histogram titled "Exponential sample"; x-axis: exp, y-axis: Density]

The empirical distribution function
For an event A, 1(A) = 1 if A happens, 0 otherwise.
Let $X_1, \dots, X_n$ be iid with distribution function (cdf) $F(x)$. The empirical distribution function (edf) is defined as
$F_n(x) = \frac{1}{n} \sum_{i=1}^{n} 1(X_i \le x)$

Properties of edf
1. The edf is a cumulative distribution function.
2. It is an unbiased estimator of the true cdf.
3. It is consistent and asymptotically normal.

How far is the edf from the cdf?
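The edf definition and the question of how far it sits from the true cdf can be sketched in a few lines. This is a minimal illustration, not the course's own code: the helper names `make_edf` and `exp_cdf`, the seed, the rate 1 and the evaluation grid are all assumptions made for the example.

```python
import bisect
import math
import random


def make_edf(sample):
    """Return F_n, the empirical distribution function of `sample`."""
    xs = sorted(sample)
    n = len(xs)

    def edf(x):
        # bisect_right counts the observations with X_i <= x
        return bisect.bisect_right(xs, x) / n

    return edf


def exp_cdf(x, rate=1.0):
    """Cdf of the exponential distribution (rate 1 is an assumption here)."""
    return 1.0 - math.exp(-rate * x) if x > 0 else 0.0


random.seed(425)  # arbitrary seed, for reproducibility only
sample = [random.expovariate(1.0) for _ in range(100)]
F_n = make_edf(sample)

# Differences F_n(x) - F_0(x) on a grid, the quantity shown in a residual plot
grid = [i / 10 for i in range(51)]  # 0.0, 0.1, ..., 5.0
residuals = [F_n(x) - exp_cdf(x) for x in grid]
print(max(abs(r) for r in residuals))
```

Note that the largest residual on a grid can only understate the true supremum of the difference, since the extreme values occur at the jump points of the edf.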
[Figure: edf of an "Exponential sample of size 100" (top) and "Residual plot" (bottom; y-axis: Difference)]

The Kolmogorov distance
$d_K(F_n, F_0) = \sup_x |F_n(x) - F_0(x)| = \max_{1 \le i \le n} \max\{\, i/n - F_0(x_{(i)}),\ F_0(x_{(i)}) - (i-1)/n \,\}$
where $x_{(1)} < x_{(2)} < \dots < x_{(n)}$ is the ordered sample.

Another distance: Cramér-von Mises
$d_{CvM}(F_n, F_0) = \int (F_n(x) - F_0(x))^2 \, dF_0(x)$

[Figure: "Kolmogorov distance for exponential sample"; the marked distance is 0.122]

Why $d_K$ does not depend on $F_0$
Consider transforming (monotonically) the x-axis. Does it change the distance?
In particular, if $X \sim F_0$, what is the distribution of $Y = F_0(X)$? For continuous $F_0$, $Y$ is uniform on (0,1).
Thus the distribution of $d_K(F_n, F_0)$ is the same as the distribution of $d_K(G_n, x)$, where $G_n$ is the edf of a sample of size $n$ from a uniform distribution on (0,1) and $x$ stands for the uniform cdf.

Distribution-free

The Kolmogorov-Smirnov test
Let $X \sim F$. To test $H_0: F = F_0$ we
(1) Estimate $F$ by $F_n$
(2) Calculate $d_n = d_K(F_n, F_0)$
(3) If $d_n$ is too large, reject $H_0$
One can also do a two-sample test, where $X \sim F$, $Y \sim G$, and we test $H_0: F = G$ by rejecting for large values of $d_K(F_n, G_m)$.

Limiting distribution
How large is large? It turns out that, under $H_0$,
$P(\sqrt{n}\, d_n \le t) \to K(t)$ as $n \to \infty$,
where
$K(t) = 1 - 2 \sum_{k=1}^{\infty} (-1)^{k-1} e^{-2 k^2 t^2}$
This works for n ≥ 80; for smaller n there are tables and computer programs.

Confidence band for F
For an unknown $F$, we use $d_K(F_n, F)$ as a pivot:
$P(d_K(F_n, F) \le c_\alpha) = 1 - \alpha$, so that $F_n(x) - c_\alpha \le F(x) \le F_n(x) + c_\alpha$ for all $x$,
where $c_\alpha$ is the $1 - \alpha$ quantile of the distribution of $d_K(F_n, F)$.
This confidence band is simultaneous (valid for all x).
In particular, one can see if different candidate F fall inside the band.
[Figure: confidence band for F]

Quantile function
For a continuous, strictly increasing $F$ there is a unique inverse function $F^{-1}$. For a step function, like $F_n$, it is not unique, so we define the empirical quantile function as
$F_n^{-1}(p) = \inf\{x : F_n(x) \ge p\} = x_{(i)}$ for $(i-1)/n < p \le i/n$

Graphically
[Figure: two panels, "Empirical df" and "Empirical quantile function", for the sample -1.55, -0.67, -0.39, 0.60]

The q-q-plot
The q-q plot is plotting $(F_n^{-1}(p), F_0^{-1}(p))$ for p between 0 and 1.
A common choice of $F_0$ is the normal distribution, but we can test any specified distribution.
We can get a simultaneous confidence band for $F_0^{-1}$, the same way we got it for the cdf.
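The Kolmogorov distance, the large-sample test and the confidence band described above can be sketched as follows. This is an illustration under stated assumptions, not the course's implementation: the function names are hypothetical, the limiting cdf is the standard Kolmogorov series truncated at 100 terms, the critical value is found by simple bisection, and the exponential null with rate 1 is chosen only for the example.

```python
import math
import random


def kolmogorov_distance(sample, cdf):
    """sup_x |F_n(x) - F_0(x)|, evaluated at the order statistics."""
    xs = sorted(sample)
    n = len(xs)
    d = 0.0
    for i, x in enumerate(xs, start=1):
        u = cdf(x)
        # F_n jumps from (i-1)/n to i/n at x_(i); check both sides of the jump
        d = max(d, i / n - u, u - (i - 1) / n)
    return d


def kolmogorov_cdf(t, terms=100):
    """Limiting cdf: 1 - 2 * sum_{k>=1} (-1)^(k-1) exp(-2 k^2 t^2), truncated."""
    if t <= 0:
        return 0.0
    s = sum((-1) ** (k - 1) * math.exp(-2 * k * k * t * t)
            for k in range(1, terms + 1))
    return min(1.0, max(0.0, 1.0 - 2.0 * s))  # clamp rounding error


def band_halfwidth(n, alpha=0.05):
    """Asymptotic half-width c with P(d_K <= c) close to 1 - alpha."""
    lo, hi = 0.2, 3.0
    for _ in range(60):  # bisection on the monotone limiting cdf
        mid = (lo + hi) / 2
        if kolmogorov_cdf(mid) < 1 - alpha:
            lo = mid
        else:
            hi = mid
    return hi / math.sqrt(n)


random.seed(425)  # arbitrary seed, for reproducibility only
n = 100
sample = [random.expovariate(1.0) for _ in range(n)]
d_n = kolmogorov_distance(sample, lambda x: 1.0 - math.exp(-x) if x > 0 else 0.0)
p_value = 1.0 - kolmogorov_cdf(math.sqrt(n) * d_n)
print(d_n, p_value, band_halfwidth(n))
```

The band is then $F_n(x) \pm$ `band_halfwidth(n)`, clipped to [0, 1] if desired; a candidate cdf that leaves the band at any x is rejected at the corresponding level.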
[Figure: "Theoretical normal QQ-plots"; four panels titled Exponential, Cauchy, Uniform and Normal; x-axis: Theoretical Quantiles, y-axis: Sample Quantiles]

[Figure: "Exponential QQ-plot with confidence band"; x-axis: Exponential Quantiles, y-axis: Sample Quantiles]
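The coordinates behind an exponential q-q plot like the one pictured can be computed as in the sketch below. Several choices here are assumptions rather than anything stated in the slides: the helper names, the plotting positions $p_i = (i - 0.5)/n$, the rate-1 exponential reference, and the ordering of each pair as (theoretical quantile, sample quantile) to match the axes of the figures.

```python
import math
import random


def empirical_quantile(sample, p):
    """Smallest x with F_n(x) >= p, i.e. x_(ceil(n p)), for 0 < p <= 1."""
    xs = sorted(sample)
    n = len(xs)
    i = max(1, math.ceil(n * p))
    return xs[i - 1]


def exp_quantile(p, rate=1.0):
    """Quantile function of the exponential distribution: -log(1 - p) / rate."""
    return -math.log1p(-p) / rate


def qq_points(sample, quantile_fn):
    """Pairs (F_0^{-1}(p_i), F_n^{-1}(p_i)) at p_i = (i - 0.5) / n."""
    n = len(sample)
    ps = [(i - 0.5) / n for i in range(1, n + 1)]
    return [(quantile_fn(p), empirical_quantile(sample, p)) for p in ps]


random.seed(425)  # arbitrary seed, for reproducibility only
sample = [random.expovariate(1.0) for _ in range(100)]
points = qq_points(sample, exp_quantile)
print(points[:3])
```

If the sample really comes from the reference distribution, the points should lie close to the line y = x; systematic curvature suggests a different shape, as in the Cauchy and uniform panels of the normal q-q plots.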