Quality assessment pilot study

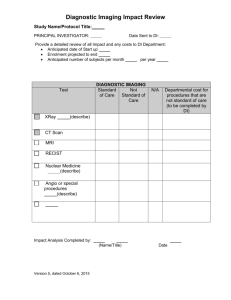


Pilot study – quality assessment strategy

1 Introduction

This systematic review evaluates diagnostic and therapeutic impact rather than diagnostic accuracy. The existing quality assessment tools, QUADAS and QUADAS 2, are designed for the quality assessment of diagnostic accuracy studies and are therefore not appropriate for this review. The rapid scoping exercise returned only observational studies of the “before-after” design. No other study designs, such as randomised controlled trials, were identified.

In 2008, Albon et al carried out a similar systematic review of the effectiveness of structural neuroimaging in psychosis [1]. In that context, structural neuroimaging was either computed tomography (CT) or magnetic resonance imaging (MRI). Their search strategy likewise returned only observational before-after studies, and they found that no validated quality assessment tool existed for systematic reviews of this study design. In response, two of the authors, Meads and Davenport, reported the development and validation of a quality assessment tool for before-after studies [2]. This was an adaptation of the original QUADAS tool.

The aim of this pilot study was to trial the quality assessment tool of Meads and Davenport using the rapid scope papers identified for the current systematic review. In order to maintain validity, any modifications would be restricted to presentation only, thus preserving the content of the tool developed by Meads and Davenport.

2 Materials and methods

The rapid scoping exercise identified six studies that met the inclusion criteria. In a further refinement of the inclusion criteria, the study by Fortin [3] was removed from the rapid scope studies because it concerned only implants placed in the posterior maxilla. Since other studies had been identified which included the anterior mandible, it was considered appropriate to remove this study from further consideration.
The remaining five studies were: Diniz 2008 [4], Frei 2004 [5], Reddy 1994 [6], Schropp 2001 [7] and Schropp 2011 [8].

Meads and Davenport’s modified quality assessment tool removed two questions from the original QUADAS and added a further four. The original QUADAS form was therefore modified to represent Meads and Davenport’s tool. This is reproduced in Appendix A. First, the original numbers were retained from QUADAS and the new questions, as in Meads and Davenport, were assigned letters A to D. The QUADAS responses “Yes”, “No” or “Unclear” were also retained. As a preliminary modification of presentation, the questions were renumbered using roman numerals to distinguish them from the original source numbering. This tool was then used to assess the quality of the five rapid scope studies.

3 Results

Several problems were identified.

Questions B and D, which were new questions added by Meads and Davenport, did not lend themselves to a “Yes”, “No” or “Unclear” answer. For example, question D asks, “Who performed the clinical evaluation and image analysis?” Furthermore, in the original QUADAS tool, a single box is available for each question in which to answer “Yes”, “No” or “Unclear” (Appendix B). These problems prevented a visual interpretation of the quality assessment.

Some items did not take into account that the question may not be applicable. For example, QUADAS question 14 is, “Were withdrawals from the study explained?” If there had been no withdrawals from the study, the answer would not fall into one of “Yes”, “No” or “Unclear”. It was therefore decided to include a further category of “Not applicable”. Similar considerations applied to QUADAS question 13, “Were uninterpretable/intermediate imaging results reported?”, and to Meads and Davenport’s question B, “What was the explanation for patients who did not receive CT or MRI?”

To address these problems, the form was modified as follows.

First, separate boxes were included for each of “Not applicable (N/A)”, “Yes”, “Unclear” and “No”. The answer “Unclear” was considered to be best placed between “Yes” and “No”, allowing visual interpretation. Further, the answer “N/A” was considered to be best placed separately, so that it would not form part of the interpretation.

In addition, a method was developed to allow the two questions that could not be answered in this way to be interpreted alongside the “Yes”, “Unclear” and “No” questions. First, these two questions were placed in a separate section at the foot of the form. Secondly, a green to red gradient was placed behind the answer boxes so that a tick mark could be placed at any point along it. Green would signify an answer consistent with good study quality and red an answer consistent with poor study quality. The gradient was extended behind all of the “Yes”, “Unclear” and “No” questions so that answers from all sections of the form could be interpreted visually with a common measure. This also separated the “N/A” answers, visually removing them from consideration in the quality assessment. It was, of course, appreciated that this visual guide would form only part of the quality assessment and that some questions would carry greater weight than others.

Question 5 from QUADAS is, “Did the whole sample or a random selection of the sample receive verification using a reference standard of diagnosis?” At first reading, this did not seem to require a “Yes”, “No” or “Unclear” answer. On further consideration, however, it was indeed such a question. Nonetheless, it was felt to be helpful to include the following clarification: “Yes for whole sample or random selection. No if neither.”

In the QUADAS tool for quality assessment of diagnostic accuracy tests, an “index test” is compared with a “reference standard”. In the context of diagnostic accuracy, the reference standard is the current gold standard test against which the new index test is assessed. For the purposes of quality assessment in their neuroimaging study, Meads and Davenport considered the “before” diagnostic strategy, conventional neurological examination, to be the index test. They considered the “after” strategy, CT or MRI, to be the reference standard on the basis that a reference standard is defined as the best test practically available. It seemed that similar considerations would apply in the current review: conventional imaging should be seen as the index test and three dimensional imaging as the reference standard. Nevertheless, this could be confusing in the form, where an assessor would have to remember which was the reference standard and which the index test. Therefore, in the quality assessment form, it was decided to replace the words “index test” with “conventional imaging” and “reference standard” with “three dimensional imaging”.

When completing the form it was found that, on occasion, it was necessary to consult the guidance notes given with the QUADAS quality assessment tool. The renumbering of the questions made it difficult to find the correct section of these notes. Therefore, the original numbering system from QUADAS and from Meads and Davenport was re-introduced. To avoid confusion for future users, an explanatory note was added at the foot of the form. The amended quality assessment form is shown in Appendix C.

4 Conclusion and discussion

The quality assessment tool of Meads and Davenport, with the modified presentation, does seem to capture the outcome of the assessment visually.
This should now be trialled with a small number of co-reviewers to assess its clarity, ease of use and effectiveness.

5 Post script

After discussion with co-authors, it was felt helpful to include an overall subjective score for the quality assessment. This was added to the form using the same visual format that had been established. The final form is presented in Appendix D.

Appendix A

Modified QUADAS according to Meads & Davenport

Author:
Year:
Study title:

| No. | Item | Yes / No / Unclear |
|---|---|---|
| 1 | Was the spectrum of patients representative of patients who will receive the test in practice? | |
| 2 | Were the selection criteria clearly described? | |
| A | Were patients recruited consecutively? | |
| 4 | Is the period between reference standard and index test short enough to be reasonably sure that the target condition did not change between the two tests? | |
| 5 | Did the whole sample or a random selection of the sample receive verification using a reference standard of diagnosis? | |
| 6 | Did the patients receive the same reference standard regardless of index test? | |
| 8 | Was the execution of the index test described in sufficient detail to permit replication of the test? | |
| 9 | Was the execution of the reference standard described in sufficient detail to permit its replication? | |
| D | Who performed the clinical evaluation and image analysis? | |
| 10 | Were the index test results interpreted without knowledge of the results of the reference standard? | |
| C | Was the study and/or collection of clinical variables conducted prospectively? | |
| 11 | Were the reference standard results interpreted without knowledge of the index test? | |
| 12 | Were the same clinical results available when test results were interpreted as would be available when the test is used in practice? | |
| 13 | Were uninterpretable/intermediate test results reported? | |
| 14 | Were withdrawals from the study explained? | |
| B | What was the explanation for patients who did not receive CT or MRI? | |

Modified QUADAS according to Meads & Davenport (renumbered)

Author:
Year:
Study title:

| No. | Item | Yes / No / Unclear |
|---|---|---|
| I | Was the spectrum of patients representative of patients who will receive the test in practice? | |
| II | Were the selection criteria clearly described? | |
| III | Were patients recruited consecutively? | |
| IV | Is the period between reference standard and index test short enough to be reasonably sure that the target condition did not change between the two tests? | |
| V | Did the whole sample or a random selection of the sample receive verification using a reference standard of diagnosis? | |
| VI | Did the patients receive the same reference standard regardless of index test? | |
| VII | Was the execution of the index test described in sufficient detail to permit replication of the test? | |
| VIII | Was the execution of the reference standard described in sufficient detail to permit its replication? | |
| IX | Who performed the clinical evaluation and image analysis? | |
| X | Were the index test results interpreted without knowledge of the results of the reference standard? | |
| XI | Was the study and/or collection of clinical variables conducted prospectively? | |
| XII | Were the reference standard results interpreted without knowledge of the index test? | |
| XIII | Were the same clinical results available when test results were interpreted as would be available when the test is used in practice? | |
| XIV | Were uninterpretable/intermediate test results reported? | |
| XV | Were withdrawals from the study explained? | |
| XVI | What was the explanation for patients who did not receive 3 dimensional imaging? | |

Appendix B

Original QUADAS quality assessment form

Appendix C

Quality Assessment Tool (Modified from QUADAS 1 by Meads & Davenport)

Author:
Year:
Study title:

| No. | Item | N/A | Yes | Unclear | No |
|---|---|---|---|---|---|
| 1 | Was the spectrum of patients representative of patients who will receive imaging in practice? | | | | |
| 2 | Were the selection criteria clearly described? | | | | |
| 4 | Is the period between conventional imaging and 3D imaging short enough to be reasonably sure that the target condition did not change between the two tests? | | | | |
| 5 | Did the whole sample or a random selection of the sample receive verification using a reference standard of diagnosis? (Yes for whole sample or random selection. No if neither.) | | | | |
| 6 | Did the patients receive the same 3D imaging regardless of conventional imaging? | | | | |
| 8 | Was the execution of the conventional imaging described in sufficient detail to permit replication of the test? | | | | |
| 9 | Was the execution of the 3D imaging described in sufficient detail to permit its replication? | | | | |
| 10 | Were the conventional imaging results interpreted without knowledge of the results of the 3D imaging? | | | | |
| 11 | Were the 3D imaging results interpreted without knowledge of the conventional imaging? | | | | |
| 12 | Were the same clinical results available when imaging results were interpreted as would be available when the imaging is used in practice? | | | | |
| 13 | Were uninterpretable/intermediate imaging results reported? | | | | |
| 14 | Were withdrawals from the study explained? | | | | |
| A | Were patients recruited consecutively? | | | | |
| C | Was the study and/or collection of clinical variables conducted prospectively? | | | | |

| No. | Item | Response |
|---|---|---|
| B | What was the explanation for patients who did not receive 3D imaging? | (Green for good quality, red for poor quality.) |
| D | Who performed the clinical evaluation and image analysis? | (Green for good quality, red for poor quality.) |

Numbering is unchanged from original sources. Numbered items are from QUADAS 1. Questions 3 & 7 were removed by Meads and Davenport. Letters are additional questions from Meads and Davenport.

Appendix D

Quality Assessment Tool (Modified from QUADAS 1 by Meads & Davenport)

Author:
Year:
Study title:

| No. | Item | N/A | Yes | Unclear | No |
|---|---|---|---|---|---|
| 1 | Was the spectrum of patients representative of patients who will receive imaging in practice? | | | | |
| 2 | Were the selection criteria clearly described? | | | | |
| 4 | Is the period between conventional imaging and 3D imaging short enough to be reasonably sure that the target condition did not change between the two tests? | | | | |
| 5 | Did the whole sample or a random selection of the sample receive verification using a reference standard of diagnosis? (Yes for whole sample or random selection. No if neither.) | | | | |
| 6 | Did the patients receive the same 3D imaging regardless of conventional imaging? | | | | |
| 8 | Was the execution of the conventional imaging described in sufficient detail to permit replication of the test? | | | | |
| 9 | Was the execution of the 3D imaging described in sufficient detail to permit its replication? | | | | |
| 10 | Were the conventional imaging results interpreted without knowledge of the results of the 3D imaging? | | | | |
| 11 | Were the 3D imaging results interpreted without knowledge of the conventional imaging? | | | | |
| 12 | Were the same clinical results available when imaging results were interpreted as would be available when the imaging is used in practice? | | | | |
| 13 | Were uninterpretable/intermediate imaging results reported? | | | | |
| 14 | Were withdrawals from the study explained? | | | | |
| A | Were patients recruited consecutively? | | | | |
| C | Was the study and/or collection of clinical variables conducted prospectively? | | | | |

| No. | Item | Response |
|---|---|---|
| B | What was the explanation for patients who did not receive 3D imaging? | (Green for good quality, red for poor quality.) |
| D | Who performed the clinical evaluation and image analysis? | (Green for good quality, red for poor quality.) |

Numbering is unchanged from original sources. Numbered items are from QUADAS 1. Questions 3 & 7 were removed by Meads and Davenport. Letters are additional questions from Meads and Davenport.

Overall subjective quality assessment: (Green for good quality, red for poor quality.)

References

1. Albon E, Tsourapas A, Frew E, Davenport C, Oyebode F, Bayliss S et al. Structural neuroimaging in psychosis: a systematic review and economic evaluation. Health technology assessment 2008; 12:iii-iv, ix-163.
2. Meads CA, Davenport CF. Quality assessment of diagnostic before-after studies: development of methodology in the context of a systematic review. BMC medical research methodology 2009; 9:3.
3. Fortin T, Camby E, Alik M, Isidori M, Bouchet H. Panoramic Images versus Three-Dimensional Planning Software for Oral Implant Planning in Atrophied Posterior Maxillary: A Clinical Radiological Study. Clinical implant dentistry and related research 2011;
4. Diniz AF, Mendonca EF, Leles CR, Guilherme AS, Cavalcante MP, Silva MA. Changes in the pre-surgical treatment planning using conventional spiral tomography. Clinical oral implants research 2008; 19:249-253.
5. Frei C, Buser D, Dula K. Study on the necessity for cross-section imaging of the posterior mandible for treatment planning of standard cases in implant dentistry. Clinical oral implants research 2004; 15:490-497.
6. Reddy MS, Mayfield-Donahoo T, Vanderven FJ, Jeffcoat MK. A comparison of the diagnostic advantages of panoramic radiography and computed tomography scanning for placement of root form dental implants. Clinical oral implants research 1994; 5:229-238.
7. Schropp L, Wenzel A, Kostopoulos L. Impact of conventional tomography on prediction of the appropriate implant size. Oral surgery, oral medicine, oral pathology, oral radiology, and endodontics 2001; 92:458-463.
8. Schropp L, Stavropoulos A, Gotfredsen E, Wenzel A. Comparison of panoramic and conventional cross-sectional tomography for preoperative selection of implant size. Clinical oral implants research 2011; 22:424-429.