Neural Networks

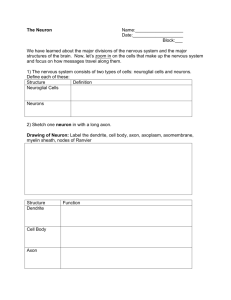

Language Project

Neural Network
- Neural networks have mass appeal.
- They simulate characteristics of the brain.
- Nodes are joined by weighted connections.

A neural network consists of four main parts:
1. Processing units.
2. Weighted interconnections between the processing units, which determine how the activation of one unit becomes input to another unit.
3. An activation rule, which acts on the set of input signals at a unit to produce a new output signal, or activation.
4. Optionally, a learning rule that specifies how to adjust the weights for a given input/output pair.

Learning and Predicting
Learning
- The learning method is back propagation.
- The error is calculated at the result nodes. We compare the expected value at the result node with the value produced by the output node (the last node), and the difference is the error.
- This error is then fed backward through the network.
- The weights are adjusted to reduce the error.

Predicting
- Prediction is a sum of weighted inputs.
- Each dendrite holds an input value.
- The input multiplied by the dendrite's weight gives that dendrite's contribution.
- The sum of all contributions gives the neuron's total activation.

Parameters
- Internal parameters: learning rate, threshold
- Dendrite initial value: Value, Rand
- Activation formula: Triangle, Logistic, linear

Elements
- sense: gets input from the file; inputs are read in sequence.
- dendrite: connects neurons and has a weight, a floating-point value between 0 and 1.
- soma: the body of a neuron.
- synapse: a connection; it determines which dendrite goes to which neuron.
- result: results are supplied to this node.

Parts of the Language
The derived NN engine consists of three parts:
1. Framework initialization: links the necessary files.
2. Topology implementation: the NNL-specific code.
3. Processor: processes the input using the topology.

Properties
- The language is imperative.
- Keywords are not case-sensitive.
- IDs are case-sensitive.
- The last neuron of a layer has a one-to-one relationship with a result.
- Two neural networks can be implemented, fed the same sense values, and their different results compared.
- Readable and writable.
- Functions are used without declarations.
- Functions can take any values.
- Errors are caught during semantic analysis.

Input and Output
Network input
- Format: in in in … in # out out … out
- The input file is checked for errors.
Network output
- The output file has the same format.
- Empty output fields are populated with predictions.

Grammar Used

    A  -> O ; A | D ; A | N ; A | Y ; A | S ; A | R ; A | ε
    O  -> soma I F          (body of a neuron)
    E  -> I E | I           (list of ids)
    I  -> id
    Y  -> synapse I I       (a connection)
    F  -> function P
    P  -> ( P' )
    P' -> Z , P' | Z
    D  -> dendrite I F      (input to a neuron)
    N  -> neuron E          (a neuron composed of a soma and dendrites)
    S  -> sense Z I         (information is supplied to this node)
    R  -> result Z I        (results are supplied to this node)
    Z  -> number

Sample Code

    // create first neuron (Logistic and Triangle are functions)
    soma s1 Logistic(10, 2, 5);
    dendrite d1 Value(1);
    dendrite d2 Rand(1,2);
    neuron n1 s1 d1 d2 ;
    // create second neuron
    soma s2 Triangle(3);
    dendrite d3 Value(1);
    dendrite d4 Rand(1,2);
    neuron n2 s2 d3 d4 ;
    // create third neuron
    soma s3 Triangle(3);
    dendrite d5 Value(4);
    dendrite d6 Rand(1,2);
    neuron n3 s3 d5 d6;
    // connect neurons
    synapse n2 d2;
    synapse n3 d1;
    // input
    sense 1 d1;
    sense 2 d3;
    sense 3 d4;
    // output
    result 1 n1;

Engine
- Performs analysis on the neurons:
  - detects the layer of each neuron;
  - orders the neurons in a list by layer.
- Processes the neurons:
  - cascades through the layers to get predictions;
  - performs back propagation.
- Input data: streams data from the input file and checks it for errors.
- Output data: writes the results.

Traversing Neurons

Compiler
Lexical analyzer
- Removes comments.
- Conditions the code.
- Tokenizes.
Parser
- Recursive descent.
- Does not perform static semantic checks.
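The lexical analyzer and recursive-descent parser described above could look roughly like the following Java sketch. It is a hypothetical illustration, not the project's compiler. The class and method names (`NnlParser`, `tokenize`, `parse`) are invented here.

The sketch makes a few assumptions:
- Comments are `//` line comments, as in the sample code.
- Keywords are matched case-insensitively and ids case-sensitively, as the Properties slide states.
- The `neuron` statement takes a list of ids (`neuron n1 s1 d1 d2`), as the sample code shows.

Like the parser in the slides, it checks syntax only. Whether an id was actually declared is left to semantic analysis.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Set;

/** Hypothetical sketch: lexer plus recursive-descent recognizer for the NNL grammar. */
public class NnlParser {
    // Keywords are compared in lower case; ids keep their original case.
    private static final Set<String> KEYWORDS =
            Set.of("soma", "dendrite", "neuron", "synapse", "sense", "result");

    private final List<String> tokens;
    private int pos = 0;

    private NnlParser(List<String> tokens) { this.tokens = tokens; }

    /** Lexical analysis: drop // comments, pad punctuation with spaces, split on whitespace. */
    public static List<String> tokenize(String source) {
        List<String> out = new ArrayList<>();
        for (String line : source.split("\n")) {
            int comment = line.indexOf("//");
            if (comment >= 0) line = line.substring(0, comment);
            line = line.replaceAll("([(),;])", " $1 ");
            for (String t : line.trim().split("\\s+")) {
                if (!t.isEmpty()) out.add(t);
            }
        }
        return out;
    }

    /** Syntax check only: true if the whole program matches A. Ids are not resolved here. */
    public static boolean parse(String source) {
        NnlParser p = new NnlParser(tokenize(source));
        try {
            p.program(); // consumes every token or throws
            return true;
        } catch (IllegalStateException e) {
            return false;
        }
    }

    // A -> O ; A | D ; A | N ; A | Y ; A | S ; A | R ; A | ε
    private void program() {
        while (peek() != null) {
            String keyword = next().toLowerCase(Locale.ROOT);
            switch (keyword) {
                case "soma":      // O -> soma I F
                case "dendrite":  // D -> dendrite I F
                    id();
                    function();
                    break;
                case "neuron":    // N -> neuron E, E -> I E | I (assumed from the sample code)
                    id();
                    while (peek() != null && !peek().equals(";")) id();
                    break;
                case "synapse":   // Y -> synapse I I
                    id();
                    id();
                    break;
                case "sense":     // S -> sense Z I
                case "result":    // R -> result Z I
                    number();
                    id();
                    break;
                default:
                    throw new IllegalStateException("unknown statement: " + keyword);
            }
            expect(";");
        }
    }

    // F -> function P,  P -> ( P' ),  P' -> Z , P' | Z
    private void function() {
        id(); // function name such as Logistic, Triangle, Value or Rand
        expect("(");
        number();
        while (",".equals(peek())) {
            next();
            number();
        }
        expect(")");
    }

    private void id() {
        String t = next();
        if (!t.matches("[A-Za-z_][A-Za-z0-9_]*") || KEYWORDS.contains(t.toLowerCase(Locale.ROOT))) {
            throw new IllegalStateException("expected id, got " + t);
        }
    }

    private void number() {
        String t = next();
        if (!t.matches("-?\\d+(\\.\\d+)?")) throw new IllegalStateException("expected number, got " + t);
    }

    private void expect(String s) {
        String t = next();
        if (!t.equals(s)) throw new IllegalStateException("expected " + s + ", got " + t);
    }

    private String peek() { return pos < tokens.size() ? tokens.get(pos) : null; }

    private String next() {
        if (pos >= tokens.size()) throw new IllegalStateException("unexpected end of input");
        return tokens.get(pos++);
    }
}
```

For example, `NnlParser.parse("sense 1 d1;")` returns true. `NnlParser.parse("sense d1 1;")` returns false, because `sense` expects a number before the id. Each nonterminal gets its own small method (or `switch` case), which is what makes the parser recursive descent.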
Semantics
- Checks id declarations.
- Checks how ids are assembled.
Code generation
- Transforms NNL code into Java.
- Adds the engine.

System Interactions
- Creating a network
- Using a network

Example: Detecting Letters
- Detect L and T in a 5-by-5 grid.
- Compare L and T: no false positives.
- Compare random results (noise rejection).

Topology
- One-layer network
- One output
- Twenty-five inputs

Future Enhancements
- Persistent data for each NNL program
- Easier training methods
- A keyword to generate redundant declarations
- Visual connection tool
- Include parameter choices
- High-level semantics
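The letter-detection example (a one-layer network with twenty-five inputs and one output) can be approximated in plain Java to show prediction and back propagation working together. This is a minimal hypothetical sketch, not the Java that the NNL compiler generates. The names `LetterDetector`, `grid`, `predict` and `train` are invented.

The sketch also departs from the slides in several places:
- It uses a logistic output.
- It adds a bias term in place of the threshold parameter.
- It starts all weights at 0 instead of using `Value` or `Rand`.
- It lets weights go negative, unlike the 0-1 range described for dendrites, so that T pixels can pull the output down.
- It uses a learning rate of 0.5 and 1000 epochs, chosen arbitrarily.

With a single layer, back propagation reduces to the delta rule.

```java
/**
 * Hypothetical sketch of the L-vs-T example: one logistic output neuron with
 * 25 inputs (a 5x5 grid), trained by the delta rule (single-layer back propagation).
 */
public class LetterDetector {
    static final int INPUTS = 25;

    private final double[] weights = new double[INPUTS]; // all start at 0 (assumption)
    private double bias = 0.0;                           // stands in for the threshold (assumption)
    private final double learningRate;

    public LetterDetector(double learningRate) { this.learningRate = learningRate; }

    /** Turns five rows of '#' (on) and '.' (off) into 25 inputs of 1.0 / 0.0. */
    public static double[] grid(String... rows) {
        double[] x = new double[INPUTS];
        for (int r = 0; r < 5; r++) {
            for (int c = 0; c < 5; c++) {
                x[r * 5 + c] = rows[r].charAt(c) == '#' ? 1.0 : 0.0;
            }
        }
        return x;
    }

    private static double logistic(double z) { return 1.0 / (1.0 + Math.exp(-z)); }

    /** Prediction: weighted sum of inputs (total activation) passed through the logistic function. */
    public double predict(double[] x) {
        double activation = bias;
        for (int i = 0; i < INPUTS; i++) activation += weights[i] * x[i];
        return logistic(activation);
    }

    /** One training step: compute the error at the output and feed it back into every weight. */
    public void train(double[] x, double target) {
        double out = predict(x);
        double delta = (target - out) * out * (1.0 - out); // error times logistic derivative
        for (int i = 0; i < INPUTS; i++) weights[i] += learningRate * delta * x[i];
        bias += learningRate * delta;
    }

    public static final double[] L = grid("#....", "#....", "#....", "#....", "#####");
    public static final double[] T = grid("#####", "..#..", "..#..", "..#..", "..#..");

    public static void main(String[] args) {
        LetterDetector net = new LetterDetector(0.5);
        for (int epoch = 0; epoch < 1000; epoch++) {
            net.train(L, 1.0); // target 1: "this is an L"
            net.train(T, 0.0); // target 0: "not an L" (here, a T)
        }
        System.out.printf("L -> %.3f%n", net.predict(L));
        System.out.printf("T -> %.3f%n", net.predict(T));
    }
}
```

Running `main` prints the trained output for both grids. With these settings the L grid scores above 0.5 and the T grid below it, which is the "no false positives" comparison. Scoring random 5×5 grids would be the noise-rejection check. How those score depends on the training run, so no expected values are given here.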