Genetic Programming Model for Software Quality Classification

advertisement

Genetic Programming Model for Software Quality Classication

Yi. Liu

T aghi M. Khoshgoftaar

Florida Atlantic University

Boca Raton, Florida USA

Abstract

Pr edicting software quality is very useful in software

engineering, but sometimes predicting the exact number

of faults is dicult and unnecessary. In this paper, we

apply Genetic Programming techniques to build a softwar e quality classication model base d on the metrics of

software modules. The model we built attempts to distinguish the fault-prone modules from non fault-prone

modules using Genetic Programming (GP). These GP

experiments were conducted with a random subset selection for GP in order to avoid overtting. We then

use the whole t data set as thevalidation data set to

select the b est model. We demonstrate through two case

studies that the GP technique can achieve good results.

Also, we compared GP modeling with logistic regression

modeling to verify the usefulness of GP.

Keywords: Software Metrics, Genetic Programming,

Properties of Metrics, Measur ementTheory, Classication, Cost of misclassication

1. Introduction

Soft w arequalit yis becoming more important as

computer systems pervade our societ y. Low qualit y

softw arecan impact people in many w ays, including

economic loss and

ev en place their lives at risk. Predicting softw are quality can guide decision-making processes for software dev elopment managers, and help

them to achiev ethe all-important goals of releasing

a high qualit ysoftw areproduct on time and within

budget. If w e can predict softw arequalit yearly in

the development cycle, it can signicantly reduce costs.

Readers may contact the authors through Taghi M. Khoshgoftaar, Empirical Software Engineering Laboratory , Dept.

of Computer Science and Engineering, Florida Atlantic University, Boca Raton, FL 33431 USA. Phone: (561)2973994, Fax: (561)297-2800, Email: taghi@cse.fau.edu, URL:

www.cse.fau.edu/esel/.

Early softw are quality prediction relies on the softw are

metrics collected in the specication, design and implementation phase. There are various techniques applied

to this eld and some of them have achieved good results 8], 2], 4], 5], 7], 9], 10], 1], 3]. One of the

modelingapproac hes is softw arequality classication

modeling based on cost-eective measurement 6]. In

this model, predicting the exact number of faults is not

necessary. The goal is to predict whether each module

is fault-prone or not fault-prone at the beginning of

system integration so that developers can invest more

eort on fault-prone modules than on not fault-prone

modules thereby minimizing development costs.

The advan tage of GP is that it can discover a pattern from a set of tness cases \without being explicitly

programmed for them" 13]. When we denea set of

functions and terminals, select a target tness function, provide a nite set of tness cases, GP can nd a

solution in the search space dened by these functions

and terminals provided to the problem.

There are no prior applications of GP to softw are

qualit y classication models to our knowledge. In this

paper, we rst proposed an integrated method for using

GP in softw are quality classication modeling, including random subset selection and denition of a model

selection process. It also rst introduced the prior

probability and costs of misclassication into a tness

function. Two case studies illustrated the success of the

GP technique in softw are quality classication models

by using data from tw o real-world projects: very large

c -based softw are applications, VLWA, writWindows

ten in C++ language with 1211 source code les, and

over 27.5 million lines of code in each application.

In this paper, We rst give an overview of the classication rules, and the tw omethodologies: Genetic

Programming (GP) and Logistic Regression modeling

(LRM). Then w e present the integrated method w e

used to build a softw arequalit yclassication model.

We also compared the results betw een GP and Logis-

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

tic Regression modeling.

2. Software Quality Classication Model

The goal of a softw arequalit ymodel is to predict

the quality of a module based on softw are metrics. The

qualit y of a module is measured by predicting its number of faults. But it is dicult to predict the exact

number of faults in a module. Sometimes it is even

unnecessary to satisfy this requirement. In this case, a

softw are quality classication model is especially useful. It focuses on classifying modules into tw ocategories: fault-prone and not fault-prone.

In a real-world softw aredev elopment, it is impossible to apply the

same eort and reliabilit y improvement techniques, like testing, to every module because

of schedule and cost limitations. The project manager

may want to assign more testing eort on the modules

which are more important and more likely to be faultprone. The purpose of a softw are quality classication

model is to provide a guideline for the software development process so that a dev elopment team can use

their reliability improvement eorts cost-eectively.

A module is said to be fault-prone if the number of

faults is greater than a selected threshold. Otherwise,

the module is not fault-prone. The class (fault-prone

or not fault-prone) is the dependent variable in the

model. The attributes of softw are modules collected in

the soft w are development process are the independent

variables. The model predicts the class of each module

based on it's known attributes, namely, the softw are

product and process metrics. An important advantage

of building a model using these metrics is that we can

predict the quality of the module in the early stages of

the soft ware development cycle. So the eort to correct

faults is muc h more cost-eective.

T o remain consistant with previously published

w ork, we will use the same denitions for several terms:

a \fault" is a defect in a program that may cause incorrect execution 11]. A \Type I error" is dened as

when a model misclassies a not fault-prone (nfp) softw aremodule as fault-prone (fp). A \Type II error"

is dened as when a model misclassies a fault-prone

softw are module as not fault-prone.12 ]

2.1. Classification Rules

In practice the penalties for dierent misclassications are not the same. If a type I error occurs, the cost

reects the waste of eort and money trying to improve

a module that already has high quality. If a type II error occurs, the cost is that a poor quality module loses

an opportunity to be corrected early. This can be very

expensive or ev en disastrous. In general, the later a

fault is discovered, the more expensive it is to repair.

So our classication rules take into account the costs

of dierent types of misclassication. The objective for

the rules is to minimize the expected cost of misclassication.

The expected cost of misclassication of one module

as dened in 12] is

ECM = CI Pr(2j1)1 + CII Pr(1j2)2

(1)

where CI is the cost of a type I misclassication.

CII is the cost of a type II misclassication. Pr(2j1)

is the type I misclassication rate, Pr(1j2) is the type

II misclassication rate. 1 is the prior probability of

membership for the not fault-prone class, 2 is the prior

probability of membership for the fault-prone class.

A classication rule that minimizes the expected

cost of misclassication as dened in 12] is

Class(xi ) =

(

nfp if ff12 ((xxii )) CCIII 21

fp otherwise

(2)

where f1 (xi ) is a lik elihood function for module i0 s

membership in the not fault-prone class. f2 (xi ) is a

likelihood function for module i0 s membership in the

fault-prone class. Class(xi ) is the predicted class of

module i based on vector of independent variables, xi .

Project managers will be interested in this rule because the cost is usually a key element for the project

management. But costs of misclassications are dicult to estimate for some projects. Sometimes prior

probabilities are unknown or dicult to estimate. In

such cases, we present a more general rule that does not

require the tw o parameters (CCIII and 21 ). It is dened

in 12]:

Class(xi ) =

(

nfp if ff12 ((xxii )) c

fp otherwise

(3)

where c is a constant and is chosen empirically. Usually, as the value of c increases, the type I misclassication rate increases and the type II misclassication rate

decreases. As a result, we select the c by achieving the

project's preferred balance betw een the tw o misclassication rates.

3. Genetic Programming Model

Genetic Programming (GP) was rst proposed by

John Koza. It is a part of the eld of machine learning system. One of the advantages of GP is that it

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

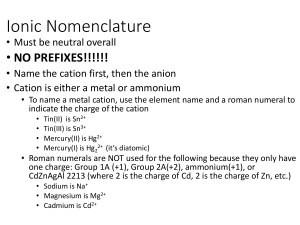

Gen=0

Creating the initial population

Yes

Present the result

Termination condition Satisfied

No

Evaluation

End

i=0

Selecting GP operaiton

Gen=Gen+1

Selecting individuals

Breeding and store into next population

Increase i

Yes

I>M

No

Figure 1. The flowchart of GP algorithm

can evolve a solution automatically from the input of

the training data 13 ]. Each individual in GP is a Sexpression composed of functions and terminals provided by the problem. We use a tness function to dene the quality of each individual. It determines which

individual can be selected for mating and reproduction

for the next generation.

3.1. The process of evolution of GP algorithm

The progress of GP imitates the Darwinian principle

of survival and reproduction of the ttest individuals.

The entire process of GP is shown in Figure 1. M is

the maximum number of generations.

4. Logistic Regression Modeling

Logistic Regression Modeling (LRM) is a statistic

modeling technique which is often used to investigate

the relationship betw een the response probability and

the explanatory variables. The independent variables

can be categorical, discrete or continuous, but the dependent variable can only take one of tw o possible values. It is very suitable to apply LRM to the softw are

qualit yclassication model since the predictable dependent variable is a class membership with tw o possible v alues:not fault-prone and fault-prone.

There are several possible strategies for encoding

categorical independent variables for the logistic regression model. F or binary categorical variables, w e

encode the categories as the values zero and one. We

can use discrete and continuous variables directly.

We dene a module being fault-prone as an "even t".

Let p be the probability of an even t, and thus, p=(1 ; p)

be the odds of an even t. Suppose xj is the j th inde-

pendent variable, and Xi is the vector of the ith module's independent variable values. The logistic regression model has the form:

log( 1 ;p p ) = 0 + 1 x1 + ::: + j xj + ::: + m xm (4)

where log means natural logarithm and m is the

number of independent variables, 0 is the intercept

parameter and j j 6= 0 are the slope parameters. bj

is the estimated value of j . The model also can be

restated as

0 + 1 x1 + ::: + m xm )

(5)

p = 1 +exp(

exp( + x + ::: + x )

0

1 1

m m

which implies each xj is assumed to be monotonically related to p. Since most softw are engineering measures do have a monotonic relationship with faults, we

can apply this model to softw are quality classication.

In this paper, we use stepwise logistic regression to

build the model, which is one of model selection methods using the following procedure.

First, estimate a model with only the intercept.

Ev aluatethe signicance of eac h variable not in the

model. Add to the model the variable with the largest

chi-squared p value which is better than a given threshold signicance level. Second, estimate parameters of

the new model. Evaluate the signicance of each variable in the model. Remove from the model the variable with the smallest chi-squared p value whose significance is worse than a given signicance level. Third,

repeat the rst step and the second step until no v ariable can be added or removed from the model. T est for

adding or removing a variable is based on an adjusted

residual chi-squared statistic for each variable, comparing models with and without the variable of interest.

We calculate the maximum likelihood estimates of

the parameters of the model, bj . The estimated standard deviation of a parameter can be calculated, based

on the log-likelihood function. All of these calculations

are provided by commonly available statistical pac kages, such as SAS.

We then apply the classication rule that minimizes

the expected cost of misclassication for this model.

So the process of classication is :

1. Calculate p^=(1 ; p^) using

log (1 ;p^ p^) = b0 + b1 x1 + ::: + bj xj + ::: + bm xm : (6)

2. Assign the module by a classication rule that

minimizes the expected cost of misclassication dened

by Equation (3).

Class(xi ) =

(

fault ; prone

if 1;p^ p^ CCIII nfp

fp

not fault ; prone otherwise

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

(7)

Table 1. Software Product Metrics for VLWA

Symbol Description

NUMI Number of times the source le was inspected

prior to the system test release.

LOCB Number of lines for the source le prior to

coding phase.

LOCT Number of lines of code for the source le

prior to system test release.

LOCA Number of lines of commented code for the

source le prior to coding phase.

LOCS Number of lines of commented code for the

source le prior to system test release.

4.1. VLWA dataset

In this paper, the GP and LRM modelsw ere rst

developed using data collected from tw o very large

c -based softw are applications. These appliWindows

cations were very similar and contained common softw are code. Data collected from both applications,

w as analyzed simultaneously. These applications were

written in C++ language with 1211 source code les,

and over 27.5 million lines of code in each application.

Source code les were considered as modules in these

case studies. The metrics were collected using a combination of several tools and databases. The independent variables for the tw o models are listed in Table 1.

NUMI is a process metric and the other four are product metrics. Two dependent variables are the number

of faults and the number of code churn during system

test. Code churn is dened as the summation of the

number of lines added, deleted and modied.

The rst model classied the modules into changeprone and not change-prone based on the number of

code churn. In this case study, change-prone modules

has four or more code churn. This threshold illustrates

project specic criteria. The t data set has 807 modules among which 618 modules are not change-prone

and 189 are change-prone. The test data set contains

the remaining 404 modules among which 308 modules

are not change-prone and 96 are change-prone.

The second model classied the modules into faultprone modules and not fault-prone modules based on

the number of faults. The selected threshold is 2,

namely, if a module has tw oor more faults, then it

is fault-prone. Otherwise, it is not fault-prone. The t

data set has 807 modules, consisting of 632 not faultprone modules and 175 fault-prone modules. The test

data set has 404 modules, consisting of 317 not faultprone modules and 87 fault-prone modules.

5. Empirical Case Study

We built tw o predictive models for VLWA data set:

the number of faults and the number of code churn.

These case studies show ed that GP can successfully be

applied to softw are reliability engineering.

The following steps summarize how this experiment

w as performed:

1. Collect the data from a past project. These data

usually are softw are metrics on which our prediction depends.

2. Determine the class of each module.

nfp If faults < threshold

Class(xi ) =

fp Otherwise

Where threshold depend on the project-specic

criteria.

3. Prepare the data set.

We split the data into t and test data set. In

these case studies, the t data set con tainstw o

thirds of the data and the test data set con tains

the remaining one third of the data.

4. Build a model:

We use the GP technique and apply the model

selection process which is dened in section 5.3

to build a GP-based softw are quality classication

model.

5. Predict the class of each module in test data set to

evaluate the predictive quality of the GP model.

We apply the model to the test data set to evaluate

it's quality. The result will tell us the level of accuracy

of the model when w eapply it to the subsequent releases or similar projects where the actual class of each

module is unknown.

5.1. Random Subset Selection

Successfully building a model using GP heavily depends on the selected tness cases. Usually, a large

data set will result in a better model. The selected tness cases must represent the environment of the problem in the best possible way. This allows GP to learn

the true nature of the problem rather than memorizing tness cases. In softw areengineering, with realw orld systems like VLWA, the number of tness cases

is xed when data collection nishes. It is impossible

to increase the number of tness cases for GP since

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

all of these tness cases are determined by the development process. We also do not know whet

her these

tness cases we provided are adequate to represent the

problem itself or not.

Under this situation, w eneed to nd a method to

use all of the tness cases in the best possible w ay.

One simple and common method is that w euse the

en tire t data set as a training data set and evaluate

the population of GP against the test data set. The

main disadvantage of this method is the risk of overtting. Overtting is when a model works well on the

t data set, but has poor performance on the test data

set. Because a higher tness may indicate overtting,

selecting the model with the highest tness may not be

a good method.

Another method is to split the t data set into tw o

data sets. One is the training data set used to build

a model, the second is the validation data set used to

validate the qualit yof the model. The test data set

remains the same. Again, the rst issue w e face is

overtting. The second issue is when w esplit the t

data set into the training data set and the validation

data set that roughly match the distribution in the t

data set.

In order to avoid these problems, w e choose a

method called Random Subset Selection (RSS). In this

method, we did not evaluate the population against the

en tire t data set,nor did w e pic k a x subset of the

t data set. A dierent subset from the t data set

for each generation is randomly selected. The tness

evaluation of each individual in each generation is performed against the subset, not the en tire t data set.

So the individuals in each generation must confront differen t data. The individuals can only survive if they

do well with many dierent subsets. Memorizing one

or more subsets will not ensure survival. Because the

surviving individuals are always confronted with dieren t data sets in each generation, they have to discover

the underlying rules behind the data.

Another advantage of this method is that calculation

time for the tness evaluation will be reduced when we

ha vea large data set. Because w e randomly pic k a

subset with a smaller number of tness cases than the

en tire t data set for each generation. The smaller the

size of the subset is, the shorter the evaluation time is.

In VLWA data set, we randomly pick up tw o-thirds

of the not-fault prone modules and tw o-thirdsof the

fault-prone modules from the t data set to use as the

tness subset for a given generation.

5.2. Fitness evaluation

We dene CI as the cost for a type I misclassication, CII as the cost for a t ypeII misclassication.

The cost ratio c is equal to CII =CI , which is used to

achiev e a preferred balance betw een the type I and type

II misclassication rates.

Our GP model rst predicted the number of faults

of each module. Then it classied each module as faultprone and not fault-prone. If a not fault-prone module

is misclassied as fault-prone, a penalty of CI will be

added to the tness of the model. If a fault-prone module is misclassied as not fault-prone, a penalty of CII

will be added to the tness of the model. The measurement of tness here includes raw tness and the

number of hits. The raw tness of each individual is

the sum of the cost for misclassication. In addition,

if the absolute value of number of faults predicted is

extremely large, then a constant penalty CIII will also

be added. So we dene the raw tness as:

fitness = CI NI + CII NII + CIII NIII (8)

where NI is the number of type I errors, NII is the

number of type II errors. NIII is the number of modules whose the absolute value ofn umber of faults are

predicted as extremely large. We dened CI as one

unit, CII as c CI . Since we hope that CIII will give

some penalties to these individuals which predict the

absolute value of number of faults as extremely large

and maintain the diversity of the population, we suggest that CIII is a small number times CI . In our

experiments, w edene CIII as twice of CI . We also

select CIII as ve times of CI in other experiments and

it did not impact the results.

The number of hits is dened as the number of correct classied modules.

5.3. Model Selection

Because GP training process is a stochastic and

emergent phenomena, eac h run generates dierent

models. Sometimes GP produces a good model, sometimes it does not. It is dicult to select the most suitable model for a system because of overtting and sample errors. The criterion for choosing the best model on

the training data set is especially dicult in our case

studies. It is ideal if a model has the lowest type I error

and the low est type II error. But the problem we faced

w as: as type I error decreases, type II error increases

and vice versa. So we dened \best model" based on

the classication rule 3 used in section 2.1, namely, the

model that yield the most balanced type I and type II

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

misclassication rates with the type II misclassication

rate being as low as possible. We also dene a model

selection process to choose the best model.

In our case studies, c will be changed within a given

range. We select the top ve individuals for each run.

T otallyw eha vefty individuals for eac h c since ten

runs were performed with dierent c values. Then we

pick the best one from the fty individuals. The following model selection process illustrates how we select

the \best mode".

1. Recalculate the tness of the fty individuals

based on the entire t data set.

We use the entire data set as our validation data

set. Although most of the fty individuals ha ve

similar tness when a run nishes, they have different tnesses when we measure them on the entire t data set. This step ensures that we always

pick the best one based on the entire t data set.

2. Select the best model for each c from the fty individuals based on the denition of \best model"

above.

We select the best model for each c, namely, the

model that yields the most balanced type I and

type II misclassication rates with the type II misclassication rate as low as possible.

3. Choose the best model for the system among the

models selected from step 2.

Of course, the model we choose is not always the one

whose performance is best on the test data set. But

each time we used this model selection process dened

above, it selected a model with good performance on

the test data set.

6. Case Study

Our case studies illustrated the method of applying

GP technique to softw arequalit yclassifcation models. The class membership is the dependent variable

we want to predict.

6.1. GP-tool

The tool we select is lilgp1.01 developed by Douglas

Zongker and Dr.Bill Punch of Michigan State University. It is implemented using the C language and based

on the LISP work of John Koza. When applying lilgp

to genetic programming application, each individual is

organized as a tree. Each node in the tree is a C function pointers. The speed of execution is faster than

interpreted LISP since the application is compiled.

Table 2. The Parameter list for GP

pop size

max generations

output.basename

output.bestn

init.method

init.depth

max depth

breed phases

breed1].operator

breed1].rate

breed2].operator

breed2].rate

function set

termination-criterion

1000

200

cccs2

5

half and half

2-10

20

2

crosso ver, select=tness

0.90

reproduction, select=tness

0.10

+,-,*,/,sin,cos,exp,log, GT, VGT

exceeding the maximum generation

6.2. Case study for VLWA data set

6.2.1 P arameterList

Operator GT is dened as: if the v alue of the rst parameter is bigger than the second one, then return 0.0.

Otherwise, return 1.0. Operator VGT is dened as: return the maximum value of the tw o parameters. So GP

can generate discontinuous function. Since choosing a

good combination of parameter setting is somewhat of

a black art, and our goal is to apply GP to software reliabilit y engineering, not parameter optimization.The

parameters we selected have not been optimized.

The independent variables of the VLWA data set are

listed in Table 1. The two dependent variables are class

memberships for the number of faults and code churn.

The threshold for the number of faults is empirically set

to be 2. If the number of faults of a module is greater

than or equal to 2, then it is fault-prone. Otherwise,

it is not fault-prone. The threshold for code churn is

4, namely, if the number of code churn of a module

is greater than or equal to 4, then it is change-prone.

Otherwise, it is not change-prone. The parameters are

same as CCCS.

6.2.2 Experiment Results

The rst model that we built is to classify modules as

change-prone or not change-prone based on code churn.

T able 3 shows the results for the t data set as c varies

from 1 to 5. T able 4 lists the results when we applied

the models to the test data set. The best result appeared in the t data set for c=2/2.5/3, with a type I

misclassication rate of 19.58%, a type II misclassication rate of 21.16% and an overall misclassication rate

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

Table 3. The best-of-runs for c=1 to 5 for code

churn(fit data set)

c

1

2

2.5

3

3.5

4

4.5

5

T ype I T ype II

62

75

10.03% 39.68%

Overall

137

16.98%

121

19.58%

121

19.58%

134

21.68%

157

25.40%

135

21.84%

218

35.28%

161

19.95%

161

19.95%

169

20.94%

191

23.67%

177

21.93%

233

28.87%

121

40

161

19.58% 21.16% 19.95%

40

21.16%

40

21.16%

35

18.52%

34

17.99%

42

22.22%

15

7.94%

Table 4. The best-of-runs for c=1 to 5 for code

churn(test data set)

c

1

2

2.5

3

3.5

4

4.5

5

Type I

29

9.42%

T ype II Overall

33

62

34.38% 15.35%

58

18.83%

57

18.51%

62

20.13%

82

26.62%

67

21.75%

100

32.47%

19

19.79%

18

18.75%

16

16.67%

16

16.67%

18

18.75%

7

7.29%

58

19

77

18.83% 19.79% 19.06%

77

19.06%

75

18.56%

78

19.31%

98

24.26%

85

21.04%

107

26.49%

Table 5. The best-of-runs for c=1 to 5 for

fault(fit data set)

c

1

Type I

61

9.65%

2

59

9.34 %

2.5

61

9.65%

3

194

30.70%

3.5

135

21.36%

T ype II

89

50.86%

75

42.86%

76

43.43%

48

27.43%

55

31.43%

Overall

150

18.59%

134

16.60%

137

16.98%

242

29.99%

190

23.54%

4.5

43

24.57 %

38

21.71%

196

24.29%

273

33.83%

4

5

153

43

196

24.21% 24.57 % 24.29%

153

24.21%

235

37.18%

of 19.95%. Application of the model to the test data

set yielded a type I misclassication rate of 18.83%, a

type II misclassication rate of 19.79% and an overall

misclassication rate of 19.06%.

The second model is to classify modules as faultprone or not fault-prone using the number of faults.

Table 5 shows the results for the t data set as c varied

from 1 to 5. T able 6 lists the results when we applied

the models to the test data set. The best result appeared in the t data set when c=4/4.5, with a type I

misclassication rate of 24.21%, a type II misclassication rate of 24.57% and an overall misclassication rate

of 24.29%. Application of the model to the test data

set yielded a type I misclassication rate of 20.19%, a

type II misclassication rate of 27.59% and an overall

misclassication rate of 21.78%.

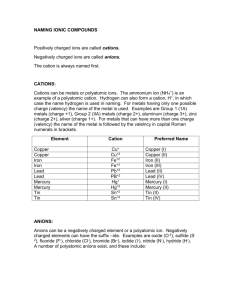

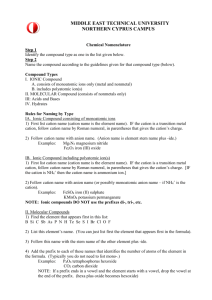

Figure 2 and gure 3 show the predictions of the tw o

GP models as c varies. If the type I error rate in the t

data set drops, it also drops when we apply the model

to the test data set. The type II misclassication rates

behave in the same way.

6.2.3 Logistic Regression Modeling

We also built tw o models for the tw o dependent variables of VLWA data set using logistic regression modeling. T able 7 and Table 8 show the results when the

tw obest models which are based on the t data set

w ere applied to the testdata set. The bestresult for

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

T ype I T ype II

25

39

7.89% 44.83 %

2

32

35

10.09 % 40.23%

2.5

36

36

11.36% 41.38%

3

92

25

29.02% 28.74%

3.5

63

26

19.87% 29.89%

4

4.5

type I misclassification rate

5

Overall

64

15.84%

87

16.58%

72

17.82%

117

28.96%

89

22.03%

64

24

88

20.19% 27.59% 21.78%

64

20.19%

116

36.59%

24

27.59%

19

21.84%

40.00

35.00

30.00

25.00

20.00

15.00

10.00

5.00

0.00

88

21.78%

135

33.42%

Type I

misclassification for

fit data set

Type I

misclassification for

test data set

0

2

4

6

type I misclassification rate

c

1

Type I

misclassification for

fit data set

20.00

15.00

10.00

5.00

0.00

Type I

misclassification for

test data set

0

2

4

6

value of c

type II misclassification rate

Table 6. The best-of-runs for c=1 to 5 for

fault(test data set)

40.00

35.00

30.00

25.00

60.00

50.00

Type II

misclassification for

fit data set

40.00

30.00

Type II

misclassification for

test data set

20.00

10.00

0.00

0

2

4

6

value of c

Figure 3. fault : Misclassifications for fit and

test

code churn is when 1=c = 0.22, with a type I misclassication rate of 26.95%, a type II misclassication

rate of 30.21% and an overall misclassication rate of

27.72%. The best result for the number of faults is

when 1=c=0.19, where a type I misclassication rate of

30.60%, a type II misclassication rate of 32.18% and

an o verall misclassication rate of 30.94%.

type II misclassification rate

value of c

6.2.4 Comparison

45.00

40.00

35.00

30.00

25.00

20.00

15.00

10.00

5.00

0.00

Type II

misclassification for

fit data set

Type II

misclassification for

test data set

0

2

4

6

value of c

Figure 2. code churn : Misclassifications for

fit and test

We compared the best results of the tw o methodologies:

LRM and GP. The results are shown in T able9 and

T able 10. The type I error rate and the type II error

rate of the GP model are much better than for LRM.

For example, for code churn, the type I error rate of

GP is 18.83%, compared to 26.95% for LRM . The type

II error rate is 19.79%, compared to 30.21% for LRM.

The overall misclassication rate is 18.56%, compared

to 27.72% for LRM. For the number of faults, the type

I error rate of GP is 20.19%, compared for 30.60% of

LRM, the type II error rate is 27.59%, compared to

32.18% for LRM and the overall misclassication rate

for GP is 21.78%, compared to 30.94% for LRM.

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

Table 7. Logistic regression model for code

churn(test data set)

1=c

0.1

T ype I T ype II

308

0

100.00% 0.00%

0.2

89

28

28.90% 29.17%

0.21

86

29

27.92% 30.21%

Overall

308

76.24%

117

28.96%

115

28.47%

0.23

101

25.00%

92

22.77%

88

21.78%

82

20.30%

75

18.56%

68

16.83%

69

17.08%

68

16.83%

65

16.09%

64

15.84%

65

16.09%

0.22

0.24

0.25

0.26

0.27

0.28

0.29

0.3

0.4

0.5

1

83

29

112

26.95% 30.21% 27.72%

69

22.40%

57

18.51%

52

16.88%

45

14.61%

38

12.34%

31

10.06%

29

9.42%

27

8.77%

18

5.84%

14

4.55%

7

2.27%

32

33.33%

35

36.46%

36

37.50%

37

38.54%

37

38.54%

37

38.54%

40

41.67%

41

42.71%

47

48.96%

50

52.08%

58

60.42%

Table 8. Logistic regression model for fault

(test data set)

1=c

0.1

0.11

0.12

0.13

0.14

0.15

0.16

0.17

0.18

0.19

0.2

0.3

0.4

0.5

1

Type I T ype II

317

0

100.00% 0.00%

315

0

99.37% 0.00%

308

1

97.16% 1.15%

298

8

94.01% 9.20%

220

8

69.40% 9.20%

195

12

61.51% 13.79%

184

21

58.04% 24.14%

159

25

50.16% 28.74%

107

26

33.75% 29.89%

Overall

317

78.47%

315

77.97%

309

76.49%

306

75.74%

228

56.44%

207

51.24%

205

50.74%

184

45.54%

133

32.92%

84

26.50%

27

8.52%

17

5.36%

15

4.73%

4

1.26%

112

27.72%

66

16.34%

60

14.85%

62

15.35%

59

14.60%

97

28

125

30.60% 32.18% 30.94%

28

32.18%

39

44.83%

43

49.43%

47

54.02%

55

63.22%

7. Conclusion

GP is a pow erful technique for nding a general

pattern behind a set of data. T oour knowledge, the

GP communit y has notapplied prior probability and

cost of misclassication to softw arequalit y classication modeling studies. This paper introduce the prior

probability and costs of misclassication into the tness function. Two full-scale industrial VLWA illustrate case studies the method we dened. The results

sho w the potential capability of GP in predicting softw are quality . Our models also illustrate the dierent

misclassication rates over a range of cost ratios. As

the cost ratio increases, type I errors increase and type

Table 9. Classification comparison for code

churn

Model

Errors

LRM

GP

T ype I errors

26.95% 18.83%

T ype II errors

30.21% 19.79%

Overall Misclassication Rate 27.72% 18.56%

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE

Table 10. Classification comparison for fault

Model

Errors

LRM

GP

T ype I errors

30.60% 20.19%

T ype II errors

32.18% 27.59%

Overall Misclassication Rate 30.94% 21.78%

II errors decrease.

In this paper, w ealso compare Genetic Programming modeling with logistic regression modeling. It

shows that the GP modeling was more accurate than

logistic regression modeling in our case studies.

F urther research will focus on improving the correctness of our model by rening evolutionary process and

combining product metrics with process metrics.

Acknowledgments

This work was supported in part by the National Science Foundation grant CCR ; 9970893. All of the assistance and suggestions from Peider Chen, Erika Dery,

Matthew Evett, Thomas Fernandez and Erik Geleyn is

very greatly appreciated.

References

6] T. M. Khoshgoftaar and E. B. Allen. A practical classication rule for softw are quality models. IEEE Transactions on Reliability, 49(2), June 2000.

7] T. M. Khoshgoftaar, E. B. Allen, W. D. Jones, and

J. P. Hudepohl. Data mining for predictiors of softw are

quality. International Journal of Software Engineering

and Knowledge Engineering, 9, 1999.

8] T. M. Khoshgoftaar, E. B. Allen, W. D. Jones, and

J. P. Hudepohl. Classication tree models of softw are

quality over multiple releases. IEEE Transactions on

R eliability, 49(1), Mar. 2000.

9] T. M. Khoshgoftaar, E. B. Allen, A. Naik, W. D.

Jones, and J. P. Hudepohl. Using classication trees

for softw are qualit y models:Lessons learned. International Journal of Software Engineering and Knowledge

Engineering, 9(2):217{231, 1999.

10] T. M. Khoshgoftaar, E. B. Allen, X. Y uan, W. D.

Jones, and J. P. Hudepohl. Assessing uncertain predictions of soft w are qualit. In

y Proceedings of the Sixth International Software Metrics Symposium, pages 159{

168, Boca Raton, Florida USA, Nov. 1999. IEEE Computer Society.

11] M. R. Lyu. Handbook of softw are reliabilit y engineering, chapter 1. 17:3{25, 1996.

12] T. M.Khoshgoftaar and E. B.Allen. A practical

classication-rule for softw are-qualit ymodels. IEEE

T ransactions On R elialibity, 49(2):209{215, June

2000.

13] J. R.Koza. Genetic Programming, volume I. MIT

Press, New York, 1992.

1] M. P . Evett, T. M. Khoshgoftar, P .-D. Chien, and

E. B. Allen. GP-based softw are qualit y prediction.

In J. R. Koza, W. Banzhaf, K. Chellapilla, K. Deb,

M. Dorigo, D. B. Fogel, M. H. Garzon, D. E. Goldberg,

H. Iba, and R. Riolo, editors, Genetic Programming

1998: Proceedings of the Thir d A nnual Conference,

pages 60{65, Madison, WI USA, July 1998. AAAI,

Morgan Kaufmann.

2] K. Ganesan, T. M. Khoshgoftaar, and E. B. Allen.

Case-based softw are qualit y prediction.International

Journal of Software Engineering and Knowledge Engine ering, 9(6), 1999.

3] T. M. Khoshgoftaar and E. B. Allen. Classication

of fault-prone softw aremodules: Prior probabilities,

costs, and model evaluation. Empirical Software Engine ering: An International Journal, 3(3):275{298,

Sept. 1998.

4] T. M. Khoshgoftaar and E. B. Allen. A comparative

study of ordering and classication of fault-prone software modules. Empirical Software Engineering: A n

International Journal, 4:159{186, 1999.

5] T. M. Khoshgoftaar and E. B. Allen. Logistic regression modeling of software qualit y. International

Journal of Reliability, Quality and Safety Engineering,

6(4), Dec. 1999.

Proceedings of the 6th IEEE International Symposium on High Assurance Systems Engineering (HASE’01)

1530-2059/01 $17.00 © 2001 IEEE