Chapter 5 Analysis of variance SPSS – Analysis of variance


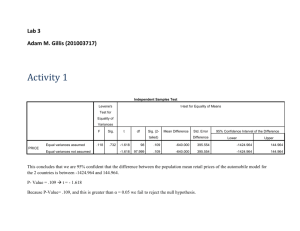

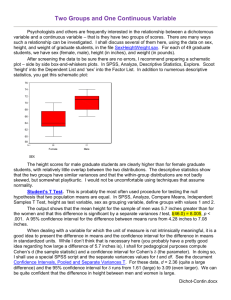

Data file used: gss.sav

How to get there: Analyze > Compare Means > One-Way ANOVA…

One-way ANOVA tests the null hypothesis that several population means are equal, based on the results of several independent samples. The test variable is measured on an interval or ratio scale (for example age). It is grouped by a variable measured on a nominal or discrete ordinal scale (for example life, which consists of the categories Dull, Routine and Exciting). For two independent samples, an independent-samples t test and a one-way ANOVA test the same hypothesis (with two groups, F equals the square of the equal-variances t statistic). You must select the dependent variable and specify the factor that defines the groups. You can move more than one variable into the Dependent List to test all of them. See following figure.

Button Options… Here you can request descriptive statistics of the data (Descriptive) and a test for equal variances in the groups (Homogeneity-of-variance).

Button Post Hoc… Several procedures are available to find out whether, and if so which, groups differ from one another. You can use the Bonferroni procedure (see following figure) when the variances in the groups are equal. You can check this with the Homogeneity-of-variance test (Button Options).

Output of running one-way ANOVA

We performed a one-way ANOVA with age as the dependent variable and life as the factor. Life consists of the groups 0 = “Not applicable”, 1 = “Dull”, 2 = “Routine”, 3 = “Exciting” and 8 = “Don’t know”. Only the groups Dull, Routine and Exciting appear in the output.

Oneway

Descriptives (Age of Respondent)

| | N | Mean | Std. Deviation | Std. Error | 95% CI for Mean, Lower Bound | 95% CI for Mean, Upper Bound | Minimum | Maximum |
|---|---|---|---|---|---|---|---|---|
| Dull | 65 | 52,62 | 20,059 | 2,488 | 47,64 | 57,59 | 19 | 89 |
| Routine | 457 | 47,28 | 18,191 | ,851 | 45,61 | 48,95 | 19 | 89 |
| Exciting | 471 | 44,54 | 16,106 | ,742 | 43,08 | 46,00 | 18 | 87 |
| Total | 993 | 46,33 | 17,479 | ,555 | 45,24 | 47,42 | 18 | 89 |

Test of Homogeneity of Variances (Age of Respondent)

| Levene Statistic | df1 | df2 | Sig. |
|---|---|---|---|
| 8,287 | 2 | 990 | ,000 |

ANOVA (Age of Respondent)

| | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 4492,439 | 2 | 2246,220 | 7,448 | ,001 |
| Within Groups | 298568,2 | 990 | 301,584 | | |
| Total | 303060,6 | 992 | | | |

The table ‘Descriptives’ speaks for itself. The table ‘Test of Homogeneity of Variances’ gives the result of Levene’s test for equality of variances. It tests the assumption that the variances of all groups are equal, using the Levene Statistic. A high value of this statistic usually goes with a significant result. In this example Sig. = 0,000, so the hypothesis of equal variances is rejected. Strictly speaking, the Bonferroni procedure therefore cannot be used, as it assumes equal variances. However, we are dealing with large samples, which reduces the problem. The Bonferroni test can therefore still be used, but its results should be interpreted with care.

The table ‘ANOVA’ gives the following for the between-groups and within-groups sources:

- the variation (Sum of Squares)
- the degrees of freedom (df)
- the variance estimate (Mean Square)

It also gives the F value (F) and its significance (Sig.). Sig. indicates whether the null hypothesis has to be rejected. The null hypothesis is that the population means are all equal. The between-groups Mean Square (2246,220) is much larger than the within-groups Mean Square (301,584). Their ratio is F = 2246,220 / 301,584 = 7,448, with Sig. = 0,001. This means that H0 must be rejected. Thus the mean ages of people who find life Dull, Routine or Exciting are not all equal.

Rejecting the null hypothesis only means that NOT ALL population means are equal. It does not tell us which means differ from each other! Therefore, we perform the Bonferroni procedure. The output shows:

Post Hoc Tests

Multiple Comparisons (Dependent Variable: Age of Respondent; Bonferroni)

| (I) Is life exciting or dull | (J) Is life exciting or dull | Mean Difference (I-J) | Std. Error | Sig. | 95% CI, Lower Bound | 95% CI, Upper Bound |
|---|---|---|---|---|---|---|
| Dull | Routine | 5,34 | 2,302 | ,062 | -,18 | 10,86 |
| Dull | Exciting | 8,08* | 2,298 | ,001 | 2,57 | 13,59 |
| Routine | Dull | -5,34 | 2,302 | ,062 | -10,86 | ,18 |
| Routine | Exciting | 2,74* | 1,140 | ,049 | ,01 | 5,48 |
| Exciting | Dull | -8,08* | 2,298 | ,001 | -13,59 | -2,57 |
| Exciting | Routine | -2,74* | 1,140 | ,049 | -5,48 | -,01 |

*. The mean difference is significant at the .05 level.

The table ‘Multiple Comparisons’ shows that two of the three pairs of groups differ significantly:

- Dull vs Routine: Sig. = 0,062, which is higher than the significance level of 0.05. These groups do not differ significantly.
- Dull vs Exciting: Sig. = 0,001, which is lower than the significance level of 0.05. These groups differ.
- Exciting vs Routine: Sig. = 0,049, which is lower than the significance level of 0.05. These groups differ (although only just).

The Bonferroni test assumes equal variances, which does not hold in this case. You can therefore also run a test that does not assume equal variances, for example Tamhane’s T2 test. If you perform this test, you will see that in this case it leads to the same conclusions.

How to get there: Analyze > General Linear Model…

A General Linear Model is, as the name suggests, general in that it incorporates many different models, so that many different tests can be performed. Among these are the one- and two-way ANOVA and regression analysis. More complex designs can be analysed as well.

Univariate… A univariate GLM is a test with only one dependent variable. There can be one or more independent variables (factors) and/or covariates. A one-way ANOVA is a univariate GLM with exactly one independent variable (a fixed factor). A two-way ANOVA is a univariate GLM with exactly two independent variables (fixed factors). You can test null hypotheses about the effects of other variables on the means of various groupings of a single dependent variable. You can investigate interactions between factors as well as the effects of individual factors. You can also include the effects of covariates and of covariate interactions with factors.

In a univariate GLM, select the variable you want to test in the source variable list and move it into the Dependent Variable box.
You can select only one dependent variable. Then select the variables (factors) whose values define the groups and move them into the Fixed Factor(s) box (or the Random Factor(s) box if appropriate). If you have covariates, move them into the Covariate(s) box. To obtain the default univariate GLM, which contains all main effects and interactions, click OK. See following figure.

Button Plots… Use this button to obtain plots so that you can examine interactions visually. The Factors list box shows all main factors in your model. To plot the means for the values of a single factor, move that factor into the Horizontal Axis box and click Add. To see the means for all combinations of values of two factors, move the second factor into the Separate Lines box and click Add. The horizontal-axis factor means are then plotted separately for each value of the second factor. If you move a third factor into the Separate Plots box, a separate plot is produced for each value of this factor. See following figure.

Button Post Hoc… When an overall F test has shown significance, you can use post hoc tests to evaluate differences among specific means. To pinpoint differences between all possible pairs of values of a factor, select the factors to be tested and move them to the ‘Post Hoc Tests for:’ list box. Then select one of the multiple comparison procedures, for example Bonferroni or Tukey. Many different tests are available. For information about a test, point to its name in the dialog box and click the right mouse button. See following figure.
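A one-way ANOVA is a univariate GLM with a single fixed factor. Because of this, the One-Way ANOVA and Bonferroni tables for the life/age example earlier in this chapter can be reproduced, up to rounding, from the N, Mean and Std. Deviation columns of its Descriptives table. The Python sketch below is a minimal illustration under stated assumptions, not what SPSS runs internally:

- It starts from the rounded summary statistics instead of the raw gss.sav data.
- The function names `one_way_anova` and `bonferroni_pairs` are hypothetical.
- The Bonferroni standard error uses the pooled within-groups Mean Square, which matches the Std. Error column of the Multiple Comparisons table.
- Two-sided p-values use the standard normal instead of the t distribution with 990 df, so they can differ slightly from SPSS.

```python
import math
from itertools import combinations

# (N, Mean, Std. Deviation) per group, copied from the Descriptives table
# (Age of Respondent by "Is life exciting or dull"). Using these rounded
# summaries instead of the raw data is an assumption of this sketch.
GROUPS = {
    "Dull": (65, 52.62, 20.059),
    "Routine": (457, 47.28, 18.191),
    "Exciting": (471, 44.54, 16.106),
}


def one_way_anova(groups):
    """Return (SS_between, SS_within, df_between, df_within, MS_within, F)."""
    n_total = sum(n for n, _, _ in groups.values())
    grand_mean = sum(n * m for n, m, _ in groups.values()) / n_total
    # Between groups: size-weighted squared distances of group means from the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups.values())
    # Within groups: each group's (n - 1) * s^2, summed over the groups.
    ss_within = sum((n - 1) * s ** 2 for n, _, s in groups.values())
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    ms_within = ss_within / df_within
    f_ratio = (ss_between / df_between) / ms_within
    return ss_between, ss_within, df_between, df_within, ms_within, f_ratio


def bonferroni_pairs(groups, ms_within):
    """Pairwise mean differences with Bonferroni-adjusted two-sided p-values.

    The standard error uses the pooled within-groups Mean Square. The p-value
    uses the standard normal as a large-sample stand-in for the t distribution.
    """
    pairs = list(combinations(groups, 2))
    results = {}
    for a, b in pairs:
        n_a, mean_a, _ = groups[a]
        n_b, mean_b, _ = groups[b]
        diff = mean_a - mean_b
        se = math.sqrt(ms_within * (1 / n_a + 1 / n_b))
        p_raw = math.erfc(abs(diff) / se / math.sqrt(2))  # two-sided p-value
        p_bonf = min(1.0, p_raw * len(pairs))  # multiply by the number of comparisons
        results[(a, b)] = (diff, se, p_bonf)
    return results


ss_b, ss_w, df_b, df_w, ms_w, f = one_way_anova(GROUPS)
print(f"SS between = {ss_b:.1f}, SS within = {ss_w:.1f}, df = ({df_b}, {df_w})")
print(f"MS within = {ms_w:.3f}, F = {f:.3f}")
for (a, b), (diff, se, p) in bonferroni_pairs(GROUPS, ms_w).items():
    print(f"{a} vs {b}: difference = {diff:.2f}, SE = {se:.3f}, Bonferroni p = {p:.3f}")
```

With these inputs the sketch gives F of about 7.45 on (2, 990) df. The Bonferroni p-values come out at roughly 0.061 (Dull vs Routine), 0.001 (Dull vs Exciting) and 0.049 (Routine vs Exciting), in line with the SPSS output. The Bonferroni adjustment itself is just the unadjusted p-value multiplied by the number of comparisons (three here), capped at 1.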
Output of running univariate GLM

Univariate Analysis of Variance

Between-Subjects Factors

| | Value | Value Label | N |
|---|---|---|---|
| RS Highest Degree | 0 | Less than HS | 80 |
| | 1 | High school | 480 |
| | 2 | Junior college | 67 |
| | 3 | Bachelor | 181 |
| | 4 | Graduate | 92 |
| Respondent's Sex | 1 | Male | 450 |
| | 2 | Female | 450 |

Tests of Between-Subjects Effects (Dependent Variable: Number of Hours Worked Last Week)

| Source | Type III Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Corrected Model | 22211,324ᵃ | 9 | 2467,925 | 12,904 | ,000 |
| Intercept | 918896,468 | 1 | 918896,468 | 4804,440 | ,000 |
| DEGREE | 7409,113 | 4 | 1852,278 | 9,685 | ,000 |
| SEX | 9296,790 | 1 | 9296,790 | 48,608 | ,000 |
| DEGREE * SEX | 551,792 | 4 | 137,948 | ,721 | ,577 |
| Error | 170221,266 | 890 | 191,260 | | |
| Total | 1762191,000 | 900 | | | |
| Corrected Total | 192432,590 | 899 | | | |

a. R Squared = ,115 (Adjusted R Squared = ,106)

[Figure: Profile Plots. Estimated Marginal Means of Number of Hours Worked Last Week (vertical axis) by RS Highest Degree (horizontal axis: Less than HS, High school, Junior college, Bachelor, Graduate), with separate lines for Respondent's Sex (Male, Female).]

The ‘Profile Plot’ is a line plot of the estimated mean hours worked for each combination of degree and sex. Is there an interaction between gender and education? The two lines do not cross, and the shapes of the lines for males and females are quite similar (the lines run roughly parallel). That suggests that there is no interaction. You don’t expect lines drawn from real data to have exactly the same shape, even if there is no interaction between gender and education in the population. Your goal is to determine whether the interaction observed in the sample is large enough to believe that it also exists in the population.

The table ‘Between-Subjects Factors’ speaks for itself. The table ‘Tests of Between-Subjects Effects’ is very similar to the one-way ‘ANOVA’ table. What has changed is the number of hypotheses you are testing. In the one-way analysis of variance you tested a single hypothesis. Now you test three hypotheses:

- one about the main effect of degree
- one about the main effect of gender
- one about the degree-by-gender interaction
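Each of these three F ratios is the effect's Mean Square divided by the Error Mean Square. The effect's Mean Square is its Type III Sum of Squares divided by its df. The short Python sketch below recomputes the Mean Square and F columns for DEGREE, SEX and DEGREE * SEX from the printed sums of squares and degrees of freedom. It also recomputes the R Squared values in footnote a. It only redoes the arithmetic on the table's numbers. The names `f_ratios` and `r_squared` are hypothetical. The p-values (the Sig. column) are not computed, since they need the F distribution.

```python
# Values copied from "Tests of Between-Subjects Effects"
# (Dependent Variable: Number of Hours Worked Last Week).
EFFECTS = {  # effect: (Type III Sum of Squares, df)
    "DEGREE": (7409.113, 4),
    "SEX": (9296.790, 1),
    "DEGREE * SEX": (551.792, 4),
}
SS_ERROR, DF_ERROR = 170221.266, 890
SS_MODEL = 22211.324  # Corrected Model
SS_CORR_TOTAL, DF_CORR_TOTAL = 192432.590, 899


def f_ratios(effects, ss_error, df_error):
    """Return {effect: (Mean Square, F)}, where F = MS_effect / MS_error."""
    ms_error = ss_error / df_error
    return {name: (ss / df, (ss / df) / ms_error) for name, (ss, df) in effects.items()}


def r_squared(ss_model, ss_error, df_error, ss_corr_total, df_corr_total):
    """R Squared and Adjusted R Squared as reported in footnote a."""
    r2 = ss_model / ss_corr_total
    # Adjusted R Squared = 1 - MS_error / (Corrected Total SS / its df).
    adj_r2 = 1 - (ss_error / df_error) / (ss_corr_total / df_corr_total)
    return r2, adj_r2


print(f"Error Mean Square = {SS_ERROR / DF_ERROR:.3f}")
for name, (ms, f) in f_ratios(EFFECTS, SS_ERROR, DF_ERROR).items():
    print(f"{name}: Mean Square = {ms:.3f}, F = {f:.3f}")
r2, adj_r2 = r_squared(SS_MODEL, SS_ERROR, DF_ERROR, SS_CORR_TOTAL, DF_CORR_TOTAL)
print(f"R Squared = {r2:.3f}, Adjusted R Squared = {adj_r2:.3f}")
```

The recomputed values agree with the table up to rounding: F is about 9.685 for DEGREE, 48.608 for SEX and 0.721 for the interaction. R Squared is about 0.115 and Adjusted R Squared about 0.106.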
- Mean Square for Degree: the variability of the sample means of the five degree groups.
- Mean Square for Gender: the variability of the sample means of the two gender groups.
- Error Mean Square: the variability of the observations around their cell means, within the 10 cells (5 degree groups × 2 gender groups). It is a kind of ‘Within Groups Mean Square’.

Remember: if the null hypothesis for an effect is true, the corresponding F ratio is expected to be close to 1. Look at the observed significance level of each F ratio to see whether you can reject the corresponding null hypothesis.

Always look first at a possible interaction effect, since it makes little sense to talk about main effects if there is a significant interaction between the factors. In this example Sig. = 0,577 for DEGREE * SEX, so you cannot reject the null hypothesis that there is no interaction between the two variables. The effect of degree on hours worked seems to be similar for males and females. The absence of a significant interaction tells you that it is reasonable to believe the following: the difference in average hours worked between males and females is the same for all degree categories.

Since you didn’t find an interaction between degree and gender, it makes sense to test hypotheses about the main effects of degree and gender. The F statistic for the degree main effect is 9,685, with an observed significance level of 0,000 (that is, below 0,0005). This means that H0 must be rejected: degree has an effect on the average hours worked. A post hoc test will show which degree groups differ. The F statistic for the gender main effect is 48,608, also with an observed significance level of 0,000. Again H0 must be rejected: gender has an effect on the average hours worked.
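You can check by simulation the rule that an F ratio is expected to be about 1 when the null hypothesis is true. Strictly, under H0 the F ratio follows an F distribution whose expected value is df2 / (df2 - 2), slightly above 1. The Python sketch below is illustrative only. It uses a one-way layout with hypothetical settings: 3 groups of 10 observations, all drawn from the same normal distribution, 4000 repetitions and seed 1. It does not use the GSS data, and the function names `f_statistic` and `mean_f_under_null` are hypothetical.

```python
import random
import statistics


def f_statistic(samples):
    """One-way ANOVA F ratio: between-groups MS divided by within-groups MS."""
    values = [x for s in samples for x in s]
    grand_mean = statistics.fmean(values)
    means = [statistics.fmean(s) for s in samples]
    ss_between = sum(len(s) * (m - grand_mean) ** 2 for s, m in zip(samples, means))
    ss_within = sum((x - m) ** 2 for s, m in zip(samples, means) for x in s)
    df_between = len(samples) - 1
    df_within = len(values) - len(samples)
    return (ss_between / df_between) / (ss_within / df_within)


def mean_f_under_null(k=3, n=10, reps=4000, seed=1):
    """Average F over data sets where all k groups share one population mean (H0 true)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        samples = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(k)]
        total += f_statistic(samples)
    return total / reps


df_within = 3 * 10 - 3  # N - k for the default settings
print("simulated mean F:", round(mean_f_under_null(), 3))
print("theoretical mean df2/(df2 - 2):", round(df_within / (df_within - 2), 3))
```

With df2 = 27 the theoretical mean is 27/25 = 1.08, and the simulated average should land close to it. For the Error df of 890 in the two-way example, df2 / (df2 - 2) is practically 1.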