DEVELOPING ASSESSMENT LITERACY

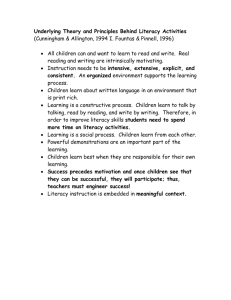


Centre for Research in English Language Learning and Assessment (CRELLA)
Dr Lynda Taylor

Overview
• 1: Introduction to Assessment Literacy
• 2: Principles and Practice of Language Assessment

Introduction to Assessment Literacy

Definitions of ‘literacy’?
• What do we mean/understand by the word ‘literacy’?

lit·er·a·cy n.
1. The condition or quality of being literate, especially the ability to read and write.
2. The condition or quality of being knowledgeable in a particular subject or field: cultural literacy; academic literacy

Definitions of ‘academic literacy’?
• the ability to read and write effectively within the college context in order to proceed from one level to another
• the ability to read and write within the academic context with independence, understanding and a level of engagement with learning
• familiarity with a variety of discourses, each with their own conventions
• familiarity with the methods of inquiry of specific disciplines

An age of literacy (or literacies)?
• ‘academic literacy/ies’
• ‘computer literacy’
• ‘civic literacy’
• ‘risk literacy’

What do we understand by ‘risk literacy’?

‘Risk literacy’ has been defined as…
• ‘a basic grasp of statistics and probability’
• ‘statistical skills to make sensible life decisions’
But why might it be useful/important?

Prof David Spiegelhalter (2009) on risk literacy:
• ‘critical to choices about health, money and even education’
• ‘as the internet transforms access to information, it is becoming more important than ever to teach people how best to interpret data’
• ‘…we should essentially be teaching the ability to deconstruct the latest media story about a cancer risk or a wonder drug, so people can work out what it means. Really, that should be part of everyone’s language…’

Some key words/concepts so far…
• ability (to read + write)
• knowledge
• skills
• subject/field/discipline
• discourses
• conventions/methods
• understanding
• level of engagement
• ‘basic grasp’
• ‘access to information’
• ‘teach people how best to interpret data’
• ‘within a context’
• ‘critical to choices’
• ‘skills to make sensible life decisions’
• ‘ability to deconstruct’
• ‘work out what it means’
• ‘part of everyone’s language’

‘Assessment literacy’ …
• ‘a basic grasp of numbers and measurement’
• ‘interpretation skills to make sensible life decisions’
But why is it necessary or important? And who needs it?

The background (1)
• The growth of language testing and assessment worldwide
  – public examinations systems at national level
  – primary, secondary and tertiary levels
  – alignment of testing systems with external frameworks, e.g. CEFR, CLB
  – measures of accountability
  – other socio-economic drivers, e.g. immigration, employment

The background (2)
• Growing numbers of people involved in language testing and assessment
  – test takers
  – teachers and teacher trainers
  – university staff, e.g. tutors & admissions officers
  – government agencies and bureaucrats
  – policymakers
  – general public

‘Language testing has become big business…’ (Spolsky 2008, p 297)

But… how ‘assessment literate’ are all those people nowadays involved in language testing and assessment across the many different contexts identified?
Assessment literacy involves…
• an understanding of the principles of sound assessment
• the know-how required to assess learners effectively and maximise learning
• the ability to identify and evaluate appropriate assessments for specific purposes
• the ability to analyse empirical data to improve one’s own instructional and assessment practices
• the knowledge and understanding to interpret and apply assessment results in appropriate ways
• the wisdom to be able to integrate assessment and its outcomes into the overall pedagogic/decision-making process

Assessment literacy involves…
Skills + Knowledge + Principles

What are people’s attitudes to tests and test scores, and what is their experience of assessment?
• Students
• Language teachers
• Educational boards
• Politicians and policy-makers

‘… an oversimplified view of the ease of producing meaningful measurement is held by the general public and educational administrators alike …’ (Spolsky 2008, p 300)

Barriers to assessment literacy?
• Negative emotions/fear of numbers?
• A very specialised/technical field? (professionalisation of language testing)
• Too time-consuming?
• Insufficient resources?

Principles and practice of language assessment

Aim of language assessment
• To measure a latent (largely unobservable) trait
• But how?
  – Aim = to make inferences about an individual’s language ability
  – based on observable behaviour(s)
  – to which ‘test scores’ are attached
• The process of ‘score interpretation’ involves giving meaning to numbers

The over-arching principle of test ‘utility’/‘usefulness’
• Test purpose must be clearly defined and understood
• Testing approach must be ‘fit for purpose’
• There must be an appropriate balance of ‘essential test qualities’ (such as validity and reliability)

Defining sub-principles of usefulness
Usefulness = Reliability + Construct validity + Authenticity + Interactiveness + Impact + Practicality (Bachman and Palmer 1996)

Bachman and Palmer (1996)
• LANGUAGE USE
  – Characteristics of the language use task and situation
  – Characteristics of the language user: topical knowledge, affective schemata, language ability
• LANGUAGE TEST PERFORMANCE
  – Characteristics of the test task and situation
  – Characteristics of the test taker: topical knowledge, affective schemata, language ability

Weir (2005)
• A socio-cognitive framework for test development and validation, embracing:
  – Test taker characteristics
  – Cognitive validity
  – Context validity
  – Scoring validity
  – Consequential validity
  – Criterion-related validity

Testing/assessment cultures can shape both principles and practice
• The role of historical and social context
• USA tradition
  – psychometric focus
  – ‘objective’ test formats (MCQ)
• UK tradition
  – interface with pedagogy
  – focus on validity (task-based)

Some current issues for debate in the assessment community
• Washback and impact
• Accountability and ethical testing
• Technological developments
• Developments in linguistics

Language testing/assessment
• A historical, educational, sociological, technological phenomenon
  – a good example of a ‘complex system’
• An ‘inherently interdisciplinary venture’
• Assessment ‘serves other disciplines, and vice versa’ and ‘the boundaries between theory and application are increasingly blurred’

This 2-week course is designed to help develop your assessment literacy
• ability
• knowledge
• skills
• subject/field/discipline
• discourses
• conventions/methods
• understanding
• level of engagement
• ‘basic grasp’
• ‘access to information’
• ‘teach how best to interpret data’
• ‘within a context’
• ‘critical to choices’
• ‘skills to make sensible decisions’
• ‘ability to deconstruct’
• ‘work out what it means’
• ‘part of the language’

Enjoy your time here at CRELLA developing your assessment literacy!