Marjorie McColm - Fleming College

advertisement

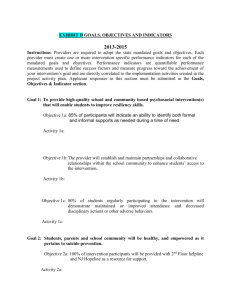

Performance Funding: A Study of Performance Funding of the Ontario Colleges of Applied Arts and Technology

Doctor of Education Thesis Proposal, January 27, 2001

Submitted by: Marjorie McColm

Thesis Supervisor: Dr. Daniel Lang, Theory and Policy Studies in Education, Ontario Institute for Studies in Education/University of Toronto

Table of Contents

- Abstract
- Introduction
- Purpose
- Objectives/Research Questions
- Literature Review
- Research Questions
- Research Methodology
- Limitations
- References
- Bibliography
- Appendices

Abstract

In 1999 the Province of Ontario implemented performance funding in the community college sector, and in 2000 it extended performance funding to the university sector. The impetus behind performance funding was to ensure that the post-secondary sector was held accountable for its use of public resources. This thesis examines how, and in what ways, the introduction of performance funding has affected the Ontario Colleges of Applied Arts and Technology (CAATs). Drawing on a review of the literature, case studies of four colleges, and interviews with key informants in the college sector, the study examines how performance funding has affected both day-to-day decision making and the long-term strategic direction of the CAATs. It also examines performance funding in other jurisdictions and how those systems compare with Ontario's. The results will inform policy makers and decision makers about the impact of performance funding on post-secondary institutions.
Introduction

In the nineteen sixties, systems were developed in response to the need to determine and allocate resources for a rapidly growing post-secondary education sector (Kells, 1992). According to Kells, by the mid-nineteen seventies both the United Kingdom and the United States were using these systems to compare costs and determine appropriate funding levels, in response to enrolment and resources that were static or declining. The focus of management was to make strategic choices that would improve quality and efficiency (Alexander, 2000), and there was a need to measure goal attainment and institutional performance. These systems led to the development of performance indicators.

Originally, performance indicators were internal measures intended to help administrators improve the quality and efficiency of their own institutions. By the mid-nineteen eighties, however, the context and interest had shifted to governments using performance indicators to evaluate individual institutions and higher education systems as a whole. Governments wanted to measure the benefits of all government expenditures and were interested in the economic benefits of their investment in higher education, particularly in jurisdictions where reliance on government funding was high and resources were inadequate. In countries that perceived their resources to be adequate, interest in performance indicators was low (Kells, 1992). To further encourage the achievement of specific outcomes, governments began to tie funding to performance. In the United States, performance funding began in Tennessee in 1975, and by 2000, 17 states had some form of performance funding for their public colleges and universities (Burke, Rosen, Minassians, & Lessard, 2000).
Burke et al. (2000) also found that 28 states were practising performance budgeting, using results on performance indicators as one factor in setting higher education budgets.

The Canadian context for higher education in the 1970s and 1980s was similar to that of the United States: participation rates grew rapidly, expenditures increased substantially, and funding was often tied to enrolment. Previously, accountability to government in higher education involved reporting on effort and activity (ACAATO, 1996). In the new paradigm, a portion of funding is tied to achievement and results. As in other jurisdictions, provincial governments were looking for methods to promote efficiency and effectiveness in higher education. Alberta was the first province in Canada to introduce performance funding in post-secondary education, in 1997. Ontario implemented performance funding in the community college sector in 1999 and in the university sector in 2000.

In 2000, $7 million (1%) of the $738 million community college General Purpose Operating Grant, plus $7 million from the Special Purpose Grant, was allocated according to how each college performed on three indicators: the graduate employment rate six months after graduation, graduate satisfaction with the education received, and employer satisfaction with the education graduates received. This share will increase to 4% in 2001-2002 and 6% in 2002-2003. The universities received performance funds (1% of the operating grant) based on their results on three indicators: graduation rates, the employment rate six months after graduation, and the employment rate two years after graduation (Ontario, 2000). In each sector the money is divided evenly among the three indicators. Institutions that fall in the top third of measured performance on an indicator receive two-thirds of the allocation for that indicator.
Institutions in the second third receive the remaining one-third, and institutions in the bottom third receive nothing.

Whether this is new money or a reallocation of existing funds is an important dimension of performance funding. If institutions perceive it as new money to be earned, they will consider themselves in a win-neutral situation; if they perceive it as a reallocated base grant, they will consider the environment win-lose. Because the 15% cut in the general purpose grant in 1992 was never restored, and subsequent increases have not kept pace with inflation, colleges and universities may not perceive the money used for performance funding as new money, particularly since the performance money is not added to the base and must be re-earned each year.

Performance indicators and the funding tied to them become an expression of government policy and of what government, and ultimately society, values. From the government's perspective, the goal of performance funding in the post-secondary sector is to change how institutions behave by holding them accountable for the government's specific priorities. According to Burke et al. (2000), the true test of performance funding is whether it improves the performance of colleges and universities, and this will be achieved only if the colleges and universities "consider performance in internal allocations to their departments and divisions which are largely responsible for producing the desired results." The performance indicators chosen by government may therefore influence what institutions value and where they allocate their resources.

Several models may explain changes in the behaviour of post-secondary institutions. Birnbaum (1983) cites four. The first is the evolutionary model.
Post-secondary institutions evolve by interacting with the community and adapting to enrolment patterns, fads, and trends. The second is the resource dependence model, in which the institution changes its behaviour to acquire additional resources, for example by expanding its technology programs to take advantage of government incentive funding. The third is the natural selection model, in which the institutions most able to adapt to changes in the environment survive. A liberal arts college that expands its offerings to meet the vocational needs of the community is an example of adapting to the environment; finding one or several niches may contribute to an institution's survival and growth. The fourth is the social/intellectual model, in which the discipline of study itself changes. An example is the change in the nursing profession that requires a university degree instead of a college diploma for entry to practice.

Birnbaum (1983) notes that it is very difficult for post-secondary institutions to make dramatic changes to their mission, organisation, or structure. Internally, institutions have few discretionary dollars to hire new faculty or buy equipment for new programs; they lack good, reliable information for decisions that would move the institution in new directions; and they face natural resistance to change as well as inflexible operating procedures and contracts such as faculty collective agreements. External barriers include legal and fiscal requirements, such as legislation restricting fund-raising; the external environment's perception of the institution; and the fact that strategies that work for one institution do not always work for another.
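
The Ontario allocation rule described earlier in this Introduction (each sector's pool divided evenly among three indicators; on each indicator the top third of institutions receives two-thirds of that indicator's money, the second third the remaining one-third, and the bottom third nothing) can be illustrated with a short Python sketch. It is a hypothetical model, not the Ministry's formula: the function names are invented, and because the source does not say how money is shared within a tier, how ties are handled, or how thirds are formed when the number of institutions is not divisible by three, the sketch assumes equal shares within a tier, ties broken by sort order, and tiers assigned by rank position.

```python
# Hypothetical sketch of a tiered, competitive performance-funding allocation.
# Assumptions not stated in the source: institutions in the same tier share
# that tier's money equally, ties are broken by sort order, and tiers are
# assigned by rank position (tier = rank * 3 // n).

TIER_SHARE = {0: 2 / 3, 1: 1 / 3, 2: 0.0}  # top, second and bottom third


def tier_of(rank: int, n: int) -> int:
    """Map a 0-based rank among n institutions to tier 0, 1 or 2."""
    return rank * 3 // n


def allocate(pool: float, scores: dict[str, dict[str, float]]) -> dict[str, float]:
    """Split `pool` evenly across indicators, then pay institutions by tier.

    `scores` maps each indicator name to {institution: measured result}.
    """
    per_indicator = pool / len(scores)
    payout: dict[str, float] = {}
    for results in scores.values():
        # Highest result first; sorted() is stable, so ties keep input order.
        ranked = sorted(results, key=results.get, reverse=True)
        tiers: dict[int, list[str]] = {0: [], 1: [], 2: []}
        for rank, inst in enumerate(ranked):
            tiers[tier_of(rank, len(ranked))].append(inst)
            payout.setdefault(inst, 0.0)
        for tier, members in tiers.items():
            if members:  # a tier is empty when fewer than three institutions compete
                share = per_indicator * TIER_SHARE[tier] / len(members)
                for inst in members:
                    payout[inst] += share
    return payout


if __name__ == "__main__":
    # Invented results for six hypothetical colleges, identical on all indicators.
    results = dict(zip("ABCDEF", [90, 88, 80, 78, 70, 65]))
    scores = {
        "graduate_employment": results,
        "graduate_satisfaction": results,
        "employer_satisfaction": results,
    }
    for college, amount in allocate(9.0, scores).items():  # pool in $ millions
        print(college, round(amount, 2))
    # Expected: A and B 3.0 each, C and D 1.5 each, E and F 0.0
```

With six invented colleges ranked the same way on every indicator, the two leaders collect two-thirds of the pool and the two laggards nothing. Because the pool is fixed, any gain in one college's share comes out of another's, which is the win-lose dynamic that arises when institutions see the money as reallocated base grant.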

This thesis will determine whether the implementation of performance indicators and performance funding has influenced the behaviour of the Ontario Colleges of Applied Arts and Technology and, if so, will examine the nature of those changes. It will contribute to theoretical and practical knowledge of accountability in two ways: it will provide an objective analysis of the influence of performance indicators on the activities of the colleges from an accountability perspective, and it will link theories of accountability structures to actual behaviour.

Purpose

The purpose of this study is to examine the nature and significance of the key performance indicators and performance funding implemented by the Government of Ontario, and to investigate and describe how, and in what ways, performance funding has influenced the behaviour of the Ontario Colleges of Applied Arts and Technology.

Objectives/Research Questions

1. How do the characteristics of the Ontario performance indicators compare with those of performance indicators in other jurisdictions?
2. Which performance indicators were tied to funding, and why?
3. Do the indicators treat the college sector and the university sector in the same manner?
4. How did the criteria for success, the weighting of the indicators, the manner in which funds were allocated, and the amount of funding allocated to performance affect the operation of the institutions?
5. How did the implementation of performance indicators and performance funding affect mission, strategic plans, budgeting processes, program offerings, services for students, and internal accountability processes?

Literature Review

Performance Funding, Accountability and Governance

Accountability is the stewardship of public funds.
There are many ways in which an individual institution of higher education, or the higher education system as a whole, can demonstrate accountability. Sid Gilbert of the University of Guelph defined accountability as "the provision of (agreed upon) data and information concerning the extent to which post-secondary institutions and the post secondary system are meeting their collective goals and responsibilities, including the effective use of scarce public resources" (as cited in ACAATO, 1996). Who defines the outcomes, goals, and responsibilities: the institution, the community, the government, or the industry partner? Dennison (1995) suggests that colleges are accountable to a long list of stakeholders (students, staff, taxpayers, government, employers, and communities), each with different expectations of what an institution of higher education can and should do. Wagner (1989), in Accountability in Education, theorises that these complex relationships dictate both the degree of accountability and the form it will take. At times these expectations conflict. For example, internal stakeholders such as faculty may owe their allegiance to their field of study rather than to the institution, whereas the government may want the institution's activity to be aligned with its human resource needs. Governments often look for short-term solutions to meet immediate needs, while the institution may view education from a broader, longer-term societal perspective.

In the past, grants for universities and colleges were usually based on enrolment. When government wanted to influence how resources were allocated, it offered some form of incentive funding, such as grants for technology development. Many observers (Serban, 1998a; Trow, 1994; Bruneau & Savage, 1995; Ashworth, 1994; Kells, 1992) speculate that as competition for public resources intensified, budgetary mechanisms shifted towards outcomes assessment and performance funding. This move to external accountability, and to tying funding to performance, has changed the nature of governance in higher education (HE). Institutions become less autonomous and more controlled by government; they lose their ability to develop their own missions and set their own goals, and they become more alike.

The mission statement of an institution or system is usually a broad statement describing its educational philosophy and purpose. It provides a point of reference for performance indicators and is seen as essential to their development (Minister's Task Force on University Accountability, 1993; Doucette & Hughes, 1990). From the mission statement, specific goals can be developed that indicate the direction for the system or institution, and it makes the most sense to construct performance indicators that measure the achievement of those goals (Ball & Halwachi, 1987). It could be argued that with performance budgeting the mission of the system or institution will have to conform to the demands of the indicators tied to funding, that institutions will eventually lose their unique missions and goals, and that the system will become more homogeneous (Ashworth, 1994; Ewell, Wellman, & Paulson, 1997).

Institutions of higher education often view external accountability and the implementation of performance indicators and performance funding with great skepticism. The emphasis on accountability in HE was born in a period of fiscal restraint, so HE is suspicious of government's motives (Sizer, Spee, & Bormans, 1992).
It is also felt that performance funding is a way for governments to justify cuts and to give more power and control to government and its bureaucracy. This skepticism is reinforced by the difficulty of defining what accountability means in HE. Hufner (1991) proposes that accountability in HE is a multidimensional concept: it may operate at the system or institutional level; it can be internal or external; and it can be function-specific, covering teaching and learning, research, and administrative and fiscal matters. The Minister's Task Force on University Accountability (1994) suggests that HE should also be held accountable for how it responds to community needs and initiatives. Trow (1994) argues that the only effective accountability is internal accountability, and that the need for external accountability arose from the mass expansion of universities and the dilution of internal controls. With the expansion of distance education and new technological learning environments, Ewell et al. (1997) would argue that external control and accountability become even more necessary as the capacity for internal control is diluted further. When the HE world was smaller, accountability and the measurement of quality were based on the reputation and resources of the institution; with the rapid growth and change in higher education, these two measures have become even less useful.

Developing Performance Indicators for Performance Funding

From his study of the use of indicators in Denmark, the United Kingdom, the Netherlands, Norway, and Sweden, Sizer (1992) argues that performance indicators (PIs) are most successful when HE institutions and government cooperate in developing them. Sizer outlines the following best practices for the development of performance indicators.
First, the government should clearly specify its objectives and policies for higher education. It should work towards consensus with HE institutions on the criteria for assessing achievement. To achieve institutional acceptance, the government must be clear, before implementing the performance measures, about how it intends to use the indicators. There should be ongoing dialogue as the indicators are implemented and revised. The government should support institutions in developing the information-gathering capacity needed to build the required database, and should recognise that not all data need be used by the national government; some data may be used by the institution itself. If performance indicators are going to be used to allocate resources, the process must be clear and transparent. Finally, teaching quality assurance systems should be developed within the institution.

Burke, Modarresi, and Serban (1999), who studied performance indicators and performance funding in the United States, support many of Sizer's conclusions about accountability programs. They found that voluntary, unmandated programs were more likely to be successful than mandated, prescribed ones. Programs must also have continuing support and respect the autonomy of institutions and the diversity of their mandates. Stable performance funding programs encourage collaboration among all stakeholders, stress quality over efficiency, and have eight to fifteen indicators. Ideally, funding tied to performance is in addition to base funding and is discretionary rather than targeted. The policies and priorities guiding the program must continue long enough for institutions to produce results.

Polster and Newson (1999) argue that by participating in the development of performance indicators, universities and colleges have been co-opted, in the belief that their participation will make the measures more meaningful and less harmful. In their view, academic institutions should instead be challenging the use of accountability as a basis for decision making: "Performance indicators open up the routine evaluation of academic activities to other than academic considerations, they make it possible to replace substantive judgements with formulaic and algorithmic representations" (Polster & Newson, 1999).

The Characteristics of Performance Indicators

Input/Process/Output/Outcomes

One common approach to developing performance indicators uses the production model of inputs, process (or throughputs), outputs, and outcomes (David, 1996; ACAATO, 1996). Inputs are resources, and input indicators generally measure quantity. Process, or throughput, is how the service is delivered, and process indicators measure quality. Outputs are results and are quantitative; outcomes are longer-term goals and are usually qualitative (Association of Colleges of Applied Arts and Technology of Ontario, 1996). Because the four parts of the model are interdependent, it is believed that true accountability requires indicators that measure the objectives in each area (Hufner, 1991; Scott, 1994).

In an attempt to find the indicators that would have the most impact, McDaniel (1996) conducted an opinion survey of eight interest and expert groups from Western European countries. Participants were presented with 17 performance indicators and asked to rate which of them could be identified as relevant quality indicators. Eleven of the 17 were rated as significant indicators.
Through further analysis, McDaniel determined which indicators would prompt stakeholders to act and would therefore have an impact on the institution. The following table gives examples of input, process, output, and outcome indicators. Indicators marked with * are those that McDaniel's stakeholders believed would have a high or medium impact on institutional behaviour.

Table A: Examples of Input, Process, Output, and Outcome Indicators

| Indicator type | Examples |
|---|---|
| Input | Market share of applicants; Student/faculty ratio; Funding per student; Amount and quality of facilities; Percentage of staff with Ph.D.*; Cost per student |
| Process | Student workload; Faculty workload; Diversity of programs/delivery modes; Class size; Student evaluation of teaching/student satisfaction*; Peer review of curriculum content and teaching*; Peer review of teaching* |
| Output | Graduation rates; Persistence to graduation; Number of graduates passing licensing exams; Ph.D. completion rates* |
| Outcome | Employer satisfaction with graduates*; Job placement; Reputation of the college as judged by external observers; Graduate satisfaction |

Adapted from Bottrill and Borden (1994), pages 107-117.

Relevance, Reliability, Accessibility and Clarity

The report of the Minister's Task Force on University Accountability (1994) proposes that each indicator be tested against the following questions.

Relevance: Does the indicator actually measure what it claims to measure? Being able to calculate student contact hours, for example, may or may not tell you anything about faculty workload. To be relevant, "the indicator must also be useful to those concerned with managing that activity" (Ball & Halwachi, 1987).

Reliability: Can the data be collected reliably from year to year?

Accessibility: Are the data easily available at a reasonable cost?

Clarity: Are the information collected and its interpretation understandable and not open to misunderstanding?
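
The Task Force's four questions, combined with the production-model argument that a complete set of indicators should cover inputs, process, outputs, and outcomes, can be expressed as a simple screening step. The following Python sketch is illustrative only: the `Indicator` record, its yes/no fields, and the example judgements are assumptions, and in practice each test calls for judgement rather than a single flag.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    """Stages of the production model."""
    INPUT = "input"
    PROCESS = "process"
    OUTPUT = "output"
    OUTCOME = "outcome"


@dataclass
class Indicator:
    # Hypothetical record: each boolean stands for one of the four tests.
    name: str
    stage: Stage
    relevant: bool    # measures what it claims and is useful to managers
    reliable: bool    # can be collected consistently from year to year
    accessible: bool  # available at a reasonable cost
    clear: bool       # understandable and not open to misinterpretation

    def passes(self) -> bool:
        return self.relevant and self.reliable and self.accessible and self.clear


def screen(candidates: list[Indicator]) -> tuple[list[Indicator], set[Stage]]:
    """Keep indicators that pass all four tests and report uncovered stages."""
    kept = [ind for ind in candidates if ind.passes()]
    missing = set(Stage) - {ind.stage for ind in kept}
    return kept, missing


if __name__ == "__main__":
    candidates = [
        Indicator("Funding per student", Stage.INPUT, True, True, True, True),
        Indicator("Class size", Stage.PROCESS, True, True, True, True),
        Indicator("Graduation rates", Stage.OUTPUT, True, True, True, True),
        # Illustrative judgement: contact hours may say little about faculty
        # workload, so this measure is marked as failing the relevance test.
        Indicator("Student contact hours", Stage.PROCESS, False, True, True, True),
    ]
    kept, missing = screen(candidates)
    print([ind.name for ind in kept])  # ['Funding per student', 'Class size', 'Graduation rates']
    print(sorted(stage.value for stage in missing))  # ['outcome']
```

In the example the contact-hours measure is dropped on relevance grounds, and the remaining set has no outcome indicator, which under the production-model view would leave a gap in accountability.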

What must be avoided is calling anything to which a number can be attached a performance indicator (Association of Universities and Colleges of Canada, 1995). Once a piece of information becomes an indicator, it takes on a new status. For an indicator to be meaningful, it must be capable of measuring the objectives to be achieved (Sizer, Spee, & Bormans, 1992). The intended audience also plays a role in determining which indicators are used and the level of reporting: system, institution, or program. In Ontario, for example, the college system must report the results of three indicators (graduate employment, student success, and the OSAP default rate) at both the institutional and the program level. The institutional level is used for performance funding; the program level informs students and helps them make program choices. In this case the same indicators are reported at different levels to meet the needs of two different audiences, the government and students. Simplicity is another valued characteristic. An illustrative example is found in Ashworth (1994): "Goal: Increase the number of minority students enrolled in Texas institutions of Higher Education. Measure: The number of Hispanic, African-American and Native American U.S. citizens enrolled."

Choosing Performance Indicators for Performance Funding

The number of indicators developed is enormous. A research group at the University of Limburg identified 20 quantitative and 50 or more qualitative indicators that it believed to be reliable and valid (Sizer, Spee, & Bormans, 1992), and Bottrill and Borden (1994) list more than 300 possible indicators. How do governments and institutions of higher education (IHEs) decide which indicators should be used? "Picking the performance indicator constitutes the most critical and controversial choice in performance funding" (Burke, 1998).

In her study of nine states that use performance indicators to determine funding, Serban (1997) found that selecting the indicators was very difficult and controversial: "The diversity of institutional missions, the elusive nature of higher education quality, and prioritisation of higher education goals are just a few of the challenges in establishing an appropriate system of performance indicators. The performance indicators that are tied to funding will relate to the priorities and values of the policy makers."

Joseph Burke (1998) examined the indicators used for performance funding in eleven U.S. states. The comparison was difficult because the number of indicators, their content, and their wording differed from state to state. The eleven states took seven different approaches to developing indicators for their four-year and two-year institutions. Some used identical indicators for both; others added indicators for particular types of institutions, and some for individual institutions. Some states had mandated indicators, some had optional indicators, and some had a combination of the two. Burke compared the indicators used for performance reporting with those used for performance funding. He found that both focused overwhelmingly on undergraduate education, and that the most common indicators in both lists were retention and graduation rates, transfers from two- to four-year colleges, job placement, and faculty teaching load. Significantly, the states did not use access indicators, such as ethnicity and gender, as funding indicators. They also showed little interest in production indicators, such as degrees granted in total or in a particular discipline. The funding indicators also introduced new policy issues, such as distance education and the use of technology. The most common indicators, those used by four or more states, were output or outcome indicators.
The number of indicators used for funding in the eleven states ranged from a low of three to a high of 37. Lazell (1998) cautions against using too many performance indicators: many indicators dilute the importance of each one, and the more indicators are used, the greater the probability that some will measure conflicting goals. Lazell cites the example of an indicator that supports high access, which may not be compatible with an indicator that rewards high graduation rates.

The table below shows the performance funding indicators that Burke found to be common to four or more states. The indicators appear to represent all areas of the production model.

Table B: Indicators Used by Four or More States for Performance Funding

Key: # input indicator; * process indicator; † output indicator; ‡ outcome indicator. The number in parentheses is the number of states using the indicator.

| Baccalaureate institutions | Two-year colleges |
|---|---|
| Retention and graduation rates (10) † | Retention and graduation rates (8) † |
| | Job placement (8) ‡ |
| Two-to-four-year transfers (6) # | Two-to-four-year transfers (6) † |
| Faculty workload (5) * | Faculty workload (4) * |
| Institutional choice (5) * | |
| Graduation credits and time to degree (4) † | Graduation credits and time to degree (4) † |
| Job placement (4) ‡ | |
| Licensure test scores (4) ‡ | Licensure test scores (4) ‡ |
| Transfer graduation rates (4) † | |
| Workforce training and development (4) * | Workforce training and development (4) * |

Adapted from Burke (1998), page 53.

Performance Funding Criteria, Weighting of Indicators, Allocation Methods and Levels of Funding

Once the performance indicators for funding are selected, there are other policy considerations. The first is how success is determined. Serban (1998b) found that the common methods for determining success were institutional improvement over time, comparison with peer institutions, and comparison with pre-set target standards or benchmarks.
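
The three ways of determining success that Serban (1998b) identifies can be made concrete as simple pass/fail tests. In this Python sketch the function names are hypothetical, "comparison with peer institutions" is assumed to mean scoring above the peer-group median, and the employment rates are invented for illustration.

```python
from statistics import median


def improved(current: float, previous: float) -> bool:
    """Improvement over time: did the institution beat its own prior result?"""
    return current > previous


def beats_peers(current: float, peer_results: list[float]) -> bool:
    """Peer comparison, assumed here to mean scoring above the peer median."""
    return current > median(peer_results)


def meets_benchmark(current: float, benchmark: float) -> bool:
    """Pre-set standard: did the institution reach a fixed target?"""
    return current >= benchmark


if __name__ == "__main__":
    # Invented graduate-employment rates (percent) for one institution.
    current, previous = 86.0, 83.0
    peers = [84.0, 88.0, 90.0, 81.0]
    print(improved(current, previous))     # True
    print(beats_peers(current, peers))     # False: the peer median is 86.0
    print(meets_benchmark(current, 85.0))  # True
```

The same result counts as a success under two of the rules and a failure under the third, which shows how much the choice of criterion matters.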
The approach that appears best to promote improvement across all the institutions in a system, while recognising each institution's individual strengths and weaknesses, combines measuring institutional improvement over time with comparison to peer institutions or performance benchmarks (Serban, 1998b).

The allocation process also involves applying a weight to each indicator. The least controversial approach is to give every indicator an identical weight, which implies that all indicators are of equal importance. However, in states where the indicators are the same for every institution, this method does not respond to individual institutional missions (Serban, 1998b). To address the issue of mission, Tennessee has ten performance indicators, each worth 10% of the allocation, but one of them is chosen by the institution and is mission-specific (Banta, 1996). If the indicators have different weights, some institutions may concentrate on improving their performance on the more heavily weighted indicators (Serban, 1998b).

Allocation methods are an important factor to consider when setting up a performance funding system. There seems to be consensus that the allocation for performance funding should be in addition to base funding (Serban, 1998b). The amount needs to be limited to guard against instability, but large enough to induce change. "Additional money makes performance funding or budgeting a worthwhile effort. The resulting activities become part of a funded initiative, rather than an unfunded mandate or yet another activity competing for limited resources" (Burke, Modarresi, & Serban, 1999). Serban also found that some states designated the performance funds as discretionary, while others restricted how the funds could be used.
Some states designed programs like Ontario's, in which institutions compete for the funds, creating a system of winners and losers. Others allocated funds to each institution and, if the performance criteria were not met, returned the money to the state's general funds. Other jurisdictions, such as Alberta, set up benchmarks based on past results, and each institution receives funds according to how well it does against those benchmarks.

An alternative to performance funding is performance budgeting. Burke and Serban (1997) found that under this system governments use indicators flexibly to inform budget allocations, in contrast to performance funding, where performance on particular indicators is tied directly to funding allocations. Burke, Rosen, Minassians, and Lessard (2000) found that 28 (56%) state governments were using performance budgeting and 17 (34%) were using performance funding. The authors note that the trend is towards performance budgeting, which is less tightly tied to results. However, because relatively small sums of money are tied to either approach, both have only a marginal impact on institutional budgets. A third method of accountability is performance reporting (Burke, 2000). Periodic reports detailing the performance of colleges and universities on particular indicators may stimulate institutions to improve performance; the reports may go to legislators and the media. In Ontario, for example, colleges and universities must post their student loan default rates on their web sites.

Performance Funding in Florida, Missouri, Ohio, South Carolina and Tennessee

In October 1999 the Rockefeller Institute sent an opinion survey to campus leaders in Florida, Missouri, Ohio, South Carolina, and Tennessee. The purpose of the survey was to gauge the participants' knowledge and use of performance funding and its effects.
The unanalysed results have been posted on the Institute's web site. Each state has a unique system of performance funding; Table C compares some of the characteristics of the five states' systems. The information in the table is from Burke and Serban's (1998) State Synopses of Performance Funding Programs.

Table C: Characteristics of Performance Funding in Florida, Missouri, Ohio, South Carolina and Tennessee

| State | Year adopted | Mandated | Initiated by | Number of indicators | Indicators used for funding | Funding |
|---|---|---|---|---|---|---|
| Florida | 1994 | Yes | Governor, legislature | 3 | 3 | $12 million |
| Missouri | 1991 | No | Co-ordinating board | 7 | 7 | $7.5 million |
| Ohio | 1995 | Yes | Co-ordinating board | 9 | 9 | $3 million |
| South Carolina | 1996 | Yes | Legislature | 34 | 34 | |
| Tennessee | 1979 | No | Co-ordinating board | 10 | 10 | $26.5 million |

A preliminary examination of the data on the web site (Rockefeller Institute of Government, 2000) indicates that campus leaders believe performance funding has increased the influence of senior campus administrators on major decisions affecting the institution. Performance funding is used most in decisions and actions related to institutional planning, and least in academic advising and student services decisions or actions. It has the greatest impact on mission focus and the least impact on faculty performance, faculty-student interaction and graduates continuing their education. The choice of indicators appears to present the greatest difficulty in implementing performance funding, and the high cost of collecting data and budget instability are identified as its disadvantages. In the opinion of the campus leaders, the six most appropriate indicators are accredited programs, graduate job placement, professional licensure exams, employer satisfaction surveys, external peer review, and retention/graduation rates.
The survey participants felt that increased institutional choice and increased funding for performance would improve performance funding. Just over 50% of the respondents believe that performance funding has increased accountability to the state, but only 31% believe that it has improved the performance of their institution. If the bottom line in assessing performance funding is improved institutional performance (Burke, 2000), this is hardly a ringing endorsement.

Performance Indicators and Funding in Alberta

The Province of Alberta has 123,000 students enrolled in 4 universities, 2 technical institutes, 15 colleges and 4 not-for-profit religious university colleges. In 1995 the provincial government mandated the creation of an accountability framework that would measure outputs and outcomes. In consultation with the institutions, seventy-six indicators were developed (AECD, 1996a, 1996b). The accountability framework also created a new funding mechanism to reward institutional performance in providing accessibility, quality and relevance. Fifteen million dollars, about 2% of total post-secondary funding, would be allocated through performance-based funding.

Performance funding is driven by 9 of the 76 indicators: five are common to all institutions and four are used by research universities only (Boberg & Barnetson, 2000). The five common indicators measure three key government goals: responsiveness, accessibility and affordability. Responsiveness was measured by the employment rate of graduates and graduate satisfaction with their educational experience. Accessibility was measured by full-load equivalence (FLE), based on a three-year rolling average and adjusted for urban versus rural institutions.
Affordability, defined as educating the greatest number of students at a reasonable cost to the Alberta taxpayer and student, was measured by examining administrative expenditures and externally generated revenues (Boberg & Barnetson, 2000). The research component has four indicators. Council success rate is the amount of national granting council awards (MRC, NSERC and SSHRC) per full-time faculty member, compared across institutions. Citation impact is the ratio of citations to published papers. Community and industry sponsored research is the amount of such grants per full-time faculty member, and research enterprise is total sponsored research revenue expressed as a proportion of the government grant (Boberg & Barnetson, 2000).

Performance funding is awarded according to each institution's position within predetermined performance corridors. The corridors were determined using the institutions' past performance to develop a set of benchmarks that will change from year to year as performance changes. All institutions falling within a corridor are assigned the same number of points for that indicator. For example, graduate employment is the percentage of graduates employed, plotted on a linear scale; the benchmarks divide this scale into a series of performance corridors such as 60-69%, 70-79%, 80-89% and above 89%. The points for the five indicators are tallied, and funding is awarded based on the total score. The research performance funds are separate and are awarded based on the weighted and combined scores on the four research indicators (Boberg & Barnetson, 2000).

A two-year pilot began in 1996/97. In 1997 all institutions received a 1% increase in their operating grants in recognition of system-wide improvement in productivity. The performance awards were distributed as follows: 8 of 21 institutions received a 1.5% increase, 9 received 0.75% and 4 received no increase.
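The corridor mechanism can be sketched in a few lines. This is a hypothetical illustration, not Alberta's published formula: the corridor boundaries follow the example in the text, but the point values are invented, the same scale is applied to every indicator for simplicity, and the proportional split of the pool is an assumed rule (the 1997 pilot itself awarded tiered increases of 1.5%, 0.75% or nothing). The sketch shows two properties of the design: institutions within a corridor earn identical points, and when the pool is fixed, an institution's award depends on how every other institution scored.

```python
"""Hypothetical sketch: corridor scoring with a fixed performance-funding pool.

The corridor boundaries follow the example in the text (60-69%, 70-79%,
80-89%, above 89%). The point values, the use of one scale for every
indicator and the proportional split of the pool are assumptions made for
illustration; they are not Alberta's published formula.
"""


def corridor_points(rate: float) -> int:
    """Points for one indicator; every rate inside the same corridor scores the same."""
    if rate > 89:
        return 4
    if rate >= 80:
        return 3
    if rate >= 70:
        return 2
    if rate >= 60:
        return 1
    return 0  # assumption: results below the lowest corridor earn no points


def total_points(rates: list[float]) -> int:
    """Tally the corridor points across an institution's indicators."""
    return sum(corridor_points(rate) for rate in rates)


def allocate_pool(pool: float, points: dict[str, int]) -> dict[str, float]:
    """Split a fixed pool in proportion to each institution's points (hypothetical rule)."""
    total = sum(points.values())
    if total == 0:
        return {name: 0.0 for name in points}
    return {name: pool * pts / total for name, pts in points.items()}


if __name__ == "__main__":
    print(total_points([72, 81, 65, 95, 55]))  # 2 + 3 + 1 + 4 + 0 = 10

    pool = 15_000_000  # the pool stays the same from one year to the next
    year1 = allocate_pool(pool, {"A": 10, "B": 10, "C": 10})
    # Every institution improves in year 2, but B and C improve more than A.
    year2 = allocate_pool(pool, {"A": 12, "B": 14, "C": 14})
    print(f"A: {year1['A']:,.0f} -> {year2['A']:,.0f}")  # A: 5,000,000 -> 4,500,000
```

Institution A's score rises from 10 to 12 points, yet its award falls, because the other institutions improved more and the pool did not grow. This is a dynamic of the kind reported for Alberta in 1998, when improved performance coincided with smaller grants.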
In 1998 the institutions' performance improved but their grants decreased, because the $15 million allotted to the program remained static. A review of the mechanism took place in 1998, and the government agreed to increase the performance funding allotment to $16 million in 1999 and $23 million in 2000 (Boberg & Barnetson, 2000).

According to Boberg and Barnetson (2000), performance funding was introduced by the Alberta government to "increase institutional productivity and government influence over institutions," but the nature of performance funding may achieve the opposite. They argue, as does Trow (1994), that critical self-evaluation and internal reform lead to increases in productivity and quality. In contrast, performance funding induces institutions to produce high scores on performance outcomes without any insight into inputs or processes, particularly in a climate of underfunding. They also argue that performance funding creates an artificial market competition among public sector providers, which does not work because of competing political considerations; for example, given access issues and community need, it is highly unlikely that a college or university will go out of business. Finally, they argue that the quantitative framework of performance funding will not capture any decline in quality that performance funding itself may bring about.

The Future of Performance Funding

According to Schmidtlein (1999), there is very little research on the effectiveness of performance funding and budgeting, and he argues that performance funding will fail, as have other notable budget reforms such as zero-based budgeting. This is because performance funding and budgeting programs rest on seven faulty or unrealistic assumptions. First, governments have well-defined and stable policy objectives.
Second, performance indicators are relevant and provide reliable data that allow the highest levels of the bureaucracy to make complex decisions that will improve higher education. Third, the college is operated by the bureaucracy rather than by the professional organisation. Fourth, there is a direct, observable link between inputs and outputs in a complex human endeavour such as education. Fifth, outcomes are easily identified and funding should be tied to their achievement. Sixth, government control through finances is preferable to autonomous institutions, which are not as susceptible to short-term trends and fads. Seventh, performance funding will require institutions to focus more on quality and less on maintaining enrolment.

On the other hand, Burke, Modarresi and Serban (1999) believe that well-developed performance funding programs do lead to institutional improvement. In their view, the biggest flaw is the academic community's reluctance to identify and assess learning outcomes. They believe that "results will continue to count more and more in the funding of public colleges and universities."

Research Questions

The performance funding system for the Ontario CAATS will be compared with those of other jurisdictions that have implemented performance funding programs along the following dimensions:

1. How do the characteristics of the Ontario performance indicators compare with the characteristics of performance indicators in other jurisdictions?
2. Which performance indicators were tied to funding, and why?
3. Do the jurisdictions treat the college sector and the university sector in the same manner?
4. How did the criteria for success, the weighting of the indicators, the manner in which funds were allocated and the amount of funding allocated to performance affect the behaviour of the institution?
5. How did the implementation of performance indicators and performance funding affect mission, strategic plans, budgeting processes, program offerings, services for students and internal accountability processes?
Research Methods

The jurisdictions chosen for comparison will be the Province of Alberta, because it is the only other Canadian province to implement performance funding, and the states of Florida, Missouri, Ohio, South Carolina and Tennessee, which were studied in October 1999 by the Rockefeller Institute. That study asked key stakeholders for their opinions of the effects of performance funding. In the jurisdictions outside Ontario, relevant publications will be consulted, and structured interviews with key government officials and a sample of institutional leaders in each jurisdiction will be used to gather information.

An in-depth study of four Ontario CAATS will be carried out to determine whether, and how, the implementation of performance funding affected mission, strategic plans, budgeting processes and internal accountability processes. The four colleges will be chosen on three criteria: first, diversity in their performance funding results; second, diversity of size and location; and third, willingness to participate in the study. All internally published documents, press releases and other materials related to each college's response to the implementation of the accountability system will be reviewed to determine what changes the college intends to make in how it does its business. In addition, there will be structured interviews with the President, the Chair of the Board of Governors and senior managers of each institution. An attempt will be made to ensure that the interview participants across the four colleges are consistent by position, for example all four presidents, four deans, and so on. There will also be interviews with key informants from the Ministry of Training, Colleges and Universities and from co-ordinating bodies to seek their opinions on how colleges have responded to the implementation of performance funding and what they envision for the future of performance funding in the province.
For the semi-structured interviews, the questions will be partially developed from the Rockefeller Institute's 1999 opinion survey of Florida, Missouri, Ohio, South Carolina and Tennessee. In October 1999 the Rockefeller Institute sent this survey to campus leaders in the five states to examine the participants' knowledge and use of performance funding and its effects. An analysis of this survey has yet to be completed. The survey contained nineteen Likert-type questions about performance funding. In addition, respondents were given an opportunity to provide open-ended comments, and there were three to five questions specific to performance funding issues in each state. Because I will be conducting semi-structured interviews, the Likert scale will not be used. Questions from the Rockefeller study that are relevant to the Ontario environment will be used without change. Other questions from the study will be modified to make them relevant, and new questions relevant to the Ontario experience will be developed. Questions deemed inappropriate or irrelevant to the Ontario experience will be deleted. See the attached questionnaire.

The data from the face-to-face and telephone interviews will be documented through handwritten or word-processed notes and audio-tapes taken by the investigator. The audio-tapes will be used only as a back-up to the written notes. College participants will be identified by their position in aggregate (for example, the presidents of the four colleges) unless permission is given otherwise. The colleges in the case study will be assigned a code. State and provincial officials will be identified only by jurisdiction. In my notes, interviewees will be recorded by code number rather than by name. All data from the interviews will be reported in such a manner as to protect the identity of the interviewee.
The data will be stored in a locked cabinet to protect individual privacy. Individuals will be quoted only where written permission is given. The data will be analysed by sorting the responses into themes and looking for common and divergent opinions.

Limitations

The study may be limited by the willingness, availability and accessibility of the participants. In addition, performance funding in the college sector is a new program, and there has been little opportunity to analyse its long-term viability and effectiveness.

References

Association of Colleges of Applied Arts and Technology of Ontario. (1996). Accountability in a learning centred environment: Using performance indicators reporting, advocacy and planning. Toronto, ON: ACAATO.
Association of Colleges of Applied Arts and Technology of Ontario. (2000, March 10). 2000-01 transfer payment budget/2000-05 tuition fee policy briefing note. Toronto, ON: ACAATO.
Association of Universities and Colleges of Canada. (1995). A primer on performance indicators. Research File, 1(2). Available: http://ww.auc.ca/english/publications/research/jun95.html [1999, April 7].
Ashworth, K. H. (1994). Performance based funding in higher education: The Texas case study. Change, 26(6), 8-15.
Ball, Robert, & Halwachi, Jalil. (1987). Performance indicators in higher education. Higher Education, 16, 393-405.
Banta, T. W., Rudolph, L. B., & Fisher, H. S. (1996). Performance funding comes of age in Tennessee. Journal of Higher Education, 67, 23-45.
Birnbaum, Robert. (1983). Maintaining diversity in higher education. San Francisco: Jossey-Bass.
Boberg, Alice, & Barnetson. (2000). System-wide program assessment with performance indicators: Alberta's performance funding mechanism. The Canadian Journal of Program Evaluation, Special Issue, 2-23.
Bottrill, Karen, & Borden, Victor M. H. (1994). Appendix: Examples from the literature. New Directions for Institutional Research, 82, 107-119.
Bruneau, William, & Savage, Donald. (1995). Not a magic bullet: Performance indicators in theory and practice. Paper presented at the Canadian Society for the Study of Higher Education Annual Meeting, Montreal, PQ.
Burke, J. C. (1998). Performance funding indicators: Concerns, values, and models for state colleges and universities. New Directions for Institutional Research, 97, 49-60.
Burke, J. C., & Serban, A. M. (1998). State synopses of performance funding programs. New Directions for Institutional Research, 97, 25-48.
Burke, J. C., & Modarresi, S. (2000). To keep or not to keep performance funding: Signals from the stakeholders. Journal of Higher Education, 71(4), 432-453.
Burke, J. C., Modarresi, S., & Serban, A. M. (1999). Performance: Shouldn't it count for something in state budgeting? Change, 31, 16-24.
Burke, J. C., & Serban, A. M. (1997). Performance funding and budgeting for public higher education: Current status and future prospects. Albany, NY: The Nelson A. Rockefeller Institute of Government.
Cave, Martin, Kogan, Maurice, & Hanney, Stephan. (1990). In Filip J. R. C. Dochy, Mien S. R. Segers, & Wynand H. F. W. Wijnen (Eds.), Management information and performance indicators in higher education: An international issue (Chapter 1). The Netherlands: Van Gorcum & Comp.
Currie, Jan, & Newson, Janice (Eds.). (1998). Universities and globalization: Critical perspectives. Thousand Oaks, CA: Sage Publications.
David, Dorothy. (1996). The real world of performance indicators. London, England: Commonwealth Higher Education Management Service. (ERIC Document Reproduction Service No. ED416815)
Dennison, John D. (1995). Accountability: Mission impossible. In John D. Dennison (Ed.), Challenge and opportunity: Canada's community colleges at the crossroads (pp. 220-242). Vancouver: University of British Columbia Press.
Doucette, D., & Hughes, B. (Eds.). (1990). Assessing institutional effectiveness in community colleges. Mission Viejo, CA: League for Innovation in the Community College.
Ewell, Peter T., Wellman, Jane V., & Paulson, Karen. (1997). Refashioning accountability: Toward a "coordinated" system of quality assurance for higher education [On-line]. Available: http://www.johnsontdn.org/library/contrep/Reaccount.html [1999, September 1].
Fisher, Donald, & Rubenson. (1998). The changing political economy: The private and public lives of Canadian universities. In Jan Currie & Janice Newson (Eds.), Universities and globalization: Critical perspectives. Thousand Oaks, CA: Sage Publications.
Hufner, K. (1991). Accountability. In P. G. Altbach (Ed.), International higher education: An encyclopedia, Volume 1 (pp. 47-58). New York: Garland Publishing.
Kells, Herb R. (1992). An analysis of the nature and recent development of performance indicators in higher education. Higher Education Management, 4(2), 131-155.
McDaniel, Olaf C. (1996). The theoretical and practical use of performance indicators. Higher Education Management, 8(3), 125-139.
Minister's Task Force on University Accountability. Report of the Committee on Accountability, Performance Indicators and Outcome Assessment. Revised April 29, 1994.
Ontario Government. (1999). Ministry of Training, Colleges and Universities [On-line]. Available: http://www.gov.on.ca/MBS/english/press/plans2000/tcu.html
Polster, Claire, & Newson, Janice. (1998). Don't count your blessings: The social accomplishments of performance indicators. In Jan Currie & Janice Newson (Eds.), Universities and globalization: Critical perspectives. Thousand Oaks, CA: Sage Publications.
Rockefeller Institute of Government. (2000). Performance funding opinion survey of campus groups (1999-2000). Available: http://www.rockinst.org/quick_tour/higher_ed/current_projects.html#performance_funding [2001, January 28].
Schmidtlein, F. A. (1999). Assumptions underlying performance-based budgeting. Tertiary Education and Management, 5, 159-174.
Scott, Robert H. (1994). Measuring performance in higher education. In Joel E. Meyerson & William F. Massy (Eds.), Measuring institutional performance in higher education (Chapter 1). Princeton, NJ: Peterson's.
Serban, A. M. (1998a). Precursors of performance funding. New Directions for Institutional Research, 97, 15-24.
Serban, A. M. (1998b). Performance funding criteria, levels and methods. New Directions for Institutional Research, 97, 61-67.
Serban, A. M. (1997). Performance funding for public higher education: Views of the critical stakeholders. Albany, NY: The Nelson A. Rockefeller Institute of Government.
Sizer, John. (1992). Performance indicators in government-higher institutions relationships: Lessons for government. Higher Education Management, 4, 156-163.
Sizer, John. (1990). Performance indicators and the management of universities in the UK: A summary of developments with commentary. In Filip J. R. C. Dochy, Mien S. R. Segers, & Wynand H. F. W. Wijnen (Eds.), Management information and performance indicators in higher education: An international issue (Chapter 1). The Netherlands: Van Gorcum & Comp.
Sizer, John, Spee, Arnold, & Bormans, Ron. (1992). The role of performance indicators in higher education. Higher Education, 24(2), 133-155.
Trow, Martin. (1994). Academic reviews and the culture of excellence. Studies of Higher Education and Research, 2, 7-40.
Wagner. (1989). Accountability in education. San Francisco: Jossey-Bass.

Bibliography

Accountability study at Columbia University. Accountability of colleges and universities: The essay [On-line]. Available: http://www.columbia.edu/cu/provost/acu/acu/13.html [1996, September 12].
Association of Colleges of Applied Arts and Technology of Ontario. (1999, March 9). Backgrounder to press release of 1997-98 KPI results.
Association of Colleges of Applied Arts and Technology of Ontario. (1996). Accountability in a learning centered environment: Using performance indicators reporting, advocacy and planning. Toronto, ON: ACAATO.
Ball, Robert, & Wilkinson, Rob. (1994). The use and abuse of performance indicators in UK higher education. Higher Education, 27, 417-427.
Barnett, R. A. (1988). Institutions of higher education: Purposes and 'performance indicators'. Oxford Review of Education, 14(1), 97-112.
Benjamin, Michael. (1996). The design of performance indicator systems: Theory as a guide to relevance. Journal of College Development, 37(6), 623-629.
Bernstein, David. (1999). Comments on Perrin's "Effective use and misuse of performance indicators". American Journal of Evaluation, 20(1), 85-93.
Burke, Joseph, & Serban, Andrea M. (1997). Performance funding: Fad or emerging trend? Community College Journal, Dec/Jan, 27-29.
Calder, William Berry, & Calder, M. Elizabeth. (1999). The inescapable reality of assessing college performance. The College Quarterly. Available: http://www.collegequarterly.org/CQ.html/HHH.043.CQ.F95.Calder.html [1999].
Dolence, Michael G., & Norris, Donald M. (1994). Using key performance indicators to drive strategic decision making. New Directions for Institutional Research, 82, 63-80.
Epper, Rhonda Martin. (1999). Applying benchmarking to higher education: Some lessons from experience. Change, Nov/Dec.
Jongbloed, Ben W. A., & Westerheijden, Don F. (1994). Performance indicators and quality assessment in European higher education. New Directions for Institutional Research, 82, 37-49.
Layzell, Daniel T. (1998). Linking performance funding outcomes for public institutions of higher education: The US experience. European Journal of Education, no. 1.
Mayes, Larry D. Measuring community college effectiveness: The Tennessee model. Community College Review, 23(1).
Ministry of Education and Training & Association of Colleges of Applied Arts and Technology of Ontario. (1999, April). kpiXpress: A regular update on the key performance indicators project, 1(3).
Ministry of Education and Training. (1998). Ministry of Education and Training business plan 1998-1999 [On-line]. Available: http://www.gov.on.ca/mbs/english/press/plans98/edu.html [1999, September 4].
Ministry of Education and Training. (1998). Implementing key performance indicators for Ontario's Colleges of Applied Arts and Technology: Final report of the College/Ministry of Education Task Work Groups. Toronto: Ministry of Education and Training.
Monroe Community College. (1998). Campus-wide performance indicators (Report).
Perrin, Burt. (1998). Effective use and misuse of performance measurement. American Journal of Evaluation, 19(3).
Peters, Michael. (1992). Performance accountability in 'post-industrial society': The crisis of British universities. Studies in Higher Education, 17(2).
Pollanen, R. M. (1995). The role of performance indicators as measures of effectiveness in educational institutions: A literature review and potential theoretical framework for Colleges of Applied Arts and Technology of Ontario. Toronto, ON: ACAATO.
Vision 2000: Quality and opportunity. (1990). Toronto, ON: Ontario Ministry of Colleges and Universities.
Watson, David. (1995). Quality assurance in British universities: Systems and outcomes. Higher Education Management, 7(3), 25-37.

Appendix A
Draft of Letter to Be Sent to College Case Study Participants

Marjorie McColm
40 Dunvegan Drive
Richmond Hill, Ontario L4C 6K1
Telephone B. (416) 415-2123
Fax (416) 415 –
E-mail: mmccolm@gbrownc.on.ca

Dear XXXX

Thank you for agreeing to be interviewed on ____________. As I explained in my telephone call, I am a doctoral student in the Department of Theory and Policy Studies at OISE/University of Toronto.
My dissertation will examine the implementation of performance indicators and performance funding for the Ontario colleges of applied arts and technology. The purpose of this study is to examine whether performance funding has influenced the behaviour of the Ontario colleges of applied arts and technology. The data for the study will be collected through semi-structured interviews with key informants, such as yourself, in four colleges; with external stakeholders such as ACAATO and the Ministry of Training, Colleges and Universities; and with key informants in jurisdictions outside Ontario. The questions will focus on how your college has responded to its performance on the KPIs. The interview questions have been pretested with college personnel similar to yourself in non-participating colleges. It should take about an hour to complete the questions.

I will be conducting the interview and taking notes. I would also like to audio-tape the interview to ensure the accuracy of the notes; however, if you are uncomfortable with this, it is not necessary. Please note that you are free to end the interview at any point.

The research proposal has been through an ethical review. Please be assured that your responses will be kept confidential. No individual or college will be identified in the data reported. Responses may be identified by generic positions; for example, "senior administrators have divided opinions regarding …". All data collected will be kept in a locked filing cabinet at my home and destroyed five years after the completion of my dissertation.

Attached is a consent form; please complete it and fax a signed copy back to me at 416-415-8888. If you have any questions regarding the project, please don't hesitate to contact me.

Thank you again for participating in the study. I believe that it will generate some interesting and important data about KPIs and performance funding. I look forward to meeting you.
Marjorie McColm

Appendix B
Draft Consent Form for Interview with College Personnel

Marjorie McColm
40 Dunvegan Drive
Richmond Hill, Ontario L4C 6K1
Telephone B. (416) 415-2123
Fax (416) 415 –
E-mail: mmccolm@gbrownc.on.ca

March 2001

I ____ agree ____ do not agree to be interviewed regarding Key Performance Indicators and Performance Funding. I understand that the data collected during this interview will be incorporated into the dissertation. I note that I am free to terminate this interview at any time.

Name: ___________________
Signature: ______________________
Date: ___________________________

Appendix C
Draft of Questionnaire for College Personnel in Four Case Studies

Semi-Structured Interview Questionnaire for College Personnel

Information Collected before the Interview

1. Descriptive Information
1.1 Name of college
1.2 Address
1.3 Number of students
1.4 Number of programmes
1.5 Reputation programs
1.6 Name and title

2. Results on KPIs

| Year | Graduation rate | Rate of employment after 6 months* | Graduate satisfaction* | Employer satisfaction* | Student satisfaction | OSAP repayment |
|---|---|---|---|---|---|---|
| 1999 | | | | | | |
| 2000 | | | | | | |
| 2001 | | | | | | |

(*) Funding indicators. Ranking for performance funding: top third, middle third or bottom third.

Performance Funding

| Year | Rate of employment after 6 months ($ earned) | Graduate satisfaction ($ earned) | Employer satisfaction ($ earned) | % of total budget |
|---|---|---|---|---|
| 2000 | | | | |
| 2001 | | | | |

3. Are you aware of your KPI results?
4. Were they what you predicted? What surprised you?
5. What is your view of the results?
6. Which KPI has given you the best information for decision making?
7. In your opinion, which KPI has had the most influence on your institution?
8. Who in the college receives the results? To what extent does your institution disseminate its results on performance funding indicators to campus units or constituencies?
9. In what format are the results disseminated to your constituency?
10. What are they expected to do with the results?
11. To what extent are the institutional results on performance funding indicators used for decisions and actions in the following areas? Academic advising; administrative services; admissions; curriculum and program planning; faculty workload; institutional planning; internal budget allocations; student outcomes assessment; student services.
13. What is your estimate of the impact of performance funding on the following factors at your institution? Administrative efficiency; faculty performance; faculty-student interaction; graduates continuing their education; graduates' job placement; inter-institutional co-operation; mission focus; quality and quantity of student learning.
14. How has the money received from performance funding been allocated? Special projects; base budgets.
15. To what extent do the following represent an advantage of performance funding in Ontario? Campus direction over performance funding money; emphasis on results and performance; identification of priority goals for higher education; improved higher education performance; increased efficiency; increased external accountability; increased funding; increased response to provincial needs.
16. To what extent do the following represent a disadvantage of performance funding in your province? Budget instability; erosion of institutional autonomy; erosion of institutional diversity; high cost of data collection and analysis; unhealthy competition among campuses.
17. How appropriate or inappropriate are the following indicators for performance funding? Graduation rate; OSAP repayment; employer satisfaction; graduate satisfaction; student satisfaction; rate of employment after six months.
18. What would improve performance funding in Ontario? Fewer indicators; more indicators; institutional choice to match individual mission; decreased funding for performance; increased funding for performance; higher institutional performance targets; lower institutional performance targets.
19. Has performance funding: improved the performance of your institution; increased the accountability of your institution; increased the responsiveness of your institution to provincial needs; increased provincial funding for higher education?
20. What would improve performance funding? Multi-year evaluations? Institution-specific indicators? Mission-specific indicators?
21. What do you think is the future of performance funding in Ontario?
22. What do you think would be an effective alternative to performance funding to ensure accountability?

Appendix D
Draft of Letter to State and Provincial Key Informants

Marjorie McColm
40 Dunvegan Drive
Richmond Hill, Ontario L4C 6K1
Telephone B. (416) 415-2123
Fax (416) 415 –
E-mail: mmccolm@gbrownc.on.ca

Dear XXXX

Thank you for agreeing to be interviewed on ____________. As I explained in my telephone call, I am a doctoral student in the Department of Theory and Policy Studies at OISE/University of Toronto. My dissertation will examine the implementation of performance indicators and performance funding for the Ontario colleges of applied arts and technology. The purpose of this study is to examine whether performance funding has influenced the behaviour of the Ontario colleges of applied arts and technology. The data for the study will be collected through semi-structured interviews with key informants in external jurisdictions, such as yourself, with stakeholders external to the colleges in Ontario, and with personnel in four Ontario colleges. The questions will focus on the implementation of performance funding in your jurisdiction. The interview questions have been pretested. It should take about an hour to complete the questions.
I will be conducting the interview and taking notes. Please note that you are free to end the interview at any point. The research proposal has been through an ethical review. Please be assured that your responses will be kept confidential and will not be reported by your state (province) unless you give your consent. All data collected will be kept in a locked filing cabinet at my home and destroyed five years after the completion of my dissertation.

Attached is a consent form; please complete it and fax a signed copy back to me at 416-415-8888. If you have any questions regarding the project, please don't hesitate to contact me. Thank you again for participating in the study. I believe that it will generate some interesting and important data about KPIs and performance funding. I look forward to meeting you.

Marjorie McColm

Appendix D
Consent Form for Interview with State and Provincial Key Informants

Marjorie McColm
40 Dunvegan Drive
Richmond Hill, Ontario L4C 6K1
Telephone B. (416) 415-2123
Fax (416) 415 –
e-mail: mmccolm@gbrownc.on.ca

March 2001

I ____ agree ____ do not agree to be interviewed regarding Key Performance Indicators and Performance Funding. I understand that the data collected during this interview will be incorporated into the dissertation. I note that I am free to terminate this interview at any time.

Name: ___________________
Signature: ______________________
Date: ___________________________

Appendix E
Draft of Questionnaire for State and Provincial Key Informants

1. What influenced your jurisdiction to implement performance funding?
2. What role did the post-secondary institutions play in the development process?
3. How were your performance indicators developed?
4. Which ones were chosen for performance funding and why?
5. What percentage of the education envelope is allocated to performance funding?
6. How are the performance funds allocated?
7. Do you anticipate any changes in your performance funding process?
8. In your opinion, what has been the effect of performance funding on the colleges?