Practical Online Formative Assessment for First Year Accounting

advertisement

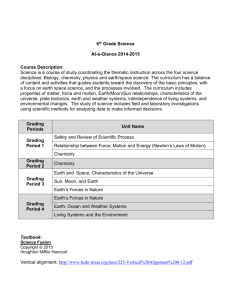

B. Bauslaugh (bruce@lyryx.com) and N. Friess (nathan@lyryx.com)
Lyryx Learning Inc., #205, 301 - 14th Street N.W., Calgary, Alberta, Canada T2N 2A1
C. Laflamme (laflamme@ucalgary.ca)
University of Calgary, Department of Mathematics and Statistics, 2500 University Dr. NW, Calgary, Alberta, Canada T2N 1N4
August 10, 2011
Abstract
Lyryx Learning began as a project in the Department of Mathematics and Statistics at the University of Calgary, Alberta, Canada. The objective was to provide students and instructors with a learning and assessment tool better than the available multiple-choice style systems. Lyryx soon began focusing on Business and Economics courses at the higher education level, eventually offering course assessment to 100,000 students annually. This article describes its efforts toward online formative assessment in first year accounting courses. An appropriate user interface was first designed to allow for the presentation of genuine accounting objects, in particular financial statements. Next, grading algorithms were implemented to analyze student work and provide students with personalized feedback, sometimes going beyond what a human grader normally provides. Finally, performance analysis is presented to students and instructors, enabling both to better assess learning and make necessary adjustments.
1 Introduction
Multiple-choice style (MCS) assessment has now been around for about a century. The simplicity of the MCS structure made it a perfect candidate for early automated implementation, and in the 1970s PLATO may well have been the first large-scale system to do so. Although MCS is still widely used for summative assessment (i.e. for testing mastery of learning outcomes), it is precisely the simplicity of its structure that undermines its value and potential as a learning tool (see for example Scouller [14]).
A grader that merely indicates whether answers are correct or not can provide only limited learning assistance. However, computer-aided assessment (CAA) has made significant progress over the last forty years (see Conole and Warburton [4]), and now offers a much wider range of tools for learning and assessment. In particular, CAA now has a much better chance of being used effectively for formative assessment, generally defined as the use of assessment to provide information to students and instructors over the course of instruction, to be used in improving the learning process (see for example [2]). Boston [3] adds that “Feedback given as part of formative assessment helps learners become aware of any gaps that exist between their desired goal and their current knowledge, understanding, or skill and guides them through actions necessary to obtain the goal” (see also Ramaprasad [12] and Sadler [13]).
One of the best forms of formative assessment is what is traditionally known as “homework”, which is the focus of this project and of the current article, as applied to first year accounting. Because of its procedural aspects, accounting lends itself naturally to CAA. Bangert-Drowns, Kulick and Morgan [1] and Elawar and Corno [6] emphasize that one of the most helpful types of feedback on homework and examinations is specific comments on the mistakes and errors in a student’s work, together with targeted suggestions for improvement. As we will see, this capability is now much closer to reality for CAA, with the further ability to let students try a given task again and again, each time receiving feedback and personal guidance on their work. This provides students with a rich learning environment: what matters is not simply getting the right answer, but the opportunity to succeed while focusing their attention on the task at hand.
2 Overview of the Formative Assessment Implementation
Lyryx assessment was initially based on the pedagogical premise that assessment methods can be categorized as either formative or summative. Formative assessment methods are primarily concerned with providing feedback to students to assist their learning of the subject material. Typically this takes the form of homework assignments, lab work, and similar tasks, in which the student is given extensive feedback on the errors they made and how to correct them. Summative assessment is primarily concerned with gauging the extent to which a student has mastered the subject material. This usually takes the form of quizzes or exams, and detailed feedback may not be provided. MCS assessment performs reasonably well as a summative assessment tool, but fails in the formative role. Our goal was to develop and implement a formative CAA. Our system uses four main features to accomplish this goal:
1. Randomized Questions
2. The User Interface
3. The Grading Algorithms
4. Feedback Information to Students and Instructors
Randomization of the question content allows students to attempt the same question as many times as they require to master a concept, without allowing mere memorization of the answer to a specific question. Each time a question in our system is accessed, its specifics are regenerated randomly, providing an effectively infinite pool of similar questions. In this respect CAA can be superior to traditional homework, where a student has only one (or very few) attempts and has no opportunity to use the instructor’s feedback by attempting the assignment again. With our system the student can practice the same question over and over, each time receiving informative feedback, until they succeed.
Furthermore, only the student’s best grade over all attempts at a question is recorded, providing a low-threat environment and motivating the student to keep practicing until the material is mastered.
The user interface (UI) is necessary so that students can be presented with objects appropriate for the intended field of study, and it should be as intuitive as possible to avoid placing any barriers to learning. Students need to focus on learning the subject material rather than the interface of a piece of software. The UI also needs to be flexible enough to avoid inadvertently giving the student extra information; a restrictive UI can make, on the student’s behalf, decisions that the student should be making from their own knowledge. In the case of accounting, students must learn how to create financial statements, and the experience should be as similar as possible to creating a financial statement in a spreadsheet, or free-form on paper.
Grading homework is generally viewed as one of the most onerous duties of an instructor. Effective grading for formative assessment requires skills obtained through years of teaching experience: the ability to understand and anticipate student difficulties, and to provide helpful and fair comments on their work along with suggestions for improvement. Replicating these skills to any extent in CAA is a significant undertaking. The UI plays a role in helping the computer understand the actual student work, by imposing some restrictions and structure on the student’s input. Then the grading knowledge of an experienced instructor must be harnessed and implemented as a series of computer instructions. The correct answer is typically easy enough to recognize, although even here rounding errors in calculations need to be handled carefully. When the answer is incorrect, however, an experienced instructor can often recognize where the student has gone astray and show them how to correct their error.
This ability is reproduced in our system by comparing incorrect student responses to a pool of ‘common mistakes’ for a given problem. If such a common mistake is recognized, appropriate feedback is given. Clearly, quantitative subjects, in particular procedural ones, lend themselves much better to this implementation. As experienced instructors know, there is a subtle and difficult balance to be struck between helping students with feedback and suggestions and spoon-feeding them. Restraint is needed: rather than handing students recipes for solving the problems at hand, the feedback should provide enough guidance to assist them and avoid excessive frustration. Moreover, the content of the feedback, and grading procedures in general, should be closely aligned with the content learned by the students in class. For example, notation and procedures, as well as difficulty level, should match those used by the student’s textbook and instructor as closely as possible, so that all parts of the learning process, whether lessons, assessment, or any other component, form as cohesive a learning experience as possible.
Finally, computers can store vast amounts of information, and this can provide valuable feedback to students and instructors, completing the framework for effective formative assessment. In this context privacy is always a concern: in our opinion, details of when and how a student works are personal information that should not be tracked. However, aggregate information such as the average number of attempts at a question taken by students in a class, the average grade per attempt, and similar statistics can be extremely valuable to an instructor for determining where the class is struggling. This can be used to focus class time more effectively.
For individual students, the system can track their performance on individual topics and suggest where their efforts would be best spent during their study time.
3 Randomized Questions
It is well known that the key to learning any skill is practice. By far the most effective way for students to master a subject such as accounting is to engage actively with the material: actually doing accounting and receiving feedback on their performance. Unfortunately this requires a significant amount of resources per student, and a typical instructor with several large classes can devote only a limited amount of effort to any individual student. As a result, each topic in a course is generally tested by only a single assignment or quiz, with limited feedback provided to each student. Students often give the feedback only a cursory glance, since they will likely not be tested on the same material again until the final exam.
Ideally, students should be provided with immediate feedback on their work and have the opportunity to apply the new information as quickly as possible. In this respect an automated system is ideal: the feedback is instant, and the student can immediately attempt the question again to verify that they have understood the feedback. However, this benefit is undermined if the student simply attempts an identical question. For the feedback to be useful it should contain the correct answer, but there is no value in the student simply copying that answer into an identical copy of the question. What is required is a similar question, testing the same content, but different enough that the student must engage with it using the concepts explained in the feedback. A key component of the Lyryx system is therefore randomization of the questions. Each question in the test bank is really a question type that can be instantiated in an essentially unlimited number of specific randomized instances.
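As an illustration only (this is not Lyryx’s implementation), a journalization question type can be thought of as a seeded generator: pick a random subset of transaction templates, then fill in realistic random amounts. The minimal Python sketch below assumes a tiny, hypothetical template bank (`TEMPLATES`, with made-up wording, accounts, and amount ranges), a hypothetical function name `instantiate_journal_question`, and single-debit, single-credit transactions only.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    description: str
    debit_account: str
    credit_account: str
    amount: int


# Hypothetical template bank: (wording, debit account, credit account, (min, max) amount).
# A real bank would be far larger and would include multi-account transactions.
TEMPLATES = [
    ("Purchased supplies on account, ${amount:,}.", "Supplies", "Accounts Payable", (200, 2_000)),
    ("Performed services for cash, ${amount:,}.", "Cash", "Service Revenue", (500, 10_000)),
    ("Paid rent for the month, ${amount:,}.", "Rent Expense", "Cash", (800, 4_000)),
    ("Owner invested ${amount:,} cash in the business.", "Cash", "Owner's Capital", (5_000, 50_000)),
]


def instantiate_journal_question(seed: int, n_transactions: int = 3) -> list[Transaction]:
    """Generate one concrete instance of a (hypothetical) journalization question type."""
    rng = random.Random(seed)  # same seed -> same instance; new seed -> new instance
    chosen = rng.sample(TEMPLATES, n_transactions)  # random subset, no repeats
    instance = []
    for wording, debit, credit, (low, high) in chosen:
        # Stepping by 50 from a round lower bound keeps amounts looking realistic.
        amount = rng.randrange(low, high + 1, 50)
        instance.append(Transaction(wording.format(amount=amount), debit, credit, amount))
    return instance


if __name__ == "__main__":
    for t in instantiate_journal_question(seed=2011):
        print(f"{t.description:<50} Dr {t.debit_account} / Cr {t.credit_account}")
```

Seeding is a design choice made here so that a given instance can be regenerated exactly (for example, to redisplay it next to its feedback); the paper does not say how instances are stored.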
The details of how this is done depend on the question being implemented. In accounting, for example, a journalization question will typically have a large pool of transaction templates from which a subset is selected, and then realistic random values are generated for the amounts within each transaction.
4 The User Interface
One of the key parts of the Lyryx assessment engine is the user interface. How a question is presented to the student is closely tied to the level of grading that can be applied to the student’s answer and to the detail of feedback that can be provided. If an instructor wants to provide a more granular response than “right” or “wrong”, then the student must be allowed to explore the course material in a rich user interface. In the field of accounting, two of the most important and frequently used user interface elements are journals and financial statements. Both feature prominently in the Lyryx system.
Early on in learning financial accounting, students typically encounter the concept of journalizing transactions. A student is given a list or narrative describing transactions performed by a fictitious company and is asked to record these transactions in a journal using double-entry bookkeeping. Each journal entry contains the date on which the transaction is performed, a list of two or more accounts to which the transaction applies (each as a debit or a credit), and the dollar amounts debited or credited to each of those accounts. Finally, there can be a comment or explanation of the transaction, which may include a short English statement, a related invoice number, and so on. The Lyryx journal entry interface is presented in a fixed format similar to that of a typical journal. A blank journal entry is shown in Figure 1.
Figure 1: A blank journal entry
When a student clicks on the date, debit, or credit field of a journal entry, a text box allows the student to enter their value for that field. Clicking on the account field brings up a dialog that allows the student to choose from a chart of accounts containing a full selection of accounts for the type of company the question refers to. This gives the student the freedom to make mistakes and learn which accounts are appropriate for the transaction presented in the question. Formulas can be entered for numerical amounts (credits and debits), and these are checked immediately for correct syntax. Similarly, dates are parsed using typical day/month formats. For each journal entry, two rows are displayed by default, as every entry requires at least two accounts. For more complex entries, the student needs to add more rows to the entry using the buttons under the date field. This avoids giving the student extra information (namely, how many accounts the transaction requires). When a student is presented with multiple transactions to be journalized, they can enter the entries into the journal in any order, and can list the accounts within each entry in any order. A typical completed journal entry is shown in Figure 2.
Figure 2: A completed journal entry
Another important user interface object in Lyryx accounting products is the financial statement. Technically, a financial statement is very similar to a journal: both are variants of a spreadsheet, with fields for accounts and numerical amounts. Financial statements, however, are much more free-form than journals, which have a stricter layout. In the Lyryx system they start as an empty list of rows with no formatting. An empty income statement is shown in Figure 3.
Figure 3: A blank income statement
The student can click on the large column to bring up a chart of accounts, similar to the one used for journals but also including a list of section headings that can be used in the statement. The last, narrow columns hold the numbers for each row: account balances, totals, and so on. The student can add and delete rows using the down-arrow and X buttons. The left and right arrows change the indentation of each row, helping the student format the various sections and sub-sections of the financial statement (this is purely for display and is not considered in the grading process). For financial statements, it is left to the student to know which sections are appropriate for the kind of statement requested, such as Assets, Liabilities, and Equity for a balance sheet, as well as how these should be organized into sub-sections like Current Liabilities and Long-Term Liabilities. The student can enter the various sections in any order, choose any account from the general list of accounts, and place it in the section they believe is appropriate. The student also needs to total each section and choose the appropriate heading for that line, such as Total Liabilities. Here the chart of accounts dialog includes distractors: headings that may be used in one kind of statement but are not appropriate for the current question. Figure 4 shows a completed income statement in the Lyryx system.
Figure 4: A completed income statement
5 The Grading Algorithms
The primary goal for the Lyryx assessment engine is to grade all student work as closely as possible to the way a human instructor would grade it, or even better, by drawing on the strengths of computer algorithms.
When a student makes a mistake, the computer attempts to determine where the error occurred, and uses the instructor knowledge encoded in the grading algorithm to identify the specific error committed and provide targeted feedback. This helps the student understand where they went wrong and how they can improve their grade on a future attempt.
A simple example from financial statements shows the level of grading desired. If a student makes an error in one of the account balances in the Liabilities section of a financial statement, then the system should identify the line where the error was made. If, say, a common mistake is to enter a negative number instead of a positive one, the system can note this in the feedback presented to the student. Furthermore, if the student sums the Liabilities section correctly, based on the incorrect number from the earlier line, then the system should not penalize them again: marks should be deducted only once for the initial mistake, and not for totals or other values computed consistently with it. All of these are relatively simple details that a human can manage easily, but they require considerable sophistication in an automated system.
When grading journal entries, there are several types of mistakes a student can make. For an individual transaction, a student can select an incorrect account, or enter an incorrect dollar amount, date, or comment. There may also be more than one correct way to journalize the same transaction, or set of transactions. For example, a purchase transaction may be entered either as one entry involving four accounts or as two entries involving two accounts each. The system should accept all correct methods of journalizing the transactions, while rejecting any incorrect variants.
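To make the “several correct variants, any order” requirement concrete, here is a minimal Python sketch for grading a single entry. It assumes a hypothetical representation (each line is an `(account, side, amount)` tuple), one mark per line, and hypothetical names `fit`, `grade_entry`, and `ACCEPTED`; Lyryx’s actual mark scheme is not described in the paper.

```python
from collections import Counter

# A journal line is (account, side, amount), where side is "Dr" or "Cr".
Line = tuple[str, str, int]


def fit(variant: list[Line], entered: list[Line]) -> float:
    """Fraction of one correct variant reproduced by the student's entry.

    Counter intersection compares the two entries as multisets, so the order in
    which the student listed the accounts has no effect. Lines beyond the number
    the variant needs are subtracted, so padding an entry with guesses does not pay.
    """
    matched = sum((Counter(variant) & Counter(entered)).values())
    extra = max(0, len(entered) - len(variant))
    return max(0.0, (matched - extra) / len(variant))


def grade_entry(variants: list[list[Line]], entered: list[Line]) -> float:
    """Score against every accepted way of journalizing the transaction; keep the best."""
    return max(fit(variant, entered) for variant in variants)


# Hypothetical transaction: paid $1,200 cash for a one-year insurance policy.
# Recording it as an asset or as an expense (adjusted later) are both accepted here.
ACCEPTED = [
    [("Prepaid Insurance", "Dr", 1200), ("Cash", "Cr", 1200)],
    [("Insurance Expense", "Dr", 1200), ("Cash", "Cr", 1200)],
]

if __name__ == "__main__":
    # Credit listed first, expense method used: still full marks.
    print(grade_entry(ACCEPTED, [("Cash", "Cr", 1200), ("Insurance Expense", "Dr", 1200)]))  # 1.0
```

Taking the maximum over variants is one simple way to “accept all correct methods”; an entry that matches no variant well still earns partial credit for the lines it gets right.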
The grading process should not depend on the order in which the transactions are entered in the journal, or the order in which the accounts are listed within an entry, although there are often conventions for these which the system should point out to the student.
As mentioned earlier, correct solutions are relatively straightforward to grade. The difficulty arises when the input is incorrect, because it is often unclear (especially to an automated system) what the student intended to do, and hence it is difficult to provide meaningful feedback. For example, if two transactions are journalized incorrectly, it may be unclear which entry was intended for which transaction.
The Lyryx grading algorithm for journals takes as its input the list of transactions from the question and the journal that the student entered via the user interface. The list of transactions can include information on alternative correct ways to journalize the transactions, individually or in combination. The algorithm considers every possible matching of the transactions to the student’s journal entries and selects the best one, defined as the matching that awards the student the highest number of marks. In other words, the student’s answer is always interpreted in the way that gives them the most marks. We opted for this metric in acknowledgment of the fact that any automated system may misinterpret the student’s input, and it would be particularly frustrating for a student to receive a lower grade because of an error in the grading process. Clearly, for this method to be effective, the assignment of marks must accurately reflect how good a fit an entry is for a transaction.
Financial statements are similar to journals in that the algorithm takes as input a correct financial statement and the student’s financial statement.
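Returning briefly to journals, the best-matching rule described above can be sketched as a brute-force search over every assignment of transactions to the student’s entries. This Python sketch is illustrative only: `best_matching`, the `overlap` score (one mark per matching line), and the sample transactions are assumptions, and it ignores the combined multi-transaction variants the paper mentions.

```python
from collections import Counter
from itertools import permutations
from typing import Callable, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")  # an expected transaction
E = TypeVar("E")  # one journal entry typed by the student


def best_matching(
    transactions: Sequence[T],
    entries: Sequence[E],
    score: Callable[[T, E], float],
) -> Tuple[float, Tuple[int, ...]]:
    """Try every assignment of transactions to entries and keep the highest total.

    Returns (total marks, assignment), where assignment[k] is the index of the
    entry read as the answer to transaction k; an index >= len(entries) means the
    transaction was left unanswered. Ties keep the first assignment found. The
    number of assignments grows factorially, which is why brute force is costly.
    """
    # Empty slots let a transaction go unmatched when the student skipped it.
    padded: list[Optional[E]] = list(entries) + [None] * max(0, len(transactions) - len(entries))
    best_total, best_assignment = float("-inf"), ()
    for assignment in permutations(range(len(padded)), len(transactions)):
        total = sum(
            score(t, padded[i]) if padded[i] is not None else 0.0
            for t, i in zip(transactions, assignment)
        )
        if total > best_total:
            best_total, best_assignment = total, assignment
    return best_total, best_assignment


def overlap(expected, entered) -> float:
    """Hypothetical score: one mark per expected (account, side, amount) line present."""
    return float(sum((Counter(expected) & Counter(entered)).values()))


T1 = [("Cash", "Dr", 500), ("Service Revenue", "Cr", 500)]
T2 = [("Rent Expense", "Dr", 900), ("Cash", "Cr", 900)]

if __name__ == "__main__":
    # The student journalized both transactions, in the opposite order.
    print(best_matching([T1, T2], [T2[::-1], T1], overlap))  # (4.0, (1, 0))
```

As the text stresses, the quality of the interpretation depends entirely on the score function: a score that does not reflect how well an entry fits a transaction will steer the search to a poor reading of the student’s work.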
However, the basic unit being marked is not a journal entry but a section of the financial statement. Each section of the student’s answer (such as Assets or Liabilities) is decomposed into its smallest possible sub-sections. The sub-sections are then combined into higher-level sections, building a tree of all possible ways to combine the sections and sub-sections of the student’s answer. The algorithm then runs through each possible interpretation, marks it against the correct answer, and chooses the interpretation that awards the student the best possible marks. Again, this may not be the interpretation the student intended. However, this approach tolerates not only a few misplaced or incorrect accounts in various sections, but also lets the marker recognize a misplaced sub-section within a larger section, as well as the simple cases of accounts or sections listed in a different order.
To illustrate how the grading algorithm for financial statements works and the level of feedback provided, we give an example of a correct income statement, a student answer with some common kinds of mistakes, and the feedback provided by the marker. The correct answer and student answer are shown in Figure 4 above: these are the inputs to the grading algorithm. Figure 5 shows the feedback for a correct income statement.
Your solution was:
Grading: Congratulations. You have completed this income statement correctly.
Figure 5: Typical feedback for a correct income statement
Figure 6 shows the feedback for a student answer containing errors; note in particular how the feedback deals with errors that are carried into subsequent totals.
These algorithms are based on brute-force attempts to find the best interpretation, defined as the one giving the student the best possible grade. Currently, they are effective enough for students to receive a fair grade and useful feedback.
In practice we have found that students and instructors are very satisfied with the feedback generated by these algorithms, and that the interpretations made by the algorithms closely match the students’ intentions. However, the brute-force algorithms are not perfect: they can be very resource intensive and, as mentioned previously, the interpretation that the algorithm uses may not align with the student’s mental model of their answer. There is room for more research into other algorithms for interpreting students’ answers.
Your solution was:
Grading:
Revenues (Lines 1-2)
  You have completed this part correctly.
Expenses (Lines 3-8)
  Line 4: ‘Advertising expense’ should be $5,700, but you have not entered this. This will cost you 1 mark.
  Line 8: Total expenses should be $183,700, but you have not entered this. However, your answer is consistent with the accounts you listed in this section so you will not lose any marks.
Operating income (Line 9)
  Line 9: Operating income should be $66,300, but you have not entered this. However, your answer is consistent with the accounts you listed in this section so you will not lose any marks.
Other gains and losses (Lines 10-11)
  Line 11: ‘Loss on sale of investment’ should be $5,500, but you have not entered this. This will cost you 1 mark.
Income before income taxes (Line 12)
  Line 12: Income before income taxes should be $60,800, but you have not entered this. However, your answer is consistent with the accounts you listed in this section so you will not lose any marks.
Income tax expense (Line 13)
  You have completed this part correctly.
Net income (Line 14)
  Line 14: Net income should be $56,200, but you have not entered this. However, your answer is consistent with the accounts you listed in this section so you will not lose any marks.
Figure 6: Typical feedback for grading consistent values in an income statement
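The feedback in Figure 6 follows two rules stated earlier: a wrong line costs one mark, and a total that is consistent with the student’s own (incorrect) lines costs nothing, so each mistake is charged only once. The Python sketch below shows those rules for a single section, together with a sign-flip check standing in for the kind of ‘common mistake’ the system compares against. The names `grade_section`, `close`, and `TOLERANCE`, the 50-cent rounding tolerance, and one mark per line are all assumptions for illustration.

```python
from typing import List, Optional, Tuple

TOLERANCE = 0.50  # hypothetical: differences of up to 50 cents count as rounding

# A detail row: (account label, correct amount, amount entered, or None if left blank).
Row = Tuple[str, float, Optional[float]]


def close(entered: Optional[float], target: float) -> bool:
    """True if a value was entered and it is within rounding tolerance of target."""
    return entered is not None and abs(entered - target) <= TOLERANCE


def grade_section(
    rows: List[Row], total_label: str, correct_total: float, entered_total: Optional[float]
) -> Tuple[int, List[str]]:
    """Return (marks lost, feedback lines) for one section, one mark per line."""
    lost, feedback = 0, []
    for label, correct, entered in rows:
        if close(entered, correct):
            continue
        lost += 1
        if close(entered, -correct):  # a 'common mistake': right amount, wrong sign
            feedback.append(f"'{label}' should be ${correct:,.0f}; check the sign. This will cost you 1 mark.")
        else:
            feedback.append(f"'{label}' should be ${correct:,.0f}. This will cost you 1 mark.")

    # The total is judged against the student's own lines, so a line error is charged only once.
    own_sum = sum(entered for _, _, entered in rows if entered is not None)
    if not close(entered_total, correct_total):
        if close(entered_total, own_sum):
            feedback.append(
                f"{total_label} should be ${correct_total:,.0f}, but your answer is consistent "
                "with the accounts you listed, so you will not lose any marks."
            )
        else:
            lost += 1
            feedback.append(f"{total_label} should be ${correct_total:,.0f}. This will cost you 1 mark.")
    return lost, feedback


if __name__ == "__main__":
    # Hypothetical expenses section: one wrong line and a total consistent with it.
    expenses = [
        ("Salaries expense", 40_000, 40_000),
        ("Advertising expense", 5_000, 6_000),
        ("Rent expense", 12_000, 12_000),
    ]
    lost, notes = grade_section(expenses, "Total expenses", 57_000, 58_000)
    print(lost)  # 1
    print("\n".join(notes))
```

A full statement grader would apply the same idea across sections, so that, as in Figure 6, operating income and net income are also checked against the student’s own section totals.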
6 Feedback Information to Students and Instructors
An important part of formative assessment is the opportunity to reflect on the learning process and make adjustments as required by individual learners. This is true for both students and instructors. It is straightforward, of course, to record student grades and provide instructors with a gradebook for their class, giving both students and instructors a general overview of performance in the class.
For the student, receiving a lower grade than expected on an assignment is typically a wake-up call for action, but where to start? Much of the information the system stores can help point the student in the right direction. For example, the system stores grades for each question on every assignment, and each question corresponds to particular concepts in the subject material. Thus the system can report to the student not only on their overall performance, but on how they are performing on each concept covered in their assignments, a much more granular view. The student may learn that they have performed better on some aspects of the course than others, and can then focus on improving the weaker areas. Further, the system can suggest practice questions targeting exactly these areas. It is also important that this environment is for the student alone: it is a low-threat tool, not visible even to the instructor. No grades are involved, simply the opportunity for the student to improve. A simple green/yellow/red Performance Meter informs the student of their performance on each concept in the subject; the more practice and success, the longer the green bar.
For the instructor, the same per-question measure of student performance is averaged over the entire class and presented in a graph. Additional data is provided, such as the average number of attempts on each question and the average grade over all attempts by the students.
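The per-question class statistics described above can be computed from a simple log of graded attempts. A minimal Python sketch, assuming a hypothetical record format `(student, question, grade)` with grades between 0 and 1 and a hypothetical function name `class_summary`; “average attempts” here means the average over students who attempted the question.

```python
from collections import defaultdict
from statistics import mean


def class_summary(attempts):
    """Aggregate graded attempts into per-question class statistics.

    attempts: iterable of (student_id, question_id, grade) with grade in [0, 1].
    The records carry no timestamps, so nothing about when a student worked is used.
    """
    grades = defaultdict(lambda: defaultdict(list))  # question -> student -> grades
    for student, question, grade in attempts:
        grades[question][student].append(grade)

    summary = {}
    for question, by_student in grades.items():
        every_attempt = [g for gs in by_student.values() for g in gs]
        summary[question] = {
            "avg_attempts": mean(len(gs) for gs in by_student.values()),
            "avg_grade_per_attempt": mean(every_attempt),
            # Only each student's best grade is recorded as their mark.
            "avg_best_grade": mean(max(gs) for gs in by_student.values()),
        }
    return summary


if __name__ == "__main__":
    log = [("ann", "Q1", 0.4), ("ann", "Q1", 1.0), ("bob", "Q1", 0.8), ("ann", "Q2", 1.0)]
    for question, stats in class_summary(log).items():
        print(question, stats)
```

A question with many attempts per student and a low average grade per attempt, but a high average best grade, suggests material students eventually master only after considerable struggle, which is the kind of signal an instructor could use to focus class time.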
This data provides instructors with an overall measure of student mastery of the course material, so that they can address any perceived shortcomings and better assist students with their needs.
There are other variables worth considering. One is how long students typically take to complete an assignment, which could in theory indicate the difficulty of the material. However, that data is difficult to record and gauge accurately: students can work at any time and anywhere they wish, so they may start an assignment and then take a long break without the system being aware. Even a long period of inactivity cannot be interpreted with certainty.
7 Limitations and Future Work
Currently Lyryx assessment focuses on subjects with a significant amount of quantitative material, such as accounting, finance, economics, statistics, and mathematics. These subject areas were chosen because they lend themselves well to questions involving multiple steps of student work, which can then be analyzed by a grading algorithm. Also, answers to quantitative questions can be classified as “right” or “wrong” more easily than answers to verbal questions. In verbal or qualitative subject areas, Lyryx is still primarily limited to multiple-choice or simple “fill in the blank” style answers.
Lyryx is actively researching other methodologies for grading qualitative questions. One such approach is a peer-to-peer style grading system, in which students write short paragraphs and grade each other’s work, removing the need for a computer to analyze and grade these answers. More research is needed to harness computing power effectively for qualitative grading. Even in the quantitative fields, algorithms much better than the brute-force ones Lyryx currently uses may be applicable.
For example, various classification algorithms used in artificial intelligence, such as naive Bayes or perceptron classifiers, may be applicable to grading student work. Such algorithms are currently used to classify email spam (see Graham [7]), where a similar binary verdict is reached from the inputs (spam versus not spam or, in our case, correct versus incorrect answers).
Finally, although we have demonstrated two algorithms that mark particular kinds of questions used in accounting courses, more research is needed into user interfaces and grading algorithms for other kinds of quantitative questions. For example, Lyryx has a user interface that allows a student to draw a graph, along with a corresponding grading algorithm. Increasing the pool of possible questions allows instructors to assess students’ knowledge of the material in more depth.
References
[1] R.L. Bangert-Drowns, J.A. Kulick, and M.T. Morgan, The instructional effect of feedback in test-like events, Review of Educational Research, 61 no. 2 (1991), pp. 213-238.
[2] P. Black and D. Wiliam, ‘In praise of educational research’: formative assessment, British Educational Research Journal, 29 no. 5 (2003), pp. 623-637.
[3] C. Boston, The concept of formative assessment, Practical Assessment, Research & Evaluation (http://pareonline.net), 8 no. 9 (2002).
[4] G. Conole and B. Warburton, A review of computer-assisted assessment, ALT-J, Research in Learning Technology, 13 no. 1 (2005), pp. 17-31.
[5] T. Crooks, The validity of formative assessments, British Educational Research Association Annual Conference, University of Leeds, September 2001. Available at http://www.leeds.ac.uk/educol/documents/00001862.htm
[6] M.C. Elawar and L. Corno, A factorial experiment in teachers’ written feedback on student homework: Changing teacher behaviour a little rather than a lot, Journal of Educational Psychology, 77 no. 2 (1985), pp. 162-173.
[7] P. Graham, Better Bayesian filtering, 2003 Spam Conference, January 2003. Available at http://www.paulgraham.com/better.html
[8] K. Howie and N. Sclater, User requirements of the ultimate online assessment engine, Computers and Education, 40 no. 3 (2003), pp. 285-306.
[9] T. Jensen, Enhancing the critical thinking skills of first year business students, presentation at Kwantlen Polytechnic University, Vancouver, 2008.
[10] Report for JISC, Roadmap for e-assessment, June 2006. Available at http://www.jiscinfonet.ac.uk/InfoKits/effective-use-ofVLEs/resources/roadmap-for-eassessment
[11] D. Nicol and C. Milligan, Rethinking technology-supported assessment in terms of the seven principles of good feedback practice. In C. Bryan and K. Clegg (Eds), Innovative Assessment in Higher Education, Taylor and Francis Group Ltd, London (2006).
[12] A. Ramaprasad, On the definition of feedback, Behavioral Science, 28 no. 1 (1983), pp. 4-13.
[13] D.R. Sadler, Formative assessment and the design of instructional systems, Instructional Science, 18 no. 2 (1989), pp. 119-144.
[14] K. Scouller, The influence of assessment method on students’ learning approaches: multiple choice question examination versus assignment essay, Higher Education, 35 (1998), pp. 453-472.