mis.doc

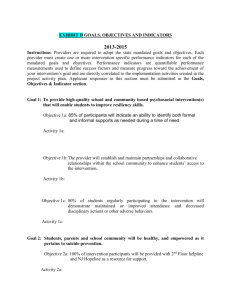

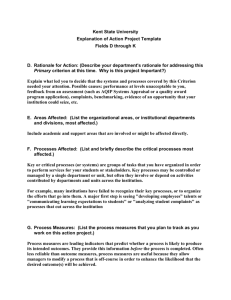
