Introduction - PPKE-ITK


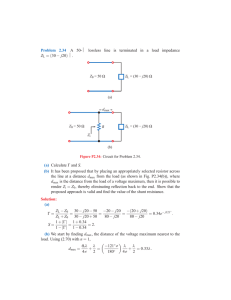

7. APPLICATION OF THE HNN AS A PATTERN RECOGNIZER AND ERROR CORRECTING DECODER

Introduction

The HNN as an associative memory

Steps:

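1. Learning: Hebb rule $W = \frac{1}{N}\sum_{\alpha=1}^{M} s_\alpha s_\alpha^T$; the bias vector $b$ is a null vector in this case, where $s_\alpha \in \{-1,1\}^N$, $\alpha = 1,\dots,M$ are the elements of the learning set.

2. Initialization: the network is started from the input pattern, $y(0) = x$, and the outputs are updated as $y_i(k+1) = \operatorname{sgn}\left(\sum_{j=1}^{N} W_{ij} y_j(k)\right)$, where $y_i(k)$ is the output of the $i$th neuron at time instant $k$.

3. Iteration until the criterion of convergence is reached: $y_i(k) = \operatorname{sgn}\left(\sum_{j=1}^{N} W_{ij} y_j(k)\right)$ for all $i$.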
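The three steps can be sketched in a few lines of plain Python. This is a minimal illustration under our own assumptions, not code from the lecture notes: $\operatorname{sgn}(0)$ is taken as $+1$, and the neurons are updated one at a time (asynchronously) instead of all at once as in step 2, because with the symmetric Hebb matrix the sequential update is guaranteed to reach a steady state, while the synchronous update may end in a 2-cycle. The function names `hebb_weights` and `recall` are hypothetical.

```python
def sgn(v):
    # sign function; sgn(0) = +1 is an assumed tie-breaking convention
    return 1 if v >= 0 else -1


def hebb_weights(patterns):
    """Hebb rule: W = (1/N) * sum_alpha s_alpha s_alpha^T, bias b = 0."""
    n = len(patterns[0])
    return [[sum(s[i] * s[j] for s in patterns) / n for j in range(n)]
            for i in range(n)]


def recall(w, x, max_sweeps=100):
    """Start from y(0) = x and update neurons one by one until no output changes."""
    y = list(x)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(y)):
            # y_i <- sgn(sum_j W_ij y_j)
            new = sgn(sum(wij * yj for wij, yj in zip(w[i], y)))
            if new != y[i]:
                y[i] = new
                changed = True
        if not changed:
            # convergence criterion: y_i = sgn(sum_j W_ij y_j) for every i
            break
    return y


# Two orthogonal 8-bit patterns; the first one is probed with its first bit flipped.
p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = hebb_weights([p1, p2])
noisy = [-1] + p1[1:]
print(recall(w, noisy) == p1)  # True
```

Orthogonal patterns are the easy case: each stored pattern is exactly a steady state, and a single flipped bit is pulled back in the first sweep.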

Applications:

Pattern recognition

Error correcting decoder

Automatic surveillance

Pattern recognizer

Many problems can be reduced to pattern recognition. A universal model is shown below:

The Hopfield network can be used for image recognition if it is used as an associative memory in which the stored images are the patterns to be recognized. The only problem is that the size of the network increases drastically with the number of images.

This is why it is more reasonable to investigate the problem of character recognition.

The HNN for character recognition

The problem is how to recover the originally sent samples from the noisy ones. The question is how to construct the network so that it always converges to one of the stored patterns, and that this pattern is the right one. The following figure shows an example where pattern recognition works well:

Correct recognition: the Hopfield network associates the noisy inputs with the right characters. a) Output of the HNN, b) the character to be recognized, c) the character corrupted by noise, d) the noise.


The next figure shows how it fails to recognize the characters and converges to wrong steady states:

Examples of bad character recognition: a) Output of the HNN, b) the character to be recognized, c) the character corrupted by noise, d) the noise.

Although the first example shows the HNN working as a good character recognizer, we must consider that in general the probability of recognizing the right number is very low. This can be explained by the very large correlation between the characters. In the first case the noise power was taken to be 3 dB. Number 1 was almost always recognized correctly (error probability about $10^{-3}$). For numbers 0, 2 and 3 the match probability was only 0.1, which is extremely low, but can be explained by the high similarity of these numbers.
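The effect of correlation can be seen on a toy example. The sketch below is plain Python with made-up 8-bit patterns standing in for the characters (the original character bitmaps are not available here). It computes the normalized overlaps $m_{\alpha\beta} = \frac{1}{N} s_\alpha^T s_\beta$ and lists the bits where a stored pattern is not even a steady state of the Hebb network, i.e. where $s_{i\alpha} \neq \operatorname{sgn}\left(\sum_j W_{ij} s_{j\alpha}\right)$. The helper names are hypothetical.

```python
def overlaps(patterns):
    """Normalized overlaps m_ab = (1/N) * s_a . s_b between stored patterns."""
    n = len(patterns[0])
    return [[sum(a * b for a, b in zip(sa, sb)) / n for sb in patterns]
            for sa in patterns]


def unstable_bits(patterns, k):
    """Bits i of stored pattern k with s_ik != sgn(sum_j W_ij s_jk), W from the Hebb rule."""
    n = len(patterns[0])
    s = patterns[k]
    bad = []
    for i in range(n):
        # local field h_i = sum_j W_ij s_j = (1/N) * sum_alpha s_i,alpha * (s_alpha . s)
        h = sum(p[i] * sum(pj * sj for pj, sj in zip(p, s)) for p in patterns) / n
        if (1 if h >= 0 else -1) != s[i]:
            bad.append(i)
    return bad


# Three strongly correlated toy patterns: similar[1] is not a steady state at bit 6.
similar = [[1] * 8, [1] * 6 + [-1, -1], [1] * 7 + [-1]]
print(overlaps(similar))          # [[1.0, 0.5, 0.75], [0.5, 1.0, 0.75], [0.75, 0.75, 1.0]]
print(unstable_bits(similar, 1))  # [6]
```

For mutually orthogonal patterns the cross terms vanish and `unstable_bits` returns an empty list; the larger the overlaps, the more the other patterns' contributions can outweigh the pattern's own field.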

It has been seen that, for this kind of recognition problem, the HNN can get stuck in unwanted steady states. The question is how to solve this problem. The idea of coding the stored patterns [14] can give better results. The point is to construct a transformation that increases the attraction areas and decreases the probability of mismatch, so that there are only as many steady states as there are characters to be recognized.

The increase of the attraction areas

The network and the transformations

If we want to apply the results of information theory, it is necessary to assure the ‘quasi-orthogonality’ of the patterns. This means that the bits {1} and {-1} have to appear with the same probability in the patterns ($P(s_{i\alpha}=1) = P(s_{i\alpha}=-1) = 0.5$), so $s_\alpha$ must be chosen freely with a uniform distribution. In this case the capacity of the associative memory (Informational Theoretical Capacity) can be determined as

$$M = \frac{N}{2\log N}$$

where $N$ is the dimension of the patterns.
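To get a feeling for the numbers, the capacity formula can be evaluated directly. A minimal sketch, assuming that $\log$ is the natural logarithm (the usual form of this result); `min_neurons` is a hypothetical helper that searches for the smallest $N$ whose capacity reaches a required number of patterns $M$, which illustrates how the network size grows with the number of stored patterns.

```python
import math


def hopfield_capacity(n):
    """Informational theoretical capacity M = N / (2 ln N) for N-dimensional random patterns."""
    return n / (2 * math.log(n))


def min_neurons(m):
    """Smallest N (N >= 2) with N / (2 ln N) >= m, found by linear search."""
    n = 2
    while hopfield_capacity(n) < m:
        n += 1
    return n


print(round(hopfield_capacity(100), 2))  # 10.86
print(min_neurons(10))                   # 90
```

The linear search is enough here because $N/(2\ln N)$ is increasing for $N \ge 3$.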

In practice the memory items $S = \{s_\beta, \beta = 1,\dots,M\}$ are not independent, so we have to encode the original set $S$ into a set $S'$ that satisfies quasi-orthogonality. The coder $F: S \to S'$ must be topologically invariant and of small complexity.


The modified pattern recognition system implemented by HNN

The coder

The following coder was proposed for this problem:

1. Define $s_1, s_2, \dots, s_M$ as $M$ pattern samples, where $s_{k,m}$ is the $k$th element of pattern sample $s_m$ and $s_\alpha \in \{-1,1\}^N$, $1 \le \alpha \le M$.

2. Generate $M$ binary vectors $s'_\alpha \in \{-1,1\}^N$, $1 \le \alpha \le M$, whose elements are uniformly distributed random variables.

3. Compute the coder matrix elements by the following equation:

$$M_{ij} = \frac{1}{N}\sum_{\alpha=1}^{M} s'_{i\alpha} s_{j\alpha}$$

If we use this coder, we should determine the neural network’s optimal weights (matrix $W$) from the pattern set $S' = \{s'_\beta, \beta = 1,\dots,M\}$. According to information-theoretic analysis, this gives a better capacity and better stability for the associative memory than calculating with the original set $S = \{s_\beta, \beta = 1,\dots,M\}$. The output of the coder will be $x' = F(x)$, where the $i$th element of the vector $x'$ can be determined using the relation:

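$$x'_i = \operatorname{sgn}\left(\sum_{j=1}^{N} M_{ij} x_j\right) = \operatorname{sgn}\left(\sum_{j=1}^{N} \frac{1}{N}\sum_{\alpha=1}^{M} s'_{i\alpha} s_{j\alpha} x_j\right) = \operatorname{sgn}\left(\sum_{\alpha=1}^{M} s'_{i\alpha} \sum_{j=1}^{N} s_{j\alpha} x_j\right)$$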

This relation gives a low-complexity mapping that can easily be computed. The decoder could be the (Moore-Penrose) pseudo-inverse of the coder matrix.
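The coder and the mapping $x' = F(x)$ can be sketched as follows. Assumptions: plain Python lists, `random.Random` as the source of the uniformly distributed $\pm 1$ code elements, and $\operatorname{sgn}(0) = +1$; the function names are hypothetical, and the pseudo-inverse decoder is not included. For mutually orthogonal patterns, $\sum_j M_{ij} s_{j\beta} = \frac{1}{N}\sum_\alpha s'_{i\alpha}(s_\alpha^T s_\beta) = s'_{i\beta}$ exactly, so every stored pattern is mapped onto its own code vector; for correlated patterns this holds only approximately.

```python
import random


def sgn(v):
    # sgn(0) = +1 is an assumed tie-breaking convention
    return 1 if v >= 0 else -1


def random_codes(m, n, rng):
    """Step 2: M random vectors s'_alpha in {-1,1}^N with P(+1) = P(-1) = 0.5."""
    return [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(m)]


def coder_matrix(patterns, codes):
    """Step 3: M_ij = (1/N) * sum_alpha s'_i,alpha * s_j,alpha."""
    n = len(patterns[0])
    return [[sum(c[i] * s[j] for s, c in zip(patterns, codes)) / n
             for j in range(n)]
            for i in range(len(codes[0]))]


def encode(mat, x):
    """x'_i = sgn(sum_j M_ij x_j)."""
    return [sgn(sum(mij * xj for mij, xj in zip(row, x))) for row in mat]


p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
codes = random_codes(2, 8, random.Random(0))
mat = coder_matrix([p1, p2], codes)
print(encode(mat, p1) == codes[0], encode(mat, p2) == codes[1])  # True True
```

Each output element costs $N$ multiplications, so the whole mapping is $O(N^2)$, in line with the small-complexity requirement.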

The topological invariance of the mapping

We have to examine the above method further, namely: is the mapping described in the previous section topologically invariant? To answer this question we must prove that if

$$s_\beta = \arg\min_{s_\alpha \in S} d(x, s_\alpha)$$

then

$$s'_\beta = \arg\min_{s'_\alpha \in S'} d(x', s'_\alpha)$$

holds in the transformed space.

[This proof was completely incomprehensible to me due to the many mistakes in the ‘3 Hopfield Networks.pdf’, page 16]
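Without a proof, the property can at least be checked empirically: take probe vectors $x$, find the nearest stored pattern in the original space and the nearest code vector to $x' = F(x)$ in the transformed space, and count how often the two indices agree. The sketch below assumes that $d$ is the Hamming distance and that ties are broken by the lowest index; a rate of 1.0 on a set of probes is evidence for, not a proof of, topological invariance. It repeats the small coder so that it runs on its own, and all names and the fixed code vectors are hypothetical.

```python
def sgn(v):
    # sgn(0) = +1 is an assumed tie-breaking convention
    return 1 if v >= 0 else -1


def coder_matrix(patterns, codes):
    # M_ij = (1/N) * sum_alpha s'_i,alpha * s_j,alpha
    n = len(patterns[0])
    return [[sum(c[i] * s[j] for s, c in zip(patterns, codes)) / n
             for j in range(n)]
            for i in range(len(codes[0]))]


def encode(mat, x):
    # x'_i = sgn(sum_j M_ij x_j)
    return [sgn(sum(mij * xj for mij, xj in zip(row, x))) for row in mat]


def hamming(a, b):
    return sum(ai != bi for ai, bi in zip(a, b))


def nearest(vectors, x):
    """Index of the vector closest to x in Hamming distance (lowest index on ties)."""
    return min(range(len(vectors)), key=lambda k: hamming(vectors[k], x))


def invariance_rate(patterns, codes, probes):
    """Fraction of probes whose nearest pattern index equals the nearest code index of F(x)."""
    mat = coder_matrix(patterns, codes)
    hits = sum(nearest(patterns, x) == nearest(codes, encode(mat, x)) for x in probes)
    return hits / len(probes)


p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
codes = [[1, 1, -1, -1, 1, 1, -1, -1], [1, -1, -1, 1, 1, -1, -1, 1]]
probes = [p1, p2, [-1] + p1[1:], p2[:7] + [1]]
print(invariance_rate([p1, p2], codes, probes))  # 1.0
```

In practice one would use many random probes at several noise levels; a rate noticeably below 1.0 would show that the mapping changes which stored pattern is nearest.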


Error correcting decoder

Motivation

To guarantee a certain Quality of Service (QoS), for example in mobile communication, a given bit error probability and data rate have to be assured. The bit error probability depends on the Signal-to-Noise Ratio (SNR) and the data rate on the bandwidth. Usually neither of them can be physically increased, because the power is limited (e.g. the SNR of mobile phones cannot be increased because of their batteries) and frequency bands are very expensive and already in use (the bandwidth is limited). This leaves us looking for algorithmic solutions, and one of them is to use Hopfield Neural Networks (HNN) as error correcting decoders.

Also in this case it is necessary to transform the original learning set vectors into quasi-orthogonal vectors to reach the Informational Theoretical Capacity, as in the previous application of pattern recognition (see section ‘The network and the transformations’).

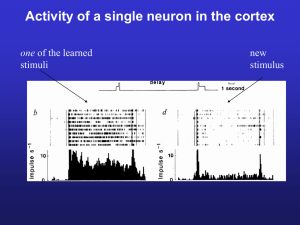

An experimental setup for comparing the HNN’s performance with a simple threshold detector is shown in the figure.

The only drawback compared to a simple threshold detector is that the HNN converges to a steady state in $O(N^2)$ (the threshold detector in only $O(N)$), but it is still worth it, since the bit error probability can be decreased considerably by using the HNN.
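The comparison can be imitated with a small Monte Carlo simulation. This is a sketch under our own assumptions, not the setup in the figure: antipodal ($\pm 1$) signalling over an additive white Gaussian noise channel with standard deviation `sigma`, a message set of `m` random (hence quasi-orthogonal) code vectors stored with the Hebb rule, and an HNN that starts from the threshold detector's hard decisions. Each full update sweep costs $N^2$ multiplications, which is the complexity price mentioned above; all names and parameter values are hypothetical.

```python
import random


def sgn(v):
    # sgn(0) = +1 is an assumed tie-breaking convention
    return 1 if v >= 0 else -1


def hebb_weights(codewords):
    # W = (1/N) * sum_alpha s_alpha s_alpha^T
    n = len(codewords[0])
    return [[sum(c[i] * c[j] for c in codewords) / n for j in range(n)]
            for i in range(n)]


def hnn_decode(w, y, max_sweeps=50):
    # asynchronous updates; each sweep costs N^2 multiplications
    y = list(y)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(y)):
            new = sgn(sum(wij * yj for wij, yj in zip(w[i], y)))
            if new != y[i]:
                y[i], changed = new, True
        if not changed:
            break
    return y


def simulate(n=32, m=2, sigma=1.0, trials=200, seed=1):
    """Return (bit error rate of the threshold detector, bit error rate of the HNN)."""
    rng = random.Random(seed)
    codewords = [[rng.choice((-1, 1)) for _ in range(n)] for _ in range(m)]
    w = hebb_weights(codewords)
    thr_errors = hnn_errors = 0
    for _ in range(trials):
        sent = rng.choice(codewords)
        received = [b + rng.gauss(0.0, sigma) for b in sent]  # AWGN channel
        hard = [sgn(r) for r in received]                      # threshold detector, O(N)
        decoded = hnn_decode(w, hard)                          # HNN starts from hard decisions
        thr_errors += sum(a != b for a, b in zip(hard, sent))
        hnn_errors += sum(a != b for a, b in zip(decoded, sent))
    bits = n * trials
    return thr_errors / bits, hnn_errors / bits


thr_ber, hnn_ber = simulate()
print(f"threshold detector BER: {thr_ber:.4f}, HNN BER: {hnn_ber:.4f}")
```

With `sigma=1.0` the threshold detector's error rate should be close to the Gaussian tail probability $Q(1) \approx 0.16$, while the HNN error rate should typically be much lower as long as `m` stays well below the capacity $N/(2\log N)$.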

References

1. http://neural.hit.bme.hu/pazmany/neuralis/fileok/3%20Hopfield%20Networks.pdf

2. http://digitus.itk.ppke.hu/~losda/anyagok/ErrorCorrectingDecoder/error_correcting_hnn_DOCUMENT.pdf
