
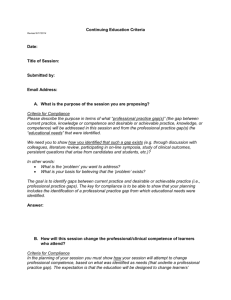


Mental models, confidence, and performance in a complex dynamic decision making environment

Georgios Rigas and Fredrik Elg

Uppsala University, Department of Psychology, Box 1225, 752 48 Uppsala, Sweden

Making decisions in complex dynamic systems continuously engages processes of knowledge acquisition and knowledge application. These processes can be investigated in isolation in the psychological laboratory by means of abstract dynamic systems (Funke, 1995). In a microworld, however, knowledge acquisition and knowledge application cannot be isolated from each other in a meaningful way; what we can do instead is observe these processes in interaction. In research with microworlds, participants are allowed to organize their behavior in any way they consider appropriate. Microworlds are computer simulations of selected aspects of reality in which the details of reality are omitted. A number of microworlds have been used in psychological research over the last 20 years (for reviews, see Brehmer & Dörner, 1993; Funke, 1988). A microworld can be a city in which the participant acts as mayor (Dörner, Kreuzig, Reither, & Stäudel, 1983), a developing country in Africa (Dörner, Stäudel, & Strohschneider, 1986), a burning forest (Lövborg & Brehmer, 1991), and so on. Microworlds are characterized by varying degrees of complexity, intransparency, novelty, and dynamic change. Under certain conditions, acquiring more knowledge is incompatible with effectively applying existing knowledge, and testing simplistic hypotheses about isolated causal chains is often impossible, because the variables of complex dynamic systems are interconnected in causal nets. What a participant needs to do in a complex microworld has been described by Dörner (e.g., Dörner & Wearing, 1995) in terms of GRASCAM (General Recursive Analytic Synthetic Constellation Amplification) processes. A GRASCAM process is expected to lead to the enrichment and differentiation of a mental representation.
In this way, a network of causal relations is built. Differences in how elaborated these causal networks are across participants have been proposed as an explanation of differences in their behavior. Elaborated networks are hypothesized to lead to (1) more decisions, which are also more coordinated, (2) fewer questions, and (3) more stable information asking and decision making. The ability to construct elaborated causal networks is assumed to depend on a person's heuristic competence. Heuristic competence can be defined as a person's confidence in his or her ability to find any missing operators needed to maintain control over a process or accomplish a task. According to Dörner (e.g., Dörner & Wearing, 1995), heuristic competence is the context-independent component of actual competence. An individual's confidence that he or she already knows how to fulfill the requirements of a task, the epistemic competence, is the context-dependent component of actual competence. If an individual's heuristic competence is high, then actual competence remains stable. To test these hypotheses, it is necessary to find ways to assess people's mental models of the structural relations of a microworld. One technique for measuring structural knowledge of a dynamic system is the causal diagram. We adapted this technique to assess the structural aspects of people's mental models in MORO (see Figure 1). MORO is a computer simulation of the living conditions of a small nomad tribe in the southern Sahara (Dörner, Stäudel, & Strohschneider, 1986).

Figure 1. The causal structure of the central variables in the MORO simulation. To assess the structural aspects of people's mental models, all arrows and signs were removed.
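The paper does not specify how completed causal diagram templates were scored. As a hedged illustration only, a causal diagram of the kind shown in Figure 1 can be represented as a set of signed, directed relations; elaboration can then be counted as the number of elements and relations, and a completed template can be compared with a reference diagram. The variable names A to D, the edges, and the scoring rule `template_score` are hypothetical placeholders, not the MORO structure and not the authors' procedure.

```python
# Hypothetical sketch: a signed causal diagram and two simple scores.
# Variable names A-D and all edges are placeholders, NOT the MORO structure in Figure 1.
from typing import Dict, Tuple

Edge = Tuple[str, str]       # (cause, effect)
CausalNet = Dict[Edge, int]  # edge -> +1 for a positive, -1 for a negative influence


def elaboration(net: CausalNet) -> Tuple[int, int]:
    """Return (number of elements, number of relations) in a causal network."""
    elements = {variable for edge in net for variable in edge}
    return len(elements), len(net)


def template_score(reference: CausalNet, answer: CausalNet) -> float:
    """Share of reference relations reproduced with correct direction and sign.

    Hypothetical scoring rule; extra relations in `answer` are not penalized.
    """
    if not reference:
        return 0.0
    hits = sum(1 for edge, sign in reference.items() if answer.get(edge) == sign)
    return hits / len(reference)


if __name__ == "__main__":
    reference = {("A", "B"): +1, ("B", "C"): -1, ("C", "A"): +1, ("D", "C"): +1}
    answer = {("A", "B"): +1, ("B", "C"): +1, ("D", "C"): +1}
    print(elaboration(answer))                # (4, 3)
    print(template_score(reference, answer))  # 0.5
```

Because this rule only rewards reproduced relations, two diagrams with equal counts of elements and relations can still score very differently, which mirrors the paper's point that counting elements and relations ignores the qualitative aspects of a model.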
However, presenting a causal diagram template of the type illustrated in Figure 1 (without arrows and signs) before the interaction with the microworld reduces intransparency, because it reveals the central variables of the simulation. Intransparency is one of the most important characteristics of natural decision making environments, so it is essential to study decision making processes in the laboratory in its presence. We therefore used this technique only after the MORO session. To assess the mental models of our subjects, we had them answer a number of open questions. We used their answers to construct structural causal models of the type illustrated in Figure 2. These reconstructed causal models can be evaluated by expert raters. Here we examine how behavior and performance in MORO may be affected by the assessment of mental models, and the interrater reliability of these assessments. We also discuss the pattern of correlations between the ratings of the mental models, heuristic and actual competence, and behavior and performance in MORO in relation to Dörner's theoretical hypotheses.

Method

Participants

Twenty-two people participated in the study, none with prior experience of the Moro/WinMoro task. Their ages ranged from 20 to 34 years (mean = 23.9). There were 7 male and 15 female subjects, and each received two movie tickets for participating. The behavior and performance of this group are compared with those of a control group of thirty-eight subjects (Brehmer & Rigas, 1997) tested under standard conditions.

Apparatus

WinMoro, version 7, 1993, by Victor & Brehmer, based on "Moro" version 17.5.88 by Dörner et al. (1986), was used. The program was run under Windows 3.11 on a PC.

Tasks

Heuristic competence (HC) was measured by a questionnaire developed by Stäudel (1987), with additional questions (Rigas, Brehmer, Elg, & Heikinen, 1997).

Mental Model Questionnaire (MMQ).
MMQ is a set of open questions designed to tap subjects' mental models of the goals of and assumptions about the task, of the system to be controlled, and of their self-perceived ability to meet the goals of the task. Actual competence (AC), motivation (MOT), and perceived relevance (REL) of one's own decision making in the task were measured by direct questions of the type "How motivated are you?".

WinMoro, a complex and dynamic simulation of the living conditions of a tribe in the south-west Sahara, Africa, served as the decision making task. The experimenter functioned as the interface: participants had no direct visual access to the computer screen.

Procedure

The HC questionnaire was administered first, followed by an instruction in which key issues concerning the subjects' role and the nature of the system were addressed, without explicit indications of decision policies. Before the start of the experiment, subjects were required to fill in the MMQ, AC, MOT, and REL forms, which they then updated at year 4 and at the end of the MORO session. Subjects were also required to fill in the MOT and REL forms at the time of a catastrophe and one year after the catastrophe.

Results

No statistically significant differences in performance were found between the group in this study and the control group from Brehmer and Rigas (1997). (Pastures, number of cattle, groundwater level, number of tsetse flies, capital, population, deaths by starvation, number of years until the first catastrophe, and a weighted measure of general performance did not differ in any systematic way.) Statistically significant differences were found for some behavior variables: participants in the present study spent more time, asked more questions in general, and asked more central questions in particular. Questions regarding the values of the central variables in the simulation, as illustrated in Figure 1, were scored as central questions.
No systematic differences were obtained in the number of decisions or in the stability of information asking or decision making. From the subjects' answers in the MMQ, we constructed structural models of the type illustrated in Figure 2, which shows the model of a successful participant (left) and that of an unsuccessful one (right). If only the number of elements and relations is considered, and not the qualitative aspects of the models, the two do not differ in level of elaboration. However, the model of the successful subject has a higher level of abstraction.

Figure 2. Reconstructed structural models for a successful (left) and an unsuccessful (right) participant.

The interrater reliability for the ratings of the quality of the causal models was .85. The interrater reliability for the ratings of subjects' confidence was .82 at year 1, .78 at year 4, and .80 at year 30. Contrary to expectation, confidence in one's mental model and heuristic competence correlated negatively with performance variables, with correlation coefficients ranging from .00 to -.41. Quality of the mental model did not correlate significantly with any performance variable. Structural knowledge, as assessed by the causal diagram template after the MORO session, correlated negatively with groundwater level, r = -.41. Structural knowledge also correlated negatively with the stability of decision making, r = -.48. As expected, quality of the mental model before the interaction correlated negatively with the number of questions, r = -.31, and positively with the number of central questions, r = .46.

Discussion

The assessments of the mental models do not seem to have had any undesirable effects compared with the control group, and the interrater reliability was sufficiently high. However, the pattern of correlations is not as expected. The negative correlations of heuristic competence and confidence in one's mental model with performance are puzzling.
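Both the interrater reliabilities and the correlations with performance reported above are correlation coefficients. The text does not state which reliability coefficient was used; for two raters scoring the same models on a numeric scale, one common choice is the Pearson correlation between their ratings (an intraclass correlation is a frequent alternative). A minimal sketch, assuming Pearson r and using invented ratings that are not data from the study:

```python
# Minimal sketch, assuming interrater reliability = Pearson r between two raters.
# The ratings below are invented for illustration; they are not data from the study.
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    if s_xx == 0 or s_yy == 0:
        raise ValueError("correlation is undefined for a constant list")
    return s_xy / sqrt(s_xx * s_yy)


if __name__ == "__main__":
    rater_1 = [2, 4, 5, 3, 6]  # quality ratings of five causal models by rater 1
    rater_2 = [2, 5, 6, 3, 5]  # the same five models rated by rater 2
    print(round(pearson_r(rater_1, rater_2), 2))  # 0.87
```

On Python 3.10 or later, `statistics.correlation` computes the same coefficient; the explicit version is shown so each step (centering, cross-products, normalization) is visible.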
On the other hand, the negative correlations of structural knowledge after the MORO session with one performance variable and with the stability of decision making may reflect the degree to which the simulation program was explored. An alternative hypothesis for these findings is that it is not the structural aspects of one's mental model that determine performance, but its implicit and dynamic aspects. It is very important that we find ways to measure these implicit and dynamic aspects of mental models. In general, efforts to find good predictors of success in microworlds have not been successful. Intelligence and personality have consistently been found to be uncorrelated with performance in dynamic systems (Brehmer & Rigas, 1997; Dörner et al., 1983; Putz-Osterloh & Lüer, 1981; Rigas, 1997; Strohschneider, 1991). These findings have been explained either as a result of the different cognitive demands that ability tests and dynamic systems place on participants (Dörner, 1986; Putz-Osterloh, 1993) or as a consequence of the reliability problems of performance measures in microworlds (Funke, 1995). Two independent studies (Rigas, 1997; Strohschneider, 1986) found that performance measures in MORO did not have good reliability, at least insofar as the reliability of performance scores in such a complex dynamic system can be assessed using the test-retest method and true score theory. The typical stability coefficients in these two studies were about .30. The reliability problems of performance measures in complex dynamic systems should therefore be our highest research priority. Heuristic competence is the only construct that has been found in some studies (e.g., Stäudel, 1987) to be related to performance in the MORO simulation. However, Stäudel's findings could not be replicated in a number of experiments by our research group in Uppsala (e.g., Elg, 1992).
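Why stability coefficients of about .30 matter can be made concrete with the classical attenuation bound from true score theory (a standard result, added here as an illustration rather than part of the original analyses): the correlation between two observed measures $X$ and $Y$ cannot exceed the square root of the product of their reliabilities $r_{XX'}$ and $r_{YY'}$.

$$
r_{XY} \le \sqrt{r_{XX'}\, r_{YY'}}
$$

With a performance reliability of $r_{YY'} \approx .30$ and even a perfectly reliable predictor ($r_{XX'} = 1$), no observed correlation with performance could exceed $\sqrt{.30} \approx .55$; for a predictor with reliability .80, the ceiling falls to $\sqrt{.24} \approx .49$. Any predictor, however valid, is therefore capped well below 1 as long as performance is measured this unreliably.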
The internal consistency coefficients of scales measuring heuristic competence are over .80 (Rigas et al., 1997). The problem, then, must lie in the way this construct is measured. We should thus abandon respondent measures and search for operant measures of heuristic competence.

References

Brehmer, B., & Dörner, D. (1993). Experiments with computer-simulated microworlds: Escaping both the narrow straits of the laboratory and the deep blue sea of the field study. Computers in Human Behavior, 9, 171-184.

Brehmer, B., & Rigas, G. (1997). Transparency and the relationship between dynamic decision making and intelligence. Manuscript in preparation.

Dörner, D. (1986). Diagnostik der operativen Intelligenz. Diagnostica, 32, 290-308.

Dörner, D., Kreuzig, H. W., Reither, F., & Stäudel, T. (Eds.). (1983). Lohhausen. Vom Umgang mit Unbestimmtheit und Komplexität. Bern, Switzerland: Hans Huber.

Dörner, D., Stäudel, T., & Strohschneider, S. (1986). MORO. Programmdokumentation (Memorandum 23). Lehrstuhl Psychologie II, Universität Bamberg.

Dörner, D., & Wearing, A. (1995). Complex problem solving: Toward a (computer-simulated) theory. In P. A. Frensch & J. Funke (Eds.), Complex problem solving: The European perspective (pp. 65-99). Hillsdale, NJ: Lawrence Erlbaum Associates.

Elg, F. (1992). Effects of economic competence in a complex dynamic task. Thesis, Uppsala University, Department of Psychology.

Funke, J. (1988). Using simulation to study complex problem solving: A review of studies in FRG. Simulation & Games, 19, 277-303.

Funke, J. (1995). Experimental research on complex problem solving. In P. A. Frensch & J. Funke (Eds.), Complex problem solving: The European perspective (pp. 243-268). Hillsdale, NJ: Lawrence Erlbaum Associates.

Lövborg, L., & Brehmer, B. (1991). NEWFIRE: A flexible system for running simulated fire-fighting experiments. Risø National Laboratory, Roskilde, Denmark.

Putz-Osterloh, W. (1993). Complex problem solving as a diagnostic tool. In H. Schuler, J. L. Farr, & M. Smith (Eds.), Personnel selection and assessment: Individual and organizational perspectives (pp. 289-301). Hillsdale, NJ: Lawrence Erlbaum Associates.

Putz-Osterloh, W., & Lüer, G. (1981). Über die Vorhersagbarkeit komplexer Problemlöseleistungen durch Ergebnisse in einem Intelligenztest. Zeitschrift für Experimentelle und Angewandte Psychologie, 28, 309-334.

Rigas, G. (1997). Learning by experience in a dynamic decision making environment and intelligence. Paper to be presented at SPUDM, 1997, Leeds, England.

Rigas, G., Brehmer, B., Elg, F., & Heikinen, S. (1997). Reliability and validity of heuristic competence scales. Manuscript in preparation.

Stäudel, T. (1987). Problemlösen, Emotionen und Kompetenz. Regensburg, Germany: Roderer.

Strohschneider, S. (1986). Zur Stabilität und Validität von Handeln in komplexen Realitätsbereichen. Sprache & Kognition, 5, 42-48.

Strohschneider, S. (1991). Problemlösen und Intelligenz: Über die Effekte der Konkretisierung komplexer Probleme. Diagnostica, 37, 353-371.