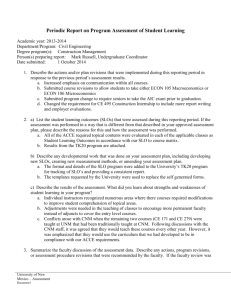

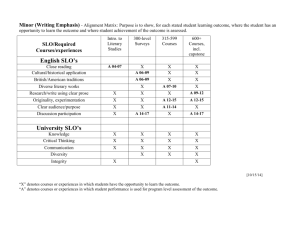

The Marketing of Student Learning Objectives (SLOs)

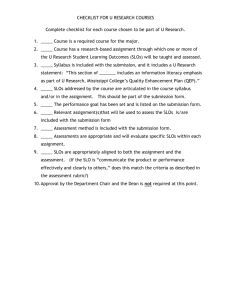

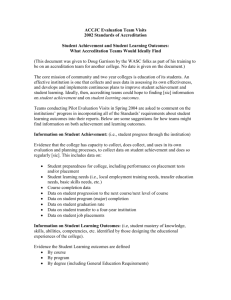

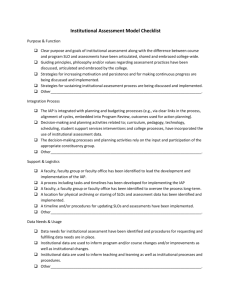
