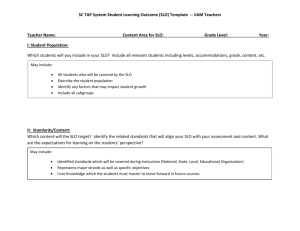

Course-Level Assessment Questions

advertisement

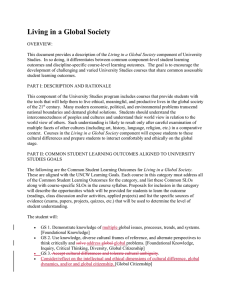

March 9, 2011

Aim of the SLO Process: Build a purposeful system to measure, document, reflect on, discuss, and improve student learning.

The Ideas of the Process:

- What is the value and expectation of course-level assessment?
- What would effective course-level assessment look like, and what would it yield?
- When, where, how, how often, and why does the dialogue happen? What opportunities or venues exist to enable that discussion?
- What about faculty members using different assessment instruments to assess the same course? How do the aggregated course-specific findings help inform their conversations?
- What kind of course-level assessment plan will enable a rigorous, thoughtful, and useful process?
- How does course-level assessment interact with assessment efforts at other levels (e.g., program, general education)?
- What is effective about the program-level SLO assessment process? How can those effective elements be carried over into assessment at the course level?
- What is the purpose of this form? How is it intended to be used, and by whom? How does it relate to the resource allocation process (since the programmatic implications in the form refer to resources)?
- By what process can program-level and course-level SLOs be modified?
- Is the reporting form submitted by faculty member, by section, or by course?
- Is a simplified option that allows for more dialogue and avenues for change possible?
- How is the loop closed? How will future assessment reports on the same course be managed?

The Mechanics of the Process:

- What is the expectation: how often are courses required to assess and report? Is every SLO assessed every term?
- The form allows the responder to select a course. Are departments at liberty to select an assessment plan that works for them? Are there any college expectations? How do these expectations relate to the expectations of other assessment processes (e.g., program-level, institution-level)?
- Which courses are included in the pilot? Do you want to consider CTE courses, since they are familiar with core indicators and may be dabbling with SLO-like practices with their respective external agencies?
- What is the timeline? Is there a cycle for the assessment plan?
- Who fills this out? Is one form submitted per course or per faculty member? Is it section or course assessment?
- What criteria were used to determine whether, and how many, students met the SLO?
- How does the form capture the "story" so that it can inform the discussion about the assessment effort at a later time?
- How are the reporting form responses organized? Where does this information go? How, and in what form, does it circle back to the faculty member?
- How many SLOs does a typical course have?
- How would the results be used when faculty members using different instruments report different percentages of students meeting the SLOs?

Mechanical Suggestions:

- Collect all information per SLO: pair each SLO with its type of assessment instrument instead of separating all SLO entries from all assessment instrument entries, and consider doing the same with the percentage of students who met that SLO.
- Consider asking open-ended questions as appropriate.
- Perhaps leave the implications section as a narrative, since people might be prone to simply checking things off (a checklist may also be used as a way to confirm the implication selections when the form is rolled out college-wide).
- Consider putting the classroom implications before the programmatic implications on the course-level reporting forms.