Introduction to Assessment Powerpoint


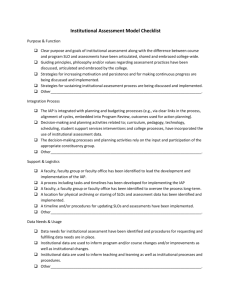

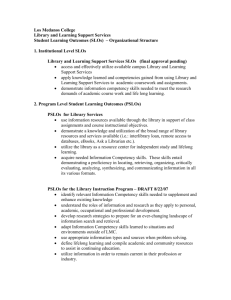

Defining and Documenting Student Learning Outcomes at Lamar State College-Port Arthur

Why assess student learning?

Improve quality of education:
- Student learning
- The student experience
- Institutional effectiveness
- Planning and budgeting

Provide accountability to:
- Students
- Employers
- Parents
- External funding sources
- Transfer institutions
- SACS-COC
- THECB

Faculty Concerns
- “We already assess: grades.”
- “This is additional work.”
- “I’m too busy.”
- “This violates my academic freedom.”
- “Degree attainment demonstrates that SLOs are attained.”
- “I don’t know how.”
- “When will this go away?”
- “We’re only doing this through the SACS study, then we will just quit this assessment business.”

Why aren’t grades enough?
- Inconsistency between instructors teaching the same courses: non-standardized grading practices.
- Grades may reflect student behaviors such as class participation, attendance, citizenship, extra credit, missed assignments, and other factors.
- Accuracy in assessment requires meaningful data across sections, through time.

Sure, the students like our services and programs, and they love our classes, but what evidence do we have that what we are doing is making a difference?

Assessment turns colleges from being teacher-centered to being student- and learning-centered.

Assessment, defined
Assessment is the systemic, methodical collection, review, and use of information about educational programs undertaken for the purpose of improving student learning and development. (Palomba & Banta, 1999)

A successful assessment program is
- Continuous and ongoing
- Easy to administer
- Affordable
- Timely
- Meaningful
- Accessible to users
- Useful and pertinent
- The basis for future improvements

Questions Guiding Assessment
1. What should students learn from our educational programs and experience?
2. How can we document and evaluate how well we are teaching and how well students are learning?
3. What changes should we make to improve teaching and learning?
4. Do the changes we make actually work?

Four levels of college assessment
1. Institutional level
2. Program/departmental level
   a. General education/core curriculum
   b. Degree programs
   c. Developmental education
   d. Continuing education
3. Course level
4. Individual student level

2a: Gen Ed/Core Curriculum
- Oral and written communication skills
- Critical thinking skills
- Mathematical problem-solving skills
- Information literacy
- Technology literacy
- Social and interpersonal skills
- Cultural/global/diversity skills

2c/2d: Developmental and distance education
- Developmental education is assessed by performance in the next level course.
- Distance education is assessed by its equivalency to traditionally delivered course material.

2b: Assessing departments, degrees, and programs
Where to start?
- Catalog descriptions
- Syllabi and course outlines
- Course assignments and tests
- Textbooks (esp. tables of contents, introductions, and summaries)
- Colleagues
- Professional associations

The vocabulary of assessment
- Value-added: the increase in learning that occurs during a course, program, or undergraduate education (Leskes, 2002)
- Absolute learning outcome: assesses a learner's achievement against an absolute standard or criterion of performance
- Embedded assessment: a means of gathering information about student learning that is integrated into the teaching-learning process
- Authentic assessment: requires students to actively accomplish complex and significant tasks, while bringing to bear prior knowledge, recent learning, and relevant skills to solve realistic or authentic problems
- Formative assessment: the gathering of information about student learning, during the progression of a course or program and usually repeatedly, to improve the learning of those students (Leskes, 2002)
- Summative assessment: the gathering of information at the conclusion of a course, program, or undergraduate career to improve learning or to meet accountability demands (Leskes, 2002)
- Triangulation: multiple lines of evidence point to the same conclusion
- Quantitative: methods that rely on numerical scores or ratings
- Qualitative: methods that rely on descriptions rather than numbers

SLO = ?
A. Student Life Organization
B. Special Liquor Order
C. Student Learning Outcomes
D. Space Liaison Officer

Student Learning Outcomes, defined
“Learning outcomes are statements of knowledge, skills, and abilities the individual student possesses and can demonstrate upon completion of a learning experience or sequence of learning experiences (e.g., course, program, degree).” (Barr, McCabe, and Sifferlen, 2001)

SMART SLOs
- Smart Hmmm….
- Measurable
- Attainable
- Realistic and Results-Oriented
- Timely

Good learning outcomes are:
- Learner centered
- Key to the course, program, and institutional mission
- Meaningful to both students and faculty
- Measurable

SLOs at Different Levels
- Course Level: Students who complete this course can calculate and interpret a variety of descriptive and inferential statistics.
- Program Level: Students who complete the Psychology program can use statistical tools to analyze and interpret data from psychological studies.
- Institutional Level: Graduates from our campus can apply quantitative reasoning to real-world problems.

Program-level SLOs vs Course-level SLOs
- Program-level SLOs (PSLOs) are a holistic picture of what is expected of students completing a defined program or course of study. PSLOs should reflect the total learning experiences in the program, not just the courses taken.
- Course-level SLOs (CSLOs) are a holistic picture of what is expected of students completing a particular course. CSLOs should be related to the PSLOs and the institutional mission.

Writing Student Learning Outcomes
1. Identify what the student should learn:
   a. What should the student be expected to know?
   b. What should the student be expected to be able to do?
   c. How is a student expected to be able to think?
2. Keep the outcomes to a single, simple sentence.
3. Be as specific as possible.
4. Use active verbs that describe an observable or identifiable action (see Bloom’s Taxonomy).
5. Identify success criteria.
6. Think about how you will measure the outcomes (documentation, artifacts, evidence).

Bloom’s Taxonomy
Higher-order, critical thinking
- Evaluation
- Synthesis
- Analysis
- Application
- Comprehension
- Knowledge
Lower-order, recall

Bloom’s Taxonomy
Higher-order, critical thinking
- Evaluation: To judge the quality of something based on its adequacy, value, logic, or use.
- Synthesis: To create something, to integrate ideas into a solution, to propose an action plan, to formulate a new classification scheme.
- Analysis: To identify the organizational structure of something; to identify parts, relationships, and organizing principles.
- Application: To apply knowledge to new situations, to solve problems.
- Comprehension: To understand, interpret, compare and contrast, explain.
- Knowledge: To know specific facts, terms, concepts, principles, or theories.
Lower-order, recall

What are the problems with these SLOs?
- The student will complete a self-assessment survey.
- The student will appreciate the benefits of exercise.
- The student will develop problem-solving skills and conflict resolution skills.
- The student will strengthen his/her writing skills.
- 100% of students will demonstrate competency in managing a database.

Stronger SLOs (“Students will be able to” is assumed)
- Articulate five health-related stress impacts on the body.
- Analyze a nutrition food label and explain various components of that food label and their relation to healthy food choices.
- Apply principles of logical argument in their writing.
- Evaluate the strengths and weaknesses of open and closed source software development models.
- Demonstrate appropriate First Aid procedures on a heart attack victim.

SLO Assessment
- Is designed to improve student learning
- Is faculty-driven
- Is an ongoing, not episodic, process
- Is important to “close the loop” or act on the findings
- Is about evaluating the effectiveness of programs, courses, and services, not individual students or individual instructors.

Meaningful SLO Assessment is
- Measurable
- Sustainable

Process for measuring SLOs
- Create written statements of measurable SLOs
- Use results to improve student learning
- Evaluate student performance, assemble data, and report results
- Set benchmarks
- Choose the evaluation tools
- Set standards for levels of performance on each SLO
- Identify observable factors that provide the basis for assessing which level of performance has been achieved

Good evidence is
- Relevant: meaningful
- Verifiable: reproducible
- Representative: sample size
- Cumulative: over time
- Actionable: usable results

Using a Rubric
A rubric is simply a table in which you connect your student learning objective to the measurement of success. The next development activity will cover rubrics more thoroughly.

| | Accomplished (3) | Competent (2) | Developing (1) | Not Observed (0) |
| --- | --- | --- | --- | --- |
| SLO 1 | Success Criteria | Success Criteria | Success Criteria | |
| SLO 2 | Success Criteria | Success Criteria | Success Criteria | |
| SLO 3 | Success Criteria | Success Criteria | Success Criteria | |
| SLO 4 | Success Criteria | Success Criteria | Success Criteria | |

Identifying Success Criteria
Example from Medical Office Administration Program

| PSLO | Developing (1) | Competent (2) | Accomplished (3) | Not Observed (0) |
| --- | --- | --- | --- | --- |
| Apply current trends in medical insurance, HIPAA guidelines, and coding systems. | Occasional insight of insurance trends and understands current coding systems. | Moderate insight and analysis of insurance trends and usually able to locate a code. | Exceptional insight and analysis of insurance trends and mastery of the technique for locating a medical code. | Not enough information to assess. |

The success criteria are the benchmarks of successful attainment of the SLO.
The example above is for a Program, but the concept and process can also be applied to Course-level assessment.

SLO Evidence: Direct Measures
- Comprehensive/capstone exams or assignments
- Licensure examinations
- Professionally judged performances/demonstrations
- Portfolios (documented learning experiences)
- Value-added measures (pre/post testing, longitudinal studies and analyses)
- Standardized tests (CAAP, CLA)
- Case studies
- Embedded questions
- Simulations
- Rubrics

SLO Evidence: Indirect Measures
- Student satisfaction surveys
- Alumni satisfaction surveys
- Employer satisfaction surveys
- Grades
- Retention rates
- Graduation rates/surveys
- Placement rates (employment or transfer institutions)
- Focus groups/group interviews
- Advisory committee recommendations
- Reflective essays

Test Mapping
Test mapping is a process by which you identify which questions on your exams match up to the SLOs you’ve identified for the Program or Course and to the level of cognitive activity the question requires, using Bloom’s Taxonomy of measurable verbs. Use one map per test.

Today we are going over one portion of test mapping: matching up the test questions to the SLOs. In the near future we will have a development activity that covers test mapping more thoroughly.

Example of a Test Map
This example of a test map comes from a Program, but the process also works at the Course level.

| PSLO 2. Demonstrates awareness of cultural differences and similarities | Test Question Number |
| --- | --- |
| 2a. Identifies cultural characteristics (including beliefs, values, perspectives, and/or practices) | 5, 8, 9, 12 |
| 2b. Interprets works of human expression within cultural contexts. | 20, 21, 30 |
| 2c. Shows awareness of one’s own culture in relation to others. | |

(If you don’t have any questions that match up to the SLO, then leave a blank.)

Save Copies of All Work!
Please make a habit of
- Saving at least 10 random copies of all student work; photocopies or electronic copies are fine. Ideally you should save examples of excellent, mediocre, and poor work.
- Saving all scoring rubrics for performances or demonstrations, if you use them or as you develop them.
- Creating and saving a test map for all Scantron, multiple choice, short answer, and essay tests.

When in doubt, SAVE COPIES.
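
For instructors who keep test results electronically, the test map described earlier can also be stored in a simple machine-readable form. The Python sketch below is only one possible illustration, not a prescribed method: the SLO labels and question numbers are taken from the example test map, while the student names, the results, and the function name `percent_correct_by_slo` are hypothetical and invented for this example.

```python
# Hypothetical sketch: a test map stored as a dictionary, plus a per-SLO summary.
# SLO labels and question numbers follow the example test map in this presentation.
test_map = {
    "2a": [5, 8, 9, 12],
    "2b": [20, 21, 30],
    "2c": [],  # left blank: no questions on this test match SLO 2c
}

# Invented data for illustration only: for each student,
# the set of question numbers answered correctly.
correct_answers = {
    "student_1": {5, 8, 12, 20, 30},
    "student_2": {5, 9, 20, 21, 30},
}


def percent_correct_by_slo(test_map, correct_answers):
    """Return {slo: percent of mapped questions answered correctly}.

    An SLO with no mapped questions (or a test with no students)
    gets None, because there is no evidence to summarize.
    """
    summary = {}
    for slo, questions in test_map.items():
        if not questions or not correct_answers:
            summary[slo] = None
            continue
        attempts = len(questions) * len(correct_answers)
        hits = sum(
            1
            for answered in correct_answers.values()
            for question in questions
            if question in answered
        )
        summary[slo] = round(100 * hits / attempts, 1)
    return summary


summary = percent_correct_by_slo(test_map, correct_answers)
print(summary)
```

A summary like this shows at a glance which SLOs a test actually measures and which ones have no matching questions, which is the portion of test mapping covered here. It does not address the Bloom’s Taxonomy level of each question.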