Observer Ratings of Personality

advertisement

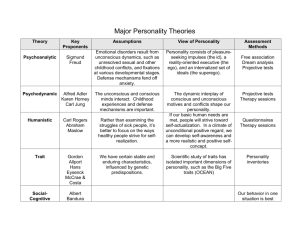

Robert R. McCrae and Alexander Weiss

National Institute on Aging, National Institutes of Health, DHHS, Baltimore, Maryland

Running head: OBSERVER RATINGS

Acknowledgement: This research was supported by the Intramural Research Program of the NIH, National Institute on Aging.

Long before self-report personality scales were invented (as long ago as Theophrastus' Characters, 319 B.C.E.), observers described personality traits in other people. Psychoanalysts and psychiatrists developed formal languages for the description of personality traits in patients that Block (1961) distilled into a common language in a set of Q-sort items. In psychology, perhaps the first important use of observer ratings was Webb's 1915 study of trait ratings of teacher trainees (Deary, 1996), and the discovery of the Five-Factor Model (FFM) of personality was made through the analysis of eight observer rating studies (Tupes & Christal, 1961/1992).

Yet despite their historical importance, observer ratings are used far less often than self-reports in personality assessment. This is unfortunate, because self-reports have well-known limitations, and observer ratings can complement and supplement them. There is now a solid body of evidence that ratings by informants well acquainted with the target provide reliable, stable, and valid assessments of personality traits (e.g., Funder, Kolar, & Blackman, 1995; Riemann, Angleitner, & Strelau, 1997).

There are several methods of obtaining observer-rating data. When members of a group are asked to assess each other, a ranking technique is sometimes used, in which each group member ranks every other member. Alternatively, group members may nominate the single individual who shows the highest level of a trait. Expert raters can be asked to complete a Q-sort to describe targets (e.g., Lanning, 1994).
However, in recent years most informant ratings have been obtained by the use of questionnaires that parallel familiar self-report instruments. The Big Five Inventory (BFI; Benet-Martínez & John, 1998) uses a format that can be applied to either self-reports or observer ratings; the Revised NEO Personality Inventory (NEO-PI-R; Costa & McCrae, 1992) has parallel forms, with "I . . .," "He . . .," and "She . . ." phrasings. The first-person version is called Form S, the third-person version Form R.

Observer ratings can be used in almost every context in which self-reports are normally used, but they are particularly valuable when (1) targets are not available to make self-reports; (2) self-reports are untrustworthy; or (3) researchers wish to evaluate and improve the accuracy of assessments by aggregating multiple raters (Hofstee, 1994). In this chapter we will focus on ratings of personality (including traits, motives, temperaments, and so on), but the method can also be used to assess psychopathology, moods, social attitudes, and many other psychological constructs.

A Step-by-Step Guide

Selecting or developing the scale. A researcher or practitioner who wishes to assess a personality construct needs some assessment instrument. For personality traits, standardized and validated instruments should be the first choice. The BFI provides a quick assessment of the five major personality factors, as does the short version of the NEO-PI-R, the NEO Five-Factor Inventory (NEO-FFI; Costa & McCrae, 1992). The full, 240-item NEO-PI-R provides more reliable measures of the factors, together with scores for 30 specific traits, or facets, that define the factors. Goldberg's (1990) adjective scales have been used for observer ratings (e.g., Rubenzer, Faschingbauer, & Ones, 2000).
The California Adult Q-Set (CAQ; Block, 1961) was originally intended for quantifying expert ratings, but it has been adapted for lay use (Bem & Funder, 1978) and can be scored in terms of the FFM (McCrae, Costa, & Busch, 1986).

There are several considerations in selecting among these instruments. The CAQ requires the rater to sort items from least to most characteristic according to a fixed distribution. This procedure eliminates certain response biases, but it is a lengthy and cognitively challenging task relative to completing standard questionnaires. The full NEO-PI-R gives much more detailed information than the NEO-FFI, but takes correspondingly longer to complete. The Goldberg adjectives and the BFI are chiefly useful for research, because they do not offer normative information. The NEO-FFI and NEO-PI-R have norms for observer ratings, and thus can be used in assessing individual cases as well as in research.

Observer ratings have generally been the preferred method of assessing temperament and personality in children. Among the validated measures available are the EASI-III Temperament Survey (Buss & Plomin, 1975), the Hierarchical Personality Inventory for Children (Mervielde & De Fruyt, 1999), and the California Child Q-Set (Block, 1971), which can be scored for FFM factors (John, Caspi, Robins, Moffitt, & Stouthamer-Loeber, 1994).

Because the FFM is a comprehensive model of personality traits, scales from these instruments will be relevant in most applications. In some instances, however, the construct of interest will be highly specialized (e.g., expectancy of occupational success) or only weakly related to FFM dimensions (e.g., religiosity). In these cases, researchers have two options. The first is to adapt an existing self-report scale for use by observers; the second is to develop a scale de novo.
The first option is clearly preferable; it saves time and effort, and there is now considerable experience suggesting that observer rating adaptations of self-report instruments maintain most of the psychometric properties of the original (e.g., McCrae et al., 2005). Research employing a newly adapted observer rating scale should ideally include established measures that can be used to provide validity evidence for the new scale.

If no established self-report measure is available, researchers can develop a new scale, following the same procedures and standards that are used in the development of self-report scales: writing relevant items, piloting the scale, examining internal consistency and factor structure, and gathering evidence of retest reliability and convergent and discriminant validity.

Observer rating scales are subject to biases and response sets much as self-report scales are, and these biases must be considered in creating new scales. Acquiescent responding (McCrae, Herbst, & Costa, 2001) is effectively neutralized by including approximately equal numbers of positively and negatively keyed items. Evaluative bias in observer ratings is usually called a halo effect, a tendency to attribute desirable or undesirable characteristics indiscriminately to a target. Such biases can be controlled to some extent by phrasing items in objective terms, by instructing raters to give a careful and honest description of the target, and by choosing raters who are unbiased, or whose biases cancel each other out: One's true personality probably lies somewhere between the descriptions given by one's best friend and one's worst enemy.

Selecting raters. The targets in an observer rating study are usually dictated by the research question: If one wants to look for personality predictors of heart disease, the targets will be coronary patients and controls. But there are several possibilities for selecting the raters.
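
The scale-construction advice above (balanced keying to neutralize acquiescence, followed by a check of internal consistency) can be sketched in a few lines. This is only an illustrative sketch: the four-item scale, the 1-to-5 response format, the choice of which items are negatively keyed, and the ratings themselves are all hypothetical, and Cronbach's alpha is used here as one common index of internal consistency rather than as a procedure prescribed by any of the instruments named in this chapter.

```python
from statistics import pvariance


def reverse_key(response, low=1, high=5):
    """Flip a response on a low..high agreement scale (1 -> 5, 2 -> 4, ...)."""
    return low + high - response


def score_items(responses, reversed_items, low=1, high=5):
    """Return item scores with the negatively keyed items flipped."""
    return [
        reverse_key(r, low, high) if i in reversed_items else r
        for i, r in enumerate(responses)
    ]


def cronbach_alpha(scored_rows):
    """Cronbach's alpha for a list of raters' scored item lists."""
    k = len(scored_rows[0])
    item_vars = [pvariance([row[i] for row in scored_rows]) for i in range(k)]
    total_var = pvariance([sum(row) for row in scored_rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 4-item observer rating scale; items 1 and 3 (0-based)
# are negatively keyed, so the scale is balanced.
REVERSED = {1, 3}
raw = [
    [5, 1, 4, 2],
    [2, 4, 2, 5],
    [4, 2, 5, 1],
    [5, 5, 5, 5],  # a rater who "strongly agrees" with every item
]

scored = [score_items(row, REVERSED) for row in raw]
yea_sayer_total = sum(scored[3])  # lands at the scale midpoint (4 items x 3)
alpha = cronbach_alpha(scored)
```

Because half the items are reversed, the rater who agrees with everything ends up with a total at the scale midpoint rather than at the high extreme, which is the sense in which balanced keying neutralizes acquiescence.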
Researchers who are interested in impression formation normally choose unacquainted individuals and provide them with a controlled exposure to the target. For example, raters may be shown a videotape of the target in a social interaction and asked to provide ratings (Funder & Sneed, 1993). More commonly, researchers are interested in obtaining accurate assessments of the target's personality, and choose raters who are already well-acquainted with the target: spouses, co-workers, roommates, or friends.

There is some controversy about the effect of length of acquaintance on the accuracy of ratings. Kenny (2004) goes so far as to say that "acquaintance is not as important in person perception as generally thought" (p. 265). His conclusions are based chiefly on laboratory studies, however, where short adjective scales are typically used and correlations across raters are generally small (Mdn r = .15; Kenny, 2004, Table 1) and remain so after further exposure to the target. Real-life settings and questionnaire measures yield more evidence of an acquaintance effect. For example, Kurtz and Sherker (2003) used the NEO-FFI on samples of college roommates tested after two weeks and fifteen weeks and found median self-other agreement rose from .27 to .43 across the five scales. It is likely, however, that a ceiling is reached fairly soon in such settings, because self-peer agreement in a study that involved raters who had been acquainted with the target for up to 70 years showed a median correlation of only .41 (Costa & McCrae, 1992).

These correlations are substantially higher than the '.3 barrier' once thought to characterize the upper limit of personality scale validities, but they are also substantially less than 1.0, which would indicate perfect concordance. Researchers who want higher correlations have two choices: They can select intimate informants instead of merely well-acquainted informants, or they can aggregate judgments from multiple raters.
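
The second choice, aggregation, can be illustrated with the Spearman-Brown prophecy formula, which gives the expected reliability of an average of k parallel raters from the correlation between any two of them. The sketch below is an assumption-laden illustration rather than an analysis reported in this chapter: the single-rater agreement of .40 is a hypothetical value, the raters are treated as interchangeable, and the result describes the reliability of the averaged rating, not its agreement with self-reports.

```python
def spearman_brown(r_single, k):
    """Expected reliability of the mean of k parallel raters,
    given the correlation r_single between any two single raters."""
    return k * r_single / (1 + (k - 1) * r_single)


R_SINGLE = 0.40  # hypothetical agreement between two individual raters

# Reliability of the aggregate for 1, 2, 4, and 8 raters per target.
aggregate_reliability = {k: spearman_brown(R_SINGLE, k) for k in (1, 2, 4, 8)}

# Gain from each doubling of the number of raters.
gain_1_to_2 = aggregate_reliability[2] - aggregate_reliability[1]
gain_2_to_4 = aggregate_reliability[4] - aggregate_reliability[2]
gain_4_to_8 = aggregate_reliability[8] - aggregate_reliability[4]
```

Under these assumptions each doubling of the number of raters buys a smaller improvement than the one before, which is the usual statistical reason for expecting diminishing returns from adding raters.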
There is evidence from American (Costa & McCrae, 1992) and Russian (McCrae et al., 2004) samples that spouse ratings agree with self-reports more highly than do other informants' ratings (Mdns = .58 vs. .40), presumably because people share more personal thoughts and feelings with spouses. In a Czech study (McCrae et al., 2004), high self-other agreement (Mdn = .57) was found for ratings by siblings, other relatives, and friends as well as spouses. Perhaps Czechs are generally more self-disclosing than are Americans and Russians.

Gathering data from multiple raters of each target has two advantages. First, one can assess interrater agreement, which gives a sense of the amount of confidence to be placed in the ratings; it is particularly useful as evidence for the validity of new scales. Second, one can aggregate (that is, average) across raters to improve the accuracy of the assessment. Agreement between raters is called consensus in the literature on person perception, and it can in principle arise from inaccurate shared stereotypes. But correlations between aggregated observer ratings and self-reports are less likely to be due to stereotypes, and studies show that increasing the number of raters increases self-other agreement, and thus, presumably, validity. Four raters per target appears to be the practical maximum, although there are diminishing returns from any more than two raters (McCrae & Costa, 1989). There has been no systematic research on the optimal combination of raters; however, it would seem that the fullest portrait would be obtained by combining reports from raters acquainted with the target in different ways, for example, a family member and a co-worker, or a same-sex friend and an opposite-sex friend.

Implementing the study. To this day, the bulk of personality research is conducted by collecting self-report data from college students.
The reason for this is simply convenience: College students are at hand, and they can give informed consent and complete a questionnaire in a single sitting. It is perfectly possible to conduct observer-rating studies in the same way, as long as anonymous targets are used. For example, if one wished to know whether the association between Openness to Experience and political preferences varied across the lifespan, one could randomly assign students to think of a young, middle-aged, or old man or woman and provide ratings of their personality and political beliefs (cf. McCrae et al., 2005). But more commonly, the researcher wants to know about particular targets: psychotherapy patients (see Bagby et al., 1998), job applicants, dating couples. In these situations, the design is a bit more involved. It is first necessary to identify raters, who are usually nominated by the target, and then in most cases it will be necessary to get informed consent from both target and rater. In obtaining consent, it would be important to specify what information from the observer rating, if any, would be divulged to the target: Some targets might want to participate only if they will receive feedback; some raters might want to participate only if their ratings were strictly confidential. In preparing instructions, it would be essential to make it clear to the rater who the intended target was, and to include some check on this—for example, by requiring the rater to provide the name and age of the target being rated. Beyond that, little special attention is needed in the instructions: Raters are simply asked to describe the target by judging the accuracy of personality items. People have very little difficulty with such a task. Vazire (in press) advocated and illustrated the use of informant reports in Internet assessments. 
In clinical practice, a psychotherapy patient may be asked to name a significant other who could provide ratings of him or her; that person would then be contacted (perhaps by mail) and asked to provide the ratings. (Written informed consent would not be required if the data were used exclusively for clinical purposes, but mutual agreement among clinician, patient, and rater would probably be needed in order to obtain valid and clinically useful ratings.)

In the 1980s, cross-observer agreement on personality traits was offered as evidence that traits were veridical, and skeptics sometimes argued that the rater and target may have conspired to give similar ratings. Usually instructions to avoid discussing the questionnaire with the target are sufficient; if this is a serious concern, it may be necessary to gather data in a controlled setting, with the rater physically separated from the target.

Research using couples often gathers self-reports and partner ratings from both partners. This is a rich experimental design, because it allows the researcher to study such issues as assortative mating (whether the two have similar personalities) and assumed similarity (whether people tend to see others as they themselves are). But it also requires particular care in creating a data file that clearly distinguishes the different method and target data, and that accurately matches data from the two partners. Variable labels should reflect both the source and target of the rating.

Examples of Observer Rating Research

Observer ratings can be used in almost any assessment of personality, but there are several research circumstances in which they are particularly valuable. In some of these, their value comes from their use in conjunction with self-reports. We provide three examples of these applications, in personality research, in the interpretation of an individual case, and in latent trait modeling.
For other research problems, self-reports are not feasible, or even possible, and we illustrate the use of observer ratings for assessing cognitively impaired adults, historical figures, and chimpanzees. The use of observer ratings for the assessment of personality in children is already widely practiced (e.g., Mervielde & De Fruyt, 1999).

Personality Research in the ABLSA

Some of the research that led to the prominence of the FFM was conducted in the 1980s on members of the Augmented Baltimore Longitudinal Study of Aging (ABLSA). The BLSA itself was begun in 1958 as a study of aging men; in 1978 women were added to the study, but at a rather modest rate. Participants visited the Gerontology Research Center every one to two years to complete a battery of biomedical and psychosocial tests. To increase the number of women in the study, we augmented the BLSA by inviting the spouses of then-current BLSA members to complete questionnaires at home (McCrae, 1982).

Among the first data gathered from the ABLSA in 1980 were self-reports on the NEO Inventory, a three-factor precursor of the NEO-PI-R, and, a few months later, spouse ratings on a third-person version of the NEO Inventory. As new versions of the instrument were developed that included scales for Agreeableness and Conscientiousness, both Form S and Form R were readministered. Beginning in 1983, ABLSA participants were asked to nominate peer raters, and these individuals in turn were asked to complete Form R to describe the participant. The NEO-PI-R was administered to peer raters in 1990.

These data, in combination with the other psychological data collected in the BLSA and ABLSA, led to several key findings:

Different observers agreed with each other and with self-reports at a level that was both statistically significant and socially important, and the differences in method made it unlikely that this was the result of artifacts.
Personality traits could be consensually validated, and thus the claim, prevalent in the 1970s, that traits were mere cognitive fictions could be dismissed (McCrae, 1982).

When a self-report scale is correlated with a measure of social desirability, it may mean that the scale is contaminated by the desire of the individual to present him- or herself in a flattering light, or it may mean that the social desirability measure is actually assessing genuine aspects of personality. Researchers routinely presumed that the former interpretation was correct, and the dispute could not be resolved if only self-reports were available. But significant correlations between self-reported social desirability scales and observer ratings of personality could not plausibly be attributed to a shared bias, and thus demonstrated that social desirability scales assess more substance than style (McCrae & Costa, 1983).

Longitudinal studies of self-reports had consistently shown that individual differences in personality traits are extremely stable in adults, with uncorrected retest correlations near .80 over six- to ten-year intervals. But perhaps that simply reflected the effects of a crystallized self-concept; people might be blind to changes in their basic personality traits. If personality changed with age whereas self-concept did not, self-reports should become increasingly inaccurate, and self-other agreement should be lower for old than for young targets. Observer rating data showed that was not the case (McCrae & Costa, 1982), and longitudinal stability coefficients in observer rating data were as high as in self-reports (Costa & McCrae, 1988).

In principle, NEO-PI-R facet scale scores contain variance common to the five factors, variance that is specific to the facet, and error. But unless there is substantial specific variance, there is no need to assess facets; all personality traits would simply be combinations of the five factors.
It was possible to separate the specific variance from the common variance by partialling out the factor scores, but against what criteria could one validate the residuals? Residuals from observer ratings of the same facets were the natural choice, and correlations of the residuals from the two methods in fact demonstrated the consensual validation of specific variance in NEO-PI-R facet scales (McCrae & Costa, 1992).

The wish that we could "see oursels as ithers see us" (in Burns' language) is based on the widespread belief that there are noticeably different public and private selves. Hogan and Hogan (1992) referred to the former as reputation and believed that the private self, available to introspection, must show a different structure of personality. But the same FFM is found in self-reports and observer ratings (Ostendorf & Angleitner, 2004), and agreement among different observers is not systematically higher than agreement between self and other (McCrae & Costa, 1989). The distinction between public and private selves is thus in some sense an illusion; there is no perfect consensus on our public self that we could hope to see, and our self-reports are not based on some direct intuition of our inner nature, but on self-observations that substantially overlap with what others observe.

The essence of good science is replication, and multimethod replications are powerful because they reduce the possibility that findings are due to some artifact of a particular method. In ABLSA studies, we showed that self-reports on the NEO-PI-R were related to psychological well-being, interpersonal traits, Jungian types, and ways of coping. All of these findings were replicated when spouse or peer ratings were used as the measure of personality (Costa & McCrae, 1992).
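
The logic of validating facet-specific variance, described earlier in this section, can be sketched with simulated data. The sketch makes several simplifying assumptions that are not in the original studies: only a single factor score is partialled out (the analyses described above partialled all five), the data are generated artificially with a seeded random number generator, and the loadings and error sizes are arbitrary illustrative values.

```python
import random
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def residualize(y, x):
    """Residuals of y after removing its linear regression on x."""
    mx, my = mean(x), mean(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum(
        (a - mx) ** 2 for a in x
    )
    return [b - (my + slope * (a - mx)) for a, b in zip(x, y)]


rng = random.Random(0)  # fixed seed so the simulation is repeatable
n_targets = 300

# Simulated true scores: a broad factor and a facet-specific component.
factor = [rng.gauss(0, 1) for _ in range(n_targets)]
specific = [rng.gauss(0, 1) for _ in range(n_targets)]


def observed(true_scores, error_sd=0.5):
    """Add independent random error to a list of true scores."""
    return [t + rng.gauss(0, error_sd) for t in true_scores]


facet_true = [0.7 * f + 0.7 * s for f, s in zip(factor, specific)]

# Each method (self-report, observer rating) has its own measurement error.
self_factor, self_facet = observed(factor), observed(facet_true)
obs_factor, obs_facet = observed(factor), observed(facet_true)

# Partial the factor out of the facet within each method, then ask
# whether what is left over still agrees across methods.
self_resid = residualize(self_facet, self_factor)
obs_resid = residualize(obs_facet, obs_factor)
cross_method_r = pearson(self_resid, obs_resid)
```

A clearly positive `cross_method_r` in data like these indicates that the residuals carry something more than error, which is the pattern the consensual validation argument relies on.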

Interpreting a Case Study: Madeline G

Costa and Piedmont (2003) reported a study of Madeline G, a flamboyant Native American lawyer who volunteered to be a case study for a variety of personality assessors (Wiggins, 2003). She completed Form S of the NEO-PI-R, and her common-law husband completed Form R to describe her. Consistent with the general impression that she was a volatile and forceful character, Madeline G's responses consisted almost exclusively of strongly disagree (33.8%) or strongly agree (48.8%), and the resulting profile was correspondingly extreme: Four of the five domains and 25 of the 30 facets were in the "Very Low" or "Very High" range. Such a profile is statistically very unusual, and in isolation it would have been difficult to know whether it was meaningful or merely an artifact of extreme responding. A comparison with Form R was therefore more than usually informative in this case. Although not as extreme, her husband's descriptions agreed in direction with her self-reports for three factors and 19 facets, suggesting that her self-reports were exaggerated but not meaningless.

It is possible to assess the degree of agreement between two personality profiles (for example, Madeline G's self-report and her husband's rating of her), although all the currently used metrics have limitations. As Cattell pointed out in 1949, simple Pearson correlations are problematic because they reflect only the similarity of shape of the profile, not similarity of level. Cattell's proposed alternative, rp, takes into account the difference between profile elements, but not the extremeness of the trait. When Madeline G scores T = 16 on Agreeableness whereas her husband places her at T = 4 (where T-scores have a mean of 50 and standard deviation of 10), there is more than a standard deviation difference between the scores, yet both depict her as profoundly antagonistic (recall that she was a lawyer).
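
Both points can be made concrete with a short sketch. The five-element profile below is hypothetical and chosen only for illustration; the two Agreeableness T-scores are the ones quoted above. The sketch does not compute Cattell's rp or any other published agreement index; it only shows why a Pearson correlation ignores level and why a raw difference ignores extremeness.

```python
from statistics import mean


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def t_to_z(t_score):
    """Convert a T-score (mean 50, SD 10) to a z-score."""
    return (t_score - 50) / 10


# Hypothetical profiles: rater B places the target 15 T-score points
# higher than rater A on every one of the five elements.
profile_a = [40, 55, 60, 45, 50]
profile_b = [t + 15 for t in profile_a]

shape_agreement = pearson(profile_a, profile_b)  # identical shape
level_gap_sd = mean(b - a for a, b in zip(profile_a, profile_b)) / 10

# The Agreeableness scores quoted in the text.
z_self = t_to_z(16)
z_husband = t_to_z(4)
gap_sd = z_self - z_husband
```

The two hypothetical profiles correlate perfectly although they sit a standard deviation and a half apart in level, and the two Agreeableness scores differ by 1.2 standard deviations although both lie more than three standard deviations below the mean.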
McCrae (1993) proposed a different metric, the coefficient of profile agreement, rpa, that is sensitive to both the difference between corresponding profile elements and the extremeness of the average score. The computer-generated Interpretive Report (Costa & McCrae, 1992; Costa, McCrae, & PAR Staff, 1994) for the NEO-PI-R calculates this quantity, and shows in the case of Madeline G that agreement is .95, which is very good compared to agreement between most married couples. The high value is found in spite of major disagreements between the two sources on some factors and facets, because rpa takes extremeness of scores into account, and Madeline G has a very extreme profile. In such cases, rpa probably overestimates agreement; this is one of its limitations. But empirical tests (McCrae, 1993) have shown that it is generally superior to Cattell's rp as a measure of overall profile agreement.

The Interpretive Report also uses rpa statistics to pinpoint agreement or disagreement on specific factors and facets. Madeline and her husband disagreed on twelve facets and on two factors, Neuroticism and Conscientiousness. Madeline regarded herself as very low in Neuroticism and high in Conscientiousness, whereas her husband saw her as high in Neuroticism and low in Conscientiousness. Within the six Conscientiousness facets, there was both agreement and disagreement: Both believed that she was high in C4: Achievement Striving and very low in C6: Deliberation, but she saw herself as high in C3: Dutifulness whereas he saw her as very low. Here is a woman with lofty goals who sets out to achieve them without much thought about the ramifications of her actions. Perhaps she focused on the ends and viewed herself as highly principled; perhaps he focused on the means, and saw her as unscrupulous.

How should observer rating data be integrated with self-report data in the interpretation of individual cases?
First, they can be used to evaluate the quality of the self-report. Many validity scales have been devised to assess the trustworthiness of self-reports, but evidence suggests that they are of limited value (Piedmont, McCrae, Riemann, & Angleitner, 2000). An independent source of information is preferable. A comparison with her husband's ratings suggests that overall, Madeline's self-report is valid, but that there are some particular trait assessments (such as C3: Dutifulness) that are questionable.

Second, the two sources can be combined to provide an optimal estimate of personality trait levels. It is probable that Madeline is neither as dutiful as she says nor as unethical as her husband claims; averaging them should give a better estimate of the true level. (In fact, the Interpretive Report provides an average with a slight adjustment to account for the fact that the variance of means is reduced; see McCrae, Stone, Fagan, & Costa, 1998.)

But there is a third possibility, of particular importance in clinical settings, where the psychologist expects to interact with the client on repeated occasions. It is not necessary to stop the assessment after the questionnaires have been scored. Instead, discrepancies between two sources ought to be explored with the client, the observer, or both. On the one hand, that should yield a better assessment of the disputed trait; on the other hand, it may offer insights into the nature of their relationship. It does not bode well for a marriage when one partner thinks the other is unethical, and in this case, Madeline G's relationship ended shortly after the assessments.

The Estimation of Latent Traits

Riemann, Angleitner, and Strelau (1997) provided an elegant example of the integrative use of data from multiple observers and self-reports. They assessed personality using self-reports and ratings from two informants on the NEO-FFI in a sample of mono- and dizygotic twins.
Typically, behavior genetic studies only use self-reports, and partition the variance into genetic, shared environmental, and non-shared environmental effects. Most behavior genetic studies of personality have shown that the shared environment (experiences shared by children living in the same family) accounts for little or no variance; instead, personality is about equally determined by genetic influences and the non-shared environment (Bouchard & Loehlin, 2001). When Riemann et al. analyzed self-reports, they reported heritabilities, a measure of the proportion of variance that arises from genetic differences, of .42 to .56.

In these analyses, however, the non-shared environment term is somewhat mysterious: It includes real effects on personality that are due to unique experiences of different children in the same family (such as the fact that one took piano lessons whereas the other studied the flute), but it also includes measurement error. It is possible to eliminate random measurement error by correcting for test unreliability; this typically increases the estimate of genetic effects modestly. But reliability estimates only deal with random error, not systematic bias. For example, a rater may think that a target is more extraverted than the target actually is, but because the rater maintains this view consistently, the misperception does not introduce unreliability.

Riemann et al. used data from two raters to estimate heritability using latent trait analyses, in which both random error and systematic biases of the two raters were statistically controlled. In this design, the heritabilities were higher (.57 to .81); and when the latent traits (the "true scores") were estimated from a combination of self-reports and the two informant ratings, heritabilities were even higher, .66 to .79. This study suggests that the heritability of personality traits has been systematically and substantially underestimated by mono-method studies.
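
The effect of correcting for random error alone can be illustrated with a deliberately simple calculation. The sketch below uses Falconer's classical approximation, in which heritability is estimated as twice the difference between the monozygotic and dizygotic twin correlations; this is not the model-fitting or latent trait approach used by Riemann et al., and the twin correlations and the reliability value are hypothetical numbers chosen only for illustration.

```python
def falconer_h2(r_mz, r_dz):
    """Falconer's approximation: heritability = 2 * (r_MZ - r_DZ)."""
    return 2 * (r_mz - r_dz)


def disattenuate(r_observed, reliability):
    """Correct a correlation between two administrations of the same
    scale (here, co-twins' scores) for random measurement error."""
    return r_observed / reliability


# Hypothetical twin correlations for one self-report scale.
R_MZ, R_DZ = 0.50, 0.25
RELIABILITY = 0.80  # hypothetical reliability of that scale

h2_raw = falconer_h2(R_MZ, R_DZ)
h2_corrected = falconer_h2(
    disattenuate(R_MZ, RELIABILITY),
    disattenuate(R_DZ, RELIABILITY),
)
```

With these illustrative values the estimate rises from .50 to .625 once random error is removed. The correction does nothing about a rater's consistent misperceptions, which is why a design with several independent sources is needed to address systematic bias.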
Mono-method studies may also have been misleading with respect to factor intercorrelations. Digman (1997) proposed that the Big Five factors were themselves intercorrelated, yielding two factors he called α, Personal Growth, and β, Socialization. Markon, Krueger, and Watson (2005) elaborated on this scheme, using data from a meta-analysis of self-report studies. However, using multitrait, multimethod confirmatory factor analyses of self-reports and peer- and parent ratings, Biesanz and West (2004) found that "none of the Big Five latent factors were significantly correlated" (p. 865), and suggested that within-method evaluative biases were probably responsible for the apparent higher-order structure of FFM factors.

Personality and its Changes in Alzheimer's Disease

Although geriatricians have often observed that there seem to be personality changes in patients with Alzheimer's disease, there was very little research on the topic until the 1990s. One of the major reasons for that was, of course, that the self-report instruments typically used to assess personality were of dubious applicability. Would dementing patients have insight into their own personality, or indeed, would they be able to complete a questionnaire at all? Certainly not in the later stages of the disease.

Siegler and her colleagues realized that observer ratings provided a feasible alternative (Siegler et al., 1991). They asked caregivers to rate 35 patients with memory impairment on an older version of the NEO-PI-R in two conditions: as they were now, and as they had been before onset of the disease (the premorbid ratings). The premorbid profiles were generally in the normal range, but there were dramatic differences for the current description: Patients were seen as high in Neuroticism, low in Extraversion, and very low in Conscientiousness. The increase in Neuroticism was not surprising, because Alzheimer's disease had often been linked to depression.
But no one had anticipated changes in Conscientiousness, although they seem obvious in hindsight: People who have difficulty concentrating and remembering show behavior that is poorly organized and not goal-directed.

That ground-breaking study, subsequently replicated by several teams of investigators, was imperfect in a few respects. First, it was not clear if the premorbid ratings were accurate, because they depended on the memory of the caregiver and might be distorted by contrast with current traits. For example, premorbid Conscientiousness might have been overestimated because it was so much higher than current levels. Strauss, Pasupathi, and Chatterjee (1993) recruited two independent raters (the caregiver and another friend or relative) and asked for premorbid and current ratings of the patient. The two raters generally agreed on both ratings, suggesting that both were accurate reflections of the patient's personality at different times.

In a later study, Strauss and Pasupathi (1994) obtained observer ratings of Alzheimer's patients on two occasions, one year apart. They showed continuing changes in the direction of higher Neuroticism and lower Conscientiousness over the course of the year, suggesting that observer ratings are sensitive to relatively small changes.

Observer ratings have also been used to study other psychiatric disorders in which self-reports from patients were suspect, including Parkinson's disease and traumatic brain injury. In addition, Duberstein, Conwell, and Caine (1994) gathered informant reports about suicide completers as part of a "psychological autopsy," which suggested that suicide completers are more likely to be closed than open to experience.

Portraits of Historical Figures

Was Abe really honest? Was Washington cold?
We cannot ask these men to complete self-report questionnaires, and it is not clear how much we would learn from reading the many biographies that have been written about each of them, because the data would not be standardized in a way that made meaningful comparisons possible. Rubenzer, Faschingbauer, and Ones (2000) solved this problem by inviting biographers of U.S. Presidents to describe their subjects using observer rating forms of the NEO-PI-R, the California Adult Q-Set (Block, 1961), and a set of adjective scales (Goldberg, 1990). (See Song and Simonton, this volume, for another approach to the assessment of historical figures.)

It is one thing to ask caregivers to rate the personality of an Alzheimer's patient as he or she was five years ago; it is another to ask historians to rate the personality of an individual who has been dead for two centuries. How would they respond to items like "[George Washington] really loves the excitement of roller coasters" or "[Abraham Lincoln] believes that the 'new morality' of permissiveness is no morality at all," when these are clearly anachronistic? Fortunately, Rubenzer et al. identified only three such items in the NEO-PI-R, and even those could probably be meaningfully rated: Does anyone really think that Washington would have loved roller coaster rides if he had been given the opportunity to try one?

Rubenzer and colleagues argued that their assessments were valid because they were internally consistent and showed cross-rater agreement (using the rpa measure) comparable to that seen in contemporary peer rating studies. Based on descriptions by 10 experts, George Washington was average in Neuroticism, low in Extraversion, Openness, and Agreeableness, and very high in Conscientiousness, a portrait that seems to aptly characterize his steadfast but formal personality.
Lincoln was rather more interesting, scoring high on Neuroticism, Extraversion, Agreeableness, and Conscientiousness, and very high on Openness. The high Neuroticism scores are consistent with his well-known episodes of depression, and the high Openness is seen in his legendary love of books. Curiously, although he was generally agreeable, he was ranked very low in Straightforwardness, being a far shrewder politician than he pretended to be. McCrae (1996) reported a case study of the French-Swiss philosopher, composer, and novelist, Jean-Jacques Rousseau. Figure 1 shows his profile, based on ratings by a political scientist who had written a book on his political ideology. In this Figure, the five factor scores are given on the left, and the 30 facet scales are grouped by factor toward the right. Because only one rater was available, interrater agreement could not be assessed, but this profile is consistent with the writings of Rousseau's contemporaries, who described both his paranoia and his genius. Like Madeline G, Rousseau shows an extreme profile, with scores literally off the chart for several facets (e.g., N4: Self-Consciousness, O1: Fantasy). Perhaps this reflects the rater's use of extreme response options, but it must be recalled that Rousseau was one of the most extraordinary figures in history, and these ratings may be completely accurate. If the reader still feels the need for a second opinion on Rousseau—good. Personality psychologists, clinicians, and historians should all cultivate a taste for multiple assessments of personality (McCrae, 1994). 
[Figure 1 about here]

Personality Assessment in Chimpanzees

Another chapter (Vazire, Gosling, Dickey, & Shapiro, this volume) has discussed the use of observer ratings to assess personality in other species, but we feel it is worth focusing on some issues in observer ratings of the personality of our closest nonhuman relatives, chimpanzees (Pan troglodytes). King and Figueredo (1997) asked zoo workers to rate individual chimpanzees on a 43-item questionnaire that was based on adjective measures of the FFM. Each adjective was accompanied by a short description to clarify the intended construct. Factor analysis of the 43 items suggested six factors. The first factor, Dominance, was a large chimpanzee-specific factor that was indicative of competitive prowess. The remaining five factors were analogous to the domains of the FFM.

Because the validity of observer ratings of chimpanzee personality cannot be assessed by comparing them to self-reports, King and Figueredo (1997) had multiple raters assess each target and used intraclass correlations to show that individual [ICC(3, 1)] and mean [ICC(3, k)] ratings were consistent across raters (Shrout & Fleiss, 1979). The interrater reliability of individual ratings can be used to compare reliabilities of similar factors across different species even if the number of raters differs. The interrater reliability of mean ratings is typically used to describe the reliability of scores based on multiple raters. Because using a mean of several ratings will reduce measurement error, it should be no surprise that the reliability of mean ratings will be higher.

A recent study (King, Weiss, & Farmer, 2005) on the personality of chimpanzees in zoos and a sanctuary in the Republic of Congo (Brazzaville) challenged Kenny’s (2004) conclusion that length of acquaintance has little effect on the reliability of ratings.
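The comparison reported in this study turns on the difference between the reliability of a single rating, ICC(3, 1), and the reliability of a mean of k ratings, ICC(3, k). The following is a minimal sketch of how the two coefficients can be computed from a complete targets-by-raters table, using the two-way mean squares described by Shrout and Fleiss (1979). The function names (`icc3`, `spearman_brown`) and the small ratings table are illustrative assumptions, not code or data from any of the studies discussed here.

```python
from statistics import mean


def icc3(ratings):
    """Return (ICC(3,1), ICC(3,k)) for a complete targets-by-raters table.

    `ratings` is a list of rows, one row per target, one column per rater.
    Assumes every rater rated every target (no missing cells).
    """
    n = len(ratings)        # number of targets
    k = len(ratings[0])     # number of raters
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Two-way decomposition of the total sum of squares.
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_targets = k * sum((m - grand) ** 2 for m in row_means)
    ss_raters = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_targets - ss_raters

    bms = ss_targets / (n - 1)              # between-targets mean square
    ems = ss_error / ((n - 1) * (k - 1))    # residual mean square

    single = (bms - ems) / (bms + (k - 1) * ems)   # ICC(3,1)
    average = (bms - ems) / bms                    # ICC(3,k)
    return single, average


def spearman_brown(single, k):
    """Reliability of the mean of k ratings, given single-rating reliability."""
    return k * single / (1 + (k - 1) * single)


# Illustrative table only: 6 targets (rows) rated by the same 4 raters (columns).
example = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]

single, average = icc3(example)
print(round(single, 2), round(average, 2))
# The Spearman-Brown step-up of ICC(3,1) reproduces ICC(3,k).
print(round(spearman_brown(single, 4), 2))
```

ICC(3, k) is the Spearman-Brown step-up of ICC(3, 1), which is why adding raters can compensate for low single-rater agreement. As a rough check, applying `spearman_brown` to the median single-rater values and mean numbers of raters reported in the next paragraph (.46 with 3.7 raters; .26 with 16.2 raters) gives approximately .76 and .85, close to the reported medians of .77 and .87. Exact agreement is not expected, because medians taken across factors do not transform exactly.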
Raters at zoos knew their charges for an average of 6.5 years, and there was a mean of 3.7 raters per zoo chimpanzee. Raters at the sanctuary knew their charges for a mean of only 6.9 months, but each chimpanzee in this habitat was rated by an average of 16.2 raters (King, Weiss, & Farmer, 2005). Interrater reliabilities of individual ratings were considerably higher (Mdn = .46) for ratings of chimpanzees in zoos than for ratings at the sanctuary (Mdn = .26), suggesting that length of acquaintance does affect accuracy, and that one should be skeptical of any conclusions drawn from single ratings of chimpanzees based on limited acquaintance. However, the same study showed that the reliabilities of the mean ratings of the chimpanzees in the African sanctuary (Mdn = .87) were comparable to or greater than those of chimpanzees living in zoos (Mdn = .77). In short, the use of more raters per chimpanzee all but eliminated any disparity between the reliabilities of ratings that arose due to differences in the length of acquaintance.

Observer ratings of chimpanzee personality traits can also be validated by a pattern of correlations with external criteria. Supporting evidence has been found in two studies, one relating each of the chimpanzee factors to frequencies of relevant behaviors (Pederson, King, & Landau, 2005), and the other relating Dominance, Extraversion, and Dependability (analogous to Conscientiousness) to ratings of subjective well-being (King & Landau, 2003).

Cautions in the Use of Observer Ratings

We advocate multimethod approaches to personality assessment because no method, including observer ratings, is perfect. Some of the potential problems with observer ratings are familiar from the self-report literature. Acquiescence, extreme responding, and random responding are threats to the validity of observer ratings much as they are to self-reports.
Efforts to identify or correct invalid protocols have so far met with limited success (Piedmont et al., 2000), in part because even carelessly completed inventories typically have some useful information: The validity of most assessments is a matter of degree (Carter et al., 2001). Users of both Form S and Form R of the NEO-PI-R are thus given four rather conservative rules for discarding protocols as invalid: (1) more than 40 missing items; (2) a response of disagree or strongly disagree to the validity check item, "I have tried to answer all these questions honestly and accurately"; (3) a "no" response to the validity check item, "Have you entered your responses in the correct areas?"; or (4) random responding, as evidenced by strings of repetitive responses (e.g., more than six consecutive strongly disagrees). Even in these instances, however, clinicians are advised to retain the responses, discuss the potential problems with the respondent, and interpret the profile cautiously. For research purposes, the optimal solution is to score all protocols (replacing missing items with the neutral response or the group mean) and report analyses with and without the exclusion of nominally invalid protocols.

McCrae et al. (2005) extended the assessment of protocol validity to entire samples in their cross-cultural study of observer-rated personality. Personality questionnaires are familiar to American and European college students, but they are less widely used in Asia and Africa, so there is reason to believe that the quality of personality data might be better in some cultures than in others. McCrae et al. created a Quality Index that took into account random responding, acquiescence, missing data, the fluency of the sample in the language of the questionnaire, the quality of the translation, and miscellaneous problems reported by the test administrator. This index was not used to exclude samples, but to help interpret the data.
For example, the factor structure of NEO-PI-R Form R was less well replicated in African cultures. Was that because African cultures have a personality structure that is different from that of Americans, or was it simply due to error of measurement? The fact that the Quality Index was low in most of the African cultures suggested the latter interpretation, and when noise was reduced by pooling responses from the five African cultures, the FFM structure was clearly replicated.

Judging by the attention it has been given by psychometricians, the major concern with self-reports is social desirability: individual differences in the tendency to exaggerate one's strengths and minimize one's failings. One of the chief merits of observer ratings is that they do not share this bias: Mary Jones may be particularly prone to socially desirable responding, but there is usually no reason to think that her raters will also feel a need to paint a rosy picture of her.

Although the assessment and control of individual differences in socially desirable responding is problematic (Piedmont et al., 2000), this does not mean that there are no evaluative biases in self-reports and observer ratings. Individuals who are applying for a job typically score higher on self-reports of all desirable traits than disinterested volunteers, and their scores must be interpreted in terms of job applicant norms, not volunteer norms. Similar biases might well be found in observer ratings if the observer has an interest in the outcome. For example, spouse ratings of job applicants might also be unrealistically favorable, because the whole family would benefit from the job offer.

A striking instance of negative bias in observer ratings was reported by Langer (2004), who administered Form S and Form R of the NEO-PI-R to 20 couples being evaluated for child custody litigation.
Their mean self-reports for the five factors all fell within the normal range, although they tended toward higher-than-average Extraversion, Agreeableness, and Conscientiousness, and lower-than-average Neuroticism scores. Their mean ratings of their spouses, however, were dramatically different: They described their spouses as high in Neuroticism, low in Openness, and very low (mean T-scores = 26.3 and 25.2) in Agreeableness and Conscientiousness. The moral is that in interpreting observer rating data in groups or individuals, one must take into account normative biases attributable to the situation. (Among chimpanzees, situational biases to consider in rater reports may include whether the individuals live in the wild, sanctuaries, or research labs.) Ideally, this would mean the use of relevant norms (e.g., from a large sample of spouse ratings of custody litigants); otherwise, clinical judgment must be used to discount suspicious data. It is of considerable interest that Langer reported Form S/Form R correlations ranging from .18 to .46 for the five factors, with significant agreement for Extraversion and Openness. This suggests that some validity in individual differences is retained even when the mean levels are dramatically distorted.

Future Directions

Research on Ratings

A number of researchers have been interested in the factors that affect agreement between judges (Funder et al., 1995; John & Robins, 1993). Differences in observability and evaluation appear to influence cross-observer agreement, and Extraversion is usually found to be the most consensually rated factor (Borkenau & Liebler, 1995). In a cross-cultural extension of that work, McCrae et al. (2004) reported similar findings for American and European samples, but not for Chinese data. Perhaps agreement across raters depends on the salience of the trait in the raters' culture.
There is ample evidence that ratings from observers who are well-acquainted with the target provide reliable and valid assessments of the full range of personality traits, but many questions remain about how to optimize observer rating assessments. Is some particular combination of raters (e.g., a spouse and a friend) preferable, and should these sources be differentially weighted? Should special instructions be given, perhaps asking raters to focus on the person in a particular context (at work, at home), or emphasizing that they should report both strengths and weaknesses? We know already that observer rating methods and instruments work well in translation and across a variety of cultures (McCrae et al., 2005); are any special problems posed by the use of informant ratings of personality gathered via the Internet (e.g., "flaming")?

In most cases, research using observer ratings replicates findings based on self-reports, but in a few instances, substantive findings differ across these methods. For example, cross-sectional ratings of Agreeableness consistently increase across the adult lifespan when self-reports are examined, but not when observer ratings are analyzed (McCrae et al., 2005). How do we account for such discrepancies, and which set of findings should we believe?

In the assessment of chimpanzee personality, continued work is needed on assessment instruments. One important question about chimpanzee personality is whether deviations from the FFM structure previously reported are an artifact of the specific measure. The questionnaire King and Figueredo (1997) used was based on the human FFM, but it was not a standard measure, and it was modified for use with chimpanzees. Thus, we do not know whether the Dominance factor and a unipolar Agreeableness factor found in chimpanzees reflect unique characteristics of chimpanzees' personality trait structure, or whether these are attributable to the particular set of adjectives chosen.
Observer rating studies need to be conducted in which human targets are rated on the chimpanzee personality questionnaire and a well-validated measure of the FFM. Factor analysis of the chimpanzee items in this human sample would indicate whether the human or chimpanzee structure would be found using this instrument (cf. Gosling, Kwan, & John, 2003). Correlations with the FFM measure would aid in the interpretation of the chimpanzee items and factors.

Wider Applications

Observer ratings provide a simple, valid, and still-underutilized source of personality data. If researchers want to evaluate the correlation of Scale A with Scale B, they typically administer them to college students. There is no reason why the students should not be asked to provide observer ratings rather than (or in addition to) self-reports. With some thought, researchers may be able to obtain more generalizable findings in this way, by requiring that raters select targets of specified age, gender, or SES.

Informant ratings can also be used effectively on hypothetical targets. The Personality Profiles of Cultures Project (Terracciano et al., 2005) included informant reports on the traits of the "typical" member of each culture, allowing a comparison of national stereotypes (e.g., the typical Canadian is thought to be more compliant than the typical American). Informants can rate ideal romantic partners (Figueredo et al., 2005) or preferred roommates. Strictly speaking, these are not observer ratings, because the hypothetical targets have not been observed. They do, however, provide useful psychological information using the observer rating methodology.

Observer ratings have one more important feature that makes them especially valuable for survey research: They require minimal involvement of the target.
Survey researchers often complain that their interviews do not leave enough time to administer a full personality inventory, and if they assess personality at all, it is likely to be with a handful of questions of limited reliability. But most survey respondents can easily identify a significant other or friend who could be asked to make ratings of the respondent's personality and return them to the interviewer, either in person or by mail (cf. Achenbach, Krukowski, Dumenci, & Ivanova, 2005). Although those data might not meet the stringent sampling requirements of the study, they would provide important auxiliary information that could confirm or extend results of the interview assessment and validate the abbreviated self-report personality assessments. Obviously, the same advantage should make observer ratings attractive to clinicians or researchers whose current test batteries already tax the patience of their clients or research participants.

References

Achenbach, T. M., Krukowski, R. A., Dumenci, L., & Ivanova, M. Y. (2005). Assessment of adult psychopathology: Meta-analyses and implications of cross-informant correlations. Psychological Bulletin, 131, 361-382.

Bagby, R. M., Rector, N. A., Bindseil, K., Dickens, S. E., Levitan, R. D., & Kennedy, S. H. (1998). Self-report ratings and informant ratings of personalities of depressed outpatients. American Journal of Psychiatry, 155, 437-438.

Bem, D. J., & Funder, D. C. (1978). Predicting more of the people more of the time: Assessing the personality of situations. Psychological Review, 85, 485-501.

Benet-Martínez, V., & John, O. P. (1998). Los Cinco Grandes across cultures and ethnic groups: Multitrait multimethod analyses of the Big Five in Spanish and English. Journal of Personality and Social Psychology, 75, 729-750.

Biesanz, J. C., & West, S. G. (2004). Towards understanding assessments of the Big Five: Multitrait-Multimethod analyses of convergent and discriminant validity across measurement occasion and type of observer. Journal of Personality, 72, 845-876.

Block, J. (1961). The Q-sort method in personality assessment and psychiatric research. Springfield, IL: Charles C Thomas.

Block, J. (1971). Lives through time. Berkeley, CA: Bancroft Books.

Borkenau, P., & Liebler, A. (1995). Observable attributes as manifestations and cues of personality and intelligence. Journal of Personality, 63, 1-25.

Bouchard, T. J., & Loehlin, J. C. (2001). Genes, evolution, and personality. Behavior Genetics, 31, 243-273.

Buss, A. H., & Plomin, R. (1975). A temperament theory of personality development. New York: Wiley.

Carter, J. A., Herbst, J. H., Stoller, K. B., King, V. L., Kidorf, M. S., Costa, P. T., Jr., et al. (2001). Short-term stability of NEO-PI-R personality trait scores in opioid-dependent outpatients. Psychology of Addictive Behaviors, 15, 255-260.

Cattell, R. B. (1949). rp and other coefficients of pattern similarity. Psychometrika, 14, 279-298.

Costa, P. T., Jr., & McCrae, R. R. (1988). Personality in adulthood: A six-year longitudinal study of self-reports and spouse ratings on the NEO Personality Inventory. Journal of Personality and Social Psychology, 54, 853-863.

Costa, P. T., Jr., & McCrae, R. R. (1992). Revised NEO Personality Inventory (NEO-PI-R) and NEO Five-Factor Inventory (NEO-FFI) professional manual. Odessa, FL: Psychological Assessment Resources.

Costa, P. T., Jr., McCrae, R. R., & PAR Staff. (1994). NEO Software System [Computer software]. Odessa, FL: Psychological Assessment Resources.

Costa, P. T., Jr., & Piedmont, R. L. (2003). Multivariate assessment: NEO-PI-R profiles of Madeline G. In J. S. Wiggins (Ed.), Paradigms of personality assessment (pp. 262-280). New York: Guilford.

Deary, I. J. (1996). A (latent) Big Five personality model in 1915? A reanalysis of Webb's data. Journal of Personality and Social Psychology, 71, 992-1005.

Digman, J. M. (1997). Higher-order factors of the Big Five. Journal of Personality and Social Psychology, 73, 1246-1256.

Duberstein, P. R., Conwell, Y., & Caine, E. D. (1994). Age differences in the personality characteristics of suicide completers: Preliminary findings from a psychological autopsy study. Psychiatry: Interpersonal & Biological Processes, 57, 213-224.

Figueredo, A. J., Sefcek, J., Vasquez, G., Brumbach, B. H., King, J. E., & Jacobs, W. J. (2005). Evolutionary personality psychology. In D. M. Buss (Ed.), Handbook of evolutionary psychology (pp. 851-877). Hoboken, NJ: Wiley.

Funder, D. C., Kolar, D. C., & Blackman, M. C. (1995). Agreement among judges of personality: Interpersonal relations, similarity, and acquaintanceship. Journal of Personality and Social Psychology, 69, 656-672.

Funder, D. C., & Sneed, C. D. (1993). Behavioral manifestations of personality: An ecological approach to judgmental accuracy. Journal of Personality and Social Psychology, 64, 479-490.

Goldberg, L. R. (1990). An alternative "description of personality": The Big-Five factor structure. Journal of Personality and Social Psychology, 59, 1216-1229.

Gosling, S. D., Kwan, V. S. Y., & John, O. P. (2003). A dog's got personality: A cross-species comparative approach to personality judgments in dogs and humans. Journal of Personality and Social Psychology, 85, 1161-1169.

Hofstee, W. K. B. (1994). Who should own the definition of personality? European Journal of Personality, 8, 149-162.

Hogan, R., & Hogan, J. (1992). Hogan Personality Inventory manual. Tulsa, OK: Hogan Assessment Systems.

John, O. P., Caspi, A., Robins, R. W., Moffitt, T. E., & Stouthamer-Loeber, M. (1994). The "little five": Exploring the Five-Factor Model of personality in adolescent boys. Child Development, 65, 160-178.

John, O. P., & Robins, R. W. (1993). Determinants of interjudge agreement on personality traits: The Big Five domains, observability, evaluativeness, and the unique perspective of the self. Journal of Personality, 61, 521-551.

Kenny, D. A. (2004). PERSON: A general model of interpersonal perception. Personality and Social Psychology Review, 8, 265-280.

King, J. E., & Figueredo, A. J. (1997). The Five-Factor Model plus Dominance in chimpanzee personality. Journal of Research in Personality, 31, 257-271.

King, J. E., & Landau, V. I. (2003). Can chimpanzee (Pan troglodytes) happiness be estimated by human raters? Journal of Research in Personality, 37, 1-15.

King, J. E., Weiss, A., & Farmer, K. H. (2005). A chimpanzee (Pan troglodytes) analogue of cross-national generalization of personality structure: Zoological parks and an African sanctuary. Journal of Personality, 73, 389-410.

Kurtz, J. E., & Sherker, J. L. (2003). Relationship quality, trait similarity, and self-other agreement on personality traits in college roommates. Journal of Personality, 71, 21-48.

Langer, F. (2004). Pairs, reflections, and the EgoI: Exploration of a perceptual hypothesis. Journal of Personality Assessment, 82, 114-126.

Lanning, K. (1994). Dimensionality of observer ratings on the California Adult Q-Set. Journal of Personality and Social Psychology, 67, 151-160.

Markon, K. E., Krueger, R. F., & Watson, D. (2005). Delineating the structure of normal and abnormal personality: An integrative hierarchical approach. Journal of Personality and Social Psychology, 88, 139-157.

McCrae, R. R. (1982). Consensual validation of personality traits: Evidence from self-reports and ratings. Journal of Personality and Social Psychology, 43, 293-303.

McCrae, R. R. (1993). Agreement of personality profiles across observers. Multivariate Behavioral Research, 28, 13-28.

McCrae, R. R. (1994). The counterpoint of personality assessment: Self-reports and observer ratings. Assessment, 1, 159-172.

McCrae, R. R. (1996). Social consequences of experiential openness. Psychological Bulletin, 120, 323-337.

McCrae, R. R., & Costa, P. T., Jr. (1982). Self-concept and the stability of personality: Cross-sectional comparisons of self-reports and ratings. Journal of Personality and Social Psychology, 43, 1282-1292.

McCrae, R. R., & Costa, P. T., Jr. (1983). Social desirability scales: More substance than style. Journal of Consulting and Clinical Psychology, 51, 882-888.

McCrae, R. R., & Costa, P. T., Jr. (1989). Different points of view: Self-reports and ratings in the assessment of personality. In J. P. Forgas & M. J. Innes (Eds.), Recent advances in social psychology: An international perspective (pp. 429-439). Amsterdam: Elsevier Science Publishers.

McCrae, R. R., & Costa, P. T., Jr. (1992). Discriminant validity of NEO-PI-R facets. Educational and Psychological Measurement, 52, 229-237.

McCrae, R. R., Costa, P. T., Jr., & Busch, C. M. (1986). Evaluating comprehensiveness in personality systems: The California Q-Set and the Five-Factor Model. Journal of Personality, 54, 430-446.

McCrae, R. R., Costa, P. T., Jr., Martin, T. A., Oryol, V. E., Rukavishnikov, A. A., Senin, I. G., et al. (2004). Consensual validation of personality traits across cultures. Journal of Research in Personality, 38, 179-201.

McCrae, R. R., Herbst, J. H., & Costa, P. T., Jr. (2001). Effects of acquiescence on personality factor structures. In R. Riemann, F. Ostendorf & F. Spinath (Eds.), Personality and temperament: Genetics, evolution, and structure (pp. 217-231). Berlin: Pabst Science Publishers.

McCrae, R. R., Stone, S. V., Fagan, P. J., & Costa, P. T., Jr. (1998). Identifying causes of disagreement between self-reports and spouse ratings of personality. Journal of Personality, 66, 285-313.

McCrae, R. R., Terracciano, A., & 78 Members of the Personality Profiles of Cultures Project. (2005). Universal features of personality traits from the observer's perspective: Data from 50 cultures. Journal of Personality and Social Psychology, 88, 547-561.

Mervielde, I., & De Fruyt, F. (1999). Construction of the Hierarchical Personality Inventory for Children. In I. Mervielde, I. Deary, F. De Fruyt & F. Ostendorf (Eds.), Personality psychology in Europe: Proceedings of the Eighth European Conference on Personality Psychology (pp. 107-127). Tilburg, The Netherlands: Tilburg University Press.

Ostendorf, F., & Angleitner, A. (2004). NEO-Persönlichkeitsinventar, revidierte Form, NEO-PI-R nach Costa und McCrae [Revised NEO Personality Inventory, NEO-PI-R of Costa and McCrae]. Göttingen, Germany: Hogrefe.

Pederson, A. K., King, J. E., & Landau, V. I. (2005). Chimpanzee (Pan troglodytes) personality predicts behavior. Journal of Research in Personality, 39, 534-549.

Piedmont, R. L., McCrae, R. R., Riemann, R., & Angleitner, A. (2000). On the invalidity of validity scales in volunteer samples: Evidence from self-reports and observer ratings in volunteer samples. Journal of Personality and Social Psychology, 78, 582-593.

Riemann, R., Angleitner, A., & Strelau, J. (1997). Genetic and environmental influences on personality: A study of twins reared together using the self- and peer report NEO-FFI scales. Journal of Personality, 65, 449-475.

Rubenzer, S. J., Faschingbauer, T. R., & Ones, D. S. (2000). Assessing the U.S. presidents using the Revised NEO Personality Inventory. Assessment, 7, 403-420.

Shrout, P. E., & Fleiss, J. L. (1979). Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin, 86, 420-428.

Siegler, I. C., Welsh, K. A., Dawson, D. V., Fillenbaum, G. G., Earl, N. L., Kaplan, E. B., et al. (1991). Ratings of personality change in patients being evaluated for memory disorders. Alzheimer Disease and Associated Disorders, 5, 240-250.

Strauss, M. E., & Pasupathi, M. (1994). Primary caregivers' descriptions of Alzheimer patients' personality traits: Temporal stability and sensitivity to change. Alzheimer Disease and Associated Disorders, 8, 166-176.

Strauss, M. E., Pasupathi, M., & Chatterjee, A. (1993). Concordance between observers in descriptions of personality change in Alzheimer's disease. Psychology and Aging, 8, 475-480.

Terracciano, A., Abdel-Khalek, A. M., Ádám, N., Adamovová, L., Ahn, C.-k., Ahn, H.-n., et al. (2005). National character does not reflect mean personality trait levels in 49 cultures. Science, 310, 96-100.

Tupes, E. C., & Christal, R. E. (1992). Recurrent personality factors based on trait ratings. Journal of Personality, 60, 225-251. (Original work published 1961)

Vazire, S. (in press). Informant reports: A cheap, fast, and easy method for personality assessment. Journal of Research in Personality.

Wiggins, J. S. (2003). Paradigms of personality assessment. New York: Guilford.

Jean-Jacques Rousseau

Figure 1. Revised NEO Personality Inventory profile for J.-J. Rousseau, as rated by A. M. Melzer, adapted from McCrae, 1996. Profile form reproduced by special permission of the publisher, Psychological Assessment Resources, Inc., 16204 North Florida Avenue, Lutz, Florida 33549, from the Revised NEO Personality Inventory by Paul T. Costa, Jr., and Robert R. McCrae. Copyright 1978, 1985, 1989, 1992 by PAR, Inc. Further reproduction is prohibited without permission of PAR, Inc.

Recommended Readings

Benet-Martínez, V., & John, O. P. (1998). Los Cinco Grandes across cultures and ethnic groups: Multitrait multimethod analyses of the Big Five in Spanish and English. Journal of Personality and Social Psychology, 75, 729-750.

Costa, P. T., Jr., & Piedmont, R. L. (2003). Multivariate assessment: NEO-PI-R profiles of Madeline G. In J. S. Wiggins (Ed.), Paradigms of personality assessment (pp. 262-280). New York: Guilford.

Funder, D. C., Kolar, D. C., & Blackman, M. C. (1995). Agreement among judges of personality: Interpersonal relations, similarity, and acquaintanceship. Journal of Personality and Social Psychology, 69, 656-672.

Kenny, D. A. (2004). PERSON: A general model of interpersonal perception. Personality and Social Psychology Review, 8, 265-280.

McCrae, R. R. (1994). The counterpoint of personality assessment: Self-reports and observer ratings. Assessment, 1, 159-172.

McCrae, R. R., Stone, S. V., Fagan, P. J., & Costa, P. T., Jr. (1998). Identifying causes of disagreement between self-reports and spouse ratings of personality. Journal of Personality, 66, 285-313.