Chapter 11 Summary

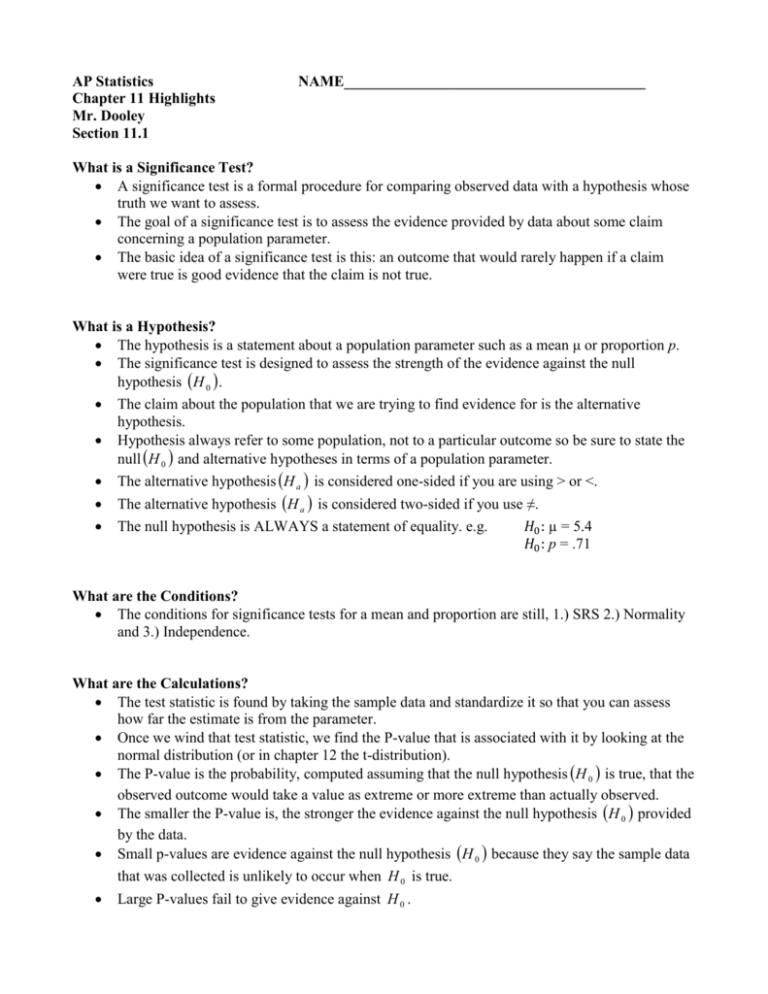

AP Statistics Chapter 11 Highlights

Mr. Dooley

NAME________________________________________

Section 11.1

What is a Significance Test?

A significance test is a formal procedure for comparing observed data with a hypothesis whose truth we want to assess. The goal of a significance test is to assess the evidence provided by data about some claim concerning a population parameter. The basic idea of a significance test is this: an outcome that would rarely happen if a claim were true is good evidence that the claim is not true.

What is a Hypothesis?

A hypothesis is a statement about a population parameter such as a mean μ or a proportion p. The significance test is designed to assess the strength of the evidence against the null hypothesis H₀. The claim about the population that we are trying to find evidence for is the alternative hypothesis Hₐ. Hypotheses always refer to some population, not to a particular outcome, so be sure to state both H₀ and Hₐ in terms of a population parameter.

The alternative hypothesis Hₐ is one-sided if it uses > or <, and two-sided if it uses ≠. The null hypothesis is ALWAYS a statement of equality, e.g. H₀: μ = 5.4 or H₀: p = 0.71.

What are the Conditions?

The conditions for significance tests for a mean and a proportion are still 1.) SRS, 2.) Normality, and 3.) Independence.

What are the Calculations?

The test statistic is found by standardizing the sample estimate so that you can assess how far the estimate is from the hypothesized parameter value. Once we find the test statistic, we find the P-value associated with it by looking at the normal distribution (or, in Chapter 12, the t-distribution). The P-value is the probability, computed assuming that the null hypothesis H₀ is true, that the observed outcome would take a value as extreme as or more extreme than the one actually observed.
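As a rough illustration of the calculation described above, the sketch below turns an already standardized test statistic into a P-value using the standard normal distribution. The function name `p_value` and the labels "less", "greater", and "two-sided" are assumptions made for this example, not notation from the handout.

```python
from statistics import NormalDist


def p_value(z, alternative):
    """Return the P-value for a z statistic, assuming H0 is true.

    alternative: "less" (Ha uses <), "greater" (Ha uses >),
    or "two-sided" (Ha uses a not-equal sign). These labels are hypothetical.
    """
    std_normal = NormalDist()  # standard normal: mean 0, standard deviation 1
    if alternative == "less":
        # Area to the left of z
        return std_normal.cdf(z)
    if alternative == "greater":
        # Area to the right of z
        return 1 - std_normal.cdf(z)
    if alternative == "two-sided":
        # Area in both tails beyond |z|
        return 2 * (1 - std_normal.cdf(abs(z)))
    raise ValueError("alternative must be 'less', 'greater', or 'two-sided'")


# Example: a z statistic of 2.0 with a one-sided alternative (Ha uses >)
print(round(p_value(2.0, "greater"), 4))  # about 0.0228
```

A one-sided alternative counts only one tail of the curve, while a two-sided alternative counts both tails, which doubles the one-tail area.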
The smaller the P-value is, the stronger the evidence against the null hypothesis H₀ provided by the data. Small P-values are evidence against H₀ because they say the sample data that were collected would be unlikely to occur if H₀ were true. Large P-values fail to give evidence against H₀.

What’s the deal with the P-value versus the α level?

The decisive value of P is called the significance level, which we also call the alpha (α) level. If we choose α = 0.05, we are requiring that the data give evidence against H₀ so strong that it would happen no more than 5% of the time when H₀ is true. If we choose α = 0.01, we are insisting on stronger evidence against H₀. If the P-value is as small as or smaller than α, we say that the data are “statistically significant at level α.” “Significant” in the statistical sense does not mean “important.” It means “not likely to happen by chance.”

As with confidence intervals, your conclusion should have a clear connection to your calculations and should be stated in the context of the problem.

If our P-value is less than or equal to our α level, we reject H₀ (the evidence supports the alternative hypothesis).

If our P-value is greater than our α level, we fail to reject H₀ (the evidence is not strong enough to support the alternative hypothesis; this does not show that H₀ is true).

Section 11.2 Highlights

The math for a “z test for a population mean”

A significance test about the population mean in the unrealistic setting when σ is known is called a “z test for a population mean.” The z statistic is found using the formula

$$z = \frac{\bar{x} - \mu_0}{\sigma / \sqrt{n}}$$

where x̄ is the sample mean, μ₀ is the value of μ stated in H₀, and n is the sample size.

The z statistic has a standard normal distribution when H₀ is true, so we can use either the chart in our yellow stat packet or normalcdf to find the P-value. Failing to find evidence against H₀ means only that the data are consistent with H₀, not that we have clear evidence that H₀ is true.

The link between confidence intervals and significance tests

A 95% confidence interval captures the true value of μ in 95% of all samples.
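The z statistic from Section 11.2, and the confidence interval it is linked to, can be sketched in a few lines. This is a minimal illustration that assumes σ is known and the alternative is two-sided. The function names and the sample values (x̄ = 5.7, σ = 1.2, n = 36, tested against μ₀ = 5.4) are made up for this sketch, and the interval formula x̄ ± z*·σ/√n is the usual known-σ interval for a mean.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


def z_statistic(xbar, mu0, sigma, n):
    """Standardize the sample mean: z = (xbar - mu0) / (sigma / sqrt(n))."""
    return (xbar - mu0) / (sigma / sqrt(n))


def two_sided_p_value(z):
    """Area in both tails beyond |z| under the standard normal curve."""
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def z_interval(xbar, sigma, n, confidence):
    """Confidence interval xbar +/- z* sigma / sqrt(n), sigma known."""
    z_star = STD_NORMAL.inv_cdf(1 - (1 - confidence) / 2)
    margin = z_star * sigma / sqrt(n)
    return xbar - margin, xbar + margin


# Hypothetical data, chosen only for illustration.
xbar, mu0, sigma, n, alpha = 5.7, 5.4, 1.2, 36, 0.05

z = z_statistic(xbar, mu0, sigma, n)
p = two_sided_p_value(z)
low, high = z_interval(xbar, sigma, n, 1 - alpha)

reject = p <= alpha                 # decision from the significance test
outside = not (low <= mu0 <= high)  # is mu0 outside the interval?

print(f"z = {z:.2f}, P-value = {p:.4f}, reject H0: {reject}")
print(f"interval: ({low:.3f}, {high:.3f}), mu0 outside: {outside}")
```

With these made-up numbers the test fails to reject H₀ and μ₀ lies inside the 95% interval, so the two approaches give the same verdict.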
If we are 95% confident that the true μ lies in our interval, we are also confident that values of μ that fall outside our interval are incompatible with the data. There is an intimate connection between 95% confidence and significance at the α = 0.05 level, and the same goes for 99% confidence and significance at the α = 0.01 level. A level α two-sided significance test rejects a hypothesis H₀: μ = μ₀ exactly when the value μ₀ falls outside a level (1 − α) confidence interval for μ. The link between two-sided significance tests and confidence intervals is sometimes called duality.

Section 11.3 Highlights

The purpose of a test of significance is to give a clear statement of the degree of evidence provided by the sample against the null hypothesis. The P-value does this, but how small should a P-value be in order to be considered “convincing” evidence against the null hypothesis?

If H₀ represents an assumption that the people you have to convince have believed for years, strong evidence will be needed to persuade them. If rejecting H₀ in favor of Hₐ means making an expensive decision, you will need strong evidence.

There is no sharp border between “statistically significant” and “statistically insignificant,” only increasingly strong evidence as the P-value decreases.

Statistical significance is not the same as practical importance.

Badly designed surveys or experiments often produce invalid results. Formal statistical inference cannot correct basic flaws in the design of an experiment or study.

Section 11.4 Highlights

A Type I error occurs if we reject the null hypothesis when it is in fact true. The probability of committing a Type I error is α.

A Type II error occurs if we fail to reject the null hypothesis when it is actually false. The probability of committing a Type II error is β.

The power of a significance test measures its ability to detect an alternative hypothesis.
The power against a specific alternative is the probability that the test will reject the null hypothesis when that alternative is true. The power against a specific alternative is 1 − β.

Increasing the sample size increases the power. We can also increase the power by using a higher α level, although this also raises the probability of a Type I error.
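The two claims above about power can be checked numerically. The sketch below computes power for a one-sided z test of H₀: μ = μ₀ against Hₐ: μ > μ₀ with σ known. That setting, the function name `power_greater`, and all numeric values are assumptions chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: mean 0, standard deviation 1


def power_greater(mu0, mu_alt, sigma, n, alpha):
    """Power of the one-sided z test (Ha: mu > mu0) against mu = mu_alt.

    The test rejects H0 when z >= z*, where z* cuts off area alpha in the
    upper tail. If the true mean is mu_alt, the z statistic is normal with
    mean (mu_alt - mu0) / (sigma / sqrt(n)) and standard deviation 1.
    """
    z_star = STD_NORMAL.inv_cdf(1 - alpha)
    shift = (mu_alt - mu0) / (sigma / sqrt(n))
    return 1 - STD_NORMAL.cdf(z_star - shift)


# Hypothetical values: H0 says mu = 5.4, the specific alternative is mu = 5.7.
base = power_greater(mu0=5.4, mu_alt=5.7, sigma=1.2, n=36, alpha=0.05)
bigger_n = power_greater(mu0=5.4, mu_alt=5.7, sigma=1.2, n=100, alpha=0.05)
bigger_alpha = power_greater(mu0=5.4, mu_alt=5.7, sigma=1.2, n=36, alpha=0.10)

print(f"power with n = 36,  alpha = 0.05: {base:.3f}")
print(f"power with n = 100, alpha = 0.05: {bigger_n:.3f}")
print(f"power with n = 36,  alpha = 0.10: {bigger_alpha:.3f}")
print(f"beta (Type II error probability) in the first case: {1 - base:.3f}")
```

Both the larger sample and the larger α give higher power than the first case. When the alternative equals μ₀, the function returns α itself, which matches the statement that the probability of a Type I error is α.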