9 - Nearest Neighbor and Naive Bayes Classification

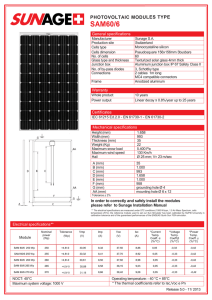



9.1 – Nearest Neighbor Classification


9.2 - Nearest Neighbor Classification in R

The function knn in the class package performs nearest neighbor classification for test cases based on a training dataset. A portion of the help file for the function is shown below.
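As a quick illustration of the call itself (this is not the help-file excerpt), here is a minimal sketch using the built-in iris data rather than any dataset from these notes. The split size of 50 and the object names (test_idx, train_x, and so on) are assumptions chosen only for the example.

```r
# Sketch: k-nearest-neighbor classification with class::knn on the built-in iris data
library(class)   # provides knn()

set.seed(1)                                # reproducible split and tie-breaking
test_idx <- sample(nrow(iris), 50)         # hold out 50 flowers as test cases
train_x  <- iris[-test_idx, 1:4]           # numeric predictors, training cases
test_x   <- iris[test_idx, 1:4]            # numeric predictors, test cases
train_y  <- iris$Species[-test_idx]        # known classes of the training cases
actual   <- iris$Species[test_idx]         # true classes of the test cases

# Each test case receives the majority class among its k = 3 nearest training cases
pred <- knn(train = train_x, test = test_x, cl = train_y, k = 3)

# Rows = predicted, columns = actual
table(predicted = pred, actual = actual)
```

The arguments follow the same order used later in these notes: training predictors, test predictors, then the training class labels in cl.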

You may also need a function to determine the percentage of observations misclassified. Here fit is the predicted class from the model and y is the actual response category.

> misclass = function(fit,y) {
    # cross-tabulate predicted (rows) against actual (columns) classes
    temp <- table(fit,y)
    cat("Table of Misclassification\n")
    cat("(row = predicted, col = actual)\n")
    print(temp)
    cat("\n\n")
    # correct predictions lie on the diagonal (fit and y must share the same levels)
    numcor <- sum(diag(temp))
    numinc <- length(y) - numcor
    mcr <- numinc/length(y)
    cat(paste("Misclassification Rate = ",format(mcr,digits=3)))
    cat("\n")
  }
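The diagonal-based calculation above assumes the predicted and actual classes share the same levels in the same order, so that the table is square and correct predictions sit on the diagonal. A small sketch on made-up labels (hypothetical values, for illustration only) shows that the table-based rate agrees with the direct proportion of disagreements:

```r
# Toy predicted and actual classes (hypothetical values, not from the notes)
actual    <- factor(c("a", "a", "b", "b", "c", "c"), levels = c("a", "b", "c"))
predicted <- factor(c("a", "b", "b", "b", "c", "a"), levels = c("a", "b", "c"))

# Confusion table: rows = predicted, columns = actual
conf <- table(predicted, actual)

# Rate from the table: 1 - (correct on the diagonal) / (total cases)
rate_from_table <- 1 - sum(diag(conf)) / sum(conf)

# Direct rate: proportion of cases where prediction and truth disagree
rate_direct <- mean(predicted != actual)

c(table = rate_from_table, direct = rate_direct)
```

Two of the six toy cases disagree, so both calculations give 1/3.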


Example 9.1 - Handwritten Digit Recognition on USPS Zip Codes

> library(class)
> library(ElemStatLearn)
> digits.knn = knn(zip.train[,-1],zip.test[,-1],cl=zip.train[,1],k=3)

Note that the first column in zip.train and zip.test is the correct digit. The other 256 columns are the darkness readings for a 16 x 16 grid of pixels. Samples are shown below.

> misclass(digits.knn,zip.test[,1])
Table of Misclassification
(row = predicted, col = actual)
   y
fit   0   1   2   3   4   5   6   7   8   9
  0 355   0   7   2   0   3   3   0   4   2
  1   0 258   0   0   2   0   0   1   0   0
  2   2   0 182   2   0   2   1   1   1   0
  3   0   0   1 154   0   4   0   1   4   0
  4   0   3   1   0 182   0   2   4   0   3
  5   1   0   0   6   2 145   0   0   2   0
  6   0   2   1   0   2   1 164   0   0   0
  7   0   1   2   1   2   0   0 138   1   4
  8   0   0   4   0   1   1   0   1 151   0
  9   1   0   0   1   9   4   0   1   3 168

Misclassification Rate =  0.0548

The package klaR also contains the function sknn, which performs knn and weighted knn classification and accepts the standard formula interface. However, it would not work with the digit recognition data; I am not sure why.


Example 9.2: Classifying Species of Water Bear

> library(klaR)
> wb.sknn = sknn(Species~.,data=WaterBears)
> misclass(predict(wb.sknn)$class,WaterBears$Species)
Table of Misclassification
(row = predicted, col = actual)
            y
fit          bohleberi roanensis smokiensis unakaensis
  bohleberi         10         0          0          0
  roanensis          0        10          1          1
  smokiensis         0         0          9          1
  unakaensis         0         0          0          9

Misclassification Rate =  0.0732

A weighted nearest neighbor approach can yield superior results. It gives more weight to observations that are close to the target point, with the weight determined from the distance using:

$w_i = \exp(-\gamma \lVert x_i - \bar{x} \rVert)$,

where $x_i$ is the $i$-th neighbor and $\bar{x}$ denotes the target point.
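To make the weighting concrete, here is a minimal base R sketch of distance-weighted voting for a single target point. It is not the klaR implementation; the function name weighted_knn_one, the use of Euclidean distance, and the toy data are assumptions made for illustration. With gamma = 0 every neighbor gets weight 1, which reduces to an ordinary majority vote.

```r
# Hypothetical helper: classify one target point x0 by distance-weighted voting
weighted_knn_one <- function(train_x, train_y, x0, k = 3, gamma = 4) {
  train_x <- as.matrix(train_x)
  train_y <- as.factor(train_y)
  x0 <- as.numeric(x0)
  # Euclidean distance from every training point to the target point
  d  <- sqrt(rowSums(sweep(train_x, 2, x0)^2))
  nn <- order(d)[seq_len(k)]             # indices of the k nearest neighbors
  w  <- exp(-gamma * d[nn])              # w_i = exp(-gamma * ||x_i - x0||)
  votes <- tapply(w, train_y[nn], sum)   # total weight per class
  votes[is.na(votes)] <- 0               # classes with no neighbor get zero weight
  names(votes)[which.max(votes)]
}

# Toy data: one "A" point very close to the target, two "B" points farther away
toy_x  <- rbind(c(0, 0), c(1, 0), c(0, 1))
toy_y  <- c("A", "B", "B")
target <- c(0.1, 0.1)

unweighted <- weighted_knn_one(toy_x, toy_y, target, k = 3, gamma = 0)
weighted   <- weighted_knn_one(toy_x, toy_y, target, k = 3, gamma = 4)
c(unweighted = unweighted, weighted = weighted)
```

In this toy case the unweighted vote goes to "B" (two neighbors against one), while the weighted vote goes to "A" because the single close neighbor carries far more weight than the two distant ones.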

> wb.sknn = sknn(Species~.,data=WaterBears,gamma=4)
> misclass(predict(wb.sknn)$class,WaterBears$Species)
Table of Misclassification
(row = predicted, col = actual)
            y
fit          bohleberi roanensis smokiensis unakaensis
  bohleberi         10         0          0          0
  roanensis          0        10          0          0
  smokiensis         0         0         10          0
  unakaensis         0         0          0         11

The weighted-knn approach gives no misclassifications on the training data. Cross-validation of classification methods is still critical, especially when considering methods that have the potential to overfit the training data.


Cross-validation function for knn()

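knn.cv = function(train,y,B=25,p=.333,k=3) {
  # note: this definition masks the leave-one-out function knn.cv in the class package
  y = as.factor(y)
  data = data.frame(y,train)
  n = length(y)
  cv <- rep(0,B)                            # misclassification rate for each split
  leaveout = floor(n*p)                     # number of cases held out per split
  for (i in 1:B) {
    sam <- sample(1:n,leaveout,replace=F)   # indices of the held-out cases
    pred <- knn(train[-sam,],train[sam,],y[-sam],k=k)
    tab <- table(y[sam],pred)
    mc <- leaveout - sum(diag(tab))         # number of held-out cases misclassified
    cv[i] <- mc/leaveout
  }
  cv
}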
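The same repeated random-split idea can be used to compare several values of k. The sketch below is a compact variant written for illustration, assuming the built-in iris data in place of the notes' datasets; the helper name mc_cv_error, the candidate values of k, and the choice to standardize the predictors are assumptions of the example, not part of the function above.

```r
library(class)   # provides knn()

# Hypothetical helper: mean held-out error over B random splits for one value of k
mc_cv_error <- function(X, y, k, B = 50, p = 0.25) {
  n <- length(y)
  leaveout <- floor(n * p)
  errs <- replicate(B, {
    sam  <- sample(n, leaveout)                        # held-out cases
    pred <- knn(X[-sam, ], X[sam, ], y[-sam], k = k)   # train on the rest
    mean(pred != y[sam])                               # held-out error rate
  })
  mean(errs)
}

set.seed(1)
X  <- scale(iris[, 1:4])   # standardize so no single variable dominates the distance
y  <- iris$Species
ks <- c(1, 3, 5, 7, 9)

# One cross-validated error estimate per candidate k
cv_err <- sapply(ks, function(k) mc_cv_error(X, y, k))
data.frame(k = ks, cv_error = round(cv_err, 3))
```

Because the splits are random, the estimates change from run to run unless a seed is set, and small differences between neighboring values of k should not be over-interpreted.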

Cross-validation function for sknn()

sknn.cv = function(train,y,B=25,p=.333,k=3,gamma=0) {
  y = as.factor(y)
  data = data.frame(y,train)
  n = length(y)
  cv <- rep(0,B)                            # misclassification rate for each split
  leaveout = floor(n*p)                     # number of cases held out per split
  for (i in 1:B) {
    sam <- sample(1:n,leaveout,replace=F)   # indices of the held-out cases
    temp <- data[-sam,]                     # training portion
    fit <- sknn(y~.,data=temp,k=k,gamma=gamma)
    pred = predict(fit,newdata=train[sam,])$class
    tab <- table(y[sam],pred)
    mc <- leaveout - sum(diag(tab))         # number of held-out cases misclassified
    cv[i] <- mc/leaveout
  }
  cv
}

> names(WaterBears)
[1] "Species" "SSI"     "BTWa"    "BTWs"    "BTWp"    "BTWL"    "BTPA"

> X = WaterBears[,-1]
> y = WaterBears[,1]
> results = knn.cv(X,y,B=1000,p=.25)
> summary(results)
   Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
 0.0000  0.1000  0.2000  0.1639  0.2000  0.6000

> results = sknn.cv(X,y,B=1000,p=.25)
> summary(results)
   Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
 0.0000  0.1000  0.2000  0.1673  0.2000  0.6000

> results = sknn.cv(X,y,B=1000,p=.25,gamma=4)   # weighted knn
> summary(results)
   Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
 0.0000  0.1000  0.1000  0.1392  0.2000  0.6000


> results = sknn.cv(X,y,B=1000,p=.25,gamma=2)
> summary(results)
   Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
 0.0000  0.1000  0.1000  0.1395  0.2000  0.5000

> results = sknn.cv(X,y,B=1000,p=.25,gamma=8)
> summary(results)
   Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
 0.0000  0.1000  0.1000  0.1446  0.2000  0.5000


9.3 – Naïve Bayes Classification


9.4 - Naïve Bayes Classifiers in R

There are two packages that contain naïve Bayes classifier functions: klaR (NaiveBayes) and e1071 (naiveBayes). Both functions seem to work similarly, but I did run into some issues obtaining the predicted class when using the one in klaR. However, the klaR package has several functions for plotting the results from classification methods when the Xi's are numeric.
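For numeric predictors, a naïve Bayes classifier can be sketched in a few lines of base R. The version below assumes a normal density for each predictor within each class and treats the predictors as independent given the class. It is an illustrative sketch on the built-in iris data, not the code used by either package, and the helper names gnb_fit and gnb_predict are hypothetical.

```r
# Hypothetical helper: estimate class priors and per-class means and SDs
gnb_fit <- function(X, y) {
  X <- as.matrix(X)
  y <- as.factor(y)
  list(
    levels = levels(y),
    prior  = as.numeric(table(y)) / length(y),                  # class proportions
    mu     = apply(X, 2, function(col) tapply(col, y, mean)),   # classes x variables
    sd     = apply(X, 2, function(col) tapply(col, y, sd))      # classes x variables
  )
}

# Hypothetical helper: pick the class with the largest log posterior
gnb_predict <- function(fit, newX) {
  newX <- as.matrix(newX)
  logpost <- sapply(seq_along(fit$levels), function(j) {
    ll <- log(fit$prior[j])                      # start from the log prior
    for (v in seq_len(ncol(newX))) {             # add one log density per predictor
      ll <- ll + dnorm(newX[, v], fit$mu[j, v], fit$sd[j, v], log = TRUE)
    }
    ll
  })
  logpost <- matrix(logpost, nrow = nrow(newX))  # keep matrix shape for a single row
  factor(fit$levels[max.col(logpost, ties.method = "first")], levels = fit$levels)
}

fit  <- gnb_fit(iris[, 1:4], iris$Species)
pred <- gnb_predict(fit, iris[, 1:4])
table(predicted = pred, actual = iris$Species)
```

Working on the log scale avoids numerical underflow when many small densities are multiplied together.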

NaiveBayes from klaR

naiveBayes from e1071


Example 9.2: Water Bears (cont’d)

> wb.nb = NaiveBayes(Species~.,data=WaterBears)
> misclass(predict(wb.nb)$class,WaterBears$Species)   # note the $class
Table of Misclassification
(row = predicted, col = actual)
            y
fit          bohleberi roanensis smokiensis unakaensis
  bohleberi         10         0          0          0
  roanensis          0         8          0          1
  smokiensis         0         1         10          0
  unakaensis         0         1          0         10

Misclassification Rate =  0.0732

> library(e1071)
> wb.nb2 = naiveBayes(Species~.,data=WaterBears)
> misclass(predict(wb.nb2,newdata=WaterBears[,-1]),WaterBears$Species)
Table of Misclassification
(row = predicted, col = actual)
            y
fit          bohleberi roanensis smokiensis unakaensis
  bohleberi         10         0          0          0
  roanensis          0         8          0          1
  smokiensis         0         1         10          0
  unakaensis         0         1          0         10

Misclassification Rate =  0.0732

> partimat(Species~.,data=WaterBears,method="naiveBayes")


Cross-validation Functions for Naïve Bayes Classifiers

The function nB.cv, which uses naiveBayes from e1071, tends to behave better than NB.cv, which uses NaiveBayes from klaR, because naiveBayes is more stable.

nB.cv = function(X,y,B=25,p=.333,laplace=0) {
  y = as.factor(y)
  data = data.frame(y,X)
  n = length(y)
  cv <- rep(0,B)                            # misclassification rate for each split
  leaveout = floor(n*p)                     # number of cases held out per split
  for (i in 1:B) {
    sam <- sample(1:n,leaveout,replace=F)   # indices of the held-out cases
    temp <- data[-sam,]                     # training portion
    fit <- naiveBayes(y~.,data=temp,laplace=laplace)
    pred = predict(fit,newdata=X[sam,])
    tab <- table(y[sam],pred)
    mc <- leaveout - sum(diag(tab))         # number of held-out cases misclassified
    cv[i] <- mc/leaveout
  }
  cv
}

> results = nB.cv(X,y,B=500)
> summary(results)
   Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
0.00000 0.07692 0.15380 0.14380 0.23080 0.61540

The function NB.cv, which uses NaiveBayes from klaR, tends to be buggy because NaiveBayes fits are unstable.

NB.cv = function(X,y,B=25,p=.333,fL=0,usekernel=F) {
  y = as.factor(y)
  data = data.frame(y,X)
  n = length(y)
  cv <- rep(0,B)                            # misclassification rate for each split
  leaveout = floor(n*p)                     # number of cases held out per split
  for (i in 1:B) {
    sam <- sample(1:n,leaveout,replace=F)   # indices of the held-out cases
    temp <- data[-sam,]                     # training portion
    fit <- NaiveBayes(y~.,data=temp,fL=fL,usekernel=usekernel)
    pred = predict(fit,newdata=X[sam,])$class   # note the $class
    tab <- table(y[sam],pred)
    mc <- leaveout - sum(diag(tab))         # number of held-out cases misclassified
    cv[i] <- mc/leaveout
  }
  cv
}

> results = NB.cv(X,y,B=500,fL=4)
There were 50 or more warnings (use warnings() to see the first 50)
> summary(results)
   Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
0.00000 0.07692 0.15380 0.14400 0.15380 0.53850
