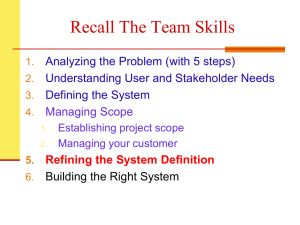

Impact analysis


WHAT IS IT?

Impact

"The totality of positive and negative, primary and secondary effects produced by a development intervention, directly or indirectly, intended or unintended. Note: also interpreted as the longer term or ultimate result attributable to a development intervention (RBM term); in this usage, it is contrasted with output and outcome which reflect more immediate results from the intervention." (OECD-DAC Working Party on Aid Evaluation, April 2001)

This definition is consistent with those found in the evaluation manuals of most UN agencies (including UNICEF), as well as those of major international NGOs.

UNICEF definition: The longer term results of a programme (technical, economic, socio-cultural, institutional, environmental or other), whether intended or unintended. The intended impact should correspond to the programme goal.

Impact analysis

Impact assessment approaches that tried to predict impact (environmental impact assessment, social impact assessment, cost-benefit analysis, social cost-benefit analysis) date back to the 1950s and 1960s. Impact analysis for evaluation was initially confined to ex-post evaluation. But just as approaches to programming have evolved, shifting towards increased participation and from linear project approaches to broader programme approaches, concepts and approaches to impact evaluation must necessarily evolve too (Roche, 1999: 19-20).

Across the different approaches, impact analysis:
- Tries to measure or value change.
- Focuses on the consequences of an intervention rather than its immediate or intermediate results (see Figure 1).
- Is concerned with both intended and unintended, positive and negative results. Consider, for example, the interest in environmental impact and gender impact, and the notion of "do no harm" in humanitarian response (Oakley et al., 1998: 36; Anderson, 1999).
- Focuses on sustainable and/or significant results. Consider that in some humanitarian responses, for example in volatile contexts of civil war, the results of programme interventions might not be lasting, but might include very significant life-saving changes (Roche, 1999: 21).

One current definition of impact assessment/analysis in the international development context is consistent with principles of participation and balances accountability and learning purposes:

Impact assessment refers to an evaluation of how, and to what extent, development interventions cause sustainable changes in living conditions and behaviour of beneficiaries and the differential effects of these changes on women and men. Impact assessment also refers to an evaluation of how, and the extent to which, development interventions influence the socio-economic and political situation in a society. In addition it refers to understanding, analysing and explaining processes and problems involved in bringing about changes. It involves understanding the perspectives and expectations of different stakeholders and it takes into account the socio-economic and political context in which the development interventions take place (Hopkins, 1995).

Figure 1: Impact analysis in relation to logical programme structure. [Diagram not reproduced. It relates the programme plan (inputs, activities/processes, specific objectives, goals/wider objectives), drawn up from the situation assessment at the time of planning, to the results and the changed situation (outputs, specific outcomes, subsequent outcomes, impact). Efficiency, effectiveness and impact are marked at successive levels.] Source: Adapted from Ruxton, 1995 in Oakley et al., 1998.

WHY IS IMPACT SO HARD TO ASSESS/EVALUATE?

The nature of change

To carry out impact analysis, one must understand how change occurs. Many programme/project models and frameworks assume a linear chain of change (inputs → outputs → outcomes/impact). There is, however, growing recognition of, and interest in, more complex processes of change.
Outputs may influence and change inputs, and the same inputs can produce different outcomes/impact over time or in different contexts:

inputs → outputs → outcomes/impact A
inputs → outputs → outcomes/impact B (different time/place)

Change may also be very sudden. Consider the effects of an economic crisis, an outbreak of war, an earthquake or, even at the local level, a change in local leadership (Roche, 1999: 24).

Isolating change: attribution

Impact analysis is about measuring or valuing change that can be attributed to an intervention. It is therefore not enough to say that the programme objectives or goals have been met. The analysis must establish to what degree their achievement can be attributed to that programme. Observed change may reflect:
- the results of the project;
- the effects of other factors/processes:
  - other concurrent programmes of international assistance;
  - changes due to the external context, including conflict/disaster;
  - developments that would have come about anyway.

Attribution is "the extent to which observed development effects can be attributed to a specific intervention or to the performance of one or more partners taking account of other interventions, (anticipated or unanticipated) confounding factors, or external shocks" (OECD-DAC, April 2000).

For UNICEF, the challenge is to draw conclusions on the cause-and-effect relationship between programmes/projects and the evolving situation of children and women. Even in a simple linear model of change, it is widely recognised that the influence of external factors increases as we move up the results chain, from outputs to outcomes and impact. Management control decreases at the same time.

Figure 2: Attribution of results. [Diagram: the results chain inputs → activities → outputs → objective/outcome → goal/impact. Management control decreases and the level of risk/influence of external factors increases at each successive level.] Source: Adapted from DAC Working Party on Aid Evaluation (n.d.) "Results-based Management in the Development Cooperation Agencies: A review of experience", Background Report to February 2000 meeting, subsequently revised.

It may be difficult to attribute intermediate and long-term results (outcomes and impact) to any single intervention or actor. Evaluations and reporting on results should therefore focus on plausible association of effects. Difficulties in attributing results to any one actor increase as programmes succeed in building national capacity and sector-wide partnerships. In such cases, it may be sensible to undertake joint evaluations, which may plausibly attribute wider development results to the joint efforts of all participating actors. Multi-agency evaluations of the effectiveness of SWAPs, CAPs, or of programmes under the UNDAF framework are possible examples.

In crisis and unstable contexts, the number of partners is often greater. Pressures are even stronger than usual, both for inter-agency coordination/collaboration and for the visibility of individual organisations in the competition for funding. The impact of contextual factors far outweighs even the sum of all humanitarian efforts, and can at the same time be extremely volatile. There is an argument for more frequent system-wide evaluations to capture the impact of the humanitarian sector as a whole (OECD-DAC, 1999).

It follows from all of the above that impact analysis requires an effort to understand the processes of change in the wider context (Roche, 1999: 25).

What change is significant? Who decides?

Obviously, no impact analysis can measure or value the totality of change. This raises important questions: what is measured, who chooses, and how? Or, as Robert Chambers put it: “Whose reality counts?”

Differences may emerge over what happened and how it happened. They may also emerge over what counts as a significant change in people’s well-being, i.e. impact. The distinction between outcomes and impact can often be blurred.
For example, a programme design may position a community’s awareness of human rights, and its confidence to appeal to existing support systems, as an outcome. The community itself may consider this a significant change in its quality of life (Roche, 1999: 23).

It follows that impact analysis must be based on methodologies that draw out, rather than smooth over, different perspectives. For many organisations, including UNICEF, evaluation must give special attention to the perspectives of primary stakeholders. In crisis and unstable contexts, particularly in complex emergencies, perspectives are often polarised. This makes the management of impact analysis a very sensitive political process.

Similarly, benchmarks and the interpretation of the significance of results are very much context-specific. In an emergency such as a severe natural disaster, keeping nutrition status measures at the same level may be judged an extremely positive impact. In other contexts, the same result would imply complete failure.

Insufficient baseline and monitoring data

It is generally agreed that monitoring performance at different levels (inputs, process, outputs, outcomes, impact) becomes more complicated and more expensive the further one moves along the results chain (OECD-DAC, n.d.). Yet impact analysis requires exploring the whole results chain in order to draw reliable conclusions on attribution (Roche, 1999: 26).

In practice, there is often insufficient data for impact analysis. This is all the more so in crisis and unstable contexts, for three reasons:
- baseline data is often made obsolete;
- regular information systems collapse;
- the pressures of programme response often lead to neglect of forward monitoring and evaluation planning and practice.

Precisely because data for impact analysis is so often insufficient, some organisations are pushing for “impact monitoring”, i.e. introducing efforts to assess the unfolding impact into M&E plans and systems (Oakley et al., 1998: 55-58; Roche, 1999).

Scope

Balancing the scope of impact analysis is a challenge. As mentioned above, one must explore the whole results chain in order to draw reliable conclusions on attribution. At the same time, impact analysis is different from a simple aggregation of achievements. It is important to decide carefully at which level impact will be assessed. Combining analysis of macro- and micro-level impacts is difficult (Oakley et al., 1998: 35), and primary stakeholders’ perspectives will most likely be limited to micro-level impacts. Impact analysis requires consideration not only of planned positive change, but also of unplanned and negative consequences. This requires a broad scope of investigation regardless of the level of analysis.

Time

Impact analysis takes effort and time, not least in terms of opportunity costs to primary stakeholders. This must be balanced against demands for accountability (Roche, 1999: 35).

APPROACHES TO ATTRIBUTION

Different approaches correspond to different models of evaluation, as well as to different users’ preferences and needs.

“Scientific approach”: quantitative comparison

The scientific approach is favoured by those wishing to generate quantitative measures of impact. Such measures are easily analysed and packaged, and suit, for example, reporting to donors or feeding into a cost-effectiveness analysis of a project. This approach is generally based on the following:
- Control groups: This typically involves comparing the population targeted by the intervention with a suitably comparable group that was not targeted, identified from the outset of the programme. In social development interventions, and even more so in humanitarian response, the deliberate exclusion of control groups from programme benefits is not morally acceptable (Roche, 1999: 78; Hallam).
  Alternatively, comparisons can be made with other groups that have been excluded from the programme unintentionally, for lack of access or agency capacity. However, such comparisons must be made with care. They require a deep understanding of the context and of other processes of change. In emergencies, they also require an understanding of the survival strategies of the two groups (Roche, 1999: 81; Hallam).
- “Before/after” comparison: This compares the situation at the time of the evaluation with the situation before the intervention. A particular challenge exists in crisis and unstable contexts, where “before” measures, if they exist, are often entirely invalidated by drastic changes in the situation.

The deductive approach

Acknowledging the above limitations, this approach seeks to provide a narrative interpretation, with a high degree of plausibility, of the links between an intervention and any impacts. Its validity has to be judged on:
- the logic and consistency of the arguments;
- the strength and quality of the evidence provided;
- the degree of cross-checking of findings (triangulation);
- the validity of the methodology;
- the reputation of those involved.

It relies heavily on interviews with key informants, visual observation and the induction of lessons from other similar or comparable cases.

ISSUES FOR MANAGERS OF EVALUATION

In an evaluation that includes or focuses on impact analysis, some aspects of evaluation design deserve particular attention from evaluation managers, both in shaping the process and in reviewing the final product:
- Ensure that the stakeholders involved share a common understanding of impact and common expectations vis-à-vis "impact analysis" (Roche, 1999).
- Be aware of, and make explicit, different stakeholders' understanding of how change happens. Correspondingly, clarify what kinds of change they think should be explored, measured or appraised (Roche, 1999).
- Consider how the desired consequences (impact) of the programme/project will be defined. How will different stakeholders' perspectives on this be drawn out or limited (Oakley et al., 1998: 55)?
- Consider how the context of the impact analysis will be defined. Which factors will be taken into account and how will they be weighed? What frameworks or references will be used for understanding the wider context?
- What level of impact will be examined? Impact on whom? Will impact be measured at the level of the individual, the household, communities, organisations or wider institutions (Roche, 1999)?
- From which examples will impact be generalised (Oakley et al., 1998: 56)? The selection needs to be representative of population coverage and of the types of activities making up the programme. It must also cover the different levels and stages of the results chain.

IMPACT MONITORING

Impact monitoring is receiving increasing attention because impact evaluation is often hampered by a lack of quality monitoring data (Oakley et al., 1998: 55-58; Roche, 1999). The focus on impact is brought forward into monitoring systems. In crisis and unstable contexts, this focus stems from a concern that negative and unintended effects of the programme can emerge early on. Waiting even 12 months for an evaluation to detect such problems could cost lives.

Impact monitoring entails broadening the scope of regular monitoring. It involves some new data collection, but also interpreting much of the traditional monitoring data in relation to potential impact. It focuses on the following broad questions:
- What changes in the broader context challenge assumptions, and could these changes threaten or support the intended impacts?
- Do assumptions internal to the programme still hold up? Are gaps in the programme logic emerging?
- Is coordination between different partners, and between the parts of the programme they manage, working as expected?
- Are local leaders and primary stakeholders engaging as expected?
- Are original estimates of the pace of implementation holding up, and does any delay threaten intended impacts?
- How do different stakeholders, especially primary stakeholders, perceive the value of programme outputs? Do they see a link to their perceived needs?
- What negative changes do stakeholders, especially primary stakeholders, perceive as happening as a result of the programme?

Much of impact monitoring will rely on narrative and traditional qualitative data analysis rather than on quantitative measures. It will likely combine:
- good analysis of national trends, based on existing information sources and monitoring systems;
- focused sub-national and community-level data collection using key informant interviews, focus groups and community meetings, including RAP techniques such as timelines and mapping.

Impact monitoring is an area open to further development and innovation.

REFERENCES

Anderson, M. (1999) Do No Harm: How Aid Can Support Peace – or War. London: Lynne Rienner Publishers.

DAC Working Party on Aid Evaluation (n.d.) "Results-based Management in the Development Cooperation Agencies: A review of experience". Background Report to February 2000 meeting, subsequently revised.

DAC Working Party on Aid Evaluation (April 2001) Glossary of Evaluation and Results Based Management Terms.

Hopkins, R. (1995) Impact Assessment: Overview and Methods of Application. Oxford: Oxfam/Novib.

Oakley, P., B. Pratt and A. Clayton (1998) Outcomes and Impact: Evaluating Change in Social Development. Oxford: INTRAC.

OECD-DAC (1999) "Guidance for Evaluating Humanitarian Assistance in Complex Emergencies". Evaluation and Aid Effectiveness Series, no. 1.

Roche, C. (1999) Impact Assessment for Development Agencies: Learning to Value Change. Oxford: Oxfam/Novib.

UNICEF (2004) Programme Policy and Procedures Manual.