Homework 9 Solutions

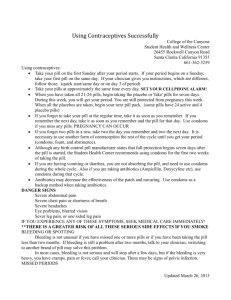

1. Ross 7.5 p. 408

The point $(X, Y)$ at which the accident occurs is stated to be uniformly distributed in a square with sides 3 miles long; hence, the joint density of $(X, Y)$ is

$$f(x, y) = \frac{1}{9}, \qquad -\frac{3}{2} < x, y < \frac{3}{2}.$$

This can be decomposed into $f(x, y) = f(x) f(y)$, where both $f(x)$ and $f(y)$ are $1/3$ on the interval $[-3/2, 3/2]$; that is, $X$ and $Y$ are independent and uniformly distributed on the interval $[-3/2, 3/2]$. Hence, the expected travel distance of the ambulance is

$$E(|X| + |Y|) = E(|X|) + E(|Y|) = \int_{-3/2}^{3/2} \frac{|x|}{3}\,dx + \int_{-3/2}^{3/2} \frac{|y|}{3}\,dy = 2\int_{-3/2}^{3/2} \frac{|x|}{3}\,dx = \frac{4}{3}\int_{0}^{3/2} x\,dx = \frac{4}{3}\cdot\frac{9}{8} = \frac{3}{2}.$$

2. Ross 7.8 p. 409

Let $X_i$ equal 1 if the $i$-th arrival sits at a previously unoccupied table and 0 otherwise (that is, $X_i = 0$ if the $i$-th arrival has a friend among the $i - 1$ people already there). Then, the expected number of occupied tables is just

$$E(\text{number of occupied tables}) = E\left(\sum_{i=1}^{N} X_i\right) = \sum_{i=1}^{N} E(X_i).$$

Since the $X_i$'s are indicators, $E(X_i)$ is just the probability that the $i$-th arrival does not have any friends among the $i - 1$ people already there; hence, $E(X_i) = (1 - p)^{i-1}$. Substituting this into the above expression, we get

$$E(\text{number of occupied tables}) = \sum_{i=1}^{N} (1 - p)^{i-1} = \sum_{j=0}^{N-1} (1 - p)^{j} = \frac{1 - (1 - p)^{N}}{1 - (1 - p)} = \frac{1 - (1 - p)^{N}}{p}.$$

3. Ross 7.14 pp. 409-410

The number of stages needed until there are no more black balls in the urn is a negative binomial random variable with parameters $m$ and $1 - p$. Therefore, the expected number of stages needed is just $m/(1 - p)$.

4. Ross 7.24 p. 411

a) Number the small pills, and let $X_i$ equal 1 if small pill $i$ is still in the bottle after the last large pill has been chosen and let it be 0 otherwise, $i = 1, \ldots, n$. Also, let $Y_j$, $j = 1, \ldots, m$, equal 1 if the $j$-th small pill created is still in the bottle after the last large pill has been chosen and its smaller half returned, and 0 otherwise. We observe that

$$X = \sum_{i=1}^{n} X_i + \sum_{j=1}^{m} Y_j.$$
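Now,

$$E(X_i) = P(\text{small pill } i \text{ is chosen after all } m \text{ large pills}) = \frac{1}{m + 1},$$

$$E(Y_j) = P(j\text{-th created small pill is chosen after the } m - j \text{ existing large pills}) = \frac{1}{m - j + 1}.$$

Thus,

$$E(X) = \frac{n}{m + 1} + \sum_{j=1}^{m} \frac{1}{m - j + 1}.$$

b) Note that the day on which the last large pill is chosen, $Y$, can be expressed in terms of $X$ as $Y = n + 2m - X$, and thus,

$$E(Y) = n + 2m - E(X) = \frac{m(n + 2m + 2)}{m + 1} - \sum_{j=1}^{m} \frac{1}{m - j + 1}.$$

5. Ross 7.42 p. 412

a) Let $X_i$ equal 1 if pair $i$ consists of a man and a woman and 0 otherwise; $i = 1, \ldots, 10$. Then, we have

$$E(X_i) = P(X_i = 1) = \frac{10 \cdot 10}{\binom{20}{2}} = \frac{100}{190} = \frac{10}{19},$$

$$E(X_i X_j) = P(X_i = 1, X_j = 1) = P(X_i = 1)\,P(X_j = 1 \mid X_i = 1) = \frac{10}{19} \cdot \frac{9 \cdot 9}{\binom{18}{2}} = \frac{10}{19} \cdot \frac{9}{17}, \qquad i \neq j.$$

Hence,

$$E\left(\sum_{i=1}^{10} X_i\right) = \frac{100}{19},$$

$$\mathrm{Var}\left(\sum_{i=1}^{10} X_i\right) = 10 \cdot \frac{10}{19}\left(1 - \frac{10}{19}\right) + 2\binom{10}{2}\left[\frac{10}{19} \cdot \frac{9}{17} - \left(\frac{10}{19}\right)^{2}\right] = \frac{900}{361} \cdot \frac{18}{17} = \frac{16200}{6137} \approx 2.640.$$

b) Let $Y_i$ equal 1 if pair $i$ consists of a married couple and 0 otherwise; $i = 1, \ldots, 10$. Then, we have

$$E(Y_i) = P(Y_i = 1) = \frac{10}{\binom{20}{2}} = \frac{10}{190} = \frac{1}{19},$$

$$E(Y_i Y_j) = P(Y_i = 1)\,P(Y_j = 1 \mid Y_i = 1) = \frac{1}{19} \cdot \frac{9}{\binom{18}{2}} = \frac{1}{19} \cdot \frac{1}{17}, \qquad i \neq j.$$

Hence,

$$E\left(\sum_{i=1}^{10} Y_i\right) = \frac{10}{19},$$

$$\mathrm{Var}\left(\sum_{i=1}^{10} Y_i\right) = 10 \cdot \frac{1}{19}\left(1 - \frac{1}{19}\right) + 2\binom{10}{2}\left[\frac{1}{19} \cdot \frac{1}{17} - \left(\frac{1}{19}\right)^{2}\right] = \frac{180}{361} \cdot \frac{18}{17} = \frac{3240}{6137} \approx 0.528.$$

6. Ross 7.45 p. 413

a) The correlation of $X_1 + X_2$ and $X_2 + X_3$ is just

$$\frac{\mathrm{Cov}(X_1 + X_2,\, X_2 + X_3)}{\sqrt{\mathrm{Var}(X_1 + X_2)\,\mathrm{Var}(X_2 + X_3)}} = \frac{\mathrm{Var}(X_2)}{\sqrt{\mathrm{Var}(X_1 + X_2)\,\mathrm{Var}(X_2 + X_3)}} = \frac{1}{\sqrt{2 \cdot 2}} = \frac{1}{2}$$

(since the variables are pairwise uncorrelated, all terms drop out in the covariance except for $\mathrm{Cov}(X_2, X_2) = \mathrm{Var}(X_2)$).

b) Replacing the $X_2 + X_3$ term in the above expression with $X_3 + X_4$, we can see that the numerator becomes 0 (because the variables are all pairwise uncorrelated); hence the correlation between $X_1 + X_2$ and $X_3 + X_4$ is 0.

7. Ross 7.56 p. 414

Let $Y_i$ equal 1 if the elevator stops at floor $i$ and 0 otherwise; $i = 1, \ldots, N$. Also, let $X$ denote the number of people that enter on the ground floor. Note, first of all, that

$$E\left[\sum_{i=1}^{N} Y_i \,\Big|\, X = k\right] = \sum_{i=1}^{N} E[Y_i \mid X = k].$$

Now, for any $i$ and $k$, $E[Y_i \mid X = k]$ is just 1 minus the probability that all $k$ passengers get off on one of the other $N - 1$ floors; i.e., $E[Y_i \mid X = k] = 1 - \left(\frac{N - 1}{N}\right)^{k}$.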
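This means the above expression becomes

$$E\left[\sum_{i=1}^{N} Y_i \,\Big|\, X = k\right] = N - N\left(\frac{N - 1}{N}\right)^{k}.$$

So we have the conditional expectation for the number of stops given that $k$ people entered. To obtain the unconditional expectation, we need to take the weighted average of these conditional expectations, with weights $P(X = k) = e^{-10}\,10^{k}/k!$:

$$E\left[\sum_{i=1}^{N} Y_i\right] = \sum_{k=0}^{\infty}\left[N - N\left(\frac{N - 1}{N}\right)^{k}\right]\frac{e^{-10}\,10^{k}}{k!} = N - N e^{-10}\sum_{k=0}^{\infty}\frac{1}{k!}\left(\frac{10(N - 1)}{N}\right)^{k}$$

$$= N - N e^{-10}\, e^{10(1 - 1/N)} = N - N e^{-10/N} = N\left(1 - e^{-10/N}\right).$$

8. Ross 7.57 p. 414

Let $N$ denote the number of accidents per week; then $E(N) = 5$. Also, let $X_i$, $i = 1, \ldots, N$, denote the number of workers injured in accident $i$; the $X_i$'s are independent and $E(X_i) = 2.5$ for all $i$. The number of workers injured in each accident is independent of the number of accidents that occur, so the expected number of workers injured in a week is

$$E\left(\sum_{i=1}^{N} X_i\right) = E\left[E\left(\sum_{i=1}^{N} X_i \,\Big|\, N\right)\right] = E\big(N\,E(X_1)\big) = E(N)\,E(X_1) = (5)(2.5) = 12.5.$$

9. Ross 7.64 p. 416

a) The expected lifetime of a randomly chosen bulb is just the weighted average of the conditional expected lifetimes (conditioning on the type of bulb):

$$E(X) = E(X \mid \text{type 1})\,P(\text{type 1}) + E(X \mid \text{type 2})\,P(\text{type 2}) = p\mu_1 + (1 - p)\mu_2.$$

b) Similarly,

$$\mathrm{Var}(X) = E(X^2) - [E(X)]^2 = E(X^2 \mid \text{type 1})\,p + E(X^2 \mid \text{type 2})(1 - p) - [p\mu_1 + (1 - p)\mu_2]^2$$

$$= p(\mu_1^2 + \sigma_1^2) + (1 - p)(\mu_2^2 + \sigma_2^2) - [p\mu_1 + (1 - p)\mu_2]^2.$$

10. The moment-generating function of a random variable $X$ having a Laplace density $f(x) = \frac{a}{2} e^{-a|x|}$ is, for $-a < t < a$,

$$M(t) = E(e^{tX}) = \int_{-\infty}^{\infty} e^{tx}\,\frac{1}{2}\,a e^{-a|x|}\,dx = \frac{a}{2}\int_{-\infty}^{0} e^{(t + a)x}\,dx + \frac{a}{2}\int_{0}^{\infty} e^{(t - a)x}\,dx$$

$$= \frac{a}{2}\left[\frac{e^{(t + a)x}}{t + a}\right]_{-\infty}^{0} + \frac{a}{2}\left[\frac{e^{(t - a)x}}{t - a}\right]_{0}^{\infty} = \frac{a}{2}\left(\frac{1}{t + a} - \frac{1}{t - a}\right) = \frac{a}{2}\cdot\frac{-2a}{t^2 - a^2} = -\frac{a^2}{t^2 - a^2}.$$

For $t \le -a$ or $t \ge a$, $M(t) = \infty$.

The first derivative of $M(t)$ evaluated at $t = 0$ gives us $E(X)$:

$$E(X) = M'(0) = \left.\frac{(t^2 - a^2)(0) + a^2(2t)}{(t^2 - a^2)^2}\right|_{t=0} = \left.\frac{2a^2 t}{(t^2 - a^2)^2}\right|_{t=0} = 0.$$

Because the expected value of $X$ is 0, the variance of $X$ is just $E(X^2)$, which can be obtained by taking the second derivative of $M(t)$ and evaluating it at $t = 0$:

$$E(X^2) = M''(0) = \left.\frac{(t^2 - a^2)^2(2a^2) - (2a^2 t)(4t)(t^2 - a^2)}{(t^2 - a^2)^4}\right|_{t=0} = \left.\frac{-2a^2(a^2 + 3t^2)}{(t^2 - a^2)^3}\right|_{t=0} = \frac{2}{a^2}.$$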
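
The closed forms in problems 5 and 10 can be sanity-checked numerically. The sketch below is not part of the original solutions; the helper names `indicator_sum_variance` and `laplace_second_moment` are hypothetical, and the Laplace check assumes the density $\frac{a}{2} e^{-a|x|}$ and truncates the integral at a finite upper limit.

```python
from fractions import Fraction
from math import comb, exp


def indicator_sum_variance(p_single, p_joint, n=10):
    """Variance of a sum of n exchangeable indicators.

    p_single = P(X_i = 1), p_joint = P(X_i = 1, X_j = 1) for i != j.
    (Hypothetical helper, introduced only for this check.)
    """
    cov = p_joint - p_single ** 2
    return n * p_single * (1 - p_single) + n * (n - 1) * cov


# Problem 5(a): pair i consists of a man and a woman.
p_a = Fraction(10 * 10, comb(20, 2))
joint_a = p_a * Fraction(9 * 9, comb(18, 2))
var_a = indicator_sum_variance(p_a, joint_a)

# Problem 5(b): pair i consists of a married couple.
p_b = Fraction(10, comb(20, 2))
joint_b = p_b * Fraction(9, comb(18, 2))
var_b = indicator_sum_variance(p_b, joint_b)


def laplace_second_moment(a, upper=60.0, steps=200_000):
    """Midpoint-rule approximation of E[X^2] for the density (a/2) e^{-a|x|}.

    By symmetry E[X^2] = a * integral_0^inf x^2 e^{-a x} dx; the integral is
    truncated at `upper`, which is an assumption that the tail is negligible.
    """
    h = upper / steps
    total = 0.0
    for k in range(steps):
        x = (k + 0.5) * h
        total += x * x * a * exp(-a * x)
    return total * h


if __name__ == "__main__":
    print("Var 5(a):", var_a, float(var_a))
    print("Var 5(b):", var_b, float(var_b))
    print("E[X^2] for a = 2:", laplace_second_moment(2.0), "vs 2/a^2 =", 2 / 2.0 ** 2)
```

Using `Fraction` keeps the problem 5 arithmetic exact, so the results can be compared directly with $16200/6137$ and $3240/6137$; the Laplace check is only approximate and depends on the chosen truncation point and step count.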