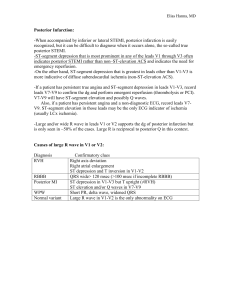

2.2 Bayesian inference: estimation

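2.2 Bayesian Inference

For Bayesian analysis, the posterior distribution $f(\theta \mid x)$ plays an important role in the statistical inferential procedure. Some Bayesians suggest that inference should ideally consist of simply reporting the entire posterior distribution $f(\theta \mid x)$ (perhaps for a non-informative prior). However, some standard uses of the posterior are still helpful. Some statistical inference problems are

I. Estimation (point estimate), estimation error
II. Interval estimate
III. Hypothesis testing
IV. Predictive inference

I. Estimation (point estimate)

(a) Estimation

The generalized maximum likelihood estimate of $\theta$ is the value $\hat{\theta}$ that maximizes $f(\theta \mid x)$. $\hat{\theta}$ is the most likely value of $\theta$ given the prior and the sample $X$.

Example 3 (continued): $X_1, \ldots, X_n \sim N(\theta, 1)$,

$$f(x_1, \ldots, x_n \mid \theta) = \left(\frac{1}{\sqrt{2\pi}}\right)^{n} e^{-\sum_{i=1}^{n} (x_i - \theta)^2 / 2},$$

and $\theta \sim N(0, 1)$. Then,

$$f(\theta \mid x_1, \ldots, x_n) \sim N\!\left(\frac{\bar{x}}{1 + 1/n}, \; \frac{1}{n+1}\right), \qquad \hat{\theta} = \frac{\bar{x}}{1 + 1/n} = \frac{n\bar{x}}{n+1}.$$

$\hat{\theta}$ is then the posterior mode (and also the posterior mean).

Other commonly used Bayesian estimates of $\theta$ include the posterior mean and the posterior median. In the normal example, posterior mode = posterior mean = posterior median.

Note: The mean and median of the posterior are frequently better estimates of $\theta$ than the mode. It is worthwhile to calculate and compare all three in a Bayesian study.

Example 4: $X \sim N(\theta, 1)$, $\theta > 0$; $\pi(\theta) = 1$, $\theta > 0$. Then,

$$f(\theta \mid x) \propto f(x \mid \theta)\,\pi(\theta) = I_{(0,\infty)}(\theta)\, \frac{1}{\sqrt{2\pi}}\, e^{-(x-\theta)^2/2}.$$

Thus,

$$f(\theta \mid x) = \frac{I_{(0,\infty)}(\theta)\, e^{-(\theta - x)^2/2}}{\int_0^\infty e^{-(\theta - x)^2/2}\, d\theta}.$$

Further, with the substitution $\eta = \theta - x$ and $\Phi$ denoting the standard normal distribution function,

$$
\begin{aligned}
E^{f(\theta \mid x)}(\theta)
&= \frac{\int_0^\infty \theta\, e^{-(\theta - x)^2/2}\, d\theta}{\int_0^\infty e^{-(\theta - x)^2/2}\, d\theta}
 = \frac{\int_{-x}^\infty (\eta + x)\, e^{-\eta^2/2}\, d\eta}{\int_{-x}^\infty e^{-\eta^2/2}\, d\eta} \\
&= x + \frac{\int_{-x}^\infty \eta\, e^{-\eta^2/2}\, d\eta}{\int_{-x}^\infty e^{-\eta^2/2}\, d\eta}
 = x + \frac{e^{-x^2/2}}{\sqrt{2\pi}\,\left[1 - \Phi(-x)\right]}
 = x + \frac{\frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}}{1 - \Phi(-x)}.
\end{aligned}
$$

Note: The classical MLE in this example is $x$. However, the classical MLE might lead to a senseless conclusion: $x$ can be negative even though $\theta$ is known to be positive, whereas the posterior mean above is always positive.

(b) Estimation error

Let $\delta(x)$ be an estimate of $\theta$. The posterior variance of $\delta(x)$ is

$$V_\delta(x) = E^{f(\theta \mid x)}\!\left[(\theta - \delta(x))^2\right].$$

The posterior variance is defined as

$$V(x) = E^{f(\theta \mid x)}\!\left[(\theta - \mu(x))^2\right],$$

where $\mu(x) = E^{f(\theta \mid x)}(\theta)$ is the posterior mean.

Note: $V_\delta(x) = V(x) + \left(\mu(x) - \delta(x)\right)^2$.

Example 3 (continued):

$$f(\theta \mid x_1, \ldots, x_n) \sim N\!\left(\frac{\bar{x}}{1 + 1/n}, \; \frac{1}{n+1}\right).$$

Then, the posterior mean is

$$\mu(x_1, \ldots, x_n) = \frac{\bar{x}}{1 + 1/n} = \frac{\sum_{i=1}^{n} x_i}{n+1},$$

and the posterior variance is

$$V(x_1, \ldots, x_n) = \frac{1}{n+1}.$$

Suppose the classical MLE $\delta(x_1, \ldots, x_n) = \bar{x}$ is used. Then

$$
\begin{aligned}
V_\delta(x_1, \ldots, x_n)
&= V(x_1, \ldots, x_n) + \left(\mu(x_1, \ldots, x_n) - \delta(x_1, \ldots, x_n)\right)^2 \\
&= \frac{1}{n+1} + \left(\frac{\sum_{i=1}^{n} x_i}{n+1} - \frac{\sum_{i=1}^{n} x_i}{n}\right)^{2}
 = \frac{1}{n+1} + \left(\frac{\sum_{i=1}^{n} x_i}{n(n+1)}\right)^{2}
 = \frac{1}{n+1} + \left(\frac{\bar{x}}{n+1}\right)^{2}.
\end{aligned}
$$

Example 5: $X \sim N(\theta, \sigma^2)$, $\pi(\theta) = 1$. Then, $f(\theta \mid x) \sim N(x, \sigma^2)$. Thus, posterior mean = posterior mode = posterior median = $x$ = classical MLE.

Note: The Bayesian analysis based on a non-informative prior is often formally the same as the usual classical maximum likelihood analysis.

Example 4 (continued): The posterior density is

$$f(\theta \mid x) = \frac{I_{(0,\infty)}(\theta)\, e^{-(\theta - x)^2/2}}{\int_0^\infty e^{-(\theta - x)^2/2}\, d\theta},$$

and the posterior mean is

$$\mu(x) = E^{f(\theta \mid x)}(\theta) = x + \frac{\frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}}{1 - \Phi(-x)} = x + \psi(x), \qquad \text{where } \psi(x) = \frac{\frac{1}{\sqrt{2\pi}}\, e^{-x^2/2}}{1 - \Phi(-x)}.$$

If $\delta(x) = x$, then

$$V_\delta(x) = V(x) + \left(\mu(x) - \delta(x)\right)^2 = V(x) + \left(x + \psi(x) - x\right)^2 = V(x) + \psi^2(x).$$

Therefore,

$$V(x) = V_\delta(x) - \psi^2(x) = 1 - x\,\psi(x) - \psi^2(x),$$

since, with $\eta = \theta - x$,

$$
\begin{aligned}
V_\delta(x) = E^{f(\theta \mid x)}\!\left[(\theta - x)^2\right]
&= \frac{\int_0^\infty (\theta - x)^2\, e^{-(\theta - x)^2/2}\, d\theta}{\int_0^\infty e^{-(\theta - x)^2/2}\, d\theta}
 = \frac{\int_{-x}^\infty \eta^2\, e^{-\eta^2/2}\, d\eta}{\int_{-x}^\infty e^{-\eta^2/2}\, d\eta} \\
&= \frac{-x\, e^{-x^2/2} + \int_{-x}^\infty e^{-\eta^2/2}\, d\eta}{\int_{-x}^\infty e^{-\eta^2/2}\, d\eta}
 = 1 - \frac{x\, e^{-x^2/2}}{\sqrt{2\pi}\,\left[1 - \Phi(-x)\right]}
 = 1 - x\,\psi(x),
\end{aligned}
$$

where integration by parts gives

$$\int_{-x}^\infty \eta^2\, e^{-\eta^2/2}\, d\eta = -\int_{-x}^\infty \eta\; d\!\left(e^{-\eta^2/2}\right) = \left[-\eta\, e^{-\eta^2/2}\right]_{-x}^{\infty} + \int_{-x}^\infty e^{-\eta^2/2}\, d\eta = -x\, e^{-x^2/2} + \int_{-x}^\infty e^{-\eta^2/2}\, d\eta.$$

(c) Multivariate estimation

Let $\theta = (\theta_1, \ldots, \theta_p)^t$ be a $p$-dimensional parameter. Then, the posterior mean is

$$\mu(x) = \left(\mu_1(x), \mu_2(x), \ldots, \mu_p(x)\right)^t = \left(E^{f(\theta \mid x)}(\theta_1), \; E^{f(\theta \mid x)}(\theta_2), \; \ldots, \; E^{f(\theta \mid x)}(\theta_p)\right)^t,$$

and the posterior variance is

$$V(x) = E^{f(\theta \mid x)}\!\left[(\theta - \mu(x))(\theta - \mu(x))^t\right].$$

Further, the posterior variance of $\delta(x)$ is

$$V_\delta(x) = E^{f(\theta \mid x)}\!\left[(\theta - \delta(x))(\theta - \delta(x))^t\right] = V(x) + \left(\mu(x) - \delta(x)\right)\left(\mu(x) - \delta(x)\right)^t.$$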
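The closed-form results of Examples 3 and 4 can be checked numerically. The following is a minimal Python sketch, not part of the original notes. It assumes the N(0, 1) prior in Example 3 and the flat prior on θ > 0 in Example 4. The function names (`example3_summary`, `psi`, `example4_closed_form`, `example4_numeric`) are hypothetical, and the midpoint-rule integration with its `width` and `steps` settings is just one convenient choice.

```python
import math


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal distribution function, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def example3_summary(xs):
    """Example 3: X_i ~ N(theta, 1) with the assumed prior theta ~ N(0, 1).

    Returns (posterior mean, posterior variance, V_delta for delta = x-bar).
    """
    n = len(xs)
    xbar = sum(xs) / n
    post_mean = sum(xs) / (n + 1)
    post_var = 1.0 / (n + 1)
    # V_delta = V + (mu - delta)^2 with delta taken as the classical MLE x-bar
    v_mle = post_var + (post_mean - xbar) ** 2
    return post_mean, post_var, v_mle


def psi(x):
    """psi(x) = phi(x) / (1 - Phi(-x)), as in Example 4."""
    return normal_pdf(x) / (1.0 - normal_cdf(-x))


def example4_closed_form(x):
    """Example 4: posterior mean x + psi(x), variance 1 - x*psi(x) - psi(x)^2."""
    p = psi(x)
    return x + p, 1.0 - x * p - p * p


def example4_numeric(x, width=12.0, steps=200_000):
    """Midpoint-rule posterior mean and variance on (0, max(x, 0) + width)."""
    upper = max(x, 0.0) + width
    h = upper / steps
    m0 = m1 = m2 = 0.0
    for k in range(steps):
        theta = (k + 0.5) * h
        w = math.exp(-0.5 * (theta - x) ** 2)  # unnormalized posterior density
        m0 += w
        m1 += theta * w
        m2 += theta * theta * w
    mean = m1 / m0
    return mean, m2 / m0 - mean * mean


if __name__ == "__main__":
    print(example3_summary([1.0, 2.0, 3.0]))
    for x in (-1.0, 0.5, 2.0):
        print(x, example4_closed_form(x), example4_numeric(x))
```

For a negative observation such as x = -1, the classical estimate x is negative while the posterior mean stays positive, which is the point of the note in Example 4. For very negative x, the term 1 - Φ(-x) loses floating-point precision, so this sketch is only meant for moderate values of x.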