Bayesian Approaches

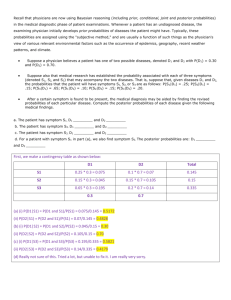

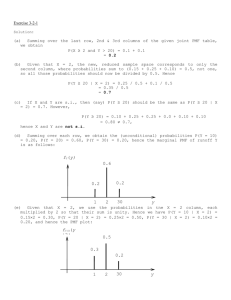

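Bayesian Approach
Jake Blanchard, Fall 2010

Introduction

This is a methodology for combining observed data with expert judgment. It treats all parameters as random variables.

Discrete Case

Suppose the parameter $\Theta$ can take $k$ discrete values $\theta_1, \dots, \theta_k$. Also, let $p_i$ represent the prior relative likelihoods (in a pmf), based on old information. If we get new data, we want to modify the pmf systematically to take it into account.

Terminology

- $p_i = P'(\Theta = \theta_i)$: prior relative likelihoods (information available prior to the experiment that provides $\varepsilon$)
- $\varepsilon$: observed outcome
- $P(\Theta = \theta_i \mid \varepsilon)$: posterior probability of $\Theta = \theta_i$ (after incorporating $\varepsilon$)
- $P'(\Theta = \theta_i)$: prior probability
- $P''(\Theta = \theta_i)$: posterior probability
- The estimator of the parameter is $\hat{\theta} = E(\Theta \mid \varepsilon)$

Useful formulas

$$P''(\Theta = \theta_i) = \frac{P(\varepsilon \mid \Theta = \theta_i)\, P'(\Theta = \theta_i)}{\sum_{j=1}^{k} P(\varepsilon \mid \Theta = \theta_j)\, P'(\Theta = \theta_j)}$$

$$\hat{\theta} = E(\Theta \mid \varepsilon) = \sum_{i=1}^{k} \theta_i\, P''(\Theta = \theta_i)$$

$$P(X \le a) = \sum_{i=1}^{k} P(X \le a \mid \Theta = \theta_i)\, P''(\Theta = \theta_i)$$

Example

The variable is the proportion $p$ of defective concrete piles. An engineer estimates the prior probabilities as:

| Defective fraction $p$ | Prior probability $P'$ |
|---|---|
| 0.2 | 0.30 |
| 0.4 | 0.40 |
| 0.6 | 0.15 |
| 0.8 | 0.10 |
| 1.0 | 0.05 |

[Figure: Prior PMF, $P'$ versus defective fraction $p$]

Find Posterior Probabilities

The engineer orders one additional pile, and it is defective, so the probabilities must be updated. Given a defective fraction $p$, the chance that a single pile is defective is $p$ itself, so the likelihood of this outcome is $P(\varepsilon \mid p) = p$:

$$P(\varepsilon) = 0.2(0.30) + 0.4(0.40) + 0.6(0.15) + 0.8(0.10) + 1.0(0.05) = 0.44$$

$$P''(p = 0.2) = \frac{0.2(0.30)}{0.44} = 0.136, \qquad P''(p = 0.4) = \frac{0.4(0.40)}{0.44} = 0.364$$

$$P''(p = 0.6) = 0.204, \qquad P''(p = 0.8) = 0.182, \qquad P''(p = 1.0) = 0.114$$

$$\hat{p} = E(p \mid \varepsilon) = 0.2(0.136) + 0.4(0.364) + 0.6(0.204) + 0.8(0.182) + 1.0(0.114) = 0.55$$

[Figure: Posterior PMF versus defective fraction $p$]

What if the next sample had been good? Switch to $p$ representing the good (rather than defective) fraction:

| "Good" fraction $p$ | Prior probability $P'$ |
|---|---|
| 0 | 0.05 |
| 0.2 | 0.10 |
| 0.4 | 0.15 |
| 0.6 | 0.40 |
| 0.8 | 0.30 |
| 1.0 | 0.00 |

Find Posterior Probabilities

The engineer orders one additional pile, and it is good, so the probabilities must be updated, now with likelihood $P(\varepsilon \mid p) = p$ for the good fraction:

$$P(\varepsilon) = 0.8(0.30) + 0.6(0.40) + 0.4(0.15) + 0.2(0.10) + 0(0.05) = 0.56$$

$$P''(p = 0) = 0, \qquad P''(p = 0.2) = \frac{0.2(0.10)}{0.56} = 0.036, \qquad P''(p = 0.4) = \frac{0.4(0.15)}{0.56} = 0.11$$

$$P''(p = 0.6) = 0.43, \qquad P''(p = 0.8) = 0.43, \qquad P''(p = 1.0) = 0$$

$$\hat{p} = E(p \mid \varepsilon) = 0.2(0.036) + 0.4(0.11) + 0.6(0.43) + 0.8(0.43) + 1.0(0) = 0.65$$

Continuous Case

With a prior pdf $f'(\theta)$, the posterior pdf is

$$f''(\theta) = \frac{P(\varepsilon \mid \theta)\, f'(\theta)}{\int P(\varepsilon \mid \theta)\, f'(\theta)\, d\theta} = k\, L(\theta)\, f'(\theta)$$

where $L(\theta) = P(\varepsilon \mid \theta)$ is the likelihood and $k = \left[\int P(\varepsilon \mid \theta)\, f'(\theta)\, d\theta\right]^{-1}$ is the normalizing constant.

$$\hat{\theta} = E(\Theta \mid \varepsilon) = \int \theta\, f''(\theta)\, d\theta$$

$$P(X \le a) = \int P(X \le a \mid \theta)\, f''(\theta)\, d\theta$$

Example

Defective piles again: assume a uniform prior distribution. Then a single inspection identifies a defective pile.

Solution

$$f'(p) = 1, \qquad 0 \le p \le 1$$

$$f''(p) = k\, p\, (1.0), \qquad 0 \le p \le 1, \qquad k = \left[\int_0^1 p\, dp\right]^{-1} = 2$$

$$f''(p) = 2p, \qquad 0 \le p \le 1$$

$$\hat{p} = E(p \mid \varepsilon) = \int_0^1 p \cdot 2p\, dp = \frac{2}{3}$$

Sampling

Suppose we have a population whose mean $\mu$ has a prior distribution with mean $\mu'$ and standard deviation $\sigma'$. Assume we then take $n$ samples to get the sample mean ($\bar{x}$) and standard deviation ($\sigma$).

With Prior Information

$$f''(\mu) = k\, L(\mu)\, f'(\mu) = k\, N\!\left(\bar{x}, \frac{\sigma}{\sqrt{n}}\right) N(\mu', \sigma')$$

where $N(m, s)$ denotes a normal pdf with mean $m$ and standard deviation $s$.

$$\hat{\mu} = \frac{\bar{x}\, \sigma'^2 + \mu'\, \sigma^2/n}{\sigma'^2 + \sigma^2/n}$$

$$P(a < \mu \le b) = \int_a^b f''(\mu)\, d\mu$$

The estimate $\hat{\mu}$ is a weighted average of the prior mean and the sample mean.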
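The discrete, continuous, and sampling updates above can be checked numerically. The Python sketch below is illustrative only: the helper names (`discrete_posterior`, `posterior_mean`, `continuous_posterior_mean`, `normal_update`) are hypothetical, the continuous case is integrated with a simple midpoint rule instead of analytically, and `normal_update` assumes the standard normal-normal conjugate result with a known population standard deviation $\sigma$ (its posterior standard deviation is that standard result, not something shown on the slides).

```python
import math

# Hypothetical helper names: a sketch for checking the worked examples,
# not code from the lecture.


def discrete_posterior(prior, likelihood):
    """Bayes' theorem for a discrete parameter.

    prior[i]: P'(Theta = theta_i); likelihood[i]: P(eps | Theta = theta_i).
    Returns (posterior list, P(eps)).
    """
    joint = [l * p for l, p in zip(likelihood, prior)]
    evidence = sum(joint)  # P(eps), the normalizing constant
    return [j / evidence for j in joint], evidence


def posterior_mean(values, posterior):
    """Bayesian estimator: E(Theta | eps) = sum of theta_i * P''(Theta = theta_i)."""
    return sum(v * q for v, q in zip(values, posterior))


# Defective-pile example: the extra pile is defective, so the likelihood
# of that outcome given defective fraction p is p itself.
p_defective = [0.2, 0.4, 0.6, 0.8, 1.0]
prior_defective = [0.30, 0.40, 0.15, 0.10, 0.05]
post_d, evidence_d = discrete_posterior(prior_defective, p_defective)
est_d = posterior_mean(p_defective, post_d)

# Same prior expressed as the "good" fraction; the pile is good,
# so the likelihood of that outcome is the good fraction.
p_good = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
prior_good = [0.05, 0.10, 0.15, 0.40, 0.30, 0.00]
post_g, evidence_g = discrete_posterior(prior_good, p_good)
est_g = posterior_mean(p_good, post_g)


def continuous_posterior_mean(prior_pdf, likelihood, n=100_000):
    """E(Theta | eps) on [0, 1] by the midpoint rule, with k found numerically."""
    h = 1.0 / n
    grid = [(i + 0.5) * h for i in range(n)]
    unnorm = [likelihood(t) * prior_pdf(t) for t in grid]
    k = 1.0 / (sum(unnorm) * h)  # k = 1 / integral of L(theta) f'(theta)
    return sum(t * k * u for t, u in zip(grid, unnorm)) * h


# Uniform prior f'(p) = 1 and likelihood L(p) = p (one defective pile).
est_c = continuous_posterior_mean(lambda p: 1.0, lambda p: p)


def normal_update(mu_prior, sd_prior, xbar, sigma, n):
    """Normal prior on the mean, n observations with known sigma (assumed).

    Returns the posterior mean and standard deviation; the mean is the
    weighted average of the prior mean and the sample mean.
    """
    var_prior = sd_prior ** 2
    var_sample = sigma ** 2 / n
    mu_post = (xbar * var_prior + mu_prior * var_sample) / (var_prior + var_sample)
    sd_post = math.sqrt(var_prior * var_sample / (var_prior + var_sample))
    return mu_post, sd_post


print([round(q, 3) for q in post_d], round(evidence_d, 2), round(est_d, 2))
print([round(q, 3) for q in post_g], round(evidence_g, 2), round(est_g, 2))
print(round(est_c, 4))
```

Running it should reproduce the slide values up to rounding: $P(\varepsilon) = 0.44$ and $\hat{p} \approx 0.55$ for the defective pile, $P(\varepsilon) = 0.56$ and $\hat{p} = 0.65$ for the good pile, and $\hat{p} \approx 2/3$ for the uniform prior. Swapping in a different prior list or likelihood function is all it takes to rerun the update for another scenario.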