Lec2LogisticRegression2 [compatibility mode]



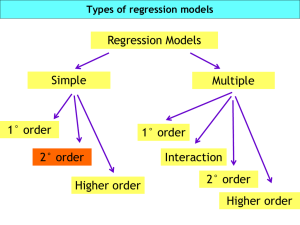

18/06/2014

LOGISTIC REGRESSION 2
dr. Ferruccio Biolcati-Rinaldi
Applied Multivariate Analysis - Module 2
Graduate School in Social and Political Sciences
University of Milan - Academic Year 2013-2014

How to fit, interpret and evaluate a logistic regression model?

1. Fit and interpretation

Logit coefficients
• b coefficients
• Hard to understand
• Sign interpretation

Odds ratios: exp(b) (Field 2005, 225-226)
• Crucial to the interpretation of logistic regression is the value of exp(b), which is an indicator of the change in odds resulting from a unit change in the predictor. As such, it is similar to the b-coefficient in logistic regression but easier to understand (because it doesn't require a logarithmic transformation).
• Ratio of odds = odds after a unit change in the predictor / original odds
• This proportionate change in odds is exp(b), so we can interpret exp(b) in terms of the change in odds:
– If the value is greater than 1, then as the predictor increases, the odds of the outcome occurring increase;
– conversely, a value less than 1 indicates that as the predictor increases, the odds of the outcome occurring decrease.
• Transforming for a better interpretation: Δ% = [exp(β) - 1] * 100
– E.g.: exp(β) = 1.2, Δ% = 20%; exp(β) = 0.8, Δ% = -20%; exp(β) = 2.4, Δ% = 140%; exp(β) = 0.2, Δ% = -80%

Odds ratio ≠ Risk ratio (Menard 2002, 57)
• A risk ratio is a ratio of two probabilities
• The two are «approximately» equal under certain fairly restrictive conditions (a base rate less than .10)
• In general, the use of an odds ratio to "represent" a risk ratio will overstate the strength of the relationship.
E.g.:
– odds ratio of 4.5 for females
– risk ratio of 2.8 for females (.487/.173)

Odds ratio and probability: do not over-emphasise odds ratio
• Probability
– Change in church attendance from 0.39 to 0.38
– Δabsolute = -0.01; Δrelative = -2.5%
• Odds ratio
– From 0.639 (= 0.39/0.61) to 0.613 (= 0.38/0.62)
– Odds ratio = 0.959 (= 0.613/0.639)
– Δrelative = -4.1%

Probability interpretation
(Some of the following slides were originally in Italian.)

"Think about your relationship with religion. Which of the following definitions best describes your current religious beliefs?" (%) (CGP 2001, 231-248)

| Answer | % |
|---|---|
| I believe in Jesus Christ and in the teachings of the Catholic Church | 54.3 |
| I believe in Jesus Christ but only partly in the teachings of the Catholic Church | 28.6 |
| I do not feel I am a member of any particular religion, but I believe in the existence of a Deity or Higher Being or Spiritual Energy or Supernatural Dimension | 9.6 |
| I am a member of a religion or cult or sect or new religious movement or denomination other than the Catholic one | 1.3 |
| I am absolutely an atheist, that is, I believe that no Deity or Higher Being or Spiritual Energy or Supernatural Dimension exists | 5.1 |
| Don't know / no answer | 1.1 |
| Total | 100.0 |
| (N) | (2,171) |

Binomial logistic regression: an example (CGP 2001, 231-248)
• Believing without belonging* = α + β1 age (deviations from the mean, 45.6) + β2 female + β3 Central Italy + β4 Southern Italy
• Believing without belonging* = -1.818 - 0.023 age (deviations from the mean, 45.6) - 0.527 female + 0.147 Central Italy - 0.467 Southern Italy

| Age | X1: Age (deviation) | X2: Female | X3: Centre | X4: South | logit | P(Y=1) | P(1 - P) |
|---|---|---|---|---|---|---|---|
| 30 | -15.6 | 0 | 0 | 0 | -1.46 | 0.19 | 0.15 |
| 60 | 14.4 | 0 | 0 | 0 | -2.15 | 0.10 | 0.09 |
| 30 | -15.6 | 0 | 1 | 0 | -1.31 | 0.21 | 0.17 |
| 60 | 14.4 | 0 | 1 | 0 | -2.00 | 0.12 | 0.10 |
| 30 | -15.6 | 0 | 0 | 1 | -1.93 | 0.13 | 0.11 |
| 60 | 14.4 | 0 | 0 | 1 | -2.62 | 0.07 | 0.06 |
| 30 | -15.6 | 1 | 0 | 0 | -1.99 | 0.12 | 0.11 |
| 60 | 14.4 | 1 | 0 | 0 | -2.68 | 0.06 | 0.06 |
| 30 | -15.6 | 1 | 1 | 0 | -1.84 | 0.14 | 0.12 |
| 60 | 14.4 | 1 | 1 | 0 | -2.53 | 0.07 | 0.07 |
| 30 | -15.6 | 1 | 0 | 1 | -2.45 | 0.08 | 0.07 |
| 60 | 14.4 | 1 | 0 | 1 | -3.14 | 0.04 | 0.04 |
| 20 | -25.6 | 0 | 0 | 0 | -1.23 | 0.23 | 0.18 |
| 30 | -15.6 | 0 | 0 | 0 | -1.46 | 0.19 | 0.15 |
| 40 | -5.6 | 0 | 0 | 0 | -1.69 | 0.16 | 0.13 |
| 50 | 4.4 | 0 | 0 | 0 | -1.92 | 0.13 | 0.11 |
| 60 | 14.4 | 0 | 0 | 0 | -2.15 | 0.10 | 0.09 |
| 70 | 24.4 | 0 | 0 | 0 | -2.38 | 0.08 | 0.08 |

Logit: Z = -1.818 - 0.023*X1 - 0.527*X2 + 0.147*X3 - 0.467*X4
P(Y=1) = exp(logit) / (1 + exp(logit))
Note: age is expressed in deviations from the mean (45.6).
Source: adapted from Corbetta, Gasperoni and Pisati (2001, 231-248).

Conditional-effects plot (CGP 2001, 231-248)
[Figure: P(Y=1) plotted against age (30 to 70), with separate lines for North, Centre and South; vertical axis from 0.00 to 0.25.]

Binomial logistic regression models
• Additive form (linear effect):
– $\operatorname{logit}(\hat{p}_i) = \alpha + \beta_1 X_{i1} + \beta_2 X_{i2}$
• Multiplicative form (non-linear effect):
– $\hat{p}_i = \Pr(Y_i = 1 \mid X_i) = \dfrac{\exp(\alpha + \beta_1 X_{i1} + \beta_2 X_{i2})}{1 + \exp(\alpha + \beta_1 X_{i1} + \beta_2 X_{i2})}$

Average marginal effects (Kohler and Kreuter 2012, 361-362)
• There is no obvious way to express the influence of an independent variable on the dependent variable with one single number
• A marginal effect is the slope of a regression line at a specific point
• Because the marginal effects differ with the levels of the covariates, it makes sense to calculate the average of all marginal effects of the covariate patterns observed in the dataset. This is the average marginal effect

2. Model fit evaluation

R2, F, and sum of squared errors (Menard 2002, 17-24)
• Total Sum of Squares (SST) (Mean of Y)
• Error Sum of Squares (SSE) (Predicted value of Y)
• Regression Sum of Squares (SSR) = SST - SSE
• The multivariate F ratio is used to test whether the improvement in prediction obtained by using the regression prediction of Y instead of the mean of Y could be attributed to random sampling variation.
• Two equivalent hypotheses
• $F = \dfrac{SSR/(K-1)}{SSE/(N-K)}$, where K is the number of coefficients including the intercept
• R2, coefficient of determination, explained variance
• Proportional reduction in error statistic, from 0 to 1
• R2 = SSR/SST

Likelihood (Menard 2002, 17-24)
• The log likelihood (LL) plays the role of the sum of squares (SS)
• LL takes negative values and is maximized
• -2LL takes positive values from 0 to ∞ and is minimized
– D0 corresponds to SST (Mean of Y)
– DM corresponds to SSE (Predicted value of Y)
– GM = D0 - DM corresponds to SSR

McFadden's R2
• $R^2_{McF} = 1 - \dfrac{\ln L(M_{Full})}{\ln L(M_{Intercept})}$
• If model MIntercept = MFull, R2McF equals 0, but R2McF can never exactly equal 1.
• This is the "Pseudo R2" reported by Stata

Likelihood ratio statistic (Aldrich and Nelson 1984, 54-61)
• c = -2 log(L0/L1) = (-2 log L0) - (-2 log L1) = -2(log L0 - log L1) [analogous to F]
– where L1 is the value of the likelihood function for the full model as fitted [analogous to SSE]
– and L0 is the maximum value of the likelihood function if all coefficients except the intercept are 0 [analogous to SST].
• That is, the computed chi-square value tests the hypothesis that all coefficients except the intercept are 0, which is exactly the hypothesis that is tested in regression using the "overall" F statistic.
• The degrees of freedom for this chi-square statistic are K-1 (i.e., the number of coefficients constrained to be zero in the null hypothesis).
• The formal test, then, is performed by comparing the computed statistic c to a critical value (χ2(K-1, α)) taken from a table of the chi-square distribution with K-1 degrees of freedom and significance level α.

Joint hypothesis tests for subsets of coefficients (Aldrich and Nelson 1984, 54-61)
• We have defined procedures for testing hypotheses about individual coefficients (t-test and confidence interval) and about the set of all coefficients except the intercept.
• In many circumstances, hypotheses of interest center on the importance or significance of more than one coefficient, but not all of them.
• Religion example…
• A procedure that can be used to test joint hypotheses is a variant on the likelihood ratio test reported above for the overall fit of the model.
• c = -2 log(L2/L1)
• where L1 is, as above, the value of the likelihood function for the full model as fitted, and L2 is the likelihood value that results from constraining some coefficients to be zero, leaving the other coefficients unconstrained.
• c will follow a chi-square distribution with degrees of freedom equal to the number of constraints imposed.
• L2 can be no larger than L1, and will be equal to L1 only if the coefficients of interest have estimates of zero in the unconstrained model.
• The smaller L2 (and hence the larger the c statistic), the less likely it is that the constrained coefficients are in fact zero.

R2Count and R2AdjCount

Believer without belonging rather than Catholic (threshold = 0.11; predictive power = 63.0%; λ = -257.2%)

| Predicted values of Y | Observed Y: 1 (yes) | Observed Y: 2 (no) | Total |
|---|---|---|---|
| 1 (yes) | 121 | 656 | 777 |
| 2 (no) | 87 | 1,144 | 1,231 |
| Total | 208 | 1,800 | 2,008 |

Pass by score (threshold = 0.50; predictive power = 60.0%; λ = 0.0%)

| Predicted values of Y | Observed Y: 1 (yes) | Observed Y: 2 (no) | Total |
|---|---|---|---|
| 1 (yes) | 12 | 12 | 24 |
| 2 (no) | 8 | 18 | 26 |
| Total | 20 | 30 | 50 |

Pass by score and exp (threshold = 0.50; predictive power = 72.0%; λ = 36.4%)

| Predicted values of Y | Observed Y: 1 (yes) | Observed Y: 2 (no) | Total |
|---|---|---|---|
| 1 (yes) | 16 | 8 | 24 |
| 2 (no) | 6 | 20 | 26 |
| Total | 22 | 28 | 50 |

Actual vs. predicted values (CGP 2001, 244-248)

| Predicted values of Y | Observed Y: 1 (yes) | Observed Y: 0 (no) | Total |
|---|---|---|---|
| 1 (yes) | True positives (TP) | False positives (FP) | R1 |
| 0 (no) | False negatives (FN) | True negatives (TN) | R0 |
| Total | C1 | C0 | N |

$$\text{Predictive power} = \frac{TP + TN}{N} \times 100$$

$$\lambda = \frac{(TP + TN) - \max(C_1, C_0)}{N - \max(C_1, C_0)} \times 100$$

3. Other topics

Zero cell
• Zero cell count occurs when the dependent variable is invariant for one or more values of a categorical independent variable
• E.g.: other ethnicity and marijuana
• odds = 1/(1-1) = 1/0 = +infinity, ln(odds) = +infinity
• odds = 0/(1-0) = 0/1 = 0, ln(odds) = -infinity
• Very high estimated standard errors
• Particularly a problem for nominal variables
• 3 options
– Accepting …
– Recoding the categorical independent variable in a meaningful way
– Adding a constant to each cell of the contingency table

Complete separation
• Occurs if you are too successful in predicting the dependent variable with a set of predictors
• Coefficients and standard errors extremely large, pseudo R2 = 1
• Quasi-complete separation, bivariate relationship
• Nothing intrinsically wrong, but be suspicious: problems in the data or in the analysis

Rare events
• http://www.statisticalhorizons.com/logisticregression-for-rare-events

Readings
• 17-18 June 2014: LOGISTIC REGRESSION 2
– Menard, S. [2002], Applied logistic regression analysis, second edition, Thousand Oaks, CA, Sage: chapters 2 and 3.
– Kohler, U. and Kreuter, F. [2012], Data analysis using Stata, third edition, College Station, Texas, Stata Press: chapter 10.
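
Worked sketch (Python). As a check on the probability interpretation in section 1, the following minimal sketch recomputes a few rows of the "believing without belonging" table from the coefficients reported on the slides, and applies the Δ% = [exp(β) - 1] * 100 transformation to the coefficient for females. The function and constant names (`logit`, `probability`, `pct_change_in_odds`, `B_FEMALE`, and so on) are illustrative assumptions, not part of the original lecture or of any library.

```python
import math

# Coefficients as reported on the slide (CGP 2001, 231-248).
INTERCEPT = -1.818
B_AGE = -0.023      # age, expressed in deviations from the mean
B_FEMALE = -0.527
B_CENTRE = 0.147
B_SOUTH = -0.467
MEAN_AGE = 45.6


def logit(age, female=0, centre=0, south=0):
    """Linear predictor Z for one covariate pattern (hypothetical helper)."""
    return (INTERCEPT
            + B_AGE * (age - MEAN_AGE)
            + B_FEMALE * female
            + B_CENTRE * centre
            + B_SOUTH * south)


def probability(z):
    """P(Y=1) = exp(z) / (1 + exp(z))."""
    return math.exp(z) / (1.0 + math.exp(z))


def pct_change_in_odds(b):
    """Percentage change in the odds for a unit change: [exp(b) - 1] * 100."""
    return (math.exp(b) - 1.0) * 100.0


# Males living in the North (all dummies at 0), at two ages from the table.
for age in (30, 60):
    z = logit(age)
    print(f"age {age}: logit = {z:.2f}, P(Y=1) = {probability(z):.2f}")

# Odds of "believing without belonging" for females relative to males.
print(f"female: {pct_change_in_odds(B_FEMALE):.1f}% change in odds")
```

Changing the arguments of `logit` (for example `logit(60, female=1, south=1)`) gives the other covariate patterns in the table; the results should agree with the slide values up to rounding.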