Chapter 10


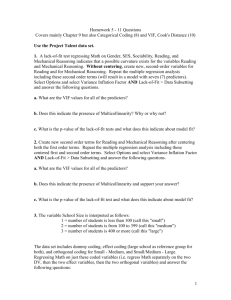

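Regression Model Building Diagnostics
KNNL – Chapter 10

Model Adequacy for Predictors – Added Variable Plot

• Graphical way to determine the partial relation between the response and a given predictor, after controlling for the other predictors – shows the form of the relation between the new X and Y
• May not be helpful when other predictor(s) enter the model with polynomial or interaction terms that are not controlled for
• Algorithm (assume plot for $X_3$, given $X_1, X_2$):
  – Fit regression of $Y$ on $X_1, X_2$, obtain residuals $e_i(Y \mid X_1, X_2)$
  – Fit regression of $X_3$ on $X_1, X_2$, obtain residuals $e_i(X_3 \mid X_1, X_2)$
  – Plot $e_i(Y \mid X_1, X_2)$ (vertical axis) versus $e_i(X_3 \mid X_1, X_2)$ (horizontal axis)
• The slope of the regression through the origin of $e_i(Y \mid X_1, X_2)$ on $e_i(X_3 \mid X_1, X_2)$ is the partial regression coefficient for $X_3$

Outlying Y Observations – Studentized Residuals

Model errors (unobserved):

$$\varepsilon_i = Y_i - \left(\beta_0 + \beta_1 X_{i1} + \cdots + \beta_{p-1} X_{i,p-1}\right), \qquad E\{\varepsilon_i\} = 0, \quad \sigma^2\{\varepsilon_i\} = \sigma^2, \quad \sigma\{\varepsilon_i, \varepsilon_j\} = 0 \;\; (i \neq j)$$

Residuals (observed), where $h_{ij}$ is the $(i,j)$th element of the $n \times n$ matrix $\mathbf{H} = \mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'$:

$$e_i = Y_i - \hat{Y}_i = Y_i - \left(b_0 + b_1 X_{i1} + \cdots + b_{p-1} X_{i,p-1}\right), \qquad E\{e_i\} = 0$$

$$\sigma^2\{e_i\} = \sigma^2 (1 - h_{ii}), \qquad s^2\{e_i\} = MSE\,(1 - h_{ii})$$

$$\sigma\{e_i, e_j\} = -h_{ij}\,\sigma^2 \;\; (i \neq j), \qquad s\{e_i, e_j\} = -h_{ij}\,MSE \;\; (i \neq j)$$

Semi-studentized residual (residual divided by the estimate of $\sigma$, trivial to compute):

$$e_i^* = \frac{e_i}{\sqrt{MSE}}$$

Studentized residual (residual divided by its standard error, messier to compute):

$$r_i = \frac{e_i}{\sqrt{MSE\,(1 - h_{ii})}}$$

Outlying Y Observations – Studentized Deleted Residuals

Deleted residual (observed value minus fitted value when the regression is fit on the other $n-1$ cases):

$$d_i = Y_i - \hat{Y}_{i(i)}, \qquad \hat{Y}_{i(i)} = b_{0(i)} + b_{1(i)} X_{i1} + \cdots + b_{p-1(i)} X_{i,p-1}$$

where $b_{k(i)}$ is the regression coefficient of $X_k$ when case $i$ is deleted.

Studentized deleted residual (makes use of having predicted the $i$th response from the regression based on the other $n-1$ cases):

$$t_i = \frac{d_i}{s\{d_i\}} \sim t(n-p-1), \qquad s^2\{d_i\} = s^2\{pred_i\} = MSE_{(i)}\left(1 + \mathbf{X}_i'\left(\mathbf{X}_{(i)}'\mathbf{X}_{(i)}\right)^{-1}\mathbf{X}_i\right), \qquad \mathbf{X}_i' = \begin{bmatrix} 1 & X_{i1} & \cdots & X_{i,p-1} \end{bmatrix}$$

Note:

$$SSE = (n-p)\,MSE = (n-p-1)\,MSE_{(i)} + \frac{e_i^2}{1 - h_{ii}} \quad\Rightarrow\quad t_i = e_i \left[\frac{n-p-1}{SSE\,(1 - h_{ii}) - e_i^2}\right]^{1/2}$$

Computed without re-fitting $n$ regressions.

Test for outliers (Bonferroni adjustment): case $i$ is an outlier if

$$|t_i| \geq t\!\left(1 - \frac{\alpha}{2n};\; n-p-1\right)$$

Outlying X-Cases – Hat Matrix Leverage Values

$$\mathbf{H} = \mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}' = \begin{bmatrix} h_{11} & h_{12} & \cdots & h_{1n} \\ h_{21} & h_{22} & \cdots & h_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ h_{n1} & h_{n2} & \cdots & h_{nn} \end{bmatrix}, \qquad h_{ij} = \mathbf{x}_i'(\mathbf{X}'\mathbf{X})^{-1}\mathbf{x}_j, \qquad \mathbf{x}_i = \begin{bmatrix} 1 \\ X_{i1} \\ \vdots \\ X_{i,p-1} \end{bmatrix}$$

Notes:

$$0 \leq h_{ii} \leq 1, \qquad \sum_{i=1}^{n} h_{ii} = \operatorname{trace}(\mathbf{H}) = \operatorname{trace}\!\left(\mathbf{X}(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\right) = \operatorname{trace}\!\left((\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}'\mathbf{X}\right) = \operatorname{trace}(\mathbf{I}_p) = p$$

Cases with X-levels close to the "center" of the sampled X-levels will have small leverages. Cases with "extreme" levels have large leverages, and have the potential to "pull" the regression equation toward their observed Y-values.

Large leverage values are $> 2p/n$ (2 times the mean leverage $p/n$).

$$\hat{\mathbf{Y}} = \mathbf{H}\mathbf{Y} \quad\Rightarrow\quad \hat{Y}_i = \sum_{j=1}^{n} h_{ij} Y_j = h_{ii} Y_i + \sum_{j \neq i} h_{ij} Y_j$$

Leverage values for new observations:

$$h_{new,new} = \mathbf{X}_{new}'(\mathbf{X}'\mathbf{X})^{-1}\mathbf{X}_{new}$$

New cases with leverage values larger than those in the original dataset are extrapolations.

Identifying Influential Cases I – Fitted Values

Influential cases in terms of their own fitted values - DFFITS (number of standard errors the case's own fitted value is shifted when the case is included vs excluded):

$$DFFITS_i = \frac{\hat{Y}_i - \hat{Y}_{i(i)}}{\sqrt{MSE_{(i)}\,h_{ii}}}$$

Computational formula (avoids fitting all deleted models):

$$DFFITS_i = e_i \left[\frac{n-p-1}{SSE\,(1 - h_{ii}) - e_i^2}\right]^{1/2} \left(\frac{h_{ii}}{1 - h_{ii}}\right)^{1/2} = t_i \left(\frac{h_{ii}}{1 - h_{ii}}\right)^{1/2}$$

Problem cases are $|DFFITS_i| > 1$ for small to medium sized datasets, $> 2\sqrt{p/n}$ for larger ones.

Influential cases in terms of all fitted values - Cook's Distance:

$$D_i = \frac{\sum_{j=1}^{n}\left(\hat{Y}_j - \hat{Y}_{j(i)}\right)^2}{p\,MSE} = \frac{\left(\hat{\mathbf{Y}} - \hat{\mathbf{Y}}_{(i)}\right)'\left(\hat{\mathbf{Y}} - \hat{\mathbf{Y}}_{(i)}\right)}{p\,MSE} = \frac{e_i^2}{p\,MSE}\cdot\frac{h_{ii}}{(1 - h_{ii})^2}$$

Problem cases are $> F(0.50;\, p,\, n-p)$.

Influential Cases II – Regression Coefficients

Influential cases in terms of regression coefficients (one for each case for each coefficient) - DFBETAS (number of standard errors the coefficient is shifted when the case is included vs excluded):

$$DFBETAS_{k(i)} = \frac{b_k - b_{k(i)}}{\sqrt{MSE_{(i)}\,c_{kk}}} \qquad\text{where}\qquad (\mathbf{X}'\mathbf{X})^{-1} = \begin{bmatrix} c_{00} & c_{01} & \cdots & c_{0,p-1} \\ c_{10} & c_{11} & \cdots & c_{1,p-1} \\ \vdots & \vdots & \ddots & \vdots \\ c_{p-1,0} & c_{p-1,1} & \cdots & c_{p-1,p-1} \end{bmatrix}$$

Problem cases are $|DFBETAS_{k(i)}| > 1$ for small to medium sized datasets, $> 2/\sqrt{n}$ for larger ones.

Influential cases in terms of the vector of regression coefficients - Cook's Distance:

$$D_i = \frac{\sum_{j=1}^{n}\left(\hat{Y}_j - \hat{Y}_{j(i)}\right)^2}{p\,MSE} = \frac{\left(\hat{\mathbf{Y}} - \hat{\mathbf{Y}}_{(i)}\right)'\left(\hat{\mathbf{Y}} - \hat{\mathbf{Y}}_{(i)}\right)}{p\,MSE} = \frac{e_i^2}{p\,MSE}\cdot\frac{h_{ii}}{(1 - h_{ii})^2} = \frac{\left(\mathbf{b} - \mathbf{b}_{(i)}\right)'\mathbf{X}'\mathbf{X}\left(\mathbf{b} - \mathbf{b}_{(i)}\right)}{p\,MSE}$$

Problem cases are $> F(0.50;\, p,\, n-p)$.

When some cases are highly influential, should check and see if they affect inferences regarding the model.

Multicollinearity - Variance Inflation Factors

• Problems when predictor variables are correlated among themselves:
  – Regression coefficients of predictors change, depending on what other predictors are included
  – Extra sums of squares of predictors change, depending on what other predictors are included
  – Standard errors of regression coefficients increase when predictors are highly correlated
  – Individual regression coefficients are not significant, although the overall model is
  – Width of confidence intervals for regression coefficients increases when predictors are highly correlated
  – Point estimates of regression coefficients are the wrong sign (+/-)

Variance Inflation Factor

Original units for $X_1, \ldots, X_{p-1}, Y$:

$$\sigma^2\{\mathbf{b}\} = \sigma^2 (\mathbf{X}'\mathbf{X})^{-1}$$

Correlation transformed values:

$$X_{ik}^* = \frac{1}{\sqrt{n-1}}\left(\frac{X_{ik} - \bar{X}_k}{s_k}\right), \qquad Y_i^* = \frac{1}{\sqrt{n-1}}\left(\frac{Y_i - \bar{Y}}{s_Y}\right)$$

$$\sigma^2\{\mathbf{b}^*\} = (\sigma^*)^2\, \mathbf{r}_{XX}^{-1} \qquad\text{where:}\qquad \sigma^2\{b_k^*\} = (\sigma^*)^2\, VIF_k, \qquad VIF_k = \frac{1}{1 - R_k^2}$$

with $R_k^2$ the coefficient of determination for the regression of $X_k$ on the other $p-2$ predictors.

$$R_k^2 = 0 \Rightarrow VIF_k = 1, \qquad 0 < R_k^2 < 1 \Rightarrow VIF_k > 1, \qquad R_k^2 \to 1 \Rightarrow VIF_k \to \infty$$

Multicollinearity is considered problematic wrt least squares estimates if:

$$\max\left(VIF_1, \ldots, VIF_{p-1}\right) > 10 \qquad\text{or if}\qquad \overline{VIF} = \frac{\sum_{k=1}^{p-1} VIF_k}{p-1} \text{ is much larger than } 1$$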
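
As an illustration of the variance inflation factor rules above, here is a minimal NumPy sketch. It assumes the predictors are held in an array with one column per predictor and no intercept column. The function names and the synthetic data are hypothetical, chosen only for this example. It computes each VIF two ways: as a diagonal element of the inverse predictor correlation matrix, and as 1/(1 - R_k^2) from regressing one predictor on the others.

```python
import numpy as np

def variance_inflation_factors(X):
    """VIF_k for every column of X (predictors only, no intercept column)."""
    X = np.asarray(X, dtype=float)
    r_xx = np.corrcoef(X, rowvar=False)       # predictor correlation matrix r_XX
    return np.diag(np.linalg.inv(r_xx))       # VIF_k = k-th diagonal of r_XX^{-1}

def vif_from_r2(X, k):
    """VIF_k = 1 / (1 - R_k^2), with R_k^2 from regressing X_k on the others."""
    X = np.asarray(X, dtype=float)
    target = X[:, k]
    others = np.delete(X, k, axis=1)
    A = np.column_stack([np.ones(len(target)), others])   # add intercept
    coef, *_ = np.linalg.lstsq(A, target, rcond=None)
    resid = target - A @ coef
    r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
    return 1.0 / (1.0 - r2)

# Synthetic predictors (assumption for illustration): x3 is nearly x1 + x2.
rng = np.random.default_rng(0)
x1 = rng.normal(size=50)
x2 = rng.normal(size=50)
x3 = x1 + x2 + 0.1 * rng.normal(size=50)
X_pred = np.column_stack([x1, x2, x3])

vifs = variance_inflation_factors(X_pred)
print("VIFs:", vifs)
print("max VIF > 10:", vifs.max() > 10)
print("mean VIF:", vifs.mean())
```

Both routes should agree up to rounding; the correlation-matrix route handles all predictors in one inversion, while the regression route makes the role of R_k^2 explicit.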

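
The leverage, studentized deleted residual, DFFITS, and Cook's Distance formulas in these notes can all be computed from a single fit. The sketch below is one way to do that with NumPy, assuming the design matrix already contains the column of ones (so it has p columns). The function name `case_diagnostics`, the dictionary keys, and the synthetic data with a planted outlier are assumptions made for this example only.

```python
import numpy as np

def case_diagnostics(X, y):
    """Case diagnostics from one fit. X is n x p and includes the column of ones."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    xtx_inv = np.linalg.inv(X.T @ X)
    b = xtx_inv @ X.T @ y                            # least squares coefficients
    h = np.einsum("ij,jk,ik->i", X, xtx_inv, X)      # leverages h_ii = x_i'(X'X)^{-1}x_i
    e = y - X @ b                                    # residuals
    sse = e @ e
    mse = sse / (n - p)
    r = e / np.sqrt(mse * (1 - h))                   # studentized residuals
    # Studentized deleted residuals without re-fitting n regressions
    t = e * np.sqrt((n - p - 1) / (sse * (1 - h) - e ** 2))
    dffits = t * np.sqrt(h / (1 - h))
    cooks_d = e ** 2 / (p * mse) * h / (1 - h) ** 2
    return {"b": b, "leverage": h, "studentized": r,
            "deleted_t": t, "dffits": dffits, "cooks_d": cooks_d}

# Synthetic data (assumption for illustration) with an outlier planted in case 0.
rng = np.random.default_rng(1)
n_obs = 30
x1 = rng.normal(size=n_obs)
x2 = rng.normal(size=n_obs)
y_demo = 1.0 + 2.0 * x1 - 1.0 * x2 + 0.5 * rng.normal(size=n_obs)
y_demo[0] += 8.0
X_demo = np.column_stack([np.ones(n_obs), x1, x2])

diag = case_diagnostics(X_demo, y_demo)
n, p = X_demo.shape
print("high leverage cases (> 2p/n):", np.where(diag["leverage"] > 2 * p / n)[0])
print("largest |t_i| at case:", np.argmax(np.abs(diag["deleted_t"])))
print("cases with |DFFITS| > 1:", np.where(np.abs(diag["dffits"]) > 1)[0])
```

The Bonferroni cutoff for the deleted residuals needs a t quantile, which NumPy does not provide; a statistics library would be needed for that step.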