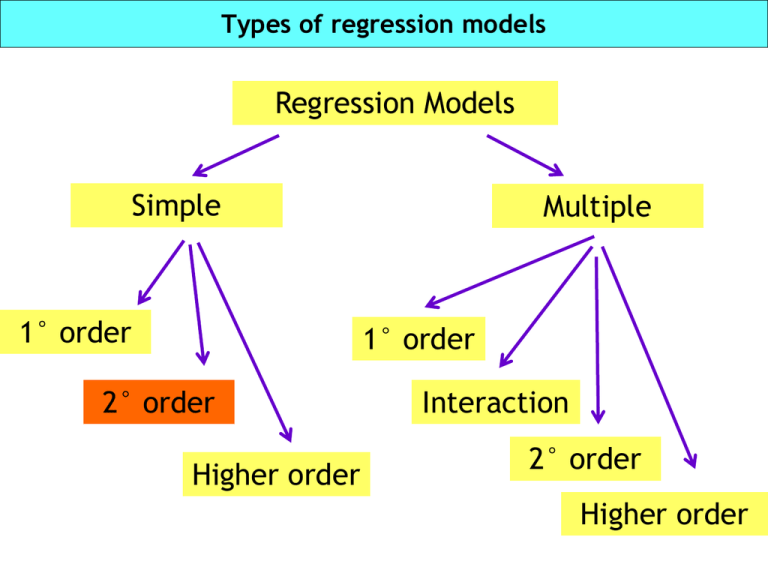

Types of regression models

[Diagram: a tree of regression models, split into simple and multiple models; its branches are labeled 1° order, 2° order, higher order and interaction (2° order and higher order).]

A quadratic (second-order) model

$$E(Y)=\beta_0+\beta_1x+\beta_2x^2$$

• Interpretation of model parameters:

• β0: y-intercept. The value of E(Y) when x = 0;

• β1: the shift parameter;

• β2: the rate of curvature.

Example with quadratic terms

The true model, supposedly unknown, is

$$Y_i=2+x_i^2+\varepsilon_i,\qquad \varepsilon_i\sim N(0,2)$$

[Figure: scatter plot of y against x.]

Data: (x, y). See SQM.sav

Model 1: E(Y) = β0 + β1x

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .973 | .947 | .947 | 6.60994 |

Predictors: (Constant), x

ANOVA (dependent variable: y)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 80624.915 | 1 | 80624.915 | 1845.332 | .000 |
| Residual | 4500.202 | 103 | 43.691 | | |
| Total | 85125.117 | 104 | | | |

Coefficients (dependent variable: y)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | -19.959 | 1.483 | | -13.454 | .000 |
| x | 10.744 | .250 | .973 | 42.957 | .000 |

[Figure: fitted linear regression of y on x, ŷ = -19.96 + 10.74x, R² = 0.95.]

Model 2: $E(Y)=\beta_0+\beta_1x^2$ (XSquare = x²)

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .996 | .991 | .991 | 2.68707 |

Predictors: (Constant), XSquare

ANOVA (dependent variable: y)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 84381.422 | 1 | 84381.422 | 11686.632 | .000 |
| Residual | 743.695 | 103 | 7.220 | | |
| Total | 85125.117 | 104 | | | |

Smaller variance and SE: the residual mean square (7.220) and the standard error of the estimate (2.687) are much smaller than in Model 1 (43.691 and 6.610).

Coefficients (dependent variable: y)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | 2.340 | .417 | | 5.608 | .000 |
| XSquare | .997 | .009 | .996 | 108.105 | .000 |

[Figure: fitted regression of y on XSquare, ŷ = 2.34 + 1.00 · XSquare, R² = 0.99.]

Model 3: $E(Y)=\beta_0+\beta_1x+\beta_2x^2$

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .996 | .991 | .991 | 2.66608 |

Predictors: (Constant), XSquare, x

ANOVA (dependent variable: y)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 84400.103 | 2 | 42200.052 | 5936.999 | .000 |
| Residual | 725.014 | 102 | 7.108 | | |
| Total | 85125.117 | 104 | | | |

Coefficients (dependent variable: y)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | 4.177 | 1.206 | | 3.463 | .001 |
| x | -.830 | .512 | -.075 | -1.621 | .108 |
| XSquare | 1.071 | .046 | 1.069 | 23.046 | .000 |
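Below is a minimal sketch of this experiment in plain Python. It does not read SQM.sav; it simulates the data instead. It generates n = 105 points with x drawn uniformly on [0, 10] and errors with standard deviation 2. The slide's N(0,2) does not say whether 2 is the variance or the standard deviation, so the standard deviation of 2 is an assumption. The seed and the helper names `solve` and `ols_fit` are also our own choices. The sketch fits Models 1 to 3 by solving the normal equations and prints the coefficients and R² of each model.

```python
import random

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def ols_fit(rows, y):
    """Least-squares coefficients and R^2; each row must start with 1 (intercept)."""
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    b = solve(xtx, xty)
    fitted = [sum(bj * xj for bj, xj in zip(b, r)) for r in rows]
    ybar = sum(y) / len(y)
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    sst = sum((yi - ybar) ** 2 for yi in y)
    return b, 1 - sse / sst

random.seed(1)
x = [random.uniform(0, 10) for _ in range(105)]
y = [2 + xi ** 2 + random.gauss(0, 2) for xi in x]  # true model: Y = 2 + x^2 + eps

b1, r2_1 = ols_fit([[1, xi] for xi in x], y)           # Model 1: x only
b2, r2_2 = ols_fit([[1, xi ** 2] for xi in x], y)      # Model 2: x^2 only
b3, r2_3 = ols_fit([[1, xi, xi ** 2] for xi in x], y)  # Model 3: x and x^2

for name, b, r2 in [("Model 1", b1, r2_1), ("Model 2", b2, r2_2), ("Model 3", b3, r2_3)]:
    print(name, [round(v, 3) for v in b], "R^2 =", round(r2, 3))
```

Whatever the seed, Model 3 can never have a lower R² than Model 1, because Model 1 is nested in it. The estimated coefficient of x² should land close to its true value of 1.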

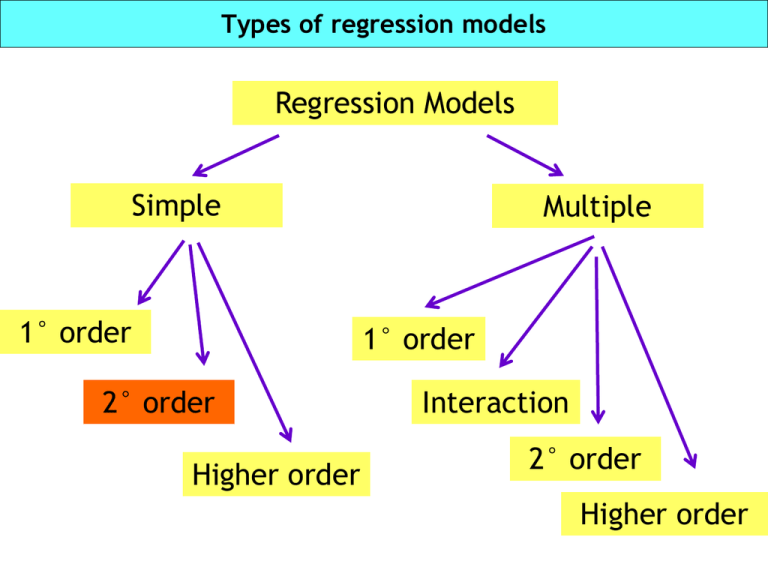


A third-order model with 1 IV

$$E(Y)=\beta_0+\beta_1x+\beta_2x^2+\beta_3x^3$$

Use with caution, given the numerical problems that could arise.

[Figure: two example cubic curves of Y against X1, one for β3 > 0 and one for β3 < 0.]


First-order model in k quantitative variables

$$E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\dots+\beta_kx_k$$

Interpretation of model parameters:

β0: y-intercept. The value of E(Y) when x1 = x2 = ... = xk = 0;

β1: change in E(Y) for a 1-unit increase in x1 when x2, ..., xk are held fixed;

β2: change in E(Y) for a 1-unit increase in x2 when x1, x3, ..., xk are held fixed;

...

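A bivariate model

$$E(Y)=\beta_0+\beta_1x_1+\beta_2x_2$$

For a fixed value of x2, E(Y) is a straight line in x1 with intercept β0 + β2x2 and slope β1. Changing x2 therefore changes only the y-intercept, not the slope.

In the first-order model, a 1-unit change in one independent variable has the same effect on the mean value of y, regardless of the values of the other independent variables.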

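A bivariate model

[Figure: response plane in (X1, X2, Y) space. At the point (X1i, X2i), the observed Yi lies at a vertical distance εi from the plane; β0 is the intercept of the plane.]

$$Y_i=\beta_0+\beta_1X_{1i}+\beta_2X_{2i}+\varepsilon_i \quad \text{(observed } Y\text{)}$$

$$E(Y)=\beta_0+\beta_1X_{1i}+\beta_2X_{2i} \quad \text{(response plane)}$$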

Example: executive salaries

• Y = Annual salary (in dollars)

• x1 = Years of experience

• x2 = Years of education

• x3 = Gender: 1 if male; 0 if female

• x4 = Number of employees supervised

• x5 = Corporate assets (in millions of dollars)

$$E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_4x_4+\beta_5x_5$$

Do not consider x3 (Gender) for the moment.

Data: ExecSal.sav

Executive salaries: computer output

Multiple regression

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .870 | .757 | .747 | 12685.309 |

Predictors: (Constant), Corporate assets (in million $), Years of Experience, Years of Education, Number of Employees supervised

Simple regression

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .783 | .613 | .609 | 15760.006 |

Predictors: (Constant), Years of Experience

Coefficient of determination

The coefficient R² is computed exactly as in the simple regression case:

$$R^2=\frac{SSR}{SST}=1-\frac{SSE}{SST}$$

where

$$SST=\sum_{i=1}^{n}(y_i-\bar y)^2 \ \text{(total variation)},\qquad SSR=\sum_{i=1}^{n}(\hat y_i-\bar y)^2 \ \text{(explained variation)},\qquad SSE=\sum_{i=1}^{n}(y_i-\hat y_i)^2 \ \text{(error)}$$

A drawback of R²: it never decreases when variables are added, even if these are NOT relevant to the problem.

Adjusted R² and estimate of the variance σ²

A solution: the adjusted R²

$$R_a^2=1-\frac{n-1}{n-(k+1)}\,\frac{SSE}{SST}=1-\frac{n-1}{n-(k+1)}\,(1-R^2)$$

– Each additional variable reduces the adjusted R², unless SSE decreases enough to compensate.

An unbiased estimator of the variance σ² is

$$s^2=\frac{SSE}{n-(k+1)}=\frac{\sum_{i=1}^{n}\hat\varepsilon_i^2}{n-(k+1)}$$
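As a check on these formulas, the sketch below recomputes R², the adjusted R² and s from the ANOVA sums of squares of the executive-salary model. That model has k = 4 predictors and n = 100 observations, and its figures appear in the ANOVA table later in this section. The function name `fit_summary` is ours. The inputs are the rounded values printed by SPSS, so s comes out slightly above the reported 12685.309.

```python
import math

def fit_summary(ssr, sse, n, k):
    """R^2, adjusted R^2 and s = sqrt(SSE / (n - (k + 1))) from ANOVA sums of squares."""
    sst = ssr + sse
    r2 = ssr / sst
    adj_r2 = 1 - (n - 1) / (n - (k + 1)) * sse / sst
    s = math.sqrt(sse / (n - (k + 1)))
    return r2, adj_r2, s

# Executive salaries, first-order model: k = 4 slopes, n = 100 executives
r2, adj_r2, s = fit_summary(ssr=4.766e10, sse=1.529e10, n=100, k=4)
print(round(r2, 3), round(adj_r2, 3), round(s, 1))
```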

Executive salaries: computer output (2)

Coefficients (dependent variable: Annual salary in $)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | -37082.148 | 17052.089 | | -2.175 | .032 |
| Years of Experience | 2696.360 | 173.647 | .785 | 15.528 | .000 |
| Years of Education | 2656.017 | 563.476 | .243 | 4.714 | .000 |
| Number of Employees supervised | 41.092 | 7.807 | .272 | 5.264 | .000 |
| Corporate assets (in million $) | 244.569 | 83.420 | .149 | 2.932 | .004 |

The t and Sig. columns give the t-test of H0: βj = 0 for each variable.
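The hypothetical helper `predict_salary` below evaluates the fitted equation, which illustrates the first-order interpretation with these estimates. The executive profiles are made up for illustration. Raising experience by one year while holding the other predictors fixed changes the estimate by exactly b1 = 2696.36. This holds whatever the values of the other predictors.

```python
# Estimated coefficients of the first-order executive-salary model
B = {
    "const": -37082.148,
    "experience": 2696.360,   # x1
    "education": 2656.017,    # x2
    "employees": 41.092,      # x4
    "assets": 244.569,        # x5 (millions of $)
}

def predict_salary(experience, education, employees, assets):
    """Estimated mean annual salary ($) from the fitted first-order model."""
    return (B["const"] + B["experience"] * experience + B["education"] * education
            + B["employees"] * employees + B["assets"] * assets)

# Hypothetical executive profile, chosen only for illustration
base = predict_salary(experience=10, education=16, employees=200, assets=100)
plus_one = predict_salary(experience=11, education=16, employees=200, assets=100)
print(round(base, 2), round(plus_one - base, 3))  # the difference is b1
```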

Testing overall significance: the F-test

• 1. Shows whether there is a linear relationship between all X variables together and Y

• 2. Uses the F test statistic

• 3. Hypotheses

– H0: β1 = β2 = ... = βk = 0

• No linear relationship

– Ha: at least one coefficient is not 0

• At least one X variable affects Y

For a single coefficient, the F-test is equivalent to the t-test (F = t²).

ANOVA table

ANOVA (dependent variable: Annual salary in $)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 4.766E10 | 4 | 1.192E10 | 74.045 | .000 |
| Residual | 1.529E10 | 95 | 1.609E8 | | |
| Total | 6.295E10 | 99 | | | |

Predictors: (Constant), Corporate assets (in million $), Years of Experience, Years of Education, Number of Employees supervised

• Regression df = k: number of regression slopes.

• Total df = n - 1, where n = number of observations.

• The residual mean square is the MSE (mean square error), the estimate of the variance σ².

• F is the F-statistic; Sig. is the p-value of the F-test.

• Decision: reject H0, i.e. accept this model.
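The F value in the table is simply MSR/MSE. The minimal sketch below recomputes it from the rounded sums of squares; the function name `overall_f` is ours. It also checks the relation F = t² on Model 1 of the quadratic example, where t = 42.957 and F = 1845.332.

```python
def overall_f(ssr, sse, n, k):
    """Global F statistic MSR / MSE, with (k, n - (k + 1)) degrees of freedom."""
    msr = ssr / k              # mean square for regression
    mse = sse / (n - (k + 1))  # mean square error
    return msr / mse

# Executive salaries: k = 4 slopes, n = 100 observations (rounded SPSS sums of squares)
f_stat = overall_f(ssr=4.766e10, sse=1.529e10, n=100, k=4)
print(round(f_stat, 2))

# With a single slope, F equals t squared (quadratic example, Model 1: t = 42.957)
print(round(42.957 ** 2, 1))
```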

Interaction (second-order) model

$$E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2$$

• Interpretation of model parameters:

• β0: y-intercept. The value of E(Y) when x1 = x2 = 0;

• β1 + β3x2: change in E(Y) for a 1-unit increase in x1 when x2 is held fixed;

• β2 + β3x1: change in E(Y) for a 1-unit increase in x2 when x1 is held fixed;

• β3: controls the rate of change of the surface.

Interaction (second-order) model

$$E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2$$

Contour lines are not parallel: the effect of one variable depends on the level of the other.

Example: Antique grandfather clocks auction

Clocks are sold at an auction through competitive bids.

Data are:

– Y: auction price in dollars

– X1: age of the clock

– X2: number of bidders

Model 1: E(Y) = β0 + β1x1 + β2x2

Model 2: E(Y) = β0 + β1x1 + β2x2 + β3x1x2

Data: GFCLOCKS.sav

Data summaries

Descriptive Statistics

| | N | Minimum | Maximum | Mean | Std. Deviation | Skewness (Std. Error) | Kurtosis (Std. Error) |
|---|---|---|---|---|---|---|---|
| Age | 32 | 108 | 194 | 144.94 | 27.395 | .216 (.414) | -1.323 (.809) |
| Bidders | 32 | 5 | 15 | 9.53 | 2.840 | .420 (.414) | -.788 (.809) |
| Price | 32 | 729 | 2131 | 1326.88 | 393.487 | .396 (.414) | -.727 (.809) |
| Valid N (listwise) | 32 | | | | | | |

If the data are Normal, the skewness is 0.

If the data are Normal, the (excess) kurtosis is 0.

Note: skewness and kurtosis are not enough to establish Normality.

P-P plot for Normality

If the data are Normal, the points should lie along the straight line. In this example the situation is fairly good.

Bivariate scatter-plots

[Figure: scatter plots of Price against Age and of Price against Bidders.]

Model 1: E(Y) = β0 + β1x1 + β2x2

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .945 | .892 | .885 | 133.485 |

Predictors: (Constant), Bidders, Age

ANOVA (dependent variable: Price)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 4283062.960 | 2 | 2141531.480 | 120.188 | .000 |
| Residual | 516726.540 | 29 | 17818.157 | | |
| Total | 4799789.500 | 31 | | | |

Coefficients (dependent variable: Price)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | -1338.951 | 173.809 | | -7.704 | .000 |
| Age | 12.741 | .905 | .887 | 14.082 | .000 |
| Bidders | 85.953 | 8.729 | .620 | 9.847 | .000 |

Model 2: E(Y) = β0 + β1x1 + β2x2 + β3x1x2

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .977 | .954 | .949 | 88.915 |

Predictors: (Constant), AgeBid, Age, Bidders

ANOVA (dependent variable: Price)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 4578427.367 | 3 | 1526142.456 | 193.041 | .000 |
| Residual | 221362.133 | 28 | 7905.790 | | |
| Total | 4799789.500 | 31 | | | |

Coefficients (dependent variable: Price)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | 320.458 | 295.141 | | 1.086 | .287 |
| Age | .878 | 2.032 | .061 | .432 | .669 |
| Bidders | -93.265 | 29.892 | -.673 | -3.120 | .004 |
| AgeBid | 1.298 | .212 | 1.369 | 6.112 | .000 |

Interpreting interaction models

The coefficient of the interaction term is significant.

If an interaction term is present, the corresponding first-order terms also need to be included to interpret the model correctly.

In the example, a careless analyst could conclude that the effect of Bidders is negative, since b2 = -93.26.

Since an interaction term is present, the slope estimate for Bidders (x2) is

$$b_2+b_3x_1$$

Note: b = β̂

For x1 = 150 (age), the estimated slope for Bidders is

$$-93.26+1.3\,(150)=101.74$$

With the unrounded b3 = 1.298, the value is about 101.4.
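The conditional slope b2 + b3x1 can be tabulated over the observed age range, which runs from 108 to 194 in the descriptive statistics. The constants below are the Model 2 estimates. The function name `bidders_slope` is ours.

```python
# Interaction-model estimates for the clock auction (Model 2)
B2_BIDDERS = -93.265  # b2, coefficient of Bidders
B3_AGE_BID = 1.298    # b3, coefficient of Age*Bidders

def bidders_slope(age):
    """Estimated change in mean price for one extra bidder, at a given clock age."""
    return B2_BIDDERS + B3_AGE_BID * age

for age in (108, 150, 194):  # minimum, a middle value and maximum age in the sample
    print(age, round(bidders_slope(age), 2))
```

The estimated slope for Bidders turns negative only for ages below 93.265 / 1.298 ≈ 71.9, which is outside the observed range. Within the data, an extra bidder therefore always raises the estimated mean price.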

Models with qualitative X’s

Regression models can also include qualitative (or categorical) independent variables (QIV).

The categories of a QIV are called levels.

The levels of a QIV are not measured on a natural numerical scale. We therefore need a specific type of coding to avoid introducing fictitious linear relations in the model.

Coding is done with IVs that take only two values: 0 or 1.

These coded IVs are called dummy variables.

Models with QIV

• Suppose we want to model Income (Y) as a function of Sex (x): use a coded, or dummy, variable

x = 1 if Male, x = 0 if Female

E(Y) = β0 + β1x

E(Y) = β0 + β1 if x = 1, i.e. Male

E(Y) = β0 if x = 0, i.e. Female

β0 is the mean for the base level, i.e. Female is the reference category

β1 is the additional effect if Male

In this simple model, only the means of the two groups are modeled.
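The small numerical check below shows that a regression on a single 0/1 dummy just reproduces the two group means. The income figures are hypothetical, made up for this sketch. `simple_ols` computes the usual least-squares intercept and slope.

```python
def simple_ols(x, y):
    """Intercept and slope of the least-squares line y = b0 + b1 * x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    sxx = sum((xi - xbar) ** 2 for xi in x)
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1

# Hypothetical incomes (thousands of $); x = 1 if Male, 0 if Female
x = [0, 0, 0, 1, 1]
y = [50.0, 60.0, 70.0, 80.0, 90.0]
b0, b1 = simple_ols(x, y)

female_mean = sum(yi for xi, yi in zip(x, y) if xi == 0) / x.count(0)
male_mean = sum(yi for xi, yi in zip(x, y) if xi == 1) / x.count(1)
print(b0, female_mean)              # intercept vs. female mean (both about 60)
print(b1, male_mean - female_mean)  # slope vs. difference of means (both about 25)
```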

QIV with q levels

As a general rule, if a QIV has q levels we need q - 1 dummies for coding. The uncoded level is the reference one.

Example: a QIV has three levels, A, B and C

Define

x1 = 1 if level A, x1 = 0 if not

x2 = 1 if level B, x2 = 0 if not

Model: E(Y) = β0 + β1x1 + β2x2 (C is the reference level)

Interpreting the β’s

β0 = μC (mean for the base level C)

β1 = μA - μC (additional effect with respect to C for level A)

β2 = μB - μC (additional effect with respect to C for level B)
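The q - 1 coding rule can be sketched as a small function. `dummy_code` and its arguments are hypothetical names, not an SPSS feature. Each non-reference level gets its own 0/1 column, and the reference level is coded as all zeros.

```python
def dummy_code(values, levels, reference):
    """Code a qualitative variable with q levels into q - 1 dummy (0/1) columns.

    The reference level is coded as all zeros; every other level gets its own column.
    """
    coded_levels = [lev for lev in levels if lev != reference]
    rows = [[1 if v == lev else 0 for lev in coded_levels] for v in values]
    return coded_levels, rows

names, rows = dummy_code(["A", "C", "B", "A"], levels=["A", "B", "C"], reference="C")
print(names)  # ['A', 'B']  -> columns x1 and x2
print(rows)   # [[1, 0], [0, 0], [0, 1], [1, 0]]
```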

Models with dummies

Models that contain only dummy variables in practice just estimate the means of the various groups. Even so, the testing machinery of the regression setup can be useful for group comparisons.

Dummies can be combined with other dummies and with quantitative X’s to construct models with first-order effects (or main effects) and interactions. These models can then be used to test hypotheses of interest.

To define dummies in SPSS, see “Computing dummy vars in SPSS.ppt”.

Example: executive salaries

A management consulting firm has developed a regression model to analyze the salary structure of executives:

• Y = Annual salary (in dollars)

• x1 = Years of experience

• x2 = Years of education

• x3 = Gender: 1 if male; 0 if female

• x4 = Number of employees supervised

• x5 = Corporate assets (in millions of dollars)

Data: ExecSal.sav

A simple model: E(Y) = β0 + β3x3

[Figure: salaries of the male group and of the female group.]

This model estimates the means of the two groups (M, F).

We want to test whether the difference in means is significant, i.e. not due to chance.

Regression Output

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .392 | .153 | .145 | 23320.282 |

Predictors: (Constant), Gender

ANOVA (dependent variable: Annual salary in $)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 9651865066.845 | 1 | 9651865066.845 | 17.748 | .000 |
| Residual | 53295882433.156 | 98 | 543835535.032 | | |
| Total | 62947747500.001 | 99 | | | |

The salary difference between the groups is significant.

Coefficients (dependent variable: Annual salary in $)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. | 95% C.I. for B: Lower Bound | Upper Bound |
|---|---|---|---|---|---|---|---|
| (Constant) | 83847.059 | 3999.395 | | 20.965 | .000 | 75910.389 | 91783.729 |
| Gender | 20739.305 | 4922.915 | .392 | 4.213 | .000 | 10969.940 | 30508.670 |

The Gender coefficient is the mean increment for males, and its 95% interval is the C.I. for that mean increment.
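From the Gender coefficients, the estimated male mean is b0 + b3 and the 95% interval for the increment is b3 ± t·SE. The sketch below reproduces these figures. The t quantile 1.9845 (97.5%, 98 d.f.) is hard-coded as an assumption, taken from t tables rather than computed. The bounds therefore match the SPSS output only up to rounding.

```python
# Gender-only model: E(Y) = b0 + b3*x3, with x3 = 1 for males
B0 = 83847.059                   # intercept = estimated mean salary of the female group
B3, SE_B3 = 20739.305, 4922.915  # Gender coefficient (male minus female) and its std. error
T_975_98 = 1.9845                # t quantile (97.5%, 98 d.f.), assumed from t tables

male_mean = B0 + B3
t_b3 = B3 / SE_B3
ci_b3 = (B3 - T_975_98 * SE_B3, B3 + T_975_98 * SE_B3)
print(round(male_mean, 3), round(t_b3, 3), [round(v, 1) for v in ci_b3])
```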

Model 2: E(Y) = β0 + β1x1 + β3x3

[Figure: salary against years of experience for the two groups; it seems that the two groups are separated.]

Model 2 considers the same slope but different intercepts:

If x3 = 0 (female), then E(Y) = β0 + β1x1

If x3 = 1 (male), then E(Y) = (β0 + β3) + β1x1

Computer output for model 2

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .860 | .740 | .735 | 12981.615 |

Predictors: (Constant), Years of Experience, Gender

R Square improved greatly (from .153 to .740).

ANOVA (dependent variable: Annual salary in $)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 46601081714.527 | 2 | 23300540857.264 | 138.264 | .000 |
| Residual | 16346665785.474 | 97 | 168522327.685 | | |
| Total | 62947747500.001 | 99 | | | |

Coefficients (dependent variable: Annual salary in $)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. | 95% C.I. for B: Lower Bound | Upper Bound |
|---|---|---|---|---|---|---|---|
| (Constant) | 50614.312 | 3161.279 | | 16.011 | .000 | 44340.048 | 56888.576 |
| Gender | 18894.215 | 2743.253 | .357 | 6.888 | .000 | 13449.618 | 24338.812 |
| Years of Experience | 2633.831 | 177.875 | .767 | 14.807 | .000 | 2280.799 | 2986.863 |

The new intercept for males is significant.

In this model, the effect of experience is assumed to be equal for the two groups.

Model 3: E(Y) = β0 + β1x1 + β3x3 + β4x1x3

With this model we want to test whether gender and experience interact, i.e. whether male salaries tend to grow at a faster (or slower) rate with experience.

If x3 = 0 (female), then E(Y) = β0 + β1x1

If x3 = 1 (male), then E(Y) = (β0 + β3) + (β1 + β4)x1

Here β0 + β3 is the new intercept and β1 + β4 the new slope for males.

Remark: running a single regression for the two groups together gives more degrees of freedom (a larger n) for estimating the parameters and the model variance.

Model 3: E(Y) = β0 + β1x1 + β3x3 + β4x1x3

Model 3 considers different slopes and different intercepts for the two groups.

Computer output for model 3

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .868 | .754 | .746 | 12700.080 |

Predictors: (Constant), ExpGender, Years of Experience, Gender

Coefficients (dependent variable: Annual salary in $)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. | 95% C.I. for B: Lower Bound | Upper Bound |
|---|---|---|---|---|---|---|---|
| (Constant) | 58049.768 | 4461.179 | | 13.012 | .000 | 49194.397 | 66905.139 |
| Gender | 7798.504 | 5497.470 | .147 | 1.419 | .159 | -3113.888 | 18710.896 |
| Years of Experience | 2044.541 | 308.565 | .595 | 6.626 | .000 | 1432.045 | 2657.036 |
| ExpGender | 864.122 | 373.653 | .301 | 2.313 | .023 | 122.426 | 1605.818 |

There is evidence that salaries for the two groups grow at different rates with experience (ExpGender: Sig. = .023).

Estimated lines:

Ŷ = 58049.8 + 2044.5 × (Years of Experience) for females

Ŷ = 65848.3 + 2908.7 × (Years of Experience) for males
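The two estimated lines follow directly from the Model 3 coefficients: the male intercept is b0 + b3 and the male slope is b1 + b4. The short sketch below rebuilds both lines and the estimated male-female gap at 10 years of experience. The helper `salary` is ours.

```python
# Model 3 estimates: E(Y) = b0 + b1*x1 + b3*x3 + b4*x1*x3 (x3 = 1 for males)
b0, b1 = 58049.768, 2044.541  # female intercept and experience slope
b3, b4 = 7798.504, 864.122    # Gender shift in intercept, ExpGender shift in slope

female = (b0, b1)
male = (b0 + b3, b1 + b4)

def salary(line, years):
    """Estimated mean salary on a given (intercept, slope) line."""
    intercept, slope = line
    return intercept + slope * years

gap_10 = salary(male, 10) - salary(female, 10)
print("female:", [round(v, 1) for v in female])
print("male:  ", [round(v, 1) for v in male])
print("male - female gap at 10 years:", round(gap_10, 1))
```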

A complete second-order model

$$E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2+\beta_4x_1^2+\beta_5x_2^2$$

• Interpretation of model parameters:

• β0: y-intercept. The value of E(Y) when x1 = x2 = 0;

• β1 and β2: shifts along the x1 and x2 axes;

• β3: rotation of the surface;

• β4 and β5: control the rate of curvature.

Back to Executive salaries

What if we suspect that the rate of growth changes and has opposite signs for M and F?

x1 = Years of experience

x3 = Gender (1 if Male)

Note: $x_3^2=x_3$, since x3 is a dummy.

Model 4: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_3+\beta_3x_1x_3+\beta_4x_1^2$

Model 5: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_3+\beta_3x_1x_3+\beta_4x_1^2+\beta_5x_3x_1^2$

Comparing Model 4 and 5

Model 4: different intercept and slope for M and F, but the same curvature

If x3 = 0 (female), then $E(Y)=\beta_0+\beta_1x_1+\beta_4x_1^2$

If x3 = 1 (male), then $E(Y)=(\beta_0+\beta_2)+(\beta_1+\beta_3)x_1+\beta_4x_1^2$

Model 5: different intercept, slope and curvature for M and F

If x3 = 0 (female), then $E(Y)=\beta_0+\beta_1x_1+\beta_4x_1^2$

If x3 = 1 (male), then $E(Y)=(\beta_0+\beta_2)+(\beta_1+\beta_3)x_1+(\beta_4+\beta_5)x_1^2$
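Both models can be turned into group-specific quadratics by plugging in x3 = 0 or x3 = 1. The sketch below does this for Model 5, using its estimates from the coefficient table that follows. Setting the last coefficient to 0 gives Model 4 instead. The function name `group_curve` is ours. The estimated curvature is about -53.2 for females and about +59.7 for males, i.e. the two groups have opposite signs.

```python
# Model 5 estimates, in the order b0 (const), b1 (x1), b2 (x3 = Gender),
# b3 (x1*x3 = ExpGen), b4 (x1^2 = ExpSqu), b5 (x3*x1^2 = Exp2Gen)
B = [52391.973, 3373.970, 21122.152, -2081.897, -53.181, 112.836]

def group_curve(b, male):
    """(intercept, linear, quadratic) coefficients of E(Y) in x1 for one group."""
    d = 1 if male else 0  # value of the dummy x3
    return (b[0] + b[2] * d, b[1] + b[3] * d, b[4] + b[5] * d)

for label, is_male in (("female", False), ("male", True)):
    c0, c1, c2 = group_curve(B, is_male)
    print(label, round(c0, 3), round(c1, 3), round(c2, 3))
```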

Model 5: computer output

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .875 | .766 | .754 | 12507.735 |

Predictors: (Constant), Exp2Gen, Gender, Years of Experience, ExpSqu, ExpGen

ANOVA (dependent variable: Annual salary in $)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 4.824E10 | 5 | 9.648E9 | 61.673 | .000 |
| Residual | 1.471E10 | 94 | 1.564E8 | | |
| Total | 6.295E10 | 99 | | | |

Model 5: computer output

Coefficients (dependent variable: Annual salary in $; ExpGen = x1·x3, ExpSqu = x1², Exp2Gen = x3·x1²)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | 52391.973 | 6497.971 | | 8.063 | .000 |
| Years of Experience | 3373.970 | 1165.248 | .982 | 2.895 | .005 |
| Gender | 21122.152 | 8285.802 | .399 | 2.549 | .012 |
| ExpGen | -2081.897 | 1459.842 | -.724 | -1.426 | .157 |
| ExpSqu | -53.181 | 45.001 | -.422 | -1.182 | .240 |
| Exp2Gen | 112.836 | 54.950 | .904 | 2.053 | .043 |

Which model is preferable: Model 3 or Model 5?

A test for comparing nested models

Two models are nested if one model contains all the terms

of the other model and at least one additional term.

The more complex of the two models is called the

complete (or full) model.

The other is called the reduced (or restricted) model.

Example: model 1 is nested in model 2

Model 1: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2$

Model 2: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2+\beta_4x_1^2+\beta_5x_2^2$

To compare the two models, we are interested in testing

H0: β4 = β5 = 0 vs. H1: at least one of β4 and β5 differs from 0

F-test for comparing nested models

Reduced model:

$$E(Y)=\beta_0+\beta_1x_1+\dots+\beta_gx_g$$

Complete model:

$$E(Y)=\beta_0+\beta_1x_1+\dots+\beta_gx_g+\beta_{g+1}x_{g+1}+\dots+\beta_kx_k$$

To test

$H_0:\ \beta_{g+1}=\dots=\beta_k=0$

H1: at least one of the parameters being tested is not 0

compute

$$F=\frac{(SSE_R-SSE_C)/(k-g)}{MSE_C}$$

Reject H0 when F > Fα, where Fα is the level-α critical point of an F distribution with (k - g, n - (k + 1)) d.f.

F-test for nested models

Where:

$SSE_R$ = sum of squared errors for the reduced model;

$SSE_C$ = sum of squared errors for the complete model;

$MSE_C$ = mean square error for the complete model, $SSE_C/(n-(k+1))$.

Remark:

k - g = number of parameters tested;

k + 1 = number of parameters in the complete model;

n = total sample size.

Compute partial F-tests with SPSS

1. Enter your complete model in the Regression dialog box

– choose the Method “Enter”

2. Click on “Next”

3. In the new box for Independent variables, enter those

you want to remove (i.e. those you’d like to test)

– choose the Method “Remove”

4. In the “Statistics” option select “R squared change”

5. Click OK.

Applying the F-test

Let us use the F-test to compare Model 3 and Model 5 in the executive salaries example.

Note that Model 3 is nested in Model 5:

Model 3: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_3+\beta_3x_1x_3$

Model 5: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_3+\beta_3x_1x_3+\beta_4x_1^2+\beta_5x_3x_1^2$

Apply the F-test for H0: β4 = β5 = 0.

Computer output

Variables Entered/Removed (dependent variable: Annual salary in $)

| Model | Variables Entered | Variables Removed | Method |
|---|---|---|---|
| 1 | Exp2Gen, Gender, Years of Experience, ExpSqu, ExpGen | . | Enter |
| 2 | . | Exp2Gen, ExpSqu | Remove |

a. All requested variables entered. b. All requested variables removed.

Model Summary (with change statistics)

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate | R Square Change | F Change | df1 | df2 | Sig. F Change |
|---|---|---|---|---|---|---|---|---|---|
| 1 | .875 | .766 | .754 | 12507.735 | .766 | 61.673 | 5 | 94 | .000 |
| 2 | .868 | .754 | .746 | 12700.080 | -.012 | 2.488 | 2 | 94 | .089 |

Model 1 predictors: (Constant), Exp2Gen, Gender, Years of Experience, ExpSqu, ExpGen. Model 2 predictors: (Constant), Gender, Years of Experience, ExpGen.

In row 2, F Change is the F-statistic for H0: β4 = β5 = 0, and Sig. F Change is its p-value.

Do NOT reject H0: β4 = β5 = 0 (p = .089 > .05), i.e. the simpler Model 3 is preferred.
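The SPSS F Change can be reproduced from the two "Std. Error of the Estimate" values, since SSE = s²·(n - k - 1). The sketch below assumes n = 100, which gives 94 residual d.f. for Model 5 and 96 for Model 3. Only df1 = 2 parameters are tested, so the p-value has the closed form P(F > f) = (1 + 2f/df2)^(-df2/2), and no statistics library is needed. The function names are ours.

```python
def sse_from_se(se_estimate, df_resid):
    """Recover SSE from the standard error of the estimate: SSE = s^2 * df."""
    return se_estimate ** 2 * df_resid

def partial_f(sse_r, sse_c, k, g, n):
    """Partial F for H0: beta_{g+1} = ... = beta_k = 0 in nested models."""
    mse_c = sse_c / (n - (k + 1))
    return ((sse_r - sse_c) / (k - g)) / mse_c

def f_pvalue_df1_2(f, df2):
    """Upper-tail P(F > f) for F(2, df2); this closed form holds only for df1 = 2."""
    return (1 + 2 * f / df2) ** (-df2 / 2)

n = 100
sse_5 = sse_from_se(12507.735, n - 6)  # Model 5: k = 5 slopes, 94 residual d.f.
sse_3 = sse_from_se(12700.080, n - 4)  # Model 3: g = 3 slopes, 96 residual d.f.
f = partial_f(sse_3, sse_5, k=5, g=3, n=n)
p = f_pvalue_df1_2(f, n - 6)
print(round(f, 3), round(p, 3))
```

The result is F ≈ 2.49 with p ≈ 0.089, which matches the output above.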

A quadratic model example: Shipping costs

A regional delivery service bases the charge for shipping a package on the package weight and the distance shipped. However, its profit per package depends on the package size (the volume of space it occupies) and on the size and nature of the delivery truck.

The company conducted a study to investigate the relationship between the cost of shipment and the variables that control the shipping charge: weight and distance.

– Y: cost of shipment in dollars

– X1: package weight in pounds

– X2: distance shipped in miles

It is suspected that nonlinear effects may be present.

Model: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2+\beta_4x_1^2+\beta_5x_2^2$

Data: Express.sav

Scatter plots

[Figure: scatter plots of Cost of shipment against Weight of parcel in lbs. and against Distance shipped.]

Scatter plots in multiple regression often do not show much information.

Model: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2+\beta_4x_1^2+\beta_5x_2^2$

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .997 | .994 | .992 | .4428 |

Predictors: (Constant), Weight*Distance, Distance squared, Weight squared, Weight of parcel in lbs., Distance shipped

ANOVA (dependent variable: Cost of shipment)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 449.341 | 5 | 89.868 | 458.388 | .000 |
| Residual | 2.745 | 14 | .196 | | |
| Total | 452.086 | 19 | | | |

Coefficients (dependent variable: Cost of shipment)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | .827 | .702 | | 1.178 | .259 |
| Weight of parcel in lbs. | -.609 | .180 | -.316 | -3.386 | .004 |
| Distance shipped | .004 | .008 | .062 | .503 | .623 |
| Weight squared | .090 | .020 | .382 | 4.442 | .001 |
| Distance squared | 1.51E-005 | .000 | .075 | .672 | .513 |
| Weight*Distance | .007 | .001 | .850 | 11.495 | .000 |

Distance squared is not significant: try to eliminate it.

Model: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2+\beta_4x_1^2$

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .997 | .994 | .992 | .4346 |

Predictors: (Constant), Weight*Distance, Distance shipped, Weight squared, Weight of parcel in lbs.

ANOVA (dependent variable: Cost of shipment)

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 449.252 | 4 | 112.313 | 594.623 | .000 |
| Residual | 2.833 | 15 | .189 | | |
| Total | 452.086 | 19 | | | |

Coefficients (dependent variable: Cost of shipment)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | .475 | .458 | | 1.035 | .317 |
| Weight of parcel in lbs. | -.578 | .171 | -.300 | -3.387 | .004 |
| Distance shipped | .009 | .003 | .141 | 3.421 | .004 |
| Weight squared | .087 | .019 | .369 | 4.485 | .000 |
| Weight*Distance | .007 | .001 | .842 | 11.753 | .000 |

Applying the F-test: Shipping costs

A company conducted a study to investigate the relationship

between the cost of shipment and the variables that control the

shipping charge: weight and distance.

– Y : cost of shipment in dollars

– X1: package weight in pounds

– X2: distance shipped in miles

It is suspected that nonlinear effects may be present; use the F-test for nested models to decide between

Model 1: $E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_1x_2+\beta_4x_1^2+\beta_5x_2^2$

Model 2: E(Y) = β0 + β1x1 + β2x2 + β3x1x2

Data: Express.sav

ANOVA Tables (dependent variable: Cost of shipment)

Full model

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 449.341 | 5 | 89.868 | 458.388 | .000 |
| Residual | 2.745 | 14 | .196 | | |
| Total | 452.086 | 19 | | | |

Predictors: (Constant), Weight*Distance, Distance squared, Weight squared, Weight of parcel in lbs., Distance shipped

Reduced model

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 445.452 | 3 | 148.484 | 358.154 | .000 |
| Residual | 6.633 | 16 | .415 | | |
| Total | 452.086 | 19 | | | |

Predictors: (Constant), Distance shipped, Weight of parcel in lbs., Weight*Distance

F-statistic

To test H0: β4 = β5 = 0, from the ANOVA tables we have

$$F=\frac{(SSE_R-SSE_C)/2}{MSE_C}=\frac{(6.633-2.745)/2}{0.196}=9.92$$

The critical value Fα (at the 5% level) for an F-distribution with 2 and 14 d.f. is 3.74.

Since F (9.92) > Fα (3.74), the null hypothesis is rejected at the 5% significance level, i.e. the model with quadratic terms is preferred over the reduced one.
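The sketch below repeats this computation in plain Python and adds the critical value, assuming n = 20 shipments (19 total d.f.). For df1 = 2 the F tail probability has the closed form P(F > x) = (1 + 2x/df2)^(-df2/2). This inverts to Fα = (df2/2)(α^(-2/df2) - 1). The shortcut does not apply to other values of df1.

```python
n, k, g = 20, 5, 3            # 20 shipments; complete model k = 5 slopes, reduced g = 3
sse_r, sse_c = 6.633, 2.745   # SSE of the reduced and complete models (ANOVA tables)
df1, df2 = k - g, n - (k + 1)  # 2 and 14

mse_c = sse_c / df2
f = ((sse_r - sse_c) / df1) / mse_c

# Closed forms valid only for df1 = 2:
# P(F > x) = (1 + 2x/df2)^(-df2/2), inverted to get the critical value.
alpha = 0.05
f_crit = (df2 / 2) * (alpha ** (-2 / df2) - 1)
p_value = (1 + 2 * f / df2) ** (-df2 / 2)
print(round(f, 2), round(f_crit, 2), round(p_value, 4), f > f_crit)
```

With the unrounded MSE_C = 2.745/14, the statistic is about 9.91 rather than 9.92. The critical value comes out at about 3.74 and the p-value at about 0.002, in line with the Sig. F Change reported in the SPSS output below.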

Computer output

Variables Entered/Removed (dependent variable: Cost of shipment)

| Model | Variables Entered | Variables Removed | Method |
|---|---|---|---|
| 1 | Weight*Distance, Distance squared, Weight squared, Weight of parcel in lbs., Distance shipped | . | Enter |
| 2 | . | Distance squared, Weight squared | Remove |

a. All requested variables entered. b. All requested variables removed.

Model Summary (with change statistics)

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate | R Square Change | F Change | df1 | df2 | Sig. F Change |
|---|---|---|---|---|---|---|---|---|---|
| 1 | .997 | .994 | .992 | .4428 | .994 | 458.388 | 5 | 14 | .000 |
| 2 | .993 | .985 | .983 | .6439 | -.009 | 9.917 | 2 | 14 | .002 |

Model 1 predictors: (Constant), Weight*Distance, Distance squared, Weight squared, Weight of parcel in lbs., Distance shipped. Model 2 predictors: (Constant), Weight*Distance, Weight of parcel in lbs., Distance shipped.

In row 2, F Change is the F-statistic and Sig. F Change is its p-value.

Reject H0: β4 = β5 = 0

Executive salaries: a final model (?)

• Y = Annual salary (in dollars)

• x1 = Years of experience

• x2 = Years of education

• x3 = Gender: 1 if male; 0 if female

• x4 = Number of employees supervised

• x5 = Corporate assets (in millions of dollars)

Try adding other variables to Model 3:

$$E(Y)=\beta_0+\beta_1x_1+\beta_2x_2+\beta_3x_3+\beta_4x_1x_3+\beta_5x_4+\beta_6x_5 \qquad \text{(Model 6)}$$

Computer Output: Model 6

Model Summary

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .963 | .927 | .922 | 7020.089 |

Predictors: (Constant), Corporate assets (in million $), Years of Experience, Years of Education, Gender, Number of Employees supervised, ExpGender

ANOVA

| Model 1 | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Regression | 5.836E10 | 6 | 9.727E9 | 197.384 | .000 |
| Residual | 4.583E9 | 93 | 4.928E7 | | |
| Total | 6.295E10 | 99 | | | |

Computer Output: Model 6

Coefficients (dependent variable: Annual salary in $)

| Model 1 | B (unstandardized) | Std. Error | Beta (standardized) | t | Sig. |
|---|---|---|---|---|---|
| (Constant) | -38331.331 | 9533.238 | | -4.021 | .000 |
| Years of Experience | 2178.964 | 171.979 | .634 | 12.670 | .000 |
| Gender | 13203.101 | 3137.775 | .249 | 4.208 | .000 |
| ExpGender | 669.546 | 209.042 | .233 | 3.203 | .002 |
| Years of Education | 2689.594 | 311.914 | .246 | 8.623 | .000 |
| Number of Employees supervised | 53.239 | 4.470 | .353 | 11.910 | .000 |
| Corporate assets (in million $) | 180.310 | 46.600 | .110 | 3.869 | .000 |

Executive salaries: comparison of models

| Mod. | Predictors | Adj. R² | Standard error | F-stat |
|---|---|---|---|---|
| 1 | x1, x2, x4, x5 | 0.747 | 12685.31 | 74.05 |
| 2 | x1, x3 | 0.735 | 12981.62 | 138.26 |
| 3 | x1, x3, x1∙x3 | 0.746 | 12700.08 | 98.09 |
| 6 | x1, x2, x3, x1∙x3, x4, x5 | 0.922 | 7020.09 | 197.38 |
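Both the adjusted R² column and the F-stat column can be recovered from R² alone. The formulas are $R_a^2=1-\frac{n-1}{n-(k+1)}(1-R^2)$ and $F=\frac{R^2/k}{(1-R^2)/(n-(k+1))}$. The sketch below assumes n = 100 and the numbers of slopes k implied by the regression d.f. in the ANOVA tables (k = 6 for Model 6). The R² inputs are rounded to three decimals, so the F values agree with the table only to within about 1%.

```python
def adj_r2(r2, n, k):
    """Adjusted R^2 from R^2, with k slopes and n observations."""
    return 1 - (n - 1) / (n - (k + 1)) * (1 - r2)

def f_from_r2(r2, n, k):
    """Global F statistic written in terms of R^2."""
    return (r2 / k) / ((1 - r2) / (n - (k + 1)))

n = 100
# (model, R^2 from its model summary, number of slopes k)
models = [(1, 0.757, 4), (2, 0.740, 2), (3, 0.754, 3), (6, 0.927, 6)]
for name, r2, k in models:
    print(name, round(adj_r2(r2, n, k), 3), round(f_from_r2(r2, n, k), 2))
```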
