notes

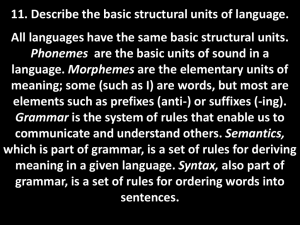

advertisement

CS 461 – Aug. 24

• Course overview

• Section 1.1

– Definition of finite automaton (FA)

– How to draw FAs

Overview

• What is Computational Theory?

– Not so concerned with specific HW/SW

– Search for general truths, models, imagination

Ex. What is possible vs. impossible?

• Facets

– Automata & Formal Languages (Ch. 1 – 3)

There are 3 important models of computation.

– Computability (Ch. 3 – 5)

“Some problems can’t be solved.”

– Complexity (Ch. 7)

“Some problems take too long to solve.”

Theory

• What is theory?

• You have already dealt with abstract ideas in earlier courses

– Difference between void, null, zero

– Different kinds of zeros (int, float, positive, negative)

– Infinity versus NaN

– Correctness of an algorithm

– Number of iterations in a loop

– Does recursion really work?

Computation Models

• Purpose of a model is to study what computers

and programs can do.

• Models range from simple to complete:

– Model #1: Finite Automaton

– Model #2: Pushdown Automaton

– Model #3: Turing Machine

• Assume programming essentially boils down to input → output.

• For simplicity / convenience,

– input = any binary string

– output = 0/1, T/F, accept/reject

Finite Automaton

• Our first model – Chapter 1

• Purpose of FA is to take (binary) input string and

either “accept” or “reject” it.

• The set of all strings accepted by an FA is called

the language of the FA.

• “Language” simply means a set of strings, e.g.

the set of all binary strings starting with “0”.

Example

• Start state

• Accept/happy state(s)

– If we are in one of these when input is done, we accept string.

• Transitions

• “finite” refers to # of states. More on limitations later.

[State diagram: s1 loops on 0; s1 goes to s2 on 1; s2 loops on 0, 1.]

Example

[State diagram: s1 loops on 0; s1 goes to s2 on 1; s2 loops on 0, 1.]

• The FA could also be expressed in a table.

• The table tells us where to go on each possible input bit.

From   Input 0   Input 1
s1     s1        s2
s2     s2        s2

Example

• Let’s try sample input like 101.

[State diagram: s1 loops on 0; s1 goes to s2 on 1; s2 loops on 0, 1.]

• Can you figure out the language of this machine?

– i.e. How do we get to the happy state?

Example #2

[State diagram: states s1, s2, s3, with edges labeled 0, 1, 0, 1 and “0, 1”.]

• What is language of this FA?

• Note: if we change which state is the accept state, we’d have a different language!

CS 461 – Aug. 26

• Finish section 1.1

– Definition of FA

– Examples

– Regular languages

– Union of 2 regular languages (handout)

Formal Definition

A finite automaton has 5 things:

1. A set of states

2. An alphabet, for input

3. A start state

4. A set of accept states

5. Transition function: δ(state, input) = state

• When we create/define an FA, must specify all 5 things. A drawing does a pretty good job.
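The five parts map directly onto a small program. The sketch below is only an illustration: the function name `run_dfa` and the state names `even` and `odd` are made up here, and the machine is a hypothetical FA for "has an even number of 1's".

```python
def run_dfa(delta, start, accept, word):
    """Return True if the DFA ends in an accept state after reading word."""
    state = start
    for symbol in word:
        # A DFA has exactly one destination per (state, symbol) pair.
        state = delta[(state, symbol)]
    return state in accept

# The 5 things, for L = { bit strings with an even number of 1's }.
states = {"even", "odd"}             # 1. set of states (hypothetical names)
alphabet = {"0", "1"}                # 2. alphabet
start = "even"                       # 3. start state
accept = {"even"}                    # 4. set of accept states
delta = {                            # 5. transition function
    ("even", "0"): "even", ("even", "1"): "odd",
    ("odd", "0"): "odd",   ("odd", "1"): "even",
}

print(run_dfa(delta, start, accept, "1001"))  # two 1's
print(run_dfa(delta, start, accept, "1011"))  # three 1's
```

Giving the states meaningful names makes the table read almost like the English description of the language.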

Example

[State diagram: states s1, s2, s3, with transitions as in the table.]

From state   Input 0   Input 1
s1           s2        s1
s2           s1        s3
s3           s3        s3

Let’s make FA’s

1. L = bit strings that have exactly two 1’s

2. L = starts with 01

3. L = ends with 01

4. L = has an even number of 1’s

5. L = starts and ends with same symbol

*** Very good idea to give meaningful names to your

states.

Since L is a set… how would we create an FA for the

complement of L?

Regular Language

• A regular language is a set of strings accepted

by some FA.

• Examples

– Starting with 01; ending with 01, containing 01.

– Strings of length 2.

– {0, 11}

• Any finite set is regular. Infinite sets are more

interesting. Yet, # states always finite!

Operations on sets

• We can create new regular sets from existing

ones, rather than starting from scratch.

• Binary operations

– Union

– Intersection

• Unary operations

– Complement

– Star: This is the concatenation of 0 or more

elements.

For example, if A = {0, 11}, then A* is { ε, 0, 11, 00,

1111, 011, 110, …}

• “Closure property”: you always get a regular set

when you use the above operations.
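To get a feel for the star operation, one can enumerate A* up to a length bound. This is a rough sketch; `star_up_to` is a name invented for the illustration.

```python
def star_up_to(A, max_len):
    """All strings of length <= max_len formed by concatenating 0 or more elements of A."""
    result = {""}        # concatenating zero elements gives ε (the empty string)
    frontier = {""}
    while frontier:
        new_words = set()
        for w in frontier:
            for a in A:
                candidate = w + a
                if len(candidate) <= max_len and candidate not in result:
                    new_words.add(candidate)
        result |= new_words
        frontier = new_words
    return result

words = star_up_to({"0", "11"}, 4)
print(sorted(words, key=lambda w: (len(w), w)))
```

Note that a lone 1 never appears: every 1 in a word of A* comes from a copy of 11.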

Union

• Book shows construction (see handout)

• We want to union two languages A1 and A2: M1

accepts A1 and M2 accepts A2. The combined

machine is called M.

• M pretends it’s running M1 and M2 at same time!

δ((r1,r2),a) = (δ1(r1,a),δ2(r2,a))

• # states increases because it’s the Cartesian

product of M1 and M2’s states.

• Next section will show easier way to do union.
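The product construction can be sketched in a few lines. The two machines below (M1 for "starts with 0", M2 for "even number of 1's") and all state names are assumptions chosen for the example, not taken from the handout.

```python
from itertools import product

def union_dfa(d1, s1, f1, d2, s2, f2, alphabet):
    """Build M for A1 ∪ A2: M's states are pairs (r1, r2)."""
    states1 = {q for q, _ in d1}
    states2 = {q for q, _ in d2}
    delta = {
        ((r1, r2), a): (d1[(r1, a)], d2[(r2, a)])   # run both machines at once
        for r1, r2 in product(states1, states2)
        for a in alphabet
    }
    start = (s1, s2)
    # Accept if either component is in an accept state.
    accept = {(r1, r2) for r1, r2 in product(states1, states2)
              if r1 in f1 or r2 in f2}
    return delta, start, accept

def accepts(delta, start, accept, word):
    state = start
    for a in word:
        state = delta[(state, a)]
    return state in accept

# M1: starts with 0 (hypothetical state names).
d1 = {("q0", "0"): "yes", ("q0", "1"): "no",
      ("yes", "0"): "yes", ("yes", "1"): "yes",
      ("no", "0"): "no", ("no", "1"): "no"}
# M2: even number of 1's.
d2 = {("even", "0"): "even", ("even", "1"): "odd",
      ("odd", "0"): "odd", ("odd", "1"): "even"}

delta, start, accept = union_dfa(d1, "q0", {"yes"}, d2, "even", {"even"}, "01")
print(len({q for q, _ in delta}))   # 3 * 2 pairs of states
```

Changing `or` to `and` in the accept-state rule would give a machine for the intersection instead.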

Wolf, Goat, Cabbage

• A man would like to cross a river with his wolf,

goat and head of cabbage.

• Can only ferry 1 of 3 items at a time. Plus:

– Leaving wolf & goat alone: wolf will eat goat.

– Leaving goat & cabbage alone: goat will eat

cabbage.

• Yes, we can solve this problem using an FA!

– Think about possible states and transitions.
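One possible way to explore the states and transitions is a breadth-first search. In this sketch a state is the set of who is on the starting bank; the encoding and the function names are assumptions made for the illustration.

```python
from collections import deque

ITEMS = {"wolf", "goat", "cabbage"}
EVERYONE = frozenset(ITEMS | {"man"})

def safe(left):
    """A bank without the man must not hold wolf+goat or goat+cabbage."""
    right = EVERYONE - left
    for bank in (left, right):
        if "man" not in bank:
            if {"wolf", "goat"} <= bank or {"goat", "cabbage"} <= bank:
                return False
    return True

def moves(left):
    """Yield (label, next_state): the man crosses alone or with one item from his bank."""
    here = left if "man" in left else EVERYONE - left
    for cargo in [None] + sorted(here - {"man"}):
        group = {"man"} | ({cargo} if cargo else set())
        nxt = frozenset(left - group if "man" in left else left | group)
        if safe(nxt):
            yield (cargo or "alone"), nxt

def solve():
    """Shortest sequence of crossings from 'all on the left' to 'nobody on the left'."""
    start, goal = EVERYONE, frozenset()
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if state == goal:
            return path
        for label, nxt in moves(state):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [label]))
    return None

print(solve())
```

Each label in the returned path is an input symbol, and the unsafe configurations play the role of dead states.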

CS 461 – Aug. 29

• Regular operations

– Union construction

• Section 1.2 - Nondeterminism

– 2 kinds of FAs

– How to trace input

– NFA design makes “union” operation easier

– Equivalence of NFAs and DFAs

Non-determinism

• There are 2 kinds of FA’s

– DFA: deterministic FA

– NFA: non-deterministic FA

• NFA is like DFA except:

– A state may have any # of arrows per input symbol

– You can have ε-transitions. With this kind of transition,

you can go to another state “for free”.

• Non-determinism can make machine construction

more flexible. At first the theory seems more

complex, but NFA’s will come in handy.

Example

[State diagram: s1 loops on 0, 1; s1 goes to s2 on 1; s2 goes to s3 on 0 or ε; s3 goes to s4 on 1; s4 loops on 0, 1.]

From state   Input 0   Input 1   Input ε
s1           s1        s1, s2    -
s2           s3        -         s3
s3           -         s4        -
s4           s4        s4        -

continued

[State diagram: s1 loops on 0, 1; s1 goes to s2 on 1; s2 goes to s3 on 0 or ε; s3 goes to s4 on 1; s4 loops on 0, 1.]

• See the non-determinism?

• Remember, any time you reach a state that has

ε-transitions coming out, take it! It’s free.

• Let’s trace input 010110.

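[State diagram: s1 loops on 0, 1; s1 goes to s2 on 1; s2 goes to s3 on 0 or ε; s3 goes to s4 on 1; s4 loops on 0, 1.]

Step      States we could be in
Start     s1
Read 0    s1
Read 1    s1, s2, s3
Read 0    s1, s3
Read 1    s1, s2, s3, s4
Read 1    s1, s2, s3, s4
Read 0    s1, s3, s4

A branch dies when its state has no arrow for the next symbol (for example s3 on input 0). We finish with s4 among the possibilities, so 010110 is accepted.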

Moral

• When tracing a word like 010110, we just

want to know if there is any way to get to

the accept state.

• Language is anything containing 11 or

101.

• Corresponding DFA would have more

states.

CS 461 – Aug. 31

Section 1.2 – Nondeterministic FAs

• How to trace input √

• NFA design makes “union” operation

easier

• Equivalence of NFAs and DFAs

NFA’s using “or”

[Diagram: a new start state with ε-transitions to old start 1 and old start 2.]

• Can you draw NFA for:

{ begin with 0 or end with 1 } ?

Amazing fact

• NFA = DFA

• In other words, the two kinds of machines have

the same power.

• Proof idea: we can always convert a DFA into

an NFA, or vice versa. Which do you think is

easier to do?

Formal NFA def’n

• The essential difference with DFA is in the

transition function:

DFA   δ: Q × Σ → Q

NFA   δ: Q × Σ_ε → P(Q)

• Thus, converting DFA → NFA is easy. We already satisfy the definition!

NFA → DFA construction

1. When creating DFA, states will be all possible subsets of states from NFA.

– This takes care of “all possible destinations.”

– In practice we won’t need every subset: only create states as you need them.

– “empty set” can be our dead state.

2. DFA start state = NFA’s start state or anywhere you can begin for free. Happy state will be any subset containing NFA’s happy state.

3. Transitions: Please write as a table. Drawing would be too cluttered. When finished, can eliminate useless states.

Example #1

• NFA transition table given below.

• DFA start state is {1, 3}, or more simply 13.

• DFA accept state would be anything containing 1. Could be 1, 12, 13, 123, but we may not need all these states.

NFA inputs:

state   a     b   ε
1       -     2   3
2       2,3   3   -
3       1     -   -

continued

• The resulting DFA could require 2^n states, but we should only create states as we need them.

NFA inputs:

state   a     b   ε
1       -     2   3
2       2,3   3   -
3       1     -   -

Let’s begin:

If we’re in state 1 or 3, where do we go if we read an ‘a’ or a ‘b’?

δ(13, a) = 1, but we can get to 3 for free. So δ(13, a) = 13.

δ(13, b) = 2. We need to create a new state “2”.

Continue the construction by considering transitions from state 2.

answer

NFA inputs:

state   a     b   ε
1       -     2   3
2       2,3   3   -
3       1     -   -

DFA inputs:

state   a     b
13      13    2
2       23    3
23      123   3
123     123   23
3       13    -

Notice that the DFA is in fact deterministic: it has exactly one destination per transition. Also there is no column for ε.
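The construction can be checked mechanically. Below is a minimal sketch of the subset construction applied to the Example #1 NFA (states 1, 2, 3; start state 1). The function names and the use of the empty string for ε are assumptions of this sketch.

```python
EPS = ""  # stands in for ε in this sketch

# Example #1 NFA: δ(1,b)={2}, δ(1,ε)={3}, δ(2,a)={2,3}, δ(2,b)={3}, δ(3,a)={1}
nfa = {(1, "b"): {2}, (1, EPS): {3}, (2, "a"): {2, 3}, (2, "b"): {3}, (3, "a"): {1}}

def eps_closure(delta, states):
    closure, stack = set(states), list(states)
    while stack:
        q = stack.pop()
        for r in delta.get((q, EPS), set()):
            if r not in closure:
                closure.add(r)
                stack.append(r)
    return closure

def subset_construction(delta, start, alphabet):
    """Create DFA states (frozensets of NFA states) only as they are needed."""
    start_set = frozenset(eps_closure(delta, {start}))
    dfa, todo = {}, [start_set]
    while todo:
        S = todo.pop()
        if S in dfa:
            continue
        dfa[S] = {}
        for a in alphabet:
            moved = set()
            for q in S:
                moved |= delta.get((q, a), set())
            T = frozenset(eps_closure(delta, moved))
            dfa[S][a] = T      # the empty frozenset acts as the dead state
            todo.append(T)
    return start_set, dfa

start_set, dfa = subset_construction(nfa, 1, "ab")
for S, row in dfa.items():
    print(sorted(S), {a: sorted(T) for a, T in row.items()})
```

Only 6 of the 2^3 = 8 possible subsets are ever created, counting the empty set as the dead state.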

Example #2

• NFA transition table given below.

• DFA start state is A.

• DFA accept state would be anything containing D.

NFA inputs:

State   0   1     ε
A       A   A,C   -
B       D   -     C
C       -   B     -
D       B   D     -

continued

Let’s begin.

δ(A, 0) = A

δ(A, 1) = AC

We need new state AC.

δ(AC, 0) = A

δ(AC, 1) = ABC

Continue from ABC…

NFA inputs:

State   0   1     ε
A       A   A,C   -
B       D   -     C
C       -   B     -
D       B   D     -

answer

NFA inputs:

State   0   1     ε
A       A   A,C   -
B       D   -     C
C       -   B     -
D       B   D     -

DFA inputs:

State   0      1
A       A      AC
AC      A      ABC
ABC     AD     ABC
AD      ABC    ACD
ACD     ABC    ABCD
ABCD    ABCD   ABCD

final thoughts

• NFAs and DFAs have same computational

power.

• NFAs often have fewer states than

corresponding DFA.

• Typically, we want to design a DFA, but NFAs

are good for combining 2+ DFAs.

• After doing NFA → DFA construction, we may see that some states can be combined.

– Later in chapter, we’ll see how to simplify FAs.

CS 461 – Sept. 2

• Review NFA → DFA

• Combining FAs to create new languages

– union, intersection, concatenation, star

– We can basically understand how these work by

drawing a picture.

• Section 1.3 – Regular expressions

– A compact way to define a regular set, rather than

drawing an FA or writing transition table.

∪ and ∩

• Suppose M1 and M2 are DFAs. We want to

combine their languages.

• Union: We create new start state. √

– Do you understand formalism p. 60 ?

• How can we also do intersection?

Hint: A ∩ B = (A’ ∪ B’)’

Concatenation

• Concat: For each happy state in M1, turn it into

a reject state and add ε-trans to M2’s start.

• Example

L1 = { does not contain 00 }

L2 = { has even # of 1’s }

Let’s draw NFA for L1L2.

• Let’s decipher formal definition of δ on p. 61.

Star

• We want to concat the language with itself 0+

times.

• Create new start state, and make it happy.

• Add ε-transitions from other happy states to the

start state.

• Example

L = { begins with 1 and ends with 0 }

Let’s draw NFA for L*.

• Formal definition of δ on p. 63.

Regular expression

• A concise way to describe a language

– Text representation, straightforward to input into

computer programs.

• Use alphabet symbols along with operators

+ means “or”

* means repetition

Concatenation

• There is no “and” or complement.

Examples

• What do you think these regular expressions

mean?

0* + 1*

0*1*

00*11*

(a shorthand would be 0+1+ )

(0 + 1)*

• What’s the difference between 10*1 and

1(0+1)*1 ? Does this mean “anything with 1 at

beginning and end?”
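One way to explore questions like these is to enumerate short words with a regex engine. The sketch assumes Python's `re` module, where union is written `|` rather than `+`; the helper name `language` is invented here.

```python
import re
from itertools import product

def language(pattern, max_len):
    """All bit strings of length <= max_len that the pattern matches in full."""
    rx = re.compile(pattern)
    words = set()
    for n in range(max_len + 1):
        for bits in product("01", repeat=n):
            w = "".join(bits)
            if rx.fullmatch(w):
                words.add(w)
    return words

a = language(r"10*1", 5)       # slide notation: 10*1
b = language(r"1(0|1)*1", 5)   # slide notation: 1(0+1)*1

print(sorted(b - a, key=lambda w: (len(w), w)))  # words only the second one matches
print("1" in b)                                  # is the one-symbol word 1 included?
```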

Practice

• Words with 2 or more 0’s. What’s wrong with

this answer: 1*01*01 ?

• Words containing substring 110.

• Every even numbered symbol is 0.

– What’s wrong with: ((0 + 1)*0)* ?

• Words of even length.

• The last 2 symbols are the same.

• What is the shortest word not in: 1*(01)*0* ?

• True or false: (111*) = (11 + 111)*

CS 461 – Sept. 7

• Section 1.3 – Regular expressions

Examples

Equivalent to FA’s.

regular expression → FA (straightforward)

FA → regular expression (* major skill)

Conversions

• It’s useful to be able to convert among:

– English description of language

– FA drawing

– Regular expression

• Also good practice to consider complement of a

language.

• Let’s practice writing regular expressions.

– General technique: think about how to get to happy

state. Sometimes difficult, so we’ll look at general

algorithm later.

Reg exp → FA

• Just build the FA in pieces.

– We already know how to combine FA’s using union,

concatenation, star – these are the basic operations

in regular expressions.

– You may even be able to construct the FA intuitively.

• Let’s try examples

– 0*10

– 0* + 1*

FA → reg exp

• If the FA doesn’t have too many “loops,” it’s not hard to

write down the regular expression.

• Try this one. Also think about what would happen if a

different state is the accept state.

[State diagram: states s1, s2, s3, s4, with transitions as in the table.]

In other words

From state   Input 0   Input 1
s1           s4        s2
s2           s2        s3
s3           s4        s4
s4           s4        s4

CS 461 – Sept. 9

• Section 1.3 – Regular expressions

Examples

Equivalent to FA’s.

regular expression → FA (straightforward)

FA → regular expression (* major skill)

This one is more ambitious… Think about L and L’.

[State diagram: seven states s1 through s7, with transitions as in the table.]

State   0    1
s1      s2   s2
s2      s3   s7
s3      s7   s4
s4      s5   s7
s5      s6   s6
s6      s1   s7
s7      s7   s7

Need help

• Often an FA is too complex to figure out the language just by looking at it.

• Need a general procedure!

[State diagram: s1 goes to s2 on 0 and loops on 1; s2 goes to s1 on 0 and loops on 1.]

A first guess: 01* (01*0) ????? Nope!

Actually, it should be 1*0(1* + 01*0)*

State   0    1
s1      s2   s1
s2      s1   s2

Procedure

• Step-by-step transformation of FA, taking away

one state at a time until we just have one start

and one happy.

• We’ll label edges with regular expressions.

– So our FA will be a “generalized” NFA

• Step 1: Add a new start and new happy state.

• Step 2: For each of the other states:

– Pick one to be ripped out.

– Recalculate edges (except to start or from happy)

taking this removal into account.

Basic idea

Suppose we are getting rid of state X.

To go from A to B, you can either get there directly, or via X. We would label edge from A to B as:

δ(A,B) = δ(A,B) + δ(A,X) δ(X,X)* δ(X,B)

Notes:

1. If X has no edge to itself, just say ε in place of δ(X,X)*.

2. If no edge AX or XB, we say Ø and thus no need to recalculate AB.

[Diagram: states A and B, with X between them.]

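Example

• First create new start and happy states.

[Original machine: s1 goes to s2 on 0 and loops on 1; s2 goes to s1 on 0 and loops on 1.]

• New machine below…

[New machine: start goes to s1 on ε; s1 and s2 as in the original; s2 goes to end on ε.]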

In other words

Original machine:

State   0    1
s1      s2   s1
s2      s1   s2

New machine:

State   0    1    ε
start   -    -    s1
s1      s2   s1   -
s2      s1   s2   end
end     -    -    -

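Get rid of s1

[Before: start goes to s1 on ε; s1 goes to s2 on 0 and loops on 1; s2 goes to s1 on 0 and loops on 1; s2 goes to end on ε.]

[After: start goes to s2 on ???; s2 loops on ???; s2 goes to end on ε.]

• Need to compute δ(start,s2) and δ(s2,s2).

δ(start,s2) = Ø + ε1*0, which simplifies to 1*0

δ(s2, s2) = 1 + 01*0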

In other words

State   0    1    ε
start   -    -    s1
s1      s2   s1   -
s2      s1   s2   end
end     -    -    -

Becomes:

State   1*0   1+01*0   ε
start   s2    -        -
s2      -     s2       end
end     -     -        -

Get rid of s2

[Before: start goes to s2 on 1*0; s2 loops on 1 + 01*0; s2 goes to end on ε.]

[After: start goes to end on ???.]

• Calculate δ(start, end) = Ø + 1*0 (1+01*0)* ε

which simplifies to 1*0 (1 + 01*0)*.
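A result like this can be sanity-checked by brute force: compare the regular expression against the original two-state machine (s1 start, s2 accept) on every short bit string. The sketch uses Python's `re` syntax, where `+` on the slides becomes `|`; it is a spot check up to a length bound, not a proof.

```python
import re
from itertools import product

# Original machine: s1 -0-> s2, s1 -1-> s1, s2 -0-> s1, s2 -1-> s2.
delta = {("s1", "0"): "s2", ("s1", "1"): "s1",
         ("s2", "0"): "s1", ("s2", "1"): "s2"}

def dfa_accepts(word):
    state = "s1"
    for c in word:
        state = delta[(state, c)]
    return state == "s2"

rx = re.compile(r"1*0(1|01*0)*")   # 1*0 (1 + 01*0)* in slide notation

def agree_up_to(max_len):
    """True if the regex and the FA agree on every bit string of length <= max_len."""
    for n in range(max_len + 1):
        for bits in product("01", repeat=n):
            w = "".join(bits)
            if dfa_accepts(w) != bool(rx.fullmatch(w)):
                return False
    return True

print(agree_up_to(10))
```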

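Example #2

[State diagram: state 1 loops on a and goes to state 2 on b; state 2 loops on a, b.]

• Create new start & happy states.

• What happens when we get rid of state 1?

[New machine: start goes to 1 on ε; 1 and 2 as in the original; 2 goes to end on ε.]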

In other words

State   a   b   ε
start   -   -   1
1       1   2   -
2       2   2   end
end     -   -   -

Get rid of state 1

δ(start, 2) = Ø + ε a*b = a*b

δ(start, end) = Ø + ε a* Ø = Ø

δ(2, 2) = a + b + Ø… = a + b

δ(2, end) = ε

continued

[Remaining machine: start goes to 2 on a*b; 2 loops on a+b; 2 goes to end on ε.]

Finally we can get rid of state 2 to obtain:

a*b (a + b)*

CS 461 – Sept. 12

• Simplifying FA’s.

– Let’s explore the meaning of “state”. After all if we

need to simplify, this means we have too many states.

– Myhill-Nerode theorem

– Handout on simplifying

What is a state?

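Example: 0*10*

[State diagram: the first state (“need 1”) loops on 0 and goes to the second state on 1; the second state loops on 0 and goes to the third state on 1; the third state loops on 0, 1.]

First state (“need 1”): state = 0*

Second state: state = 0*10*

Third state: state = 0*10*1 (0 + 1)*

• No matter what next input symbol is, all words in same state act alike.

x, y in same state ⇒ ∀z, xz is in same state as yz

• A state is a collection of words that react to input in the same way.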

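Equivalent states

[State diagram: states “even” and “odd” swap on 0 and both go to a third state on 1; the third state loops on 0 and goes to a fourth state on 1; the fourth state loops on 0, 1.]

• Whether we’ve seen even or odd number of 0’s shouldn’t matter. Only concern is ultimate outcome: will string be accepted or not?

• Words x and y should be in same state if ∀z, xz and yz have the same outcome.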

In other words

• The 2 machines are equivalent.

From state   Input 0   Input 1
Need 1       Need 1    Good
Good         Good      Bad
Bad          Bad       Bad

From state   Input 0   Input 1
Even         Odd       Good
Odd          Even      Good
Good         Good      Bad
Bad          Bad       Bad

Myhill-Nerode theorem

• Basis for simplification algorithm.

• Also gives condition for a set to be regular.

– i.e. infinite # states not allowed.

3 parts to theorem:

1. For any language L, we have equivalence relation R: xRy if ∀z, xz and yz have the same outcome.

2. If L is regular, # of equivalence classes is finite.

3. If # equivalence classes finite, language is regular.

Proof (1)

For any language L, we have equivalence relation R: xRy if ∀z, xz and yz have the same outcome.

• To show a relation is an equivalence relation, must show it is reflexive, symmetric and transitive.

• Reflexive: xz and xz have same outcome. (i.e. both are accepted, or both are rejected.)

• Symmetric. If xz has same outcome as yz, then yz has same outcome as xz.

• Transitive. If xz has same outcome as yz, and yz has same outcome as tz, then xz has same outcome as tz.

All 3 are obviously correct.

Proof (2)

If L is regular, # of equivalence classes is finite.

• Regular means L is recognized by some FA.

• Thus, # of states is finite.

• It turns out that (# equiv classes) <= (# states)

Why? Because 2 states may be “equivalent.”

More importantly: # classes can’t exceed # states. Proof: Assume # classes > # states. Then we have a state representing 2 classes. In other words, x and y are in the same state but x is not related to y. Meaning that ∃z where xz, yz don’t have same fate but travel thru the same states! This makes no sense, so we have a contradiction.

• Since (# equiv classes) <= (# states) and # states is

finite, we have that # equiv classes is finite.

Proof (3)

If # equivalence classes finite, language is regular.

• We prove regularity by describing how to construct an

FA.

• Class containing ε would be our start state.

• For each class: consider 2 words x and y.

– x0 and y0 have to be in the same class.

– x1 and y1 have to be in the same class.

– From this observation, we can draw appropriate transitions.

– Since states are being derived from classes, the number of states is also finite.

• Accept states are classes containing words in L.

Example

• L = { all bit strings with exactly two 1’s } has 4

equivalence classes

[ ε ], [ 1 ], [ 11 ], [ 111 ]

• Let’s consider the class [ 11 ]. This includes

words such as 11 and 0101.

– If we append a 0, we get 110 and 01010. These

words also belong in [ 11 ].

– If we append a 1, we get 111 and 01011. These

words belong in the class [ 111 ].

• This is the same thought process we use when

creating FA’s transitions anyway.

Learning from theorem

• There exist non-regular languages! It happens if

# of equivalence classes is infinite.

– Soon we’ll discuss another method for determining

non-regularity that’s a little easier.

• Part 3 of proof tells us that there is an FA with

the minimum number of states (states =

equivalence classes).

– See simplification algorithm handout.

Example

Is { 0^n 1^n } regular? This is the language ε, 01, 0011, 000111, etc.

[State diagram: a chain of states joined by 0-transitions, one state per count of 0’s.]

no 0’s: c1 = { ε }

one 0: c2 = { 0 }

two 0’s: c3 = { 00 }

Etc.: c4 = { 000 }

• Should x=0 and y=00 be in the same class? No! Consider z = 1. Then xz = 01 and yz = 001. Different outcome!

• ∞ # classes ⇒ Can’t draw FA.

• Equivalence classes are usually hard to conceive of, so

we’ll rely more on a different way to show languages not

regular.

CS 461 – Sept. 14

• Practice simplifying FA’s

• Section 1.4 – Pumping lemma

– Purpose is to show that a language is not regular

– Proof

– Examples

– Can also help to determine if a language is finite/infinite.

Non-regular languages

• Means there is no such FA for language.

• They do exist! How can a language be nonregular?

– Not because language is too big, because the largest

possible language (0 + 1)* is regular.

– Non-regular means the “shape” of the language is

defective. It requires an infinite number of states.

Finite # of states can’t precisely define the language.

You’ll discover that a finite # of states winds up

defining something else.

[Diagram: the finite languages sit inside the regular languages.]

Essential idea

The pumping lemma basically says this:

• If L is regular, you should be able to take almost any word in L, and repeat some of its characters in order to produce longer words in L.

– Incidentally, you should also be able to take away

some of its characters to produce a shorter word.

– Degenerate case: finite sets.

– “Almost any word”? Some words are not interesting

like ε and 0.

Motivation

• Consider language { 0^n 1^n }.

• Suppose it’s regular. Then there must be some FA for it.

– How many states does it have? Let’s pick a number like 95.

– Then, how would we process the word 0^97 1^97? While reading the 0s, we have to visit the same state twice! In other words, we go back to a previous state, for example from s80 → s81 → s82 → s80, and then continue on to accept state.

– There must be another word that leaves out the s81 and s82 steps, such as 0^94 1^97, or does them repeatedly. But those words are not in L! Contradiction implies that L is not regular.

More generally…

• Suppose L is regular and M is FA recognizing it.

Let p = # of states.

• Consider a word s, |s| ≥ p. We take at least p transitions, so we visit at least p+1 states. So, we must visit some state twice while reading the first p symbols! Suppose we call this state q.

• Let s = xyz, where x is part of string up to first time we visit q, and y is the part of string between our 2 visits.

• Other words of the form xy^i z are also accepted by M. In other words, we can loop as many times as we want, even 0 times.

A litmus test

• The pumping lemma is a guaranteed property of

any regular language.

• Analogy…

– If it’s a duck, then it will quack.

– If it’s a cow, then it will moo.

• Contrapositive proves an imposter!

– If it doesn’t quack, it’s not a duck.

– If it doesn’t moo, it’s not a cow.

• However, can’t be used to prove regularity.

Pumping lemma

• See handout

– Formal statement (if it’s a duck…)

– Contrapositive used to show nonregularity

• If language is regular, then there is some part of

any word we can “pump.”

• If we have a language we suspect is nonregular, use contrapositive!

Proving non-regularity

• It’s like a 2-player game…

– Adversary picks secret number p.

– We select any string s we want, in terms of p (e.g. 0^p 1^p)

– Adversary will break up s into xyz subject to constraints.

• The place to pump has length at least 1.

• The place to pump appears in the first p positions.

– Be ready to show that xy^i z won’t be in language for some i.
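For small p the game can be played out exhaustively. The sketch below takes s = 0^p 1^p, tries every split xyz the adversary is allowed (|xy| ≤ p, |y| ≥ 1), and checks that pumping always leaves the language { 0^n 1^n }. The function names are made up for the illustration, and checking small p is only a sanity check, not the proof itself.

```python
def in_L(w):
    """Membership in L = { 0^n 1^n : n >= 0 }."""
    n = len(w) // 2
    return w == "0" * n + "1" * n

def every_split_fails(p, i=2):
    """True if for every legal split of 0^p 1^p, the pumped word x y^i z is NOT in L."""
    s = "0" * p + "1" * p
    for xy_len in range(1, p + 1):          # y lies within the first p positions
        for x_len in range(0, xy_len):      # so y has length at least 1
            x, y, z = s[:x_len], s[x_len:xy_len], s[xy_len:]
            if in_L(x + y * i + z):
                return False                # adversary found a split that survives
    return True

print(all(every_split_fails(p) for p in range(1, 8)))
```

Because y falls inside the first p symbols it consists only of 0s, so pumping changes the number of 0s but not the number of 1s.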

CS 461 – Sept. 16

• Review Pumping lemma

– How do we show language non-regular?

• Applications of FA:

– Scanning (part of compilation)

– Searching for text, using regular expression

Example languages

• Bit strings that are palindromes

• Bit strings with equal # of 0s and 1s.

• More 0s than 1s.

• { 0^n : n is a perfect square }

• { 0^n : n is prime }

• { 0^(3n+2) : n ≥ 0 }

• { 0^i 1^j : i is even or i ≥ j }

Notice that regular sets can’t handle counting or nonlinear patterns.

Consider complement

Show L = { 0^i 1^j : i is even or i ≥ j } is not regular.

• Let’s use some set theory…

– L regular iff L’ regular

• What is L’ ?

– Hint: Need to consider (0 + 1)* - 0*1*.

– Language L’ has “and” instead of “or”, so easier to

produce a word not in language.

continued

L = { 0^i 1^j : i is even or i ≥ j }

Then L’ is all words with:

• The definition turned around. Let A = { 0^i 1^j : i is odd and i < j }.

• Plus all words not of the form 0*1*. Let B = (0 + 1)* - 0*1* = 0*11*0(0 + 1)*.

Then, L’ = A union B.

Let s = 0^(2p+1) 1^(2p+2) and i = 2 and see how it works in pumping lemma…

CS 461 – Sept. 19

• Last word on finite automata…

– Scanning tokens in a compiler

– How do we implement a “state” ?

• Chapter 2 introduces the 2nd model of

computation

Application of FA

• Compilers! When a compiler looks at your

program, it does the following:

– Lexical analysis (scanning: break up into tokens)

– Syntax analysis

– Semantic analysis

– Code generation

– Optimization

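[State diagram for the scanner after it reads “/”: if the next char is *, it is a comment; if it is =, we found “/=”; if it is a number, this is division by a number.]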

Chapter 2

• How can we represent a language?

– English description √

– FA drawing (state diagram) √

– Transition table √

– Regular expression √

– Recursive definition!

• A context-free grammar (CFG) is a compact way

of writing a recursive definition.

Expr vs. grammar

• Basic idea to creating a grammar:

– Base case: what is smallest word in language?

(Sometimes we need > 1 base case.)

– Recursive case: how to create bigger words?

What do we append to the beginning or end of word

to create more words?

• Ex. 101* in the form of a grammar:

– Shortest word is 10.

– Create more words by appending 1s to the right end.

S → 10

S → S1

example

• Let’s try (00)*10 as a grammar:

– Shortest word is 10.

– Bigger words? Append 00 to the front.

S → 10

S → 00S

– These rules can be combined to say S → 10 | 00S

examples

• Let’s try 01*0(10)*.

– Uh-oh, how do you pump in the middle? If you need

concatenation, define the language in parts. The first

part will handle 01* and the second part 0(10)*.

S → AB

A → 0 | A1

B → 0 | B10

• (10*1)* could be written this way:

S → ε | SAB

A → 1 | A0

B → 1

Formal definition

• A context-free grammar has 3 things

– Set of terminal symbols (alphabet plus ε)

– Set of nonterminal symbols (i.e. variables in

grammar)

– Set of rules or “productions” for our recursive

definitions

• The rules have the following format:

nonterminal → concat of 1+ terminals/nonterminals

• Often we have several rules for the same

nonterminal, so they are joined by “|” meaning

“or.”

More power

• CFGs are more powerful than FAs or regular

expressions because they allow us to define

non-regular languages!

• For example, 0*1* and { 0^n 1^n }

Grammar for 0*1*:

S → AB

A → ε | A0

B → ε | B1

Grammar for { 0^n 1^n }:

S → ε | 0S1

• Wow, maybe non-regular languages are easier!

• With CFGs, we now have a 2nd class of

languages, the context-free languages.

Models

                   #1                      #2
Model name         Finite automaton (FA)   Pushdown automaton (PDA)
Recognizes what?   Regular languages       Context-free languages
Text rep’n         Regular expression      Context-free grammar

2nd model

• Encompasses regular & non-regular languages.

• PDA is like FA, but also has a stack.

– More on PDAs in section 2.2 later.

• Section 2.1: CFGs

– Important because this is how programming

languages are defined.

– Goals:

1. Given a grammar, can we generate words;

2. Given a set, can we write a grammar for it.

Deriving words

• Here is a CFG. What is it defining?

S → AB

A → ε | 1A

B → ε | 1B0

More practice

• { 0^i 1^j : i > j }

• 0*11*0(0 + 1)*

• (000)*11

• 1*0 (1 + 01*0)*

• (a + ba) b*a

• [bb + (a + ba) b*a]*

CS 461 – Sept. 21

Context-free grammars

• Handouts

– Hints on how to create grammar

– Examples of generating words from a grammar

• Writing a grammar for a language

• Derivations

Practice

Should be able to write a grammar for any regular

set, and some simple non-regular cases:

• { 0^i 1^j : i > j }

• 0*11*0(0 + 1)*

• (000)*11

• 1*0 (1 + 01*0)*

• (a + ba) b*a

• [bb + (a + ba) b*a]*

Deriving words

• Here is a CFG. What is it defining?

S → AB

A → ε | 1A

B → ε | 1B0

• Handouts: Generating random strings using a

grammar.

Old Mother Hubbard

She went to the ____P____

to buy him a […….T……..

but when she came back,

he was _____D_____ the …....V…….]

T and V have to rhyme,

So select them at the same time.

CS 461 – Sept. 23

Context-free grammars

• Derivations

• Ambiguity

• Proving correctness

Derivations

• A sequence of steps showing how a word is

generated using grammar rules.

• Drawing a diagram or tree can help.

• Example: derivation of 1110 from this grammar:

S → AB

A → ε | 1A

B → ε | 1B0

Derivation tree (S branches into A and B):

S
├─ A ⇒ 1A ⇒ 11A ⇒ 11ε = 11
└─ B ⇒ 1B0 ⇒ 1ε0 = 10

Derivations, cont’d

• There are several ways to express a derivation

– Tree

– Leftmost derivation = as you create the string, always replace the leftmost variable. Example:

S ⇒ AB ⇒ 1AB ⇒ 11AB ⇒ 11B ⇒ 111B0 ⇒ 1110

– Rightmost derivation

• Often, grammars are ambiguous

– For some string, 2+ derivation trees. Or,

equivalently: 2+ leftmost derivations.

– Example?

More examples

• Even number of 0’s

• Words of form { 0^i 1^j } where j is constrained.

i ≤ j ≤ 2i (not to be confused with { 0^n 1^2n } )

i ≤ j ≤ 2i + 3

i ≤ j ≤ 1.5i

• Next topic: how do we show that our grammar is

correct?

Correctness

• Given a language L and a grammar G, how do we know if L = L(G)? Must show:

1. L ⊆ L(G). All words in L can be derived by the grammar. Tell how to derive the words.

2. L(G) ⊆ L. All words derived by the grammar are in L. Use induction.

First example

• Show that S → ε | 0S1 is a CFG for the language { 0^n 1^n }

• Step #1: Any word in 0^n 1^n can be generated by the grammar. We explain the derivation.

– Apply the rule S → 0S1 n times

– Finally, apply the ε rule.

• Step #2: All words generated by the grammar are of the form 0^n 1^n.

– Induction on the number of times we use a rule.

– S → ε for the basis, and S → 0S1 for the inductive step.

Second example

L is { even number of 0s } and G is

S → ε | S1 | S0S0

Step #1. Suppose w ∈ L. How can G generate w?

If w = ε, we’re done.

Do the following until w is ε:

• If w ends with 1s, take them off by virtue of “S1”.

• Now w ends with 0, so take 2 0’s away by virtue of “S0S0”. We know there must be 2 0’s in w because w had an even number to start with.

Illustration

S → ε | S1 | S0S0

Let’s see how we can derive “010100”:

• The last 2 0s: S ⇒ S0S0 ⇒ ε0ε0 = 00

• 0101 is 010 + 1

010 is S ⇒ S0S0 ⇒ ε0S10 ⇒ ε0ε10 = 010

1 is S ⇒ S1 ⇒ ε1 = 1

• We can reconstruct derivation from beginning in

form of a tree.

L(G) is in L

S → ε | S1 | S0S0

Step #2: Need to show all generated words are in

L.

Base case: ε is in L. It has even number of 0s.

Recursive case. Let w be a word generated by the

grammar. Assume it has even # 0s. We can

create a larger word by applying rule “S1” or

“S0S0”. S1 adds no more 0s. S0S0 adds two

0s. Either way the # of 0s stays even.
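Both directions of the proof can be sanity-checked by brute force on short words. The sketch below is not a proof, only a check up to a chosen length; the function names and the fixpoint construction are assumptions made for illustration.

```python
from itertools import product


def generated_words(max_len):
    """All words of length <= max_len derivable from S -> ε | S1 | S0S0."""
    words = {""}  # rule S -> ε
    while True:
        new = set(words)
        for x in words:
            if len(x) + 1 <= max_len:
                new.add(x + "1")                # rule S -> S1
            for y in words:
                if len(x) + len(y) + 2 <= max_len:
                    new.add(x + "0" + y + "0")  # rule S -> S0S0
        if new == words:                        # nothing new: fixpoint reached
            return words
        words = new


def even_zero_words(max_len):
    """All binary words of length <= max_len with an even number of 0s."""
    return {"".join(bits)
            for n in range(max_len + 1)
            for bits in product("01", repeat=n)
            if bits.count("0") % 2 == 0}
```

If `generated_words(n) == even_zero_words(n)` for small n, the grammar and the language agree at least on all words up to that length.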

Extra example

• Let’s show that this grammar G

S → ε | 0S1 | 00S111

generates the language L = { 0^i 1^j | i ≤ j ≤ 1.5i }

• Step #1: any word in L can be generated

– This is the hard part, so let’s motivate the proof with

an example or two.

• Step #2: all words generated are in L

– Just induction again.

L is in L(G)

S → ε | 0S1 | 00S111

• How could we generate 0^11 1^16?

– We have 5 extra 1s. So we use rule 00S111 five times.

– Undoing these steps, # 0s = 11 − 5*2 = 1 and # 1s = 16 − 5*3 = 1. Then we use 0S1 once and we are left with ε.

• Okay, how about 0^12 1^16?

– We have 4 extra 1s, so use rule 00S111 four times.

– Undoing these steps, # 0s = 12 − 4*2 = 4 and # 1s = 16 − 4*3 = 4. They match! So use “0S1” 4 times.

Thinking out loud…

S → ε | 0S1 | 00S111

Let w = 0^i 1^j ∈ L. In other words, i ≤ j ≤ 1.5i

Consider the number j – i. This is the number of

times to apply rule #3 (00S111).

Note that using rule #3 (j – i) times will account for

2(j – i) zeros.

Then apply rule #2 (0S1) the “appropriate number”

of times. How many? Well, we want i 0’s and

we’ve already got 2(j – i), so we need the

difference: i – 2(j – i) = 3i – 2j.

Finishing step 1

S → ε | 0S1 | 00S111

Let w = 0^i 1^j ∈ L. In other words, i ≤ j ≤ 1.5i

Use rule #1 once to start with empty word.

Use rule #3 (j – i) times.

Use rule #2 (3i – 2j) times.

Total # 0s = 3i – 2j + 2(j – i) = i

Total # 1s = 3i – 2j + 3(j – i) = j

Thus, the word can be generated.
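The rule counts above can be checked mechanically. This is a minimal sketch; the names `rule_counts` and `derive` are made up for illustration, and the rules are applied outermost first, which yields the same word because all 0s end up on the left and all 1s on the right.

```python
def rule_counts(i, j):
    """Uses of rule #3 (00S111) and rule #2 (0S1) needed for 0^i 1^j."""
    if not (i <= j and 2 * j <= 3 * i):      # i <= j <= 1.5 i, in integers
        raise ValueError("0^i 1^j is not in L")
    return j - i, 3 * i - 2 * j


def derive(i, j):
    """Rewrite the sentential form, starting from S, until no S is left."""
    uses_rule3, uses_rule2 = rule_counts(i, j)
    form = "S"
    for _ in range(uses_rule3):
        form = form.replace("S", "00S111")   # rule #3
    for _ in range(uses_rule2):
        form = form.replace("S", "0S1")      # rule #2
    return form.replace("S", "")             # rule #1: S -> ε
```

For the two examples on the earlier slide, `rule_counts(11, 16)` gives `(5, 1)` and `rule_counts(12, 16)` gives `(4, 4)`.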

L(G) is in L

S → ε | 0S1 | 00S111

Now the easy part. Need to show that when we apply any rule, we preserve: i ≤ j ≤ 1.5i

Base case: ε has no 0s or 1s.

0 ≤ 0 ≤ 1.5*0 √

Recursive case. Let w be generated by the grammar with i 0s and j 1s satisfying i ≤ j ≤ 1.5i.

If we apply either rule 0S1 or 00S111, we can show

(i + 1) ≤ (j + 1) ≤ 1.5(i + 1)

(i + 2) ≤ (j + 3) ≤ 1.5(i + 2)

(Need to work out arithmetic.)
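One way to work out the arithmetic, assuming the induction hypothesis i ≤ j ≤ 1.5i, is sketched here:

```latex
\begin{align*}
\text{Rule } 0S1:\quad
  & i \le j \;\Rightarrow\; i + 1 \le j + 1, \\
  & j \le 1.5i \;\Rightarrow\; j + 1 \le 1.5i + 1 \le 1.5i + 1.5 = 1.5(i + 1). \\[4pt]
\text{Rule } 00S111:\quad
  & i \le j \;\Rightarrow\; i + 2 \le j + 2 \le j + 3, \\
  & j \le 1.5i \;\Rightarrow\; j + 3 \le 1.5i + 3 = 1.5(i + 2).
\end{align*}
```

Note that rule 00S111 keeps the upper bound tight (3 = 1.5 × 2), while rule 0S1 leaves slack of 0.5.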

Think about…

• Can you write a CFG for

{ 0^i 1^j | 0.5i ≤ j ≤ 1.5i } ?

in other words, the ratio of 1s to 0s must be

between ½ and 3/2.

CS 461 – Sept. 26

• CFG correctness

• Section 2.2 – Pushdown Automata

PDAs

What is a Pushdown Automaton?

• Like an FA, but PDA has a stack

– Finite number of states

– Stack can grow to unlimited depth

• Transition function often non-deterministic

• 2 flavors

– Accept by happy state

– Accept by empty stack (this one has fewer states)

• Can be tedious to draw, so instead give a table.

– What’s new is saying what to do with stack.

Formal definition

• A PDA has 6 things.

– Same five from FA, plus:

– Stack alphabet (can be different from Σ)

• Transition function

δ(state, tos, input) = (push/pop, new state)

both are optional

{ 0^n 1^n }

• While reading 0s, push them.

• If you read 1, change state. Then, as you

read 1s, pop 0s off the stack.

• Watch out for bad input!

– Unspecified transition → crash (reject)

• Now, let’s write this in the form of a table.

PDA δ for { 0^n 1^n }

State        Tos            Input   Action
Reading 0    (don’t care)   0       Push 0
Reading 0    (don’t care)   1       Pop & go to state “reading 1”
Reading 1    0              0       Crash
Reading 1    0              1       Pop
Reading 1    Empty          0       Crash
Reading 1    Empty          1       Crash

Notes:

Action depends on input symbol AND what’s on top of stack.

Action includes manipulating stack AND/OR changing state.

CS 461 – Sept. 28

• Section 2.2 – Pushdown Automata

– { 0^n 1^n }

– Palindromes

– Equal

• Next: Converting CFG → PDA

{ 0^n 1^n }

• While reading 0s, push them.

• If you read 1, change state. Then, as you

read 1s, pop 0s off the stack.

• Watch out for bad input!

– Unspecified transition → crash (reject)

• Now, let’s write this in the form of a table.

PDA δ for { 0^n 1^n }

State        Tos            Input   Action
Reading 0    (don’t care)   0       Push 0
Reading 0    (don’t care)   1       Pop & go to state “reading 1”
Reading 1    0              0       Crash
Reading 1    0              1       Pop
Reading 1    Empty          0       Crash
Reading 1    Empty          1       Crash

Notes:

Action depends on input symbol AND what’s on top of stack.

Action includes manipulating stack AND/OR changing state.
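The table can be turned into a few lines of code. This is a sketch under stated assumptions: acceptance is by empty stack at the end of the input, popping from an empty stack counts as a crash, and the function name `accepts_0n1n` is made up for illustration.

```python
def accepts_0n1n(word):
    """Run the PDA from the table; accept if the stack is empty at the end."""
    state, stack = "reading 0", []
    for symbol in word:
        if state == "reading 0" and symbol == "0":
            stack.append("0")        # push 0
        elif state == "reading 0" and symbol == "1" and stack:
            stack.pop()              # pop & go to state "reading 1"
            state = "reading 1"
        elif state == "reading 1" and symbol == "1" and stack:
            stack.pop()              # one 0 comes off for each 1
        else:
            return False             # unspecified transition: crash (reject)
    return not stack
```

Inputs such as `"0011"` are accepted, while `"001"`, `"011"` and `"0101"` either crash or finish with 0s left on the stack.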

Example #2

• { w # w^R }

(easier form of palindrome)

• Let’s design a PDA that accepts its input by

empty stack, as before.

• Think about: state, top-of-stack, input, action

PDA δ for { w # w^R }

State       Tos            Input   Action
Before #    (don’t care)   0       Push 0
Before #    (don’t care)   1       Push 1
Before #    (don’t care)   #       Go to “after #”
After #     0              0       Pop
After #     0              1       Crash
After #     0              #       Crash
After #     1              0       Crash
After #     1              1       Pop
After #     1              #       Crash

Palindrome PDA?

State       Tos            Input   Action
Before #    (don’t care)   0       Push 0
Before #    (don’t care)   1       Push 1
Before #    (don’t care)   #       Go to “after #”
After #     0              0       Pop
After #     0              1       Crash
After #     0              #       Crash
After #     1              0       Crash
After #     1              1       Pop
After #     1              #       Crash

Changes needed:

Non-deterministically go to “after #” when you push 0 or 1.

Also, non-deterministically don’t push, in case we are dealing with an odd-length palindrome!

Other examples

Think about these

• “equal” language

– How many states do we need? …

• More 1s than 0s.

• Twice as many 1s as 0s.

– Hint: think of the 0s as counting double.

Equal PDA

State              Tos   Input   Action
(Just one state)   ε     0       Push 0
(Just one state)   ε     1       Push 1
(Just one state)   0     0       Push 0
(Just one state)   0     1       Pop
(Just one state)   1     0       Pop
(Just one state)   1     1       Push 1
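The one-state “equal” PDA is short enough to simulate directly. A minimal sketch, assuming acceptance by empty stack; the function name `accepts_equal` is hypothetical.

```python
def accepts_equal(word):
    """One-state PDA: the stack holds whichever symbol is currently in surplus."""
    stack = []
    for symbol in word:
        if symbol not in ("0", "1"):
            return False          # not a binary string
        if stack and stack[-1] != symbol:
            stack.pop()           # opposite symbol on top: cancel one out
        else:
            stack.append(symbol)  # empty stack or same symbol: push
    return not stack              # empty stack means equal counts
```

The stack never mixes 0s and 1s, which is why a single state is enough.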

CS 461 – Oct. 3

• Converting a CFG into a PDA

• Union, intersection, complement of CFL’s

• Pumping lemma for CFL’s

CFG → PDA

Algorithm described in book

• Have state 1 push S on stack, and go to state 2.

• State 2 actions:

– (Note that any symbol in grammar could be tos, and

any terminal could be input.)

– When tos is variable, ignore input. Action is to replace variable with its rules on stack.

• Multiple rules → non-determinism

• Push symbols in reverse order, so that the leftmost symbol of the rule ends up on top.

• Push ε means leave stack alone.

– When tos is terminal, need action for when input = tos: just pop it. Don’t need action for !=, just let the non-deterministic instance disappear.

Example:

S → AB
A → ε | 1A2
B → ε | B3

State   Tos      Input    Action
1       ignore   ignore   Push S, goto 2
2       S        ignore   Pop S, push AB
2       A        ignore   Pop A   or   Pop A, push 1A2
2       B        ignore   Pop B   or   Pop B, push B3
2       1        1        Pop
2       2        2        Pop
2       3        3        Pop

Notice how the grammar rules are encoded into the machine!

Let’s trace 1122333

Stack contents after each step (top of stack at the left):

S
A B           (S → AB)
1 A 2 B       (A → 1A2)
A 2 B         (read 1, pop)
1 A 2 2 B     (A → 1A2)
A 2 2 B       (read 1, pop)
2 2 B         (A → ε)
2 B           (read 2, pop)
B             (read 2, pop)
B 3           (B → B3)
B 3 3         (B → B3)
B 3 3 3       (B → B3)
3 3 3         (B → ε)
3 3           (read 3, pop)
3             (read 3, pop)
(empty)       (read 3, pop)

Combining CFLs

• Let’s write grammars for

L1 = { 1^i 2^i 3^j }

and

L2 = { 1^i 2^j 3^j }

• Grammar for L1 U L2 ? Simple technique.

• How about ∩ ?

L1 ∩ L2 = { 1^n 2^n 3^n }

Soon, we’ll prove this is not CFL.

What can we conclude?

• Complement? Remember: A ∩ B = (A’ U B’)’

Pumping lemma

L is a CFL implies:

There is a p,

such that for any string s ∈ L with |s| >= p

we can break up s into 5 parts uvxyz:

| v y | >= 1

| v x y | <= p

and u v^i x y^i z ∈ L for every i >= 0.

• How does this differ from the first pumping

lemma?

In other words

• If L is a CFL, then for practically any word w in L,

we should be able to find 2 substrings within w

located near each other that you can

simultaneously pump in order to create more

words in L.

• Contrapositive: If w is a word in L, and we can

always create a word not in L by simultaneously

pumping any two substrings in w that are near

each other, then L is not a CFL.

Gist

• A string can grow in 2 places.

– When we write recursive rules, we can add symbols

to left or right of a variable.

• Ex.

S → #A#

A → ε | 1A2

Look at derivation of: #111…222#

• Even a complex rule like S → S1S1S2 can be “simplified”, and still only need 2 places to grow the string.

– This is because any CFG can be written in such a way that there are no more than 2 symbols on the right side of →.

Example

• L = { 1^n 2^n } should satisfy the pumping lemma.

Let p = 2. Strings in L of length >= 2 are:

{ 12, 1122, 111222, … }. All these words have

both a 1 and a 2, which we can pump.

Just make sure the 2 places to pump are within

p symbols of each other. So let’s say they are in

the middle.

u = 11111…1     v = 1     x = ε     y = 2     z = 22222….2

Could p = 1 work?

CS 461 – Oct. 5

• Pumping lemma #2

– Understanding

– Use to show a language is not a CFL

• Next: Applications of CFLs

– Expression grammars and Compiling

Example 2

• { w # w^R }. Think about where we can grow.

Let p = 3. All strings of length 3 or more look like w # w^R where | w | >= 1.

As a technicality, would other values of p work?

Non-CFLs

• It’s possible for a language to be non-CFL!

Typically this will be a language that must

simultaneously pump in 3+ places.

• Ex. How could we design a PDA for { 1^n 2^n 3^n }?

Adversary’s Revenge

• In Game #1, how did we win? Ex. { 0^n 1^n }.

– Adversary chose p.

– We chose s.

– Adversary broke up s = xyz subject to constraints.

– We were always able to pump and find words outside L.

• Game #2 strategy

– Adversary’s constraints looser. The middle 3 parts

have to be within p of each other. Can be anywhere

in the string, not just in first p symbols of word.

{ 1^n 2^n 3^n }

• Let p be any number.

• Choose s = 1^p 2^p 3^p.

• Let s = uvxyz such that |vxy| <= p and |vy| >= 1.

Where can v and y be?

– All 1’s

– All 2’s

– All 3’s

– Straddling 1’s and 2’s

– Straddling 2’s and 3’s

• In every case, can we find a word not in L?
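For small p, the case analysis can be checked exhaustively by trying every legal split. The sketch below only tests the exponents i = 0 and i = 2, which is enough to refute a split; all function names are assumptions made for illustration.

```python
def in_123(w):
    """Membership in { 1^n 2^n 3^n }."""
    n = len(w) // 3
    return w == "1" * n + "2" * n + "3" * n


def splits(s, p):
    """Yield every (u, v, x, y, z) with s = uvxyz, |vxy| <= p and |vy| >= 1."""
    n = len(s)
    for a in range(n + 1):                       # u = s[:a]
        for d in range(a, min(a + p, n) + 1):    # vxy = s[a:d]
            for b in range(a, d + 1):            # v = s[a:b]
                for c in range(b, d + 1):        # x = s[b:c], y = s[c:d]
                    if (b - a) + (d - c) >= 1:
                        yield s[:a], s[a:b], s[b:c], s[c:d], s[d:]


def some_split_pumps(s, p, member, exponents=(0, 2)):
    """True if at least one legal split stays in the language for all exponents."""
    return any(all(member(u + v * i + x + y * i + z) for i in exponents)
               for u, v, x, y, z in splits(s, p))
```

For s = 1^p 2^p 3^p, `some_split_pumps(s, p, in_123)` comes out false for every small p tried: v x y can touch at most two of the three blocks, so pumping always unbalances the counts.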

More examples

• { 1^i 2^j 3^k : i < j < k }

– What should we choose as s?

– How can the word be split up?

• { ww }

– Let s = 0^p 1^p 0^p 1^p. Where can v & y be?

– Same section of 0’s and 1’s.

– In neighboring sections

– Either v or y straddles a border.

CS 461 – Oct. 7

• Applications of CFLs: Compiling

• Scanning vs. parsing

• Expression grammars

– Associativity

– Precedence

• Programming language (handout)

Compiling

• Grammars are used to define programming

language and check syntax.

• Phases of a compiler

source code → scanner → stream of tokens → parser → parse tree

Scanning

• Scanner needs to know what to expect when eating your program.

– identifiers

– numbers

– strings

– comments

• Specifications for tokens can be expressed by

regular expression (or regular grammar).

• While scanning, we can be in different states,

such as inside a comment.

Parser

• Purpose is to understand structure of program.

• All programming structures can be expressed as

CFG.

• Simple example for + and –

expr → expr + digit | expr – digit | digit

digit → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

How would we derive the string 9 – 5 + 2 ?

9 – 5 + 2

expr → expr + digit | expr – digit | digit

digit → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

Leftmost derivation:

expr ⇒ expr + digit
     ⇒ expr – digit + digit
     ⇒ digit – digit + digit
     ⇒ 9 – digit + digit
     ⇒ 9 – 5 + digit
     ⇒ 9 – 5 + 2

“Parse tree”:

expr
├─ expr
│  ├─ expr
│  │  └─ digit
│  │     └─ 9
│  ├─ –
│  └─ digit
│     └─ 5
├─ +
└─ digit
   └─ 2

Left & right recursion

• What is the difference between these 2

grammars? Which one is better?

expr → expr + digit | expr – digit | digit

digit → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

expr → digit + expr | digit – expr | digit

digit → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

• Let’s try 9 – 5 + 2 on both of these. The

grammar must convey the order of operations!

• Operators may be left associative or right

associative.
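The difference between the two grammars shows up as soon as the parse tree is evaluated. In this sketch, the evaluator names and the space-separated token format are assumptions, and an ASCII "-" stands in for the minus sign. `eval_left` groups the way the left-recursive grammar does and `eval_right` the way the right-recursive one does.

```python
def eval_left(tokens):
    """expr -> expr + digit | expr - digit | digit  (groups from the left)."""
    value = int(tokens[0])
    for op, digit in zip(tokens[1::2], tokens[2::2]):
        value = value + int(digit) if op == "+" else value - int(digit)
    return value


def eval_right(tokens):
    """expr -> digit + expr | digit - expr | digit  (groups from the right)."""
    head = int(tokens[0])
    if len(tokens) == 1:
        return head
    rest = eval_right(tokens[2:])   # everything after the operator is one expr
    return head + rest if tokens[1] == "+" else head - rest
```

With `tokens = "9 - 5 + 2".split()`, the left-recursive reading gives (9 - 5) + 2 = 6, while the right-recursive reading gives 9 - (5 + 2) = 2.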

+-*/

Question:

• How do we write grammar for all 4 operators?

Can we do it this way…

expr → expr + digit | expr – digit |
       expr * digit | expr / digit |
       digit

digit → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

• BTW, we can ignore “digits” and from now on just

replace them with “num”, and understand it’s any single

number.

Precedence

• (* /) bind stronger than (+ -)

• (+ -) separate better than (* /)

• Need to break up expression into terms

– Ex. 9 – 8 * 2 + 4 / 5

– We want to say that an expression consists of “terms”

separated by + and –

– And each term consists of numbers separated by *

and /

– But which should we define first, expr or term?

Precedence (2)

• Which grammar is right?

expr → expr + term | expr – term | term

term → term * num | term / num | num

Or this one:

expr → expr * term | expr / term | term

term → term + num | term – num | num

Let’s try examples 1 + 2 * 3 and 1 * 2 + 3

Moral

• If a grammar is defining something hierarchical,

like an expression, define large groupings first.

• Lower precedence operators appear first in

grammar. (They separate better)

– Ex. * appears lower in parse tree than + because it

gets evaluated first.

• In a real programming language, there can be

more than 10 levels of precedence. C has ~15!
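A small evaluator shows how the grammar with expr on top (terms separated by + and -, numbers separated by * and /) yields the usual precedence. This is a sketch only: the left-recursive rules are realised as loops, tokens are assumed to be separated by spaces, and the function names are made up for illustration.

```python
def parse_term(tokens, pos):
    """term -> term * num | term / num | num"""
    value = float(tokens[pos])
    pos += 1
    while pos < len(tokens) and tokens[pos] in ("*", "/"):
        num = float(tokens[pos + 1])
        value = value * num if tokens[pos] == "*" else value / num
        pos += 2
    return value, pos


def parse_expr(tokens, pos=0):
    """expr -> expr + term | expr - term | term"""
    value, pos = parse_term(tokens, pos)
    while pos < len(tokens) and tokens[pos] in ("+", "-"):
        op = tokens[pos]
        rhs, pos = parse_term(tokens, pos + 1)
        value = value + rhs if op == "+" else value - rhs
    return value, pos


def evaluate(text):
    tokens = text.split()
    value, pos = parse_expr(tokens)
    if pos != len(tokens):
        raise ValueError("unexpected token: " + tokens[pos])
    return value
```

Because `parse_expr` hands each operand to `parse_term`, every * and / is resolved before the surrounding + or -, so `evaluate("1 + 2 * 3")` is 7 and `evaluate("1 * 2 + 3")` is 5.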

C language

• Handout

– How does the grammar begin?

– Where are the mathematical expressions?

– Do you agree with the precedence?

– Do you see associativity?

– What else is defined in grammar?

– Where are the terminals?

CS 461 – Oct. 10

• Review PL grammar as needed

• How to tell if a word is in a CFL?

– Convert to PDA and run it.

– CYK algorithm

– Modern parsing techniques

Accepting input

• How can we tell if a given source file (input

stream of tokens) is a valid program?

Language defined by CFG, so …

– Can see if there is some derivation from grammar?

– Can convert CFG to PDA?

• Exponential performance not acceptable. (e.g.

doubling every time we add token)

• Two improvements:

– CYK algorithm, runs in O(n^3)

– Bottom-up parsing, generally linear, but restrictions

on grammar.

CYK algorithm

• In 1965-67, discovered independently by Cocke,

Younger, Kasami.

• Given any CFG and any string, can tell if

grammar generates string.

• The grammar needs to be in Chomsky normal form (CNF) first.

– This ensures that the rules are simple. Rules are of the form X → a or X → YZ

• Consider all substrings of len 1 first. See if

these are in language. Next try all len 2, len 3,

…. up to length n.

continued

• Maintain results in an NxN table. Top right portion not used.

– Example below is for testing a word of length 3.

• Start at bottom; work your way up.

• For length 1, just look for “unit rules” in grammar, e.g. X → a.

1..3     X        X
1..2     2..3     X
1..1     2..2     3..3

continued

• For general case i..j

– Think of all possible ways this string can be broken into 2 pieces.

– Ex. 1..3 = 1..2 + 3..3 or 1..1 + 2..3

– We want to know if both pieces ∈ L. This handles rules of form A → BC.

• Let’s try example from 3⁺7⁺. (in CNF)

1..3     X        X
1..2     2..3     X
1..1     2..2     3..3

337 ∈ 3⁺7⁺ ?

S → AB
A → 3 | AC
B → 7 | BD
C → 3
D → 7

For each len 1 string, which variables generate it?

1..1 is 3. Rules A and C.

2..2 is 3. Rules A and C.

3..3 is 7. Rules B and D.

1..3           X              X
1..2           2..3           X
1..1: A, C     2..2: A, C     3..3: B, D

337 ∈ 3⁺7⁺ ?

S → AB
A → 3 | AC
B → 7 | BD
C → 3
D → 7

Length 2:

1..2 = 1..1 + 2..2 = (A or C)(A or C) = rule A

2..3 = 2..2 + 3..3 = (A or C)(B or D) = rule S

1..3           X              X
1..2: A        2..3: S        X
1..1: A, C     2..2: A, C     3..3: B, D

337 ∈ 3⁺7⁺ ?

S → AB
A → 3 | AC
B → 7 | BD
C → 3
D → 7

Length 3: 2 cases for 1..3:

1..2 + 3..3: (A)(B or D) = S

1..1 + 2..3: (A or C)(S) no!

We only need one case to work.

1..3: S        X              X
1..2: A        2..3: S        X
1..1: A, C     2..2: A, C     3..3: B, D

CYK example #2

Let’s test the word baab

S → AB | BC
A → BA | a
B → CC | b
C → AB | a

Length 1:

‘a’ generated by A, C

‘b’ generated by B

1..4        X              X              X
1..3        2..4           X              X
1..2        2..3           3..4           X
1..1: B     2..2: A, C     3..3: A, C     4..4: B

baab

S → AB | BC
A → BA | a
B → CC | b
C → AB | a

Length 2:

1..2 = 1..1 + 2..2 = (B)(A, C) = S, A

2..3 = 2..2 + 3..3 = (A, C)(A, C) = B

3..4 = 3..3 + 4..4 = (A, C)(B) = S, C

1..4           X              X              X
1..3           2..4           X              X
1..2: S, A     2..3: B        3..4: S, C     X
1..1: B        2..2: A, C     3..3: A, C     4..4: B

baab

S → AB | BC
A → BA | a
B → CC | b
C → AB | a

Length 3: [ each has 2 chances! ]

1..3 = 1..2 + 3..3 = (S, A)(A, C) = Ø

1..3 = 1..1 + 2..3 = (B)(B) = Ø

2..4 = 2..3 + 4..4 = (B)(B) = Ø

2..4 = 2..2 + 3..4 = (A, C)(S, C) = B

1..4           X              X              X
1..3: Ø        2..4: B        X              X
1..2: S, A     2..3: B        3..4: S, C     X
1..1: B        2..2: A, C     3..3: A, C     4..4: B

Finally…

S → AB | BC
A → BA | a
B → CC | b
C → AB | a

Length 4 [has 3 chances!]

1..4 = 1..3 + 4..4 = (Ø)(B) = Ø

1..4 = 1..2 + 3..4 = (S, A)(S, C) = Ø

1..4 = 1..1 + 2..4 = (B)(B) = Ø

Ø means we lose!

baab ∉ L.

However, in general don’t give up if you encounter Ø in the middle of the process.

1..4: Ø        X              X              X
1..3: Ø        2..4: B        X              X
1..2: S, A     2..3: B        3..4: S, C     X
1..1: B        2..2: A, C     3..3: A, C     4..4: B
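The table-filling procedure used in both examples can be written out as a short program. This is a minimal sketch, assuming the grammar is already in CNF and encoded as a dictionary from variable to rule bodies (single-character symbols); the encoding and the function name `cyk` are assumptions made for illustration.

```python
def cyk(word, grammar, start="S"):
    """Return (accepted, table); table[(i, j)] holds the variables that
    derive the substring at positions i..j (1-based, inclusive)."""
    n = len(word)
    if n == 0:
        return False, {}          # the CNF grammars used here have no ε rule
    rules = [(head, body) for head, bodies in grammar.items() for body in bodies]
    table = {}
    for i in range(1, n + 1):     # length 1: rules of the form X -> a
        table[(i, i)] = {h for h, b in rules if b == word[i - 1]}
    for length in range(2, n + 1):
        for i in range(1, n - length + 2):
            j = i + length - 1
            cell = set()
            for k in range(i, j):              # split into i..k and k+1..j
                for h, b in rules:             # rules of the form X -> YZ
                    if (len(b) == 2 and b[0] in table[(i, k)]
                            and b[1] in table[(k + 1, j)]):
                        cell.add(h)
            table[(i, j)] = cell
    return start in table[(1, n)], table
```

The three nested loops over length, start position and split point are where the O(n^3) running time comes from. Running it on 337 and on baab with the grammars above reproduces the tables on the slides.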

CS 461 – Oct. 12

Parsing

• Running a parse machine

– “Goto” (or shift) actions

– Reduce actions: backtrack to earlier state

– Maintain stack of visited states

• Creating a parse machine

– Find the states: sets of items

– Find transitions between states, including reduce.

– If many states, write table instead of drawing

Parsing

• CYK algorithm still too slow

• Better technique: bottom-up parsing

• Basic idea

S → AB

A → aaa

B → bb

At any point in time, think about where we could be while parsing the string “aaabb”.

When we arrive at aaa·bb, we can reduce the “aaa” to A.

When we arrive at Abb·, we can reduce the “bb” to B.

Knowing that we’ve just read AB, we can reduce this to S.

• See handouts for details.

Sets of items

• We’re creating states.

• We start with a grammar. First step is to augment it with the rule S’ → S.

• The first state I0 will contain S’ → ·S

• Important rule: Any time you write · before a variable, you must “expand” that variable. So, we add items from the rules of S to I0.

Example: { 0^n 1^(n+1) }

S → 1 | 0S1

We add new start rule

S’ → S

State 0 has these 3 items:

I0:
S’ → ·S
S → ·1        (expand S)
S → ·0S1      (expand S)

continued

• Next, determine transitions out of state 0.

δ(0, S) = 1
δ(0, 1) = 2
δ(0, 0) = 3

I’ve written destinations along the right side.

State 0 has these 3 items:

I0:
S’ → ·S       1
S → ·1        2
S → ·0S1      3

• Now we’re ready for state 1. Move cursor to right to become S’ → S·

I1:
S’ → S·

continued

• Any time an item ends with ·, this represents a reduce, not a goto.

• Now, we’re ready for state 2. The item S → ·1 moves its cursor to the right: S → 1·

This also becomes a reduce.

I0:
S’ → ·S       1
S → ·1        2
S → ·0S1      3

I1:
S’ → S·       r

I2:
S → 1·        r

continued

• Next is state 3. From S → ·0S1, move cursor. Notice that now the · is in front of a variable, so we need to expand.

• Once we’ve written the items, fill in the transitions. Create new state only if needed.

δ(3, S) = 4 (a new state)
δ(3, 1) = 2 (as before)
δ(3, 0) = 3 (as before)

I0:
S’ → ·S       1
S → ·1        2
S → ·0S1      3

I1:
S’ → S·       r

I2:
S → 1·        r

I3:
S → 0·S1      4
S → ·1        2
S → ·0S1      3

continued

• Next is state 4. From item S → 0·S1, move cursor.

• Determine transition.

δ(4, 1) = 5

Notice we need new state since we’ve never seen “0 S · 1” before.

I0:
S’ → ·S       1
S → ·1        2
S → ·0S1      3

I1:
S’ → S·       r

I2:
S → 1·        r

I3:
S → 0·S1      4
S → ·1        2
S → ·0S1      3

I4:
S → 0S·1      5

Last state!

• Our last state is #5. Since the cursor is at the end of the item, our transition is a reduce.

• Now, we are done finding states and transitions!

• One question remains, concerning the reduce transitions: On what input should we reduce?

I0:
S’ → ·S       1
S → ·1        2
S → ·0S1      3

I1:
S’ → S·       r

I2:
S → 1·        r

I3:
S → 0·S1      4
S → ·1        2
S → ·0S1      3

I4:
S → 0S·1      5

I5:
S → 0S1·      r
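The construction of the item sets can be automated. The sketch below represents an item as a (head, body, cursor position) triple; that representation, the function names, and the symbol order "S10" (chosen so that the state numbers match the slides) are assumptions made for illustration.

```python
def closure(items, grammar):
    """Whenever the cursor sits before a variable, add that variable's rules."""
    items = set(items)
    todo = list(items)
    while todo:
        _, body, dot = todo.pop()
        if dot < len(body) and body[dot] in grammar:
            var = body[dot]
            for rhs in grammar[var]:
                item = (var, rhs, 0)
                if item not in items:
                    items.add(item)
                    todo.append(item)
    return frozenset(items)


def build_machine(grammar, start_item, symbols):
    """Return (states, delta), where delta[(state number, symbol)] is a goto."""
    states = [closure({start_item}, grammar)]
    delta = {}
    for number, state in enumerate(states):   # `states` grows while we loop
        for sym in symbols:
            moved = {(h, b, d + 1) for h, b, d in state
                     if d < len(b) and b[d] == sym}
            if not moved:
                continue
            target = closure(moved, grammar)
            if target not in states:          # create a new state only if needed
                states.append(target)
            delta[(number, sym)] = states.index(target)
    return states, delta


def reduce_items(state):
    """Items whose cursor is at the end: these are reduces, not gotos."""
    return {(h, b) for h, b, d in state if d == len(b)}
```

Usage for the running example: `build_machine({"S'": ["S"], "S": ["1", "0S1"]}, ("S'", "S", 0), "S10")` produces the item sets I0 through I5 and the same transitions as worked out by hand.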

CS 461 – Oct. 17

• Creating parse machine

– Convert grammar into sets of items

– Determine goto and reduce actions

• On what input do we reduce?

– Whatever “follows” the nonterminal we’re reducing to.

• Declaration grammar

0^n 1^(n+1)

• There are 6 states (I0 through I5). When the cursor is at the end of the item, our transition is a reduce.

• Now, we are done finding states and transitions!

• One question remains, concerning the reduce transitions: On what input should we reduce?

I0:
S’ → ·S       1
S → ·1        2
S → ·0S1      3

I1:
S’ → S·       r

I2:
S → 1·        r

I3:
S → 0·S1      4
S → ·1        2
S → ·0S1      3

I4:
S → 0S·1      5

I5:
S → 0S1·      r

When to reduce

If you are at the end of an item such as S → 1·, there is no symbol after the · telling us what input to wait for.

– The next symbol should be whatever “follows” the variable we are reducing. In this case, what follows S. We need to look at the original grammar to find out.

– For example, if you were reducing A, and you saw a rule S → A1B, you would say that 1 follows A.

– Since S is start symbol, $ (end of input) follows S.

• For more info, see parser worksheet.

– New skill: for each grammar variable, what follows?

First( )

To calculate first(A), look at A’s rules.

• If you see A → c…, add c to first(A)

• If you see A → B…, add first(B) to first(A).

Note: don’t put $ in first( ).

Follow( )

What should be included in follow(A) ?

• If A is start symbol, add $.

• If you see Q → …Ac…, add c.

• If you see Q → …AB…, add first(B).

• If you see Q → …A, add follow(Q).

Note: don’t put ε in follow( ).

CS 461 – Oct. 19

• Examples

– Calculator grammar

– Review first( ) and follow( )

– Declaration grammar

• How to handle ε in grammar

– Need to change how we find first( ) and follow( ).

First( )

To calculate first(A), look at A’s rules.

• If you see A → c…, add c to first(A)

• If you see A → B…, add first(B) to first(A).

– If B can yield ε, continue to next symbol in rule until

you reach a symbol that can represent a terminal.

• If A can yield ε, add ε to first(A).

Note: don’t put $ in first( ).

Follow( )

What should be included in follow(A) ?

• If A is start symbol, add $.

• If you see Q → …Ac…, add c.

• If you see Q → …AB…, add first(B).

• If you see Q → …A, add follow(Q).

• If you see Q → …ABC, and B yields ε, add first(C).

• If you see Q → …AB, and B yields ε, add follow(Q).

Note: don’t put ε in follow( ).

Example

Try this grammar:

S → AB
A → ε | 1A2
B → ε | 3B

First(B) = ε, 3
First(A) = ε, 1
First(S) = ε, 1, 3       (note in this case A ⇒ ε)

Follow(S) = $            since S is start symbol
Follow(A) = 2, 3, $      we need first(B); since B ⇒ ε, we need $
Follow(B) = $
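The first( ) and follow( ) rules, including the ε cases, amount to a fixed-point computation: keep applying them until no set changes. The sketch below is one way to code that, with an assumed dictionary encoding of the grammar ("" is the ε rule, every symbol is one character) and made-up function names.

```python
EPS, END = "ε", "$"


def first_of(symbols, first, variables):
    """first( ) of a sequence of grammar symbols."""
    out = set()
    for s in symbols:
        if s not in variables:      # a terminal ends the search
            out.add(s)
            return out
        out |= first[s] - {EPS}
        if EPS not in first[s]:     # this variable cannot vanish
            return out
    out.add(EPS)                    # every symbol in the sequence can yield ε
    return out


def first_follow(grammar, start):
    """Apply the first/follow rules repeatedly until nothing changes."""
    variables = set(grammar)
    first = {v: set() for v in grammar}
    follow = {v: set() for v in grammar}
    follow[start].add(END)          # $ follows the start symbol
    changed = True
    while changed:
        changed = False
        for head, bodies in grammar.items():
            for body in bodies:
                new = first_of(body, first, variables)
                if not new <= first[head]:
                    first[head] |= new
                    changed = True
                for k, sym in enumerate(body):
                    if sym not in variables:
                        continue
                    tail = first_of(body[k + 1:], first, variables)
                    add = tail - {EPS}            # never put ε in follow( )
                    if EPS in tail:               # rest of the rule can vanish
                        add |= follow[head]
                    if not add <= follow[sym]:
                        follow[sym] |= add
                        changed = True
    return first, follow
```

Calling `first_follow({"S": ["AB"], "A": ["", "1A2"], "B": ["", "3B"]}, "S")` reproduces the sets listed above.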

Try this one

Let’s try the language (1*2(3+4)*(56)*)*

Rules                 First     Follow
S’ → S
S → ε | SABC
A → 2 | 1A
B → ε | 3B | 4B
C → ε | 56C

answer

Let’s try the language (1*2(3+4)*(56)*)*

Rules                 First       Follow
S’ → S                ε, 1, 2     $
S → ε | SABC          ε, 1, 2     1, 2, $
A → 2 | 1A            1, 2        3, 4, 5, $, 1, 2
B → ε | 3B | 4B       ε, 3, 4     5, $, 1, 2
C → ε | 56C           ε, 5        $, 1, 2

CS 461 – Oct. 21

Begin chapter 3

• We need a better (more encompassing) model

of computation.

• Ex. { 1^n 2^n 3^n } couldn’t be accepted by PDA.

– How could any machine accept this language?

– Somehow need to check 1s, 2s and 3s at same time.

– We should also be able to handle 1^n 2^n 3^n 4^n , etc.

Turing Machine

• 3rd model of computation

– Alan Turing, 1936

– Use “tape” instead of stack

– Can go left or right over the input!

• Every TM has:

– Set of states, including 1 start and 1 accept state

– Input alphabet

– Tape alphabet, including special “blank” symbol

– Transition function

δ (state, input) = (state, output, direction)

The tape and δ

• Tape is infinite in both directions

– Blanks straddle the input on the tape.

• Begin reading at leftmost input symbol.

• As soon as you enter accept state, halt.

– You don’t have to read all the input.

δ (state, input) = (state, output, direction)

– You may change state.

– You may change the symbol that’s on the tape.