hpc_at_sheffield2013.. - The University of Sheffield High


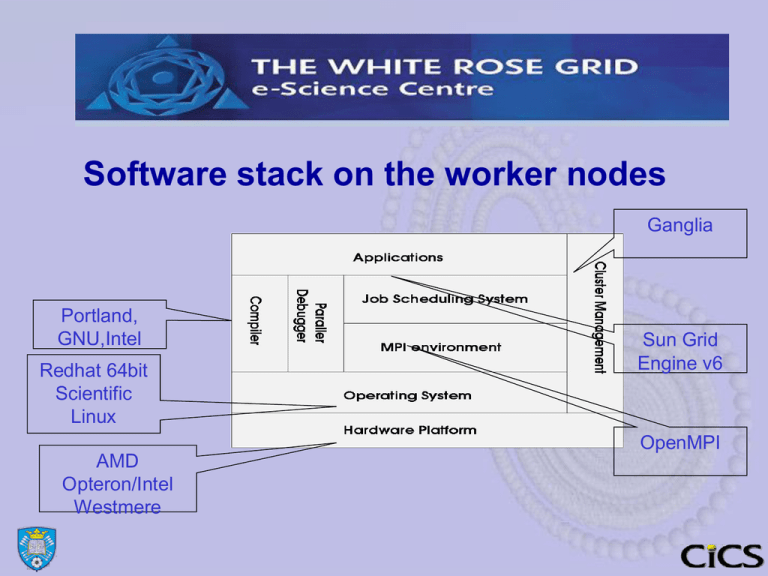

Software stack on the worker nodes

[Figure: software stack on the worker nodes, comprising Ganglia; Portland, GNU and Intel compilers; Redhat 64bit Scientific Linux; AMD Opteron / Intel Westmere hardware; Sun Grid Engine v6; OpenMPI]

Application packages

• The following application packages are available on the worker nodes via the name of the package:
  ▬ maple, xmaple
  ▬ matlab
  ▬ R
  ▬ abaquscae
  ▬ abaqus
  ▬ ansys
  ▬ fluent
  ▬ fidap
  ▬ dyna
  ▬ idl
  ▬ comsol
  ▬ paraview

Keeping up-to-date with application packages

From time to time the application packages get updated. When this happens:
– News articles announce the changes via iceberg news. To read the news, type news on iceberg or check the URL: http://www.wrgrid.group.shef.ac.uk/icebergdocs/news.dat
– Previous versions or new test versions of software are normally accessed via the version number. For example: abaqus69, nagexample23, matlab2011a

Running application packages in batch queues

Iceberg has locally provided commands for running some of the popular applications in batch mode. These are: runfluent, runansys, runmatlab, runabaqus
To find out more, just type the name of the command on its own while on iceberg.

Setting up your software development environment

• Excepting the scratch areas on worker nodes, the view of the filestore is identical on every worker.
• You can set up your software environment for each job by means of the module commands.
• All the available software environments can be listed by using the module avail command.
• Having discovered what software is available on iceberg, you can then select the ones you wish to use with the module add or module load commands.
• You can load as many non-clashing modules as you need by consecutive module add commands.
• You can use the module list command to check the list of currently loaded modules.
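The module workflow described above might look like the following in a login session or at the top of a job script. This is a minimal sketch, not an official iceberg recipe: the run helper and the DRY_RUN variable are hypothetical names introduced here so that the commands are printed rather than executed by default, and mpi/gcc/openmpi is used only as an illustrative module name.

```shell
#!/bin/bash
# Hypothetical helper: print each command; execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = "0" ]; then
        "$@"
    fi
}

# List every available software environment.
run module avail
# Select one environment (module add behaves the same as module load).
run module load mpi/gcc/openmpi
# Confirm which modules are currently loaded.
run module list
```

Setting DRY_RUN=0 on a system where the module command is defined would execute the three commands instead of only printing them.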
Software development environment

• Compilers
  – PGI compilers
  – Intel compilers
  – GNU compilers
• Libraries
  – NAG Fortran libraries (MK22, MK23)
  – NAG C libraries (MK 8)
• Parallel programming related
  – OpenMP
  – MPI (OpenMPI, mpich2, mvapich)

Managing Your Jobs
Sun Grid Engine Overview

SGE is the resource management, job scheduling and batch control system. (Others are available, such as PBS, Torque/Maui, Platform LSF.)
• Starts up interactive jobs on available workers
• Schedules all batch-oriented (i.e. non-interactive) jobs
• Attempts to create a fair-share environment
• Optimizes resource utilization

[Figure: Job scheduling on the cluster. The SGE master node holds the queues, policies, priorities, share/tickets, resources and users/projects, and places waiting jobs (JOB X, JOB Y, JOB Z, JOB O, JOB N, JOB U) into slots on the SGE worker nodes, which are grouped into Queue-A, Queue-B and Queue-C.]

Submitting your job

There are two kinds of SGE command for submitting jobs:
– qsh or qrsh: to start an interactive job
– qsub: to submit a batch job
There is also a list of locally produced commands for submitting some of the popular applications to the batch system. They all make use of the qsub command. These are: runfluent, runansys, runmatlab, runabaqus

Managing Jobs
Monitoring and controlling your jobs

• There are a number of commands for querying and modifying the status of a job that is running or waiting to run. These are:
  – qstat or Qstat (query job status)
  – qdel (delete a job)
  – qmon (a GUI interface for SGE)

Running Jobs
Example: Submitting a serial batch job

Use an editor to create a job script in a file (e.g. example.sh):
    #!/bin/bash
    # Scalar benchmark
    echo 'This code is running on' `hostname`
    date
    ./linpack
Submit the job:
    qsub example.sh

Running Jobs
qsub and qsh options

-l h_rt=hh:mm:ss
    The wall clock time.
    This parameter must be specified; failure to include it will result in the error message: "Error: no suitable queues". Current default is 8 hours.
-l arch=intel*
-l arch=amd*
    Force SGE to select either Intel or AMD architecture nodes. No need to use this parameter unless the code has processor dependency.
-l mem=memory
    Sets the virtual-memory limit, e.g. -l mem=10G (for parallel jobs this is per processor and not total). Current default if not specified is 6 GB.
-l rmem=memory
    Sets the limit of real memory required. Current default is 2 GB. Note: the rmem parameter must always be less than mem.
-help
    Prints a list of options.
-pe ompigige np
-pe openmpi-ib np
-pe openmp np
    Specifies the parallel environment to be used. np is the number of processors required for the parallel job.

Running Jobs
qsub and qsh options (continued)

-N jobname
    By default a job's name is constructed from the job-script filename and the job-id that is allocated to the job by SGE. This option defines the jobname. Make sure it is unique because the job output files are constructed from the jobname.
-o output_file
    Output is directed to a named file. Make sure not to overwrite important files by accident.
-j y
    Join the standard output and standard error output streams (recommended).
-m [bea] -M email-address
    Sends emails about the progress of the job to the specified email address. If used, both -m and -M must be specified. Select any or all of b, e and a to request an email when the job begins, ends or aborts.
-P project_name
    Runs a job using the specified project's allocation of resources.
-S shell
    Use the specified shell to interpret the script rather than the default bash shell. Use with care. A better option is to specify the shell in the first line of the job script, e.g. #!/bin/bash
-V
    Export all environment variables currently in effect to the job.
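Several of the options above can be embedded in the job script itself on lines beginning with #$. The following is a sketch under stated assumptions rather than a recommended template: the file name myjob.sh, the job name linpack_test and the one-hour time limit are illustrative choices, and ./linpack is borrowed from the serial batch example. The snippet only writes the script and checks its shell syntax; it does not submit anything.

```shell
#!/bin/bash
# Write an illustrative SGE job script (file name and job name are assumptions).
cat > myjob.sh <<'EOF'
#!/bin/bash
# Wall clock limit (hh:mm:ss)
#$ -l h_rt=01:00:00
# Virtual memory limit
#$ -l mem=6G
# Real memory request, which must be less than mem
#$ -l rmem=2G
# Job name, used to build the output file name
#$ -N linpack_test
# Join standard output and standard error
#$ -j y
echo "This code is running on $(hostname)"
date
./linpack
EOF

# Check the shell syntax without running the script.
bash -n myjob.sh && echo "syntax ok: submit with 'qsub myjob.sh'"
```

Keeping the options in the script means the same resource requests are applied every time the job is submitted.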
Running Jobs
Batch job example

qsub example:
    qsub -l h_rt=10:00:00 -o myoutputfile -j y myjob
OR alternatively, the first few lines of the submit script myjob contain:
    #!/bin/bash
    #$ -l h_rt=10:00:00
    #$ -o myoutputfile
    #$ -j y
and you simply type:
    qsub myjob

Running Jobs
Interactive jobs: qsh, qrsh

• These two commands find a free worker node and start an interactive session for you on that worker node.
• This ensures good response as the worker node will be dedicated to your job.
• The only difference between qsh and qrsh is that qsh starts a session in a new command window whereas qrsh uses the existing window. Therefore, if your terminal connection does not support graphics (i.e. X Windows), then qrsh will continue to work whereas qsh will fail to start.

Running Jobs
A note on interactive jobs

• Software that requires intensive computing should be run on the worker nodes and not the head node.
• You should run compute-intensive interactive jobs on the worker nodes by using the qsh or qrsh command.
• The maximum (and also default) time limit for interactive jobs is 8 hours.

Managing Jobs
Monitoring your jobs by qstat or Qstat

Most of the time you will be interested only in the progress of your own jobs through the system.
• The Qstat command gives a list of all your jobs (interactive and batch) that are known to the job scheduler. As you are in direct control of your interactive jobs, this command is useful mainly to find out about your batch jobs (i.e. qsub'ed jobs).
• The qstat command (note the lower-case q) gives a list of all the executing and waiting jobs by everyone.
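A job listing of this kind can be summarised with standard text tools. The sketch below counts jobs per state; count_states is a hypothetical helper, and it assumes that job rows begin with a numeric job-ID and carry the state in the fifth whitespace-separated column. The sample rows stand in for real scheduler output, and their column alignment is itself an assumption.

```shell
#!/bin/bash
# Hypothetical helper: count jobs per state, assuming the state is column 5
# and that job rows start with a numeric job-ID.
count_states() {
    awk '$1 ~ /^[0-9]+$/ { n[$5]++ } END { for (s in n) print s, n[s] }' | sort
}

# Sample rows standing in for real scheduler output (layout is an assumption).
count_states <<'EOF'
job-ID  prior   name       user    state submit/start at     queue                          slots
206951 0.51000 INTERACTIV bo1mrl  r     07/05/2005 09:30:20 bigmem.q@comp58.iceberg.shef.a 1
206933 0.51000 do_batch4  pc1mdh  r     07/04/2005 16:28:20 long.q@comp04.iceberg.shef.ac. 1
206600 0.61000 mrbayes.sh bo1nsh  qw    07/02/2005 11:22:19 parallel.q@comp51.iceberg.shef 24
EOF
```

On the cluster the same function could be fed from the scheduler directly, for example Qstat | count_states.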
• Having obtained the job-id of your job by using Qstat, you can get further information about a particular job by typing:
    qstat -f -j job_id
  You may find that this command produces far more information than you need; in that case the following command can be used to find out about memory usage, for example:
    qstat -f -j job_id | grep usage

Managing Jobs
qstat example output

State can be: r = running, qw = waiting in the queue, E = error state, t = transferring (just before starting to run), h = hold (waiting for other jobs to finish).

job-ID  prior    name        user      state  submit/start at      queue                           slots  ja-task-ID
------------------------------------------------------------------------------------------------------------------
206951  0.51000  INTERACTIV  bo1mrl    r      07/05/2005 09:30:20  bigmem.q@comp58.iceberg.shef.a  1
206933  0.51000  do_batch4   pc1mdh    r      07/04/2005 16:28:20  long.q@comp04.iceberg.shef.ac.  1
206700  0.51000  l100-100.m  mb1nam    r      07/04/2005 13:30:14  long.q@comp05.iceberg.shef.ac.  1
206698  0.51000  l50-100.ma  mb1nam    r      07/04/2005 13:29:44  long.q@comp12.iceberg.shef.ac.  1
206697  0.51000  l24-200.ma  mb1nam    r      07/04/2005 13:29:29  long.q@comp17.iceberg.shef.ac.  1
206943  0.51000  do_batch1   pc1mdh    r      07/04/2005 17:49:45  long.q@comp20.iceberg.shef.ac.  1
206701  0.51000  l100-200.m  mb1nam    r      07/04/2005 13:30:44  long.q@comp22.iceberg.shef.ac.  1
206705  0.51000  l100-100sp  mb1nam    r      07/04/2005 13:42:07  long.q@comp28.iceberg.shef.ac.  1
206699  0.51000  l50-200.ma  mb1nam    r      07/04/2005 13:29:59  long.q@comp30.iceberg.shef.ac.  1
206632  0.56764  job_optim2  mep02wsw  r      07/03/2005 22:55:30  parallel.q@comp43.iceberg.shef  18
206600  0.61000  mrbayes.sh  bo1nsh    qw     07/02/2005 11:22:19  parallel.q@comp51.iceberg.shef  24
206911  0.51918  fluent      cpp02cg   qw     07/04/2005 14:19:06  parallel.q@comp52.iceberg.shef  4

Managing Jobs
Deleting/cancelling jobs

The qdel command can be used to cancel running jobs or to remove waiting jobs from the queue.
• To cancel an individual job:
    qdel job_id
  Example: qdel 123456
• To cancel a list of jobs:
    qdel job_id1, job_id2, and so on
• To cancel all jobs belonging to a given username:
    qdel -u username

Managing Jobs
Job output files

• When a job is queued it is allocated a job-id (an integer).
• Once the job starts to run, normal output is sent to the output (.o) file and the error output is sent to the error (.e) file.
  ▬ The default output file name is: <script>.o<jobid>
  ▬ The default error output file name is: <script>.e<jobid>
• If the -N parameter to qsub is specified, the respective output files become <name>.o<jobid> and <name>.e<jobid>.
• The -o or -e parameters can be used to define the output files to use.
• The -j y parameter forces the job to send both error and normal output into the same (output) file (RECOMMENDED).

Monitoring the job output files

• The following is an example of submitting an SGE job and checking the output produced:
    qsub myjob.sh
    job <123456> submitted
    qstat -f -j 123456        (is the job running?)
  When the job starts to run, type:
    tail -f myjob.sh.o123456

Problem Session

• Problem 10
  – Only up to test 5

Managing Jobs
Reasons for job failures

– SGE cannot find the binary file specified in the job script.
– You ran out of file storage. It is possible to exceed your filestore allocation limits during a job that is producing large output files. Use the quota command to check this.
– Required input files are missing from the startup directory.
– An environment variable is not set correctly (LM_LICENSE_FILE etc.).
– Hardware failure (e.g. mpi ch_p4 or ch_gm errors).

Finding out the memory requirements of a job

Virtual Memory Limits:
▬ The default virtual memory limit for each job is 6 GBytes.
▬ Jobs will be killed if the virtual memory used by the job exceeds the amount requested via the -l mem= parameter.
Real Memory Limits:
▬ Default real memory allocation is 2 GBytes.
▬ Real memory resource can be requested by using -l rmem=
▬ Jobs exceeding the real memory allocation will not be deleted but will run with reduced efficiency, and the user will be emailed about the memory deficiency.
▬ When you get warnings of that kind, increase the real memory allocation for your job by using the -l rmem= parameter.
▬ rmem must always be less than mem.

Determining the virtual memory requirements for a job:
▬ qstat -f -j jobid | grep mem
▬ The reported figures will indicate
    - the currently used memory (vmem)
    - the maximum memory needed since startup (maxvmem)
    - cumulative memory_usage*seconds (mem)
▬ When you run the job next, you need to use the reported value of vmem to specify the memory requirement.

Managing Jobs
Running arrays of jobs

• Add the -t parameter to the qsub command or script file (with #$ at the beginning of the line)
  – Example: -t 1-10
• This will create 10 tasks from one job.
• Each task will have its environment variable $SGE_TASK_ID set to a single unique value ranging from 1 to 10.
• There is no guarantee that task number m will start before task number n, where m<n.

Managing Jobs
Running cpu-parallel jobs

• The parallel environment needed for a job can be specified by the -pe <env> nn parameter of the qsub command, where <env> is:
  ▬ openmp: These are shared memory OpenMP jobs and therefore must run on a single node using its multiple processors.
  ▬ ompigige: OpenMPI library, Gigabit Ethernet. These are MPI jobs running on multiple hosts using the ethernet fabric (1 Gbit/sec).
  ▬ openmpi-ib: OpenMPI library, Infiniband. These are MPI jobs running on multiple hosts using the Infiniband connection (32 GBits/sec).
  ▬ mvapich2-ib: Mvapich library, Infiniband. As above but using the MVAPICH MPI library.
• Compilers that support MPI:
  ▬ PGI
  ▬ Intel
  ▬ GNU

Setting up the parallel environment in the job script
• Having selected the parallel environment to use via the qsub -pe parameter, the job script can define a corresponding environment/compiler combination to be used for MPI tasks.
• MODULE commands help set up the correct compiler and MPI transport environment combination needed. The currently available MPI modules are:
    mpi/pgi/openmpi      mpi/pgi/mvapich2
    mpi/intel/openmpi    mpi/intel/mvapich2
    mpi/gcc/openmpi      mpi/gcc/mvapich2
• For GPU programming with CUDA: libs/cuda
• Example: module load mpi/pgi/openmpi

Summary of module load parameters for parallel MPI environments

                   TRANSPORT (qsub parameter)
COMPILER to use    -pe openmpi-ib, -pe ompigige    -pe mvapich2-ib
-------------------------------------------------------------------
PGI                mpi/pgi/openmpi                 mpi/pgi/mvapich2
Intel              mpi/intel/openmpi               mpi/intel/mvapich2
GNU                mpi/gcc/openmpi                 mpi/gcc/mvapich2

Running GPU parallel jobs

GPU parallel processing is supported on 8 Nvidia Tesla Fermi M2070s GPU units attached to iceberg.
• In order to use the GPU hardware you will need to join the GPU project by emailing iceberg-admins@sheffield.ac.uk
• You can then submit jobs that use the GPU facilities by using the following three parameters to the qsub command:
    -P gpu
    -l arch=intel*
    -l gpu=nn      where 1 <= nn <= 8 is the number of gpu-modules to be used by the job.
  P stands for the project that you belong to. See next slide.

Special projects and resource management

• The bulk of the iceberg cluster is shared equally amongst the users, i.e. each user has the same privileges for running jobs as any other user.
• However, there are extra nodes connected to the iceberg cluster that are owned by individual research groups.
• It is possible for new research project groups to purchase their own compute nodes/clusters and make them part of the iceberg cluster.
• We define such groups as special project groups in SGE parlance and give priority to their jobs on the machines that they have purchased.
• This scheme allows such research groups to use iceberg as ordinary users (with equal share to other users) or to use their privileges (via the -P parameter) to run jobs on their own part of the cluster without having to compete with other users.
• This way, everybody benefits, as the machines currently unused by a project group can be utilised to run normal users' short jobs.

Job queues on iceberg

Queue name    Time Limit (Hours)    System specification
short.q       8
long.q        168                   Long running serial jobs
parallel.q    168                   Jobs requiring multiple nodes
openmp.q      168                   Shared memory jobs using openmp
gpu.q         168                   Jobs using the gpu units

Beyond Iceberg

• Iceberg: OK for many compute problems
• N8 tier 2 facility: for more demanding compute problems
• Hector/Archer: larger facility for grand challenge problems (peer review process to access)

N8 HPC – Polaris – Compute Nodes

• 316 nodes (5,056 cores) with 4 GByte of RAM per core (each node has 8 DDR3 DIMMs each of 8 GByte). These are known as "thin nodes".
• 16 nodes (256 cores) with 16 GByte of RAM per core (each node has 16 DDR3 DIMMs each of 16 GByte). These are known as "fat nodes".
• Each node comprises two of the Intel 2.6 GHz Sandy Bridge E5-2670 processors.
• Each processor has 8 cores, giving a total core count of 5,312 cores.
• Each processor has a 115 Watt TDP and the Sandy Bridge architecture supports "turbo" mode.
• Each node has a single 500 GB SATA HDD.
• There are 4 nodes in each Steelhead chassis. 79 chassis have 4 GB/core and 4 more have 16 GB/core.

N8 HPC – Polaris – Storage and Connectivity

• Connectivity
  ▬ The compute nodes are fully connected by Mellanox QDR InfiniBand high performance interconnects.
• File System & Storage
  ▬ 174 TBytes Lustre v2 parallel file system with 2 OSSes. This is mounted as /nobackup and has no quota control. It is not backed up and files are automatically expired after 90 days.
  ▬ 109 TBytes NFS filesystem where user $HOME is mounted. This is backed up.
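The Polaris compute-node figures quoted above are mutually consistent, which a few lines of shell arithmetic can confirm. This is only a cross-check of the numbers on the slides; the variable names are illustrative.

```shell
#!/bin/bash
# Figures quoted for Polaris; variable names are illustrative.
cores_per_node=$((2 * 8))                       # two 8-core E5-2670 processors
thin_nodes=$((79 * 4))                          # 79 chassis of 4 nodes each
fat_nodes=$((4 * 4))                            # 4 chassis of 4 nodes each
thin_cores=$((thin_nodes * cores_per_node))
fat_cores=$((fat_nodes * cores_per_node))
thin_gb_per_core=$((8 * 8 / cores_per_node))    # 8 DIMMs of 8 GByte per node
fat_gb_per_core=$((16 * 16 / cores_per_node))   # 16 DIMMs of 16 GByte per node

echo "thin: $thin_nodes nodes, $thin_cores cores, $thin_gb_per_core GB/core"
echo "fat:  $fat_nodes nodes, $fat_cores cores, $fat_gb_per_core GB/core"
echo "total cores: $((thin_cores + fat_cores))"
```

The results match the slide: 316 thin nodes with 5,056 cores at 4 GB/core, 16 fat nodes with 256 cores at 16 GB/core, and 5,312 cores in total.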
Getting Access to N8

• Note: N8 is for users whose research problems require greater resource than that available through Iceberg.
• Registration is through projects:
  ▬ Ask your supervisor or project leader to register their project with the N8.
  ▬ Users obtain a project code from their supervisor or project leader.
  ▬ Complete the online form and provide an outline of work explaining why N8 resources are required.

Steps to Apply for an N8 User Account

• Request an N8 project code from your supervisor/project leader.
• Complete the user account online application form at
  ▬ http://n8hpc.org.uk/research/gettingstarted/
• Note: if your project leader does not have a project code, the project leader should apply for a project account at
  ▬ http://n8hpc.org.uk/research/gettingstarted/
• Link to N8 information is at
  ▬ http://www.shef.ac.uk/wrgrid/n8

Getting help

• Web site
  – http://www.shef.ac.uk/wrgrid/
• Documentation
  – http://www.shef.ac.uk/wrgrid/using
• Training (also uses the learning management system)
  – http://www.shef.ac.uk/wrgrid/training
• Uspace
  – http://www.shef.ac.uk/wrgrid/uspace
• Contacts
  – http://www.shef.ac.uk/wrgrid/contacts.html