Components of a Time Series

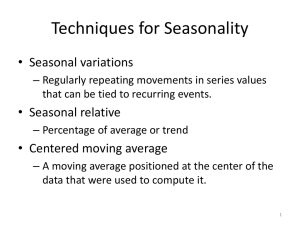

ECON 251 Research Methods

11. Time Series Analysis and Forecasting

Introduction

Any variable that is measured over time in sequential order is called a time series. We analyze time series to detect patterns. The patterns help in forecasting future values of the time series.

[Figure: a time series exhibiting a downward trend pattern, extended to a future expected value.]

Components of a Time Series

A time series can consist of four components:
• Long-term trend (T)
• Cyclical effect (C)
• Seasonal effect (S)
• Random variation (R)

A trend is a long-term, relatively smooth pattern or direction that usually persists for more than one year.

A cycle is a wavelike pattern describing long-term behavior (lasting more than one year). Cycles are seldom regular, and often appear in combination with other components.

The seasonal component of the time series exhibits short-term (less than one year), calendar-repetitive behavior.

Random variation comprises the irregular, unpredictable changes in the time series. It tends to hide the other (more predictable) components. We try to remove random variation and thereby identify the other components.
Time-series models

There are two commonly used time series models:
• The additive model: $y_t = T_t + C_t + S_t + R_t$
• The multiplicative model: $y_t = T_t \times C_t \times S_t \times R_t$

Smoothing Techniques

To produce a better forecast, we need to determine which components are present in a time series. To identify the components present in the time series, we first need to remove the random variation. This can be done with smoothing techniques:
• moving averages
• exponential smoothing

Moving Averages

A k-period moving average for time period t is the arithmetic average of the time series values where period t is the center observation.

Example: A 3-period moving average for period t is calculated by $(y_{t+1} + y_t + y_{t-1})/3$.

Example: A 5-period moving average for period t is calculated by $(y_{t+2} + y_{t+1} + y_t + y_{t-1} + y_{t-2})/5$.

Example: Gasoline Sales

To forecast future gasoline sales, quarterly sales for the last four years were recorded. Calculate the three-quarter and five-quarter moving averages.

Solution (solving by hand)

| Period | Year | Quarter | Gas Sales | 3-period moving avg. | 5-period moving avg. |
|---|---|---|---|---|---|
| 1 | 1 | 1 | 39 | * | * |
| 2 | 1 | 2 | 37 | 45.67 | * |
| 3 | 1 | 3 | 61 | 52.00 | 42.60 |
| 4 | 1 | 4 | 58 | 45.67 | 46.00 |
| 5 | 2 | 1 | 18 | 44.00 | 55.00 |
| 6 | 2 | 2 | 56 | 52.00 | 48.20 |
| 7 | 2 | 3 | 82 | 55.00 | 44.80 |
| 8 | 2 | 4 | 27 | 50.00 | 55.00 |
| 9 | 3 | 1 | 41 | 45.67 | 53.60 |
| 10 | 3 | 2 | 69 | 53.00 | 50.40 |
| 11 | 3 | 3 | 49 | 61.33 | 55.80 |
| 12 | 3 | 4 | 66 | 56.33 | 56.00 |
| 13 | 4 | 1 | 54 | 54.00 | 60.20 |
| 14 | 4 | 2 | 42 | 62.00 | 63.60 |
| 15 | 4 | 3 | 90 | 66.00 | * |
| 16 | 4 | 4 | 66 | * | * |

[Figure: Moving Average chart of actual sales and the 3-period moving average, periods 1 to 16.]

Notice how the averaging process removes some of the random variation. There is some trend component present, as well as seasonality.

The 5-period moving average removes more variation than the 3-period moving average. Too much smoothing may eliminate patterns of interest. Here, the seasonality component is removed when using the 5-period moving average.
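The hand calculation for the gasoline example can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `centered_moving_average` is an assumption (it does not come from the slides), and it handles odd k only, where period t is the center observation.

```python
def centered_moving_average(values, k):
    """k-period moving average centered on each period (odd k only).

    Periods without a full window on both sides get None,
    which corresponds to the '*' entries in the hand-solved table.
    """
    if k % 2 == 0:
        raise ValueError("this sketch only handles odd k")
    half = k // 2
    result = []
    for t in range(len(values)):
        if t < half or t + half >= len(values):
            result.append(None)  # not enough neighbours on one side
        else:
            window = values[t - half : t + half + 1]
            result.append(sum(window) / k)
    return result


# Quarterly gasoline sales from the example (periods 1 to 16).
gas_sales = [39, 37, 61, 58, 18, 56, 82, 27, 41, 69, 49, 66, 54, 42, 90, 66]

ma3 = centered_moving_average(gas_sales, 3)
ma5 = centered_moving_average(gas_sales, 5)

for period, (y, m3, m5) in enumerate(zip(gas_sales, ma3, ma5), start=1):
    m3_text = "*" if m3 is None else f"{m3:.2f}"
    m5_text = "*" if m5 is None else f"{m5:.2f}"
    print(period, y, m3_text, m5_text)
```

A larger k averages over more neighbours, which is why the 5-period column varies less than the 3-period column.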
[Figure: 3-period and 5-period moving averages, periods 1 to 16.]

Too little smoothing leaves much of the variation, which disguises the real patterns.

Example – Armani’s Pizza Sales

Below are the daily sales figures for Armani’s pizza. Compute a 3-day moving average. (Armani TS.xls)

| | Monday | Tuesday | Wednesday | Thursday | Friday | Saturday | Sunday |
|---|---|---|---|---|---|---|---|
| Week 1 | 35 | 42 | 56 | 46 | 67 | 51 | 39 |
| Week 2 | 38 | 46 | 61 | 52 | 73 | 58 | 42 |

What is the moving average associated with Monday of week 2?

Centered moving average

With an even number of observations included in the moving average, the average is placed between the two periods in the middle. To place the moving average in an actual time period, we need to center it. Two consecutive moving averages are centered by taking their average and placing it in the middle between them.

Example – Armani’s Pizza Sales

Calculate the 4-period moving average and center it, for the data given below:

| Period | Time series | Moving avg. | Centered mov. avg. |
|---|---|---|---|
| 1 | 15 | | |
| 2 | 27 | | |
| (2.5) | | 19.0 | |
| 3 | 20 | | 20.25 |
| (3.5) | | 21.5 | |
| 4 | 14 | | 19.50 |
| (4.5) | | 17.5 | |
| 5 | 25 | | |
| 6 | 11 | | |

Example – Armani’s Pizza Sales

Armani’s pizza is back. Compute a 2-day centered moving average.

| | Monday | Tuesday | Wednesday | Thursday | Friday | Saturday | Sunday |
|---|---|---|---|---|---|---|---|
| Week 1 | 35 | 42 | 56 | 46 | 67 | 51 | 39 |

What is the centered moving average associated with Friday of week 1?

Trend Analysis

The trend component of a time series can be linear or non-linear. It is easy to isolate the trend component by using linear regression.
• For a linear trend, use the model $y = b_0 + b_1 t + e$.
• For a non-linear trend with one (major) change in slope, use the quadratic model $y = b_0 + b_1 t + b_2 t^2 + e$.

Example – Pharmaceutical Sales (Identify trend)

Annual sales for a pharmaceutical company are believed to change linearly over time. Based on the last 10 years of sales records, measure the trend component. Start by renaming the years 1, 2, 3, etc.

| Year | 1990 | 1991 | 1992 | 1993 | 1994 | 1995 | 1996 | 1997 | 1998 | 1999 |
|---|---|---|---|---|---|---|---|---|---|---|
| Sales | 18.0 | 19.4 | 18.0 | 19.9 | 19.3 | 21.1 | 23.5 | 23.2 | 20.4 | 24.4 |

Solution

Using Excel we have:

| Regression Statistics | |
|---|---|
| Multiple R | 0.829573 |
| R Square | 0.688192 |
| Adj. R Sq | 0.649216 |
| Std Error | 1.351968 |
| Observations | 10 |

ANOVA

| | df | SS | MS | F | Sig. F |
|---|---|---|---|---|---|
| Regression | 1 | 32.27345 | 32.27345 | 17.65682 | 0.002988 |
| Residual | 8 | 14.62255 | 1.827818 | | |
| Total | 9 | 46.896 | | | |

| | Coeffs. | S.E. | t Stat | P-value |
|---|---|---|---|---|
| Intercept | 17.28 | 0.92357 | 18.71 | 6.88E-08 |
| t | 0.625455 | 0.148847 | 4.202002 | 0.002988 |

The estimated trend line is $\hat{y} = 17.28 + .6254t$.

Forecast for period 11: $\hat{y} = 17.28 + .6254(11) \approx 24.16$

[Figure: t Line Fit Plot of sales against t.]

Example – Cigarette Consumption

Determine the long-term trend of the number of cigarettes smoked by Americans 18 years and older.

Data (Cigarettes.xls)

| Year | Per Capita Consumption |
|---|---|
| 1955 | 3675 |
| 1956 | 3718 |
| 1957 | 3762 |
| 1958 | 3892 |
| 1959 | 4066 |
| 1960 | 4197 |
| 1961 | 4284 |
| . | . |
| . | . |

[Figure: per capita consumption (0 to 5000) by year (1950 to 2000).]

Solution
• Cigarette consumption increased between 1955 and 1963, but decreased thereafter.
• A quadratic model seems to fit this pattern.

| Regression Statistics | |
|---|---|
| Multiple R | 0.9821756 |
| R Square | 0.9646688 |
| Adj. R Sq | 0.962759 |
| Std Error | 97.98621 |
| Observations | 40 |

ANOVA

| | df | SS | MS | F | Sig. F |
|---|---|---|---|---|---|
| Regression | 2 | 9699558.4 | 4849779.2 | 505.11707 | 1.38332E-27 |
| Residual | 37 | 355248 | 9601.2974 | | |
| Total | 39 | 10054806 | | | |

| | Coeffs | S.E. | t Stat | P-value |
|---|---|---|---|---|
| Intercept | 3730.6888 | 48.903765 | 76.28633 | 2.67E-42 |
| t | 71.379694 | 5.5009971 | 12.975774 | 2.429E-15 |
| t-sq | -2.558442 | 0.130116 | -19.66278 | 3.518E-21 |

The quadratic model fits the data very well: $\hat{y} = 3{,}730.689 + 71.380t - 2.558t^2$

Example

Forecast the trend components for the previous two examples for periods 12 and 41, respectively.
• $\hat{y} = 17.28 + .6254t$: find $\hat{y}(t = 12)$ and $\hat{y}(t = 41)$.
• $\hat{y} = 3{,}730.689 + 71.380t - 2.558t^2$: find $\hat{y}(t = 12)$ and $\hat{y}(t = 41)$.

Measuring the Cyclical Effects

Although often unpredictable, cycles need to be isolated. To identify cyclical variation we use the percentage of trend.
• Determine the trend line (by regression).
• Compute the trend value $\hat{y}_t$ for each period t.
• Calculate the percentage of trend by $\frac{y_t}{\hat{y}_t} \times 100$.

Example – Energy Demand

Does the demand for energy in the US exhibit cyclic behavior over time? Assume a linear trend and calculate the percentage of trend.

Data (Energy Demand.xls)
• The collected data included annual energy consumption from 1970 through 1993.

Find the values for trend and percentage of trend for years 1975 and 1976.

Now consider the multiplicative model (assuming no seasonal effects):

$y_t = T_t \times C_t \times R_t$, so $\frac{y_t}{\hat{y}_t} = \frac{T_t \times C_t \times R_t}{T_t} = C_t \times R_t$

The regression line represents trend. After dividing by the trend, no trend is observed, but cyclicality and randomness still exist.

[Figure: Period Line Fit Plot of consumption against period, with trend line $\hat{y} = 69.3 + .502t$.]

| Consumption | Predicted consumption | % of trend |
|---|---|---|
| 66.4 | 69.8283 | 95.0903 |
| 69.7 | 70.3299 | 99.1044 |
| 72.2 | 70.8314 | 101.9322 |
| 74.3 | 71.3329 | 104.1595 |
| 72.5 | 71.8344 | 100.9265 |
| . | . | . |
| . | . | . |

For the first row, the percentage of trend is $(66.4/69.828) \times 100 \approx 95.09$.
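The two steps above (fit a trend line, then express each observation as a percentage of its trend value) can be sketched in plain Python. This is a minimal illustration rather than the Excel procedure used in the slides: the helper names `fit_linear_trend` and `percentage_of_trend` are assumptions, the fit is ordinary least squares on t = 1, 2, 3, ..., and the energy figures are only the five rows shown in the table.

```python
def fit_linear_trend(y):
    """Least-squares fit of y = b0 + b1*t for t = 1, 2, ..., len(y)."""
    n = len(y)
    t_mean = (n + 1) / 2
    y_mean = sum(y) / n
    s_ty = sum((t - t_mean) * (yi - y_mean) for t, yi in enumerate(y, start=1))
    s_tt = sum((t - t_mean) ** 2 for t in range(1, n + 1))
    b1 = s_ty / s_tt
    b0 = y_mean - b1 * t_mean
    return b0, b1


def percentage_of_trend(actual, trend):
    """Percentage of trend: (y_t / yhat_t) * 100 for each period."""
    return [100 * y / y_hat for y, y_hat in zip(actual, trend)]


# Pharmaceutical sales, 1990-1999, with years renamed t = 1..10.
sales = [18.0, 19.4, 18.0, 19.9, 19.3, 21.1, 23.5, 23.2, 20.4, 24.4]
b0, b1 = fit_linear_trend(sales)
print(f"trend line: y_hat = {b0:.2f} + {b1:.4f} t")
print(f"forecast for t = 11: {b0 + b1 * 11:.2f}")

# First five rows of the energy demand table (actual and predicted values).
consumption = [66.4, 69.7, 72.2, 74.3, 72.5]
predicted = [69.8283, 70.3299, 70.8314, 71.3329, 71.8344]
pct = percentage_of_trend(consumption, predicted)
print([round(p, 2) for p in pct])
```

Values below 100 mark periods where actual consumption fell short of the trend; values above 100 mark periods where it exceeded the trend.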
[Figure: percentage of trend by period, periods 1 to 23, fluctuating between about 85 and 110.]

When groups of the percentage of trend alternate around 100%, cyclical effects are present.

Measuring the Seasonal effects

Seasonal variation may occur within a year or even within a shorter time interval. To measure the seasonal effects we can use two common methods:
1. constructing seasonal indexes
2. using indicator variables

We will do each in turn.

Example – Hotel Occupancy

Calculate the quarterly seasonal indexes for the hotel occupancy rate in order to measure seasonal variation.

Data (Hotel Occupancy.xls)

| Year | Quarter 1 | Quarter 2 | Quarter 3 | Quarter 4 |
|---|---|---|---|---|
| 1991 | 0.561 | 0.702 | 0.800 | 0.568 |
| 1992 | 0.575 | 0.738 | 0.868 | 0.605 |
| 1993 | 0.594 | 0.738 | 0.729 | 0.600 |
| 1994 | 0.622 | 0.708 | 0.806 | 0.632 |
| 1995 | 0.665 | 0.835 | 0.873 | 0.670 |

Perform regression analysis for the model $y = b_0 + b_1 t + e$, where t represents chronological time and y represents the occupancy rate.

| Time (t) | Rate |
|---|---|
| 1 | 0.561 |
| 2 | 0.702 |
| 3 | 0.800 |
| 4 | 0.568 |
| 5 | 0.575 |
| 6 | 0.738 |
| 7 | 0.868 |
| 8 | 0.605 |
| . | . |
| . | . |

$\hat{y} = .639368 + .005246t$

[Figure: occupancy rate against t (0 to 20) with the fitted regression line.]

The regression line represents trend.

Now let’s consider the multiplicative model and detrend the data (assuming no cyclical effects):

$y_t = T_t \times S_t \times R_t$, so $\frac{y_t}{\hat{y}_t} = \frac{T_t \times S_t \times R_t}{T_t} = S_t \times R_t$

In the ratio Rate/Predicted rate, no trend is observed, but seasonality and randomness still exist.
[Figure: Rate/Predicted rate by period, periods 1 to 19.]

Example – Hotel Occupancy

Rate/Predicted rate:

| Year | Quarter 1 | Quarter 2 | Quarter 3 | Quarter 4 |
|---|---|---|---|---|
| 1991 | 0.870 | 1.080 | 1.221 | 0.860 |
| 1992 | 0.864 | 1.100 | 1.284 | 0.888 |
| 1993 | 0.865 | 1.067 | 1.046 | 0.854 |
| 1994 | 0.879 | 0.993 | 1.122 | 0.874 |
| 1995 | 0.913 | 1.138 | 1.181 | 0.900 |

To remove most of the random variation but leave the seasonal effects, average the terms $S_t R_t$ for each season.
• Average ratio for quarter 1: (.870 + .864 + .865 + .879 + .913)/5 = .878
• Average ratio for quarter 2: (1.080 + 1.100 + 1.067 + .993 + 1.138)/5 = 1.076
• Average ratio for quarter 3: (1.221 + 1.284 + 1.046 + 1.122 + 1.181)/5 = 1.171
• Average ratio for quarter 4: (.860 + .888 + .854 + .874 + .900)/5 = .875

Interpreting the results
• The seasonal indexes tell us the ratio between the time series value in a certain season and the overall seasonal average.
• In our problem, with the annual average occupancy at 100%:

| Quarter | Seasonal index | Interpretation |
|---|---|---|
| Quarter 1 | 87.8% | 12.2% below the annual average |
| Quarter 2 | 107.6% | 7.6% above the annual average |
| Quarter 3 | 117.1% | 17.1% above the annual average |
| Quarter 4 | 87.5% | 12.5% below the annual average |

Creating Seasonal Indexes

Normalizing the ratios:
• The sum of all the ratios must be 4, so that the average ratio per season is equal to 1.
• If the sum of all the ratios is not 4, we need to normalize (adjust) them proportionately. Suppose the sum of ratios equaled 4.1. Then each ratio would be multiplied by 4/4.1.

$\text{Seasonal index} = \frac{(\text{Seasonal averaged ratio}) \times (\text{number of seasons})}{\text{Sum of averaged ratios}}$

In our problem the sum of all the averaged ratios is equal to 4: .878 + 1.076 + 1.171 + .875 = 4.0. No normalization is needed. These ratios become the seasonal indexes.

Example

Using a different example on quarterly GDP, assume that the averaged ratios were:
• Quarter 1: .97
• Quarter 2: 1.03
• Quarter 3: .87
• Quarter 4: 1.00

Determine the seasonal indexes.
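The seasonal-index procedure for the hotel data (fit the trend, divide each observation by its trend value, average the ratios by quarter, then normalize) can be sketched as follows. The helper names `fit_linear_trend` and `seasonal_indexes` are assumptions made for this illustration, and the sketch assumes the series starts in season 1 and that the trend is linear, as in the example.

```python
def fit_linear_trend(y):
    """Least-squares fit of y = b0 + b1*t for t = 1, 2, ..., len(y)."""
    n = len(y)
    t_mean = (n + 1) / 2
    y_mean = sum(y) / n
    s_ty = sum((t - t_mean) * (yi - y_mean) for t, yi in enumerate(y, start=1))
    s_tt = sum((t - t_mean) ** 2 for t in range(1, n + 1))
    b1 = s_ty / s_tt
    return y_mean - b1 * t_mean, b1


def seasonal_indexes(y, n_seasons):
    """Seasonal indexes under the multiplicative model (no cyclical effect)."""
    b0, b1 = fit_linear_trend(y)
    # Detrend: y_t / yhat_t leaves S_t * R_t.
    ratios = [yi / (b0 + b1 * t) for t, yi in enumerate(y, start=1)]
    # Average the ratios of each season to damp the random variation.
    averaged = []
    for s in range(n_seasons):
        season_ratios = ratios[s::n_seasons]
        averaged.append(sum(season_ratios) / len(season_ratios))
    # Normalize so the indexes sum to the number of seasons.
    total = sum(averaged)
    return [a * n_seasons / total for a in averaged]


# Quarterly hotel occupancy rates, 1991 Q1 through 1995 Q4.
occupancy = [
    0.561, 0.702, 0.800, 0.568,
    0.575, 0.738, 0.868, 0.605,
    0.594, 0.738, 0.729, 0.600,
    0.622, 0.708, 0.806, 0.632,
    0.665, 0.835, 0.873, 0.670,
]

indexes = seasonal_indexes(occupancy, 4)
for quarter, index in enumerate(indexes, start=1):
    print(f"Quarter {quarter}: {index:.3f}")
```

Because the averaged ratios for this data already sum to almost exactly 4, the normalization step barely changes them, which is consistent with the remark that no normalization is needed.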
Deseasonalizing time series

$\text{Seasonally adjusted time series} = \frac{\text{Actual time series}}{\text{Seasonal index}}$

By removing the seasonality, we can:
• compare data across different seasons
• identify changes in the other components of the time series.

Example – Hotel Occupancy

A new manager was hired in Q3 1994, when the hotel occupancy rate was 80.6%. In the next quarter, the occupancy rate was 63.2%. Should the manager be fired because of poor performance?

Method 1:
• compare occupancy rates in Q4 1994 (0.632) and Q3 1994 (0.806)
• the manager has underperformed, so he should be fired

Method 2:
• compare occupancy rates in Q4 1994 (0.632) and Q4 1993 (0.600)
• the manager has performed very well, so he should get a raise

Method 3:
• compare deseasonalized occupancy rates in Q4 1994 and Q3 1994
• Seasonally adjusted occupancy rate in Q4 1994 = (Actual occupancy rate in Q4 1994) / (Seasonal index for Q4) = 0.632/0.875 ≈ 0.722
• Recall: seasonally adjusted occupancy rate in Q3 1994 = 0.806/1.171 ≈ 0.688
• the manager has indeed performed well, so he should maybe get a raise

Recomposing the time series

Recomposition recreates the time series based only on the trend and seasonal components; you can then deduce the value of the random component.

$\hat{y}_t = \hat{T}_t \times \hat{S}_t = (.639 + .0052t)\hat{S}_t$

In period 1 (quarter 1): $\hat{y}_1 = \hat{T}_1 \times \hat{S}_1 = (.639 + .0052(1))(.878) = .566$

In period 2 (quarter 2): $\hat{y}_2 = \hat{T}_2 \times \hat{S}_2 = (.639 + .0052(2))(1.076) = .699$

[Figure: actual series, smoothed series, and the linear trend (regression) line, periods 1 to 19.]

Example – Recomposing the Time Series

Recompose the smoothed time series in the above example for periods 3 and 4, assuming our initial seasonal index figures of:
• Quarter 1: 0.878
• Quarter 2: 1.076
• Quarter 3: 1.171
• Quarter 4: 0.875

and the equation $\hat{y}_t = \hat{T}_t \times \hat{S}_t = (.639 + .0052t)\hat{S}_t$:

$\hat{y}_3 = (0.639 + 0.0052t) \times SI_3 = (0.639 + 0.0052 \times 3) \times 1.171$

$\hat{y}_4 = (0.639 + 0.0052t) \times SI_4 = (0.639 + 0.0052 \times 4) \times 0.875$

Seasonal Time Series with Indicator Variables

We create a seasonal time series model by using indicator variables to determine whether there is seasonal variation in the data. Then we can use this linear regression with indicator variables to forecast seasonal effects.

If there are n seasons, we use n-1 indicator variables to define the season of period t:
• [Indicator variable i] = 1 if period t belongs to season i
• [Indicator variable i] = 0 if period t does not belong to season i

Example – Hotel Occupancy

Let’s again build a model to forecast quarterly occupancy rates at a luxurious hotel, but this time we will use a different technique.

Data (Hotel Occupancy.xls): the same quarterly occupancy rates for 1991 through 1995 as in the seasonal index example.

Use regression analysis and indicator variables to forecast the hotel occupancy rate in 1996.

$Q_i = 1$ if quarter i occurs at t, and 0 otherwise.

| y | t | Q1 | Q2 | Q3 |
|---|---|---|---|---|
| 0.561 | 1 | 1 | 0 | 0 |
| 0.702 | 2 | 0 | 1 | 0 |
| 0.800 | 3 | 0 | 0 | 1 |
| 0.568 | 4 | 0 | 0 | 0 |
| 0.575 | 5 | 1 | 0 | 0 |
| 0.738 | 6 | 0 | 1 | 0 |
| 0.868 | 7 | 0 | 0 | 1 |
| 0.605 | 8 | 0 | 0 | 0 |
| . | . | . | . | . |
| . | . | . | . | . |

• Quarter 1 belongs to t = 1; quarters 2 and 3 do not belong to t = 1.
• Quarters 1, 2, and 3 do not belong to period t = 4.

The regression model

$y = b_0 + b_1 t + b_2 Q_1 + b_3 Q_2 + b_4 Q_3 + e$

| Regression Statistics | |
|---|---|
| Multiple R | 0.943022 |
| R Square | 0.88929 |
| Adj R Sq. | 0.859767 |
| Std. Error | 0.03806 |
| Observations | 20 |

ANOVA

| | df | SS | MS | F | Sig. F |
|---|---|---|---|---|---|
| Regression | 4 | 0.174531 | 0.043633 | 30.12217 | 5.2E-07 |
| Residual | 15 | 0.021728 | 0.001449 | | |
| Total | 19 | 0.196259 | | | |

| | Coeffs | S.E. | t Stat | P-value |
|---|---|---|---|---|
| Intercept | 0.55455 | 0.024812 | 22.35028 | 6.27E-13 |
| t | 0.005037 | 0.001504 | 3.348434 | 0.004399 |
| Q1 | 0.003512 | 0.02449 | 0.143423 | 0.887865 |
| Q2 | 0.139275 | 0.024258 | 5.74134 | 3.9E-05 |
| Q3 | 0.205237 | 0.024118 | 8.509752 | 3.99E-07 |

• Good fit.
• There is sufficient evidence to conclude that trend is present.
• There is insufficient evidence to conclude that seasonality causes the occupancy rate in quarter 1 to be different from that of quarter 4.
• Seasonality effects on the occupancy rate in quarters 2 and 3 are different from that of quarter 4.

The estimated regression model

$\hat{y}_t = .555 + .00504t + .0035Q_1 + .139Q_2 + .205Q_3$

Forecasts for 1996:
• $\hat{y}_{21} = .555 + .00504(21) + .0035(1) = .664$
• $\hat{y}_{22} = .555 + .00504(22) + .139(1) = .805$
• $\hat{y}_{23} = .555 + .00504(23) + .205(1) = .876$
• $\hat{y}_{24} = .555 + .00504(24) = .675$

[Figure: the coefficients b0, b2, b3, and b4 shown against quarters 1 to 4.]

Time-Series Forecasting with Autoregressive (AR) models

Autocorrelation among the errors of the regression model provides an opportunity to produce accurate forecasts. Correlation between consecutive residuals leads to the following autoregressive model:

$y_t = b_0 + b_1 y_{t-1} + e_t$

This type of model is termed a first-order autoregressive model, and is denoted AR(1). A model which includes both $y_{t-1}$ and $y_{t-2}$ is a second-order model, AR(2), and so on. Selecting between them is done on the basis of the significance of the highest-order term. If the highest-order term is not significant, drop it and rerun the model. Apply the same criterion to the new highest-order term, and so on.

Example – Consumer Price Index (CPI)

Forecast the increase in the Consumer Price Index (CPI) for the year 1994, based on the data collected for the years 1951-1993.

Data (CPI.xls)

| t | 1 | 2 | 3 | 4 | . . | 43 |
|---|---|---|---|---|---|---|
| Y(t) | 7.9 | 2.2 | 0.8 | 0.5 | . . | 3 |

Regressing the percent increase ($y_t$) on the time variable (t) does not lead to good results: $\hat{y}_t = 2.14 + .098t$, with $r^2 = 15.0\%$.

[Figure: Y(t) against t with the fitted trend line; residuals fall in runs marked (+) and (-).]

The residuals form a pattern over time. This indicates the potential presence of first-order autocorrelation between consecutive residuals.

An autoregressive model appears to be a desirable technique. The increases in the CPI for periods 1, 2, 3,… are predictors of the increases in the CPI for periods 2, 3, 4,…, respectively.

| Y(t) | Y(t-1) |
|---|---|
| 7.9 | |
| 2.2 | 7.9 |
| 0.8 | 2.2 |
| 0.5 | 0.8 |
| -0.4 | 0.5 |
| . | . |
| . | . |

| Regression Statistics | |
|---|---|
| Multiple R | 0.797851 |
| R Square | 0.636566 |
| Adj. R Sq. | 0.62748 |
| Std. Error | 1.943366 |
| Observations | 42 |

ANOVA

| | df | SS | MS | F | Sig. F |
|---|---|---|---|---|---|
| Regression | 1 | 264.5979 | 264.5979 | 70.06111 | 2.51E-10 |
| Residual | 40 | 151.0669 | 3.776673 | | |
| Total | 41 | 415.6648 | | | |

| | Coeffs | S.E. | t Stat | P-value |
|---|---|---|---|---|
| Intercept | 0.806644 | 0.506089 | 1.59388 | 0.118835 |
| Y(t-1) | 0.787045 | 0.094029 | 8.370251 | 2.51E-10 |

$\hat{y}_t = .8066 + .78705\,y_{t-1}$

Forecast for 1994 (t = 44): $\hat{y}_{44} = .8066 + .78705\,y_{43} = .8066 + .78705(3) = 3.16775$

Compare

[Figure: the linear trend model, Y(t) against t, next to the autoregressive model, Y(t) against Y(t-1).]

Selecting Among Alternative Forecasting Models

There are many forecasting models available.

[Figure: forecasts (o) against actual values (*) for Model 1 and Model 2.]

Which model performs better?

Model selection

To choose a forecasting method, we can evaluate forecast accuracy using the actual time series. The two most commonly used measures of forecast accuracy are:
• Mean Absolute Deviation: $MAD = \frac{\sum_{t=1}^{n} |y_t - F_t|}{n}$
• Sum of Squares for Forecast Error: $SSE = SSFE = \sum_{t=1}^{n} (y_t - F_t)^2$

In practice, SSE and SSFE are used interchangeably.

Procedure for model selection:
1. Use some of the observations to develop several competing forecasting models.
2. Run the models on the rest of the observations.
3. Calculate the accuracy of each model using both the MAD and SSE criteria.
4. Use the model which generates the lowest MAD value, unless it is important to avoid (even a few) large errors. In that case, use the best model as indicated by the lowest SSE.

Example – Model selection I

Assume we have annual data from 1950 to 2001. We used data from 1950 to 1997 to develop three alternate forecasting models (two different regression models and an autoregressive model). Use MAD and SSE to determine which model performed best, using the model forecasts versus the actual data for 1998-2001.

| Year | Actual y | Forecast: Reg 1 | Forecast: Reg 2 | Forecast: AR |
|---|---|---|---|---|
| 1998 | 129 | 136 | 118 | 130 |
| 1999 | 142 | 148 | 141 | 146 |
| 2000 | 156 | 150 | 158 | 170 |
| 2001 | 183 | 175 | 163 | 180 |

Solution
• For model 1 (each term pairs the actual y with the forecast for y, e.g. 129 and 136 for 1998):

$MAD = \frac{|129 - 136| + |142 - 148| + |156 - 150| + |183 - 175|}{4} = 6.75$

$SSE = (129 - 136)^2 + (142 - 148)^2 + (156 - 150)^2 + (183 - 175)^2 = 185$

• Summary of results

| | Reg 1 | Reg 2 | AR |
|---|---|---|---|
| MAD | 6.75 | 8.5 | 5.5 |
| SSE | 185 | 526 | 222 |

Example – Model selection II

For the actual values and the forecast values of a time series shown in the following table, calculate MAD and SSE.

| Forecast value $F_t$ | 173 | 186 | 192 | 211 | 223 |
|---|---|---|---|---|---|
| Actual value $y_t$ | 166 | 179 | 195 | 214 | 220 |

MAD =

SSE =
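The two accuracy measures can be sketched in Python using the figures from Example – Model selection I. This is an illustrative sketch only: the function names `mad` and `sse` and the dictionary layout are assumptions, not part of the slides.

```python
def mad(actual, forecast):
    """Mean Absolute Deviation: sum of |y_t - F_t| divided by n."""
    return sum(abs(y - f) for y, f in zip(actual, forecast)) / len(actual)


def sse(actual, forecast):
    """Sum of Squares for Forecast Error: sum of (y_t - F_t)^2."""
    return sum((y - f) ** 2 for y, f in zip(actual, forecast))


# Actual values and forecasts for 1998-2001 (Example - Model selection I).
actual = [129, 142, 156, 183]
forecasts = {
    "Reg 1": [136, 148, 150, 175],
    "Reg 2": [118, 141, 158, 163],
    "AR": [130, 146, 170, 180],
}

results = {name: (mad(actual, f), sse(actual, f)) for name, f in forecasts.items()}
for name, (model_mad, model_sse) in results.items():
    print(f"{name}: MAD = {model_mad}, SSE = {model_sse}")

# The two criteria can disagree, so report the best model under each.
best_by_mad = min(results, key=lambda name: results[name][0])
best_by_sse = min(results, key=lambda name: results[name][1])
print("lowest MAD:", best_by_mad)
print("lowest SSE:", best_by_sse)
```

In this example the AR model has the lowest MAD while Reg 1 has the lowest SSE, because the AR forecast for 2000 misses by 14 and squaring penalizes that single large error heavily. This is the situation step 4 of the procedure describes.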