Topic_7


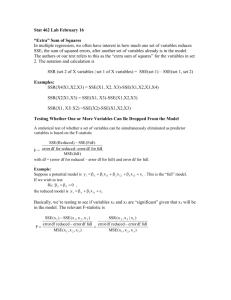

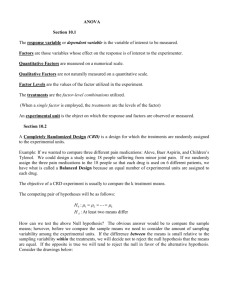

Topic 7: Analysis of Variance

Outline

- Partitioning sums of squares
- Breakdown of degrees of freedom
- Expected mean squares (EMS)
- F test
- ANOVA table
- General linear test
- Pearson Correlation / $R^2$

Analysis of Variance

- Organize results arithmetically
- Total sum of squares in Y is $\sum (Y_i - \bar{Y})^2$
- Partition this into two sources
  - Model (explained by regression)
  - Error (unexplained / residual)

$$Y_i - \bar{Y} = (Y_i - \hat{Y}_i) + (\hat{Y}_i - \bar{Y})$$

$$\sum (Y_i - \bar{Y})^2 = \sum (Y_i - \hat{Y}_i)^2 + \sum (\hat{Y}_i - \bar{Y})^2$$

Total Sum of Squares

- MST = SSTO/dfT is the usual estimate of the variance of Y if there are no explanatory variables
- SAS uses the term Corrected Total for this source
- Uncorrected is $\sum Y_i^2$
- "Corrected" means that we subtract the mean $\bar{Y}$ before squaring

Model Sum of Squares

- SSR $= \sum (\hat{Y}_i - \bar{Y})^2$
- dfR = 1 (due to the addition of the slope)
- MSR = SSR/dfR
- KNNL uses "regression" for what SAS calls "model"
- So SSR (KNNL) is the same as SS Model (SAS)

Error Sum of Squares

- SSE $= \sum (Y_i - \hat{Y}_i)^2$
- dfE = n-2 (both slope and intercept)
- MSE = SSE/dfE
- MSE is an estimate of the variance of Y taking into account (or conditioning on) the explanatory variable(s)
- MSE = $s^2$

ANOVA Table

| Source | df | SS | MS |
|---|---|---|---|
| Regression | 1 | $\sum (\hat{Y}_i - \bar{Y})^2$ | SSR/dfR |
| Error | n-2 | $\sum (Y_i - \hat{Y}_i)^2$ | SSE/dfE |
| Total | n-1 | $\sum (Y_i - \bar{Y})^2$ | SSTO/dfT |

Expected Mean Squares

- MSR, MSE are random variables
- $E(\text{MSR}) = \sigma^2 + \beta_1^2 \sum (X_i - \bar{X})^2$
- $E(\text{MSE}) = \sigma^2$
- When $H_0: \beta_1 = 0$ is true, $E(\text{MSR}) = E(\text{MSE})$

F test

- Under $H_0: \beta_1 = 0$, $F^* = \text{MSR}/\text{MSE} \sim F(\text{dfR}, \text{dfE}) = F(1, n-2)$
- See KNNL pgs 69-71
- When $H_0: \beta_1 = 0$ is false, MSR tends to be larger than MSE
- We reject $H_0$ when $F^*$ is large: if $F^* \ge F(1-\alpha, \text{dfR}, \text{dfE}) = F(.95, 1, n-2)$ for $\alpha = 0.05$
- In practice we use P-values

F test (continued)

- When $H_0: \beta_1 = 0$ is false, $F^*$ has a noncentral F distribution
- This can be used to calculate power
- Recall $t^* = b_1/s(b_1)$ tests $H_0: \beta_1 = 0$
- It can be shown that $(t^*)^2 = F^*$ (pg 71)
- The two approaches give the same P-value

ANOVA Table

| Source | df | SS | MS | F | P |
|---|---|---|---|---|---|
| Model | 1 | SSM | MSM | MSM/MSE | 0.## |
| Error | n-2 | SSE | MSE | | |
| Total | n-1 | | | | |

Note: Model is used here instead of Regression.
This is more similar to SAS.

Examples

- Tower of Pisa study (n=13 cases)

  `proc reg data=a1; model lean=year; run;`

- Toluca lot size study (n=25 cases)

  `proc reg data=toluca; model hours=lotsize; run;`

Pisa Output

| | |
|---|---|
| Number of Observations Read | 13 |
| Number of Observations Used | 13 |

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 1 | 15804 | 15804 | 904.12 | <.0001 |
| Error | 11 | 192.28571 | 17.48052 | | |
| Corrected Total | 12 | 15997 | | | |

Pisa Output (continued)

| | | | |
|---|---|---|---|
| Root MSE | 4.18097 | R-Square | 0.9880 |
| Dependent Mean | 693.69231 | Adj R-Sq | 0.9869 |
| Coeff Var | 0.60271 | | |

Parameter Estimates

| Variable | DF | Parameter Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|---|
| Intercept | 1 | -61.12088 | 25.12982 | -2.43 | 0.0333 |
| year | 1 | 9.31868 | 0.30991 | 30.07 | <.0001 |

$(30.07)^2 = 904.2$, which matches the F value 904.12 up to rounding error.

Toluca Output

| | |
|---|---|
| Number of Observations Read | 25 |
| Number of Observations Used | 25 |

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 1 | 252378 | 252378 | 105.88 | <.0001 |
| Error | 23 | 54825 | 2383.71562 | | |
| Corrected Total | 24 | 307203 | | | |

Toluca Output (continued)

| | | | |
|---|---|---|---|
| Root MSE | 48.82331 | R-Square | 0.8215 |
| Dependent Mean | 312.28000 | Adj R-Sq | 0.8138 |
| Coeff Var | 15.63447 | | |

Parameter Estimates

| Variable | DF | Parameter Estimate | Standard Error | t Value | Pr > \|t\| |
|---|---|---|---|---|---|
| Intercept | 1 | 62.36586 | 26.17743 | 2.38 | 0.0259 |
| lotsize | 1 | 3.57020 | 0.34697 | 10.29 | <.0001 |

$(10.29)^2 = 105.88$, the F value.

General Linear Test

- A different view of the same problem
- We want to compare two models
  - $Y_i = \beta_0 + \beta_1 X_i + e_i$ (full model)
  - $Y_i = \beta_0 + e_i$ (reduced model)
- Compare the two models using the error sum of squares; the better model will have a "smaller" mean square error

General Linear Test (continued)

- Let SSE(F) = SSE for the full model and SSE(R) = SSE for the reduced model
- In this test, $df_R$ and $df_F$ are the error degrees of freedom of the reduced and full models (not the regression df, dfR, used earlier)

$$F^* = \frac{\left(\text{SSE}(R) - \text{SSE}(F)\right) / (df_R - df_F)}{\text{SSE}(F) / df_F}$$

- Compare with $F(1-\alpha, df_R - df_F, df_F)$

Simple Linear Regression

- SSE(R) $= \sum (Y_i - b_0)^2 = \sum (Y_i - \bar{Y})^2 =$ SSTO
- SSE(F) = SSE
- $df_R = n-1$, $df_F = n-2$, $df_R - df_F = 1$
- $F^*$ = (SSTO - SSE)/MSE = SSR/MSE
- Same test as before
- This approach is more general

Pearson Correlation

- r is the usual correlation coefficient
- It is a number between -1 and +1 and measures the strength of the linear relationship
between two variables

$$r = \frac{\sum (X_i - \bar{X})(Y_i - \bar{Y})}{\sqrt{\sum (X_i - \bar{X})^2 \sum (Y_i - \bar{Y})^2}}$$

Pearson Correlation (continued)

- Notice that

$$r = b_1 \sqrt{\frac{\sum (X_i - \bar{X})^2}{\sum (Y_i - \bar{Y})^2}} = b_1 \left(\frac{s_X}{s_Y}\right)$$

- The test of $H_0: \beta_1 = 0$ is similar to the test of $H_0: \rho = 0$

$R^2$ and $r^2$

$$r^2 = b_1^2 \, \frac{\sum (X_i - \bar{X})^2}{\sum (Y_i - \bar{Y})^2} = \frac{\text{SSR}}{\text{SSTO}}$$

- Ratio of explained to total variation

$R^2$ and $r^2$ (continued)

- We use $R^2$ when the number of explanatory variables is arbitrary (simple and multiple regression)
- $r^2 = R^2$ only for simple regression
- $R^2$ is often multiplied by 100 and thereby expressed as a percent

$R^2$ and $r^2$ (continued)

- $R^2$ never decreases (and almost always increases) when additional explanatory variables are added to the model
- Adjusted $R^2$ "penalizes" larger models
- Adjusted $R^2$ doesn't necessarily get larger when variables are added

Pisa Output

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 1 | 15804 | 15804 | 904.12 | <.0001 |
| Error | 11 | 192.28571 | 17.48052 | | |
| Corrected Total | 12 | 15997 | | | |

R-Square is 0.9880 in SAS; by hand, SSM/SSTO = 15804/15997 = 0.9879 (the small difference is rounding in the displayed sums of squares).

Toluca Output

Analysis of Variance

| Source | DF | Sum of Squares | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| Model | 1 | 252378 | 252378 | 105.88 | <.0001 |
| Error | 23 | 54825 | 2383.71562 | | |
| Corrected Total | 24 | 307203 | | | |

R-Square is 0.8215 in SAS; by hand, SSM/SSTO = 252378/307203 = 0.8215.

Background Reading

- You may find Sections 2.10 and 2.11 interesting
- 2.10 provides cautionary remarks
  - We will discuss these as they arise
- 2.11 discusses the bivariate Normal distribution
  - Similarities and differences
  - Confidence interval for r
- Program topic7.sas has the code to generate the ANOVA output
- Read Chapter 3
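
As a cross-check on the two SAS outputs, the sketch below rebuilds F*, the general linear test statistic, R-Square, and Adj R-Sq from the sums of squares printed in the ANOVA tables. It is written in Python rather than SAS purely so it can be run anywhere; it is not taken from topic7.sas. The function name `anova_summary` and the dictionary keys are hypothetical, and the adjusted R-square formula (1 - MSE/MST) is the standard definition, which the slides do not state explicitly. Because the inputs are the rounded values SAS displays, results agree with SAS only up to rounding.

```python
def anova_summary(ssm, sse, n):
    """Rebuild ANOVA quantities for simple linear regression.

    ssm, sse: model and error sums of squares as printed by SAS (rounded).
    n: number of observations.
    """
    df_model = 1          # one slope
    df_error = n - 2      # slope and intercept estimated
    df_total = n - 1
    ssto = ssm + sse      # partition: SSTO = SSM + SSE

    msm = ssm / df_model
    mse = sse / df_error
    f_star = msm / mse

    # General linear test: reduced model (intercept only) has SSE(R) = SSTO
    glt_f = ((ssto - sse) / (df_total - df_error)) / (sse / df_error)

    r2 = ssm / ssto
    # Standard adjusted R-square (assumed definition, not shown on the slides)
    adj_r2 = 1 - mse / (ssto / df_total)

    return {"f_star": f_star, "glt_f": glt_f, "r2": r2, "adj_r2": adj_r2}


# Sums of squares copied from the Pisa and Toluca ANOVA tables
pisa = anova_summary(ssm=15804, sse=192.28571, n=13)
toluca = anova_summary(ssm=252378, sse=54825, n=25)

# t values copied from the Parameter Estimates tables; (t*)^2 should be close to F*
t_pisa, t_toluca = 30.07, 10.29

for name, res, t in [("Pisa", pisa, t_pisa), ("Toluca", toluca, t_toluca)]:
    print(name,
          "F* =", round(res["f_star"], 2),
          "GLT F =", round(res["glt_f"], 2),
          "t^2 =", round(t ** 2, 2),
          "R2 =", round(res["r2"], 4),
          "adj R2 =", round(res["adj_r2"], 4))
```

The general linear test statistic and MSM/MSE coincide here because, for simple linear regression, SSE(R) - SSE(F) is exactly the model sum of squares.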