Test #2

advertisement

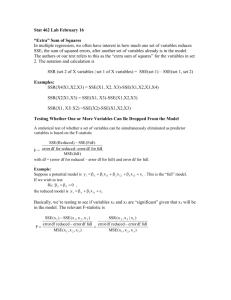

Test #2 Answers
STAT 870, Fall 2012

Complete the problems below. Make sure to fully explain all answers and show your work to receive full credit!

1) (62 total points, p. 300 of KNN) A researcher studied the effects of the charge rate and temperature on the life of a new type of power cell in a preliminary small-scale experiment. The charge rate (X1) was controlled at three levels (0.6, 1.0 and 1.4 amperes) and the ambient temperature (X2) was controlled at three levels (10, 20, 30°C). Factors pertaining to the discharge of the power cell were held at fixed levels. The life of the power cell (Y) was measured as the number of discharge-charge cycles that a power cell underwent before it failed. The data are available at http://www.chrisbilder.com/test.htm. Below is how I read the data set into R.

set1<-read.table(file = "C:\\data\\test2.csv", header=TRUE, sep = ",")
head(set1)

Note that there are n = 11 observations. Answer the following questions.

a) (6 points) Find the second order sample regression model using X1 and X2 to predict Y.

> mod.fit<-lm(formula = y ~ x1 + x2 + x1:x2 + I(x1^2) + I(x2^2), data = set1)
> summary(mod.fit)

Call:
lm(formula = y ~ x1 + x2 + x1:x2 + I(x1^2) + I(x2^2), data = set1)

Residuals:
      1       2       3       4       5       6       7       8       9      10      11
-21.465   9.263  12.202  41.930  -5.842 -31.842  21.158 -25.404 -20.465   7.263  13.202

Coefficients:
             Estimate Std. Error t value Pr(>|t|)
(Intercept)  337.7215   149.9616   2.252   0.0741 .
x1          -539.5175   268.8603  -2.007   0.1011
x2             8.9171     9.1825   0.971   0.3761
I(x1^2)      171.2171   127.1255   1.347   0.2359
I(x2^2)       -0.1061     0.2034  -0.521   0.6244
x1:x2          2.8750     4.0468   0.710   0.5092
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 32.37 on 5 degrees of freedom
Multiple R-squared:  0.9135,  Adjusted R-squared:  0.8271
F-statistic: 10.57 on 5 and 5 DF,  p-value: 0.01086

The sample regression model is

$\hat{Y} = 337.7215 - 539.5175X_1 + 8.9171X_2 + 171.2171X_1^2 - 0.1061X_2^2 + 2.8750X_1X_2$

b) (8 points) Suppose you were using one of these new power cells. Find the appropriate 95% interval estimate of its life for a charge rate of 1.0 ampere and a power cell stored at 20°C. Interpret the interval. Use the second order model in part a) when calculating the interval.

The interval formula is

$\hat{Y}_h \pm t(1-\alpha/2;\, n-p)\sqrt{\widehat{Var}(Y_{h(new)} - \hat{Y}_h)}$, where $\widehat{Var}(Y_{h(new)} - \hat{Y}_h) = MSE\,\left(1 + X_h'(X'X)^{-1}X_h\right)$

and $X_h = (1, 1, 20, 1, 400, 20)'$, with elements ordered as the intercept, $X_1$, $X_2$, $X_1^2$, $X_2^2$ and $X_1X_2$ (the same order as the coefficients in part a).

The prediction interval is 69.31 < $Y_{h(new)}$ < 256.37. I am 95% confident that the lifetime of the power cell will be between 69.31 and 256.37 discharge-charge cycles.

> predict(object = mod.fit, newdata = data.frame(x1 = 1, x2 = 20), interval = "prediction", level = 0.95)
       fit      lwr      upr
1 162.8421 69.31035 256.3739

c) (6 points) Provide three ways in R in which the first order terms and an interaction can be coded into a formula statement for the lm() function. Note that you do not need to find the actual sample regression model.

x1 + x2 + x1:x2,   x1*x2,   (x1+x2)^2

d) (6 points) Write out what the X matrix would be for the model of $E(Y) = \beta_0 + \beta_1X_1 + \beta_2X_2 + \beta_3X_1X_2$. You only need to write out the first and last observations in your X matrix to receive credit.

$X = \begin{bmatrix} 1 & X_{11} & X_{12} & X_{11}X_{12} \\ \vdots & \vdots & \vdots & \vdots \\ 1 & X_{n1} & X_{n2} & X_{n1}X_{n2} \end{bmatrix} = \begin{bmatrix} 1 & 0.6 & 10 & 6 \\ \vdots & \vdots & \vdots & \vdots \\ 1 & 1.4 & 30 & 42 \end{bmatrix}$

Note that the model.matrix() function in R could be used to help find this as well.

e) (15 points) Perform the appropriate hypothesis test to compare the models of $E(Y) = \beta_0 + \beta_1X_1 + \beta_2X_2$ to $E(Y) = \beta_0 + \beta_1X_1 + \beta_2X_2 + \beta_3X_1X_2 + \beta_4X_1^2$. Use α = 0.05.

i) Ho: $\beta_3 = \beta_4 = 0$ vs. Ha: at least one of $\beta_3, \beta_4$ is not 0
ii) F = 1.18
iii) p-value = 0.3694
iv) α = 0.05
v) Because 0.3694 > 0.05, do not reject Ho. There is not sufficient evidence to indicate the extra terms in the Ha model are needed.
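The F statistic in part e can be reproduced by hand from the residual sums of squares that anova() reports for the two models (7700.3 on 8 df and 5525.4 on 6 df). The short R sketch below does this and gets the p-value from pf(); because those RSS values are printed rounded to one decimal, the result agrees with the anova() output only up to rounding.

```r
# Partial F-test for part e, rebuilt from the residual sums of squares that
# anova(mod.fit1, mod.fit2) prints (rounded there to one decimal place).
sse_reduced <- 7700.3  # SSE for y ~ x1 + x2
df_reduced  <- 8       # its error degrees of freedom
sse_full    <- 5525.4  # SSE for y ~ x1 + x2 + x1:x2 + I(x1^2)
df_full     <- 6       # its error degrees of freedom

# Numerator: reduction in SSE per extra parameter (here 2 extra parameters).
# Denominator: MSE of the larger model.
num_df <- df_reduced - df_full
F_stat <- ((sse_reduced - sse_full) / num_df) / (sse_full / df_full)

# Upper-tail probability of the F(num_df, df_full) distribution
p_value <- pf(F_stat, df1 = num_df, df2 = df_full, lower.tail = FALSE)

round(c(F = F_stat, p.value = p_value), 4)
```

Both values agree with the anova() output to within rounding, and the p-value is well above α = 0.05.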
> mod.fit1<-lm(formula = y ~ x1 + x2, data = set1)
> mod.fit2<-lm(formula = y ~ x1 + x2 + x1:x2 + I(x1^2), data = set1)
> anova(mod.fit1, mod.fit2)
Analysis of Variance Table

Model 1: y ~ x1 + x2
Model 2: y ~ x1 + x2 + x1:x2 + I(x1^2)
  Res.Df    RSS Df Sum of Sq      F Pr(>F)
1      8 7700.3
2      6 5525.4  2    2175.0 1.1809 0.3694

f) Consider the models of $E(Y) = \beta_0 + \beta_1X_1$, $E(Y) = \beta_0 + \beta_1X_2$, and $E(Y) = \beta_0 + \beta_1X_1 + \beta_2X_2$ and answer the following questions.

i) (7 points) Using the AIC criterion, which model of the three is the best? Explain.

The model with X1 and X2, since it has the smallest AIC value. Using my fit.stat() function:

> sum.fit<-summary(mod.fit1)
> mod1<-fit.stat(model = y ~ x1 + x2, data = set1, MSE.all = sum.fit$sigma^2)
> mod2<-fit.stat(model = y ~ x1, data = set1, MSE.all = sum.fit$sigma^2)
> mod3<-fit.stat(model = y ~ x2, data = set1, MSE.all = sum.fit$sigma^2)
> rbind(mod1, mod2, mod3)
        Rsq    AdjRsq AIC.stat SBC.stat    PRESS       Cp
1 0.8729444 0.8411805 111.2790 112.8706 14790.59  3.00000
2 0.3086191 0.2317990 127.9137 129.1074 67914.22 36.53249
3 0.5643253 0.5159170 122.8340 124.0277 36136.55 20.43206

ii) (7 points) Using the R² criterion, which model of the three is the best? Explain.

The model with X1 and X2, since it has the largest R² value and there is a substantial improvement from the one-variable models (R² = 0.31 and 0.56) to the two-variable model (R² = 0.87).

iii) (7 points) Calculate the AIC for the second order model. Is this better than the model(s) you chose previously? Explain.

> AIC(mod.fit, k = 2)
[1] 113.0456

The AIC value is 113.0456, which is larger than the value of 111.2790 for the first-order model chosen above. Therefore, the second order model is not better.

2) (38 total points) Answer the following questions.

a) (6 points) Why is the adjusted R² preferred over R² as a measurement of model fit?

The adjusted R² adjusts for the number of predictor variables in the model. Unlike R², which never decreases when a variable is added, the adjusted R² increases only if the added variable lowers MSE, so it cannot simply be inflated by adding unimportant variables to the model.
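The adjustment in part a can be made concrete with $R_a^2 = 1 - \frac{n-1}{n-p}(1 - R^2)$, where p counts the regression parameters including the intercept. The R sketch below uses a small helper, adj_r2() (a name chosen only for this illustration), to reproduce adjusted R² values printed earlier in this test from their R² values, and then holds R² fixed while adding one parameter to show the penalty.

```r
# Adjusted R^2 = 1 - (n - 1) / (n - p) * (1 - R^2), where p is the number of
# regression parameters, including the intercept.
adj_r2 <- function(r2, n, p) {
  1 - (n - 1) / (n - p) * (1 - r2)
}

# Second order model (question 1 part a): R^2 = 0.9135 with p = 6
adj_r2(0.9135, n = 11, p = 6)

# First-order model y ~ x1 + x2 (question 1 part f): R^2 = 0.8729444 with p = 3
adj_r2(0.8729444, n = 11, p = 3)

# Same R^2 but one more (useless) parameter: the adjusted value goes down
adj_r2(0.8729444, n = 11, p = 4)
```

The first two calls give about 0.827 and 0.8411805, matching the printed Adjusted R-squared (0.8271, up to rounding of R²) and AdjRsq values; the third call is smaller than the second, which is exactly the penalty that R² itself lacks.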
b) (10 points) For the regression model of $E(Y) = \beta_0 + \beta_1X_1 + \cdots + \beta_gX_g + \beta_{g+1}X_{g+1} + \cdots + \beta_{p-1}X_{p-1}$, the partial F-test uses the statistic

$F = \dfrac{\left[SSE(X_1,\ldots,X_g) - SSE(X_1,\ldots,X_g,X_{g+1},\ldots,X_{p-1})\right]/(p-1-g)}{SSE(X_1,\ldots,X_g,X_{g+1},\ldots,X_{p-1})/(n-p)}$

for the test of Ho: $\beta_{g+1} = \cdots = \beta_{p-1} = 0$ vs. Ha: at least one of the β's in Ho is not 0. Why do large values of F indicate evidence against the null hypothesis? Answer the question without reference to the critical value or a p-value.

The key part to answering this question is to understand what is represented by the numerator: the reduction in SSE obtained by adding Xg+1, …, Xp-1, per added parameter. Notice that if SSE(X1, …, Xg) is very large compared to SSE(X1, …, Xg, Xg+1, …, Xp-1), F will be very large. This occurs when the additional variables Xg+1, …, Xp-1 substantially lower the SSE. Thus, these additional variables are important given that the other variables are in the model.

c) (6 points) Why do values of Cp close to p indicate a good model?

$C_p = \dfrac{SSE(X_1,\ldots,X_{p-1})}{MSE(X_1,\ldots,X_{P-1})} - (n - 2p)$

Note that

$\dfrac{SSE(X_1,\ldots,X_{p-1})}{MSE(X_1,\ldots,X_{P-1})} = \dfrac{(n-p)\,MSE(X_1,\ldots,X_{p-1})}{MSE(X_1,\ldots,X_{P-1})} \approx n - p$

when the p - 1 predictor variables are about as good as all of the P - 1 variables (so that the two MSEs are about equal). This leads Cp to be approximately (n - p) - (n - 2p) = p.

d) (10 points) Explain stepwise forward selection.

This is a combination of forward selection and backward elimination. The process begins with two steps of forward selection in order to add two predictor variables to a model that starts with no variables. Backward elimination is then performed to see if either of these two predictor variables can be removed. Forward selection and backward elimination then alternate to see if any more variables can be added to or removed from the model. The process stops when no more variables can be added or removed.

e) (6 points) What is the difference between SSR(X2) and SSR(X2 | X1)? Explain.

SSR(X2) is the regular regression sum of squares for a model with X2 and no other predictor variables.
SSR(X2 | X1) measures the amount that SSE is reduced by adding X2 to a regression model with X1 already in it, that is, SSR(X2 | X1) = SSE(X1) - SSE(X1, X2). Because this measures the reduction in SSE, it is examining the amount of additional variation explained by X2 (this is why we call it an SSR).

3) (3 points, extra credit) In what year was Reggie Miller inducted into the Naismith Memorial Basketball Hall of Fame?

2012. Note that we discussed during class whether or not Reggie Miller was a member of the hall of fame. While we were not sure, one could have looked it up after class.
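The quantities in question 2 parts c) and e) can also be computed directly for the power cell data from question 1. The R sketch below assumes the data frame matches test2.csv: the values are the power cell data from p. 300 of KNN, and they reproduce the residuals and R² values printed in question 1. The helper cp() is hypothetical, written only for this sketch (it is not the fit.stat() function used above); like the fit.stat() call, it takes MSE from the x1 + x2 model.

```r
# Power cell data from p. 300 of KNN. ASSUMPTION: these values match
# test2.csv; they reproduce the residuals and R^2 values printed in question 1.
set1 <- data.frame(
  x1 = c(0.6, 1.0, 1.4, 0.6, 1.0, 1.0, 1.0, 1.4, 0.6, 1.0, 1.4),
  x2 = c(10, 10, 10, 20, 20, 20, 20, 20, 30, 30, 30),
  y  = c(150, 86, 49, 288, 157, 131, 184, 109, 279, 235, 224)
)
n <- nrow(set1)

# Question 2 e): anova() on a single model gives sequential sums of squares,
# so the x2 row for y ~ x1 + x2 is SSR(X2 | X1) = SSE(X1) - SSE(X1, X2).
ssr_x2_given_x1 <- anova(lm(y ~ x1 + x2, data = set1))["x2", "Sum Sq"]
ssr_x2          <- anova(lm(y ~ x2, data = set1))["x2", "Sum Sq"]

# Question 2 c): Mallows' Cp, with MSE taken from the model containing all
# candidate predictors (x1 and x2, as in the fit.stat() call above).
mse_all <- summary(lm(y ~ x1 + x2, data = set1))$sigma^2

# cp() is a hypothetical helper for this sketch, not the fit.stat() function
cp <- function(formula) {
  fit <- lm(formula, data = set1)
  p <- length(coef(fit))  # number of parameters, including the intercept
  sum(residuals(fit)^2) / mse_all - (n - 2 * p)
}

c(SSR.x2 = ssr_x2, SSR.x2.given.x1 = ssr_x2_given_x1)
c(x1.x2 = cp(y ~ x1 + x2), x1 = cp(y ~ x1), x2 = cp(y ~ x2))
```

The Cp values agree with the Cp column of the fit.stat() table (3, 36.53249 and 20.43206), and the full model's Cp equals p = 3 exactly, as it must. Because this design is balanced, X1 and X2 are uncorrelated, so SSR(X2) and SSR(X2 | X1) happen to be equal here (both 34201.5); with correlated predictors the two generally differ, which is the distinction part e) is about.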