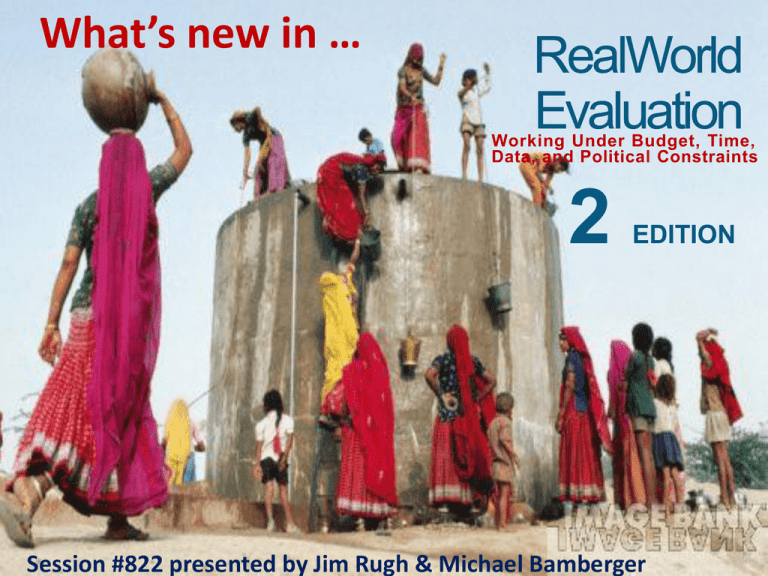

What's new in the new version


What’s new in … RealWorld Evaluation: Working Under Budget, Time, Data, and Political Constraints, 2nd Edition

Session #822, presented by Jim Rugh & Michael Bamberger

Session Outline
1. Additional evaluation designs
2. A fresh look at non-experimental designs
3. Understanding the context
4. Broadening the focus of program theory
5. The benefits of mixed-method designs
6. Evaluating complicated and complex programs
7. Greater focus on responsible professional practice
8. Quality assurance and threats to validity
9. Organizing and managing evaluations
10. The road ahead (issues still to be addressed)

What’s New in RealWorld Evaluation? Evaluation Designs

In the designs below, P is an observation of project participants, C is an observation of the comparison group, and X is the intervention.

Design #1.1: Longitudinal Experimental Design
• Project participants: P1 X P2 X P3 P4
• Comparison group: C1 C2 C3 C4
• Observation points: baseline, midterm, end of project evaluation, post project evaluation
• Research subjects randomly assigned either to project or control group.

Design #1.2: Longitudinal Quasi-experimental
• Project participants: P1 X P2 X P3 P4
• Comparison group: C1 C2 C3 C4
• Observation points: baseline, midterm, end of project evaluation, post project evaluation

Design #2.1: Experimental (pre+post, with comparison)
• Project participants: P1 X P2
• Comparison group: C1 C2
• Observation points: baseline, end of project evaluation
• Research subjects randomly assigned either to project or control group.

Design #2.2A: Quasi-experimental (pre+post, with comparison)
• Project participants: P1 X P2
• Comparison group: C1 C2
• Observation points: baseline, end of project evaluation

Design #2.2B: Quasi-experimental (retrospective baseline)
• Project participants: P1 X P2
• Comparison group: C1 C2
• Observation points: baseline, end of project evaluation

Design #3.1: Double Difference starting at mid-term
• Project participants: X P1 X P2
• Comparison group: C1 C2
• Observation points: midterm, end of project evaluation

Design #4.1A: Pre+post of project; post-only comparison
• Project participants: P1 X P2
• Comparison group: C
• Observation points: baseline, end of project evaluation

Design #4.1B: Post + retrospective of project; post-only comparison
• Project participants: P1 X P2
• Comparison group: C
• Observation points: baseline, end of project evaluation

Design #5: Post-test only of project and comparison
• Project participants: X P
• Comparison group: C
• Observation points: end of project evaluation

Design #6: Pre+post of project; no comparison
• Project participants: P1 X P2
• Observation points: baseline, end of project evaluation

Design #7: Post-test only of project participants
• Project participants: X P
• Observation points: end of project evaluation

The 7 Basic RWE Design Frameworks

| Design | T1 (baseline) | X (intervention) | T2 (midterm) | X (intervention, cont.) | T3 (endline) | T4 (ex-post) |
|---|---|---|---|---|---|---|
| 1 | P1 C1 | X | P2 C2 | X | P3 C3 | P4 C4 |
| 2 | P1 C1 | X | | X | P2 C2 | |
| 3 | | X | P1 C1 | X | P2 C2 | |
| 4 | P1 | X | | X | P2 C2 | |
| 5 | | X | | X | P1 C1 | |
| 6 | P1 | X | | X | P2 | |
| 7 | | X | | X | P1 | |
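The double-difference idea named in Design #3.1 can be illustrated with a short calculation. This is a minimal sketch, not taken from the presentation: it assumes P1, P2, C1 and C2 stand for mean outcome scores of the project and comparison groups at the first and second observation points, and it uses the standard difference-in-differences formula. The function name `double_difference` and the sample numbers are hypothetical.

```python
def double_difference(p1: float, p2: float, c1: float, c2: float) -> float:
    """Return (P2 - P1) - (C2 - C1), the double-difference estimate.

    Assumption: each argument is a group mean of the outcome indicator.
    """
    project_change = p2 - p1      # change observed among project participants
    comparison_change = c2 - c1   # change observed in the comparison group
    return project_change - comparison_change


# Hypothetical mean scores, for illustration only.
estimate = double_difference(p1=40.0, p2=55.0, c1=42.0, c2=47.0)
print(estimate)  # 10.0
```

Subtracting the comparison group's change removes the part of the project group's change that would plausibly have happened anyway, which is why designs that lack C1 or P1 (Designs 4 to 7) cannot support this estimate directly.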
A fresh look at non-experimental evaluation designs

Non-Experimental Designs [NEDs]
• NEDs are impact evaluation designs that do not include a matched comparison group
• Outcomes and impacts assessed without a conventional counterfactual to address the question: “what would have been the situation of the target population if the project had not taken place?”

Situations in which an NED may be the best design option
• Complex programs
• Not possible to define a comparison group
• When the project involves complex processes of behavioral change
• Outcomes not known in advance
• Many outcomes are qualitative
• Projects operate in different local settings
• When it is important to study implementation
• Project evolves slowly over a long period of time

Some potentially strong NEDs
A. Interrupted time series
B. Single case evaluation designs
C. Longitudinal designs
D. Mixed method case study designs
E. Analysis of causality through program theory models
F. Concept mapping

A. Interrupted time series
[Figure: monthly reports of alcohol-related driving accidents, with the introduction of the anti-drinking law marked]

B. Single case designs
• Phase 1: baseline (observation and rating), treatment, post-test (observation and rating)
• Phase 2: baseline (observation and rating), treatment, post-test (observation and rating)
• Phase 3: baseline (observation and rating), treatment, post-test (observation and rating)

Single case designs
• The same subject or group may receive the treatment 3 times under carefully controlled conditions, or different groups may be treated each time.
• The baseline and posttest are rated by a team of experts, usually based on observation
• If there is a significant change in each phase the treatment is considered to have produced an effect

A Mixed-Method Case Study [Non-Experimental] Design
• Inputs, implementation, outputs
• Contextual analysis
• Process analysis
• Program theory + theory of change
• National household survey
• Qualitative data collection
• Defining a typology of individuals, households, groups or communities
• Selecting a representative sample of cases: random or purposive
• Ensuring sample is large enough to generalize
• Preparation and analysis of case studies

Understanding the Context

The Importance of context
• Contextual factors: political context; economic context; security (environmental, conflict, domestic violence); institutional context; socio-cultural characteristics
• Project elements shown in the diagram: project implementation, outcomes, impacts, sustainability

Broadening the focus of program theory

Benefits of Mixed-Method Designs
Complex Evaluation Framework

Simple projects, complicated programs and complex development interventions
[Diagram: a scale running from “Small, simple” to “Large, complex”]

Simple projects
• “Blue print” producing standardized product
• Relatively linear
• Limited number of services
• Time-bound
• Defined and often small target population
• Defined objectives

Complicated programs
• May include a number of projects and wider scope
• Often involves several blueprint approaches
• Defined objectives but often broader and less precise and harder to measure
• Often not time-bound
• Context important
• Multiple donors and national agencies

Complex interventions
• Country-led planning and evaluation
• Non linear
• Many components or services
• Often covers whole country
• Multiple and broad objectives
• May provide budget support with no clear definition of scope or services
• Multiple donors and agencies
• Context is critical

The Special Challenges of Assessing Outcomes for Complex Programs
1. Most conventional impact evaluation designs cannot be applied to evaluating complex programs
2. No clearly defined activities or objectives
   – General budget and technical support integrated into broader government programs
   – Multiple activities
   – Target populations not clearly defined
   – Time-lines may not be clearly defined

Special challenges continued
3. Multiple actors
4. No baseline data
5. Difficult to define a conventional comparison group

Alternative approaches for defining the counterfactual for complex interventions
1. Theory driven evaluation
3. Quantitative approaches
4. Qualitative approaches
5. Mixed method designs
6. Rating scales
7. Integrated strategies for strengthening the evaluation designs

STRATEGIES FOR EVALUATING COMPLEX PROGRAMS

Counterfactual designs
• Attribution analysis
• Contribution analysis
• Substitution analysis

Approaches
• Theory-based approaches
• Qualitative approaches
• Quantitative approaches
• Mixed method designs
• Rating scales

Strengthening alternative counterfactuals
• “Unpacking complex programs”
• Portfolio analysis
• Reconstructing baseline data
• Creative use of secondary data
• Secondary data
• Triangulation

Other elements of the diagram
• Estimating impacts
• The value-added of agency X
• Net increase in resources for a program

Greater Focus on Responsible Professional Practice

Quality Assurance and Threats to Validity

Quality assurance framework
• Dimensions: objectivity/credibility, internal validity, design validity, statistical validity, construct validity, external validity
• Threats to validity worksheets: quantitative, qualitative, mixed method

Organizing and Managing Evaluations

Organizational and management issues
1. Planning and managing the evaluation
   A. Preparing the evaluation
   B. Recruiting the evaluators
   C. Designing the evaluation
   D. Implementing the evaluation
   E. Reporting and disseminating the evaluation findings
   F. Ensuring the implementation of the recommendations

Organization and management [continued]
2. Building in quality assurance procedures
3. Designing “evaluation ready” programs
4. Evaluation capacity development
5. Institutionalizing impact evaluation systems at the country and sector levels

The Road Ahead

The RWE Perspective on the Methods Debate: Limitations of RCTs
1. Inflexibility.
2. Hard to adapt sample to changing circumstances.
3. Hard to adapt to changing circumstances.
4. Problems with collecting sensitive information.
5. Mono-method bias.
6. Difficult to identify and interview difficult to reach groups.
7. Lack of attention to the project implementation process.
8. Lack of attention to context.
9. Focus on one intervention.
10. Limitation of direct cause-effect attribution.

[Diagram: a results hierarchy running from Interventions 2.2.1 to 2.2.3, through Outputs 2.1 to 2.3 and Outcomes 1 to 3, up to the Desired Impact and its consequences, contrasting “A Simple RCT” with “A more comprehensive design”]

To attempt to conduct an impact evaluation of a program using only one pre-determined tool is to suffer from myopia, which is unfortunate. On the other hand, to prescribe to donors and senior managers of major agencies that there is a single preferred design and method for conducting all impact evaluations can have, and has had, unfortunate consequences for all of those who are involved in the design, implementation and evaluation of international development programs.

In any case, experimental designs, whatever their merits, can only be applied in a very small proportion of impact evaluations in the real world.

What else do we address in the “Road Ahead” final chapter?
1. Mixed Methods: The Approach of Choice for Most RealWorld Evaluations
2. Greater Attention Must Be Given to the Management of Evaluations
3. The Challenge of Institutionalization
4. The Importance of Competent Professional and Ethical Practice
5. The Importance of Process
6. Creative Approaches for the Definition and Use of Counterfactuals
7. Strengthening Quality Assurance and Threats to Validity Analysis
8. Defining Minimum Acceptable Quality Standards for Conducting Evaluations Under Constraints

This book addresses the challenges of conducting program evaluations in real-world contexts where evaluators and their clients face budget and time constraints and where critical data may be missing. The book is organized around a seven-step model developed by the authors, which has been tested and refined in workshops and in practice. Vignettes and case studies, representing evaluations from a variety of geographic regions and sectors, demonstrate adaptive possibilities for small projects with budgets of a few thousand dollars to large-scale, long-term evaluations of complex programs. The text incorporates quantitative, qualitative, and mixed-method designs and this Second Edition reflects important developments in the field over the last five years.

New to the Second Edition:
• Adds two new chapters on organizing and managing evaluations, including how to strengthen capacity and promote the institutionalization of evaluation systems
• Includes a new chapter on the evaluation of complex development interventions, with a number of promising new approaches presented
• Incorporates new material, including on ethical standards, debates over the “best” evaluation designs and how to assess their validity, and the importance of understanding settings
• Expands the discussion of program theory, incorporating theory of change, contextual and process analysis, multi-level logic models, using competing theories, and trajectory analysis
• Provides case studies of each of the 19 evaluation designs, showing how they have been applied in the field

“This book represents a significant achievement. The authors have succeeded in creating a book that can be used in a wide variety of locations and by a large community of evaluation practitioners.” (Michael D. Niles, Missouri Western State University)

“This book is exceptional and unique in the way that it combines foundational knowledge from social sciences with theory and methods that are specific to evaluation.” (Gary Miron, Western Michigan University)

“The book represents a very good and timely contribution worth having on an evaluator’s shelf, especially if you work in the international development arena.” (Thomaz Chianca, independent evaluation consultant, Rio de Janeiro, Brazil)

RealWorld Evaluation: Working Under Budget, Time, Data, and Political Constraints, 2nd Edition. Michael Bamberger, Jim Rugh, Linda Mabry.