Independent Random Variables: Covariance and Correlation


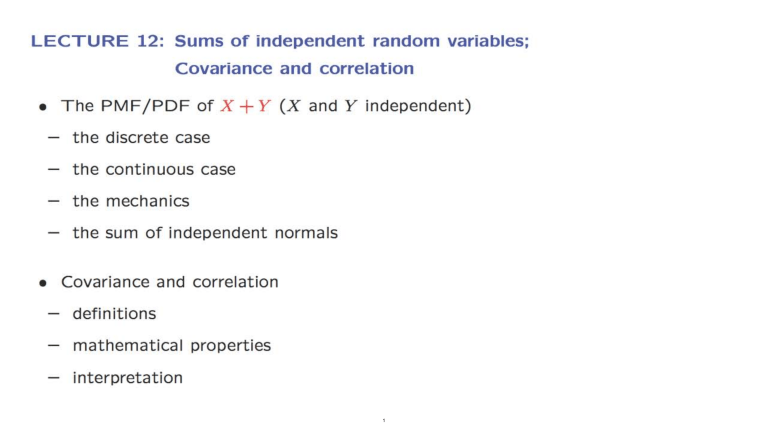

LECTURE 12: Sums of independent random variables; covariance and correlation

• The PMF/PDF of X + Y (X and Y independent)
  - the discrete case
  - the continuous case
  - the mechanics
  - the sum of independent normals

• Covariance and correlation
  - definitions
  - mathematical properties
  - interpretation

The distribution of X + Y: the discrete case

• Z = X + Y; X, Y independent, discrete

• known PMFs

$$p_Z(3) = P(X = 0, Y = 3) + P(X = 1, Y = 2) + \cdots = \sum_x p_X(x)\, p_Y(3 - x)$$

$$p_Z(z) = \sum_x p_X(x)\, p_Y(z - x)$$

[Figure: the (x, y) plane with the points (0, 3), (1, 2), (2, 1), (3, 0), all of which lie on the line x + y = 3]

Discrete convolution mechanics

$$p_Z(z) = \sum_x p_X(x)\, p_Y(z - x)$$

[Figure: bar plots of the PMFs $p_X$ and $p_Y$, followed by the flipped and the flipped-and-shifted PMF of Y]

• To find $p_Z(3)$:
  - Flip (horizontally) the PMF of Y
  - Put it underneath the PMF of X
  - Right-shift the flipped PMF by 3
  - Cross-multiply and add

• Repeat for other values of z
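The flip, shift, cross-multiply, and add steps amount to summing $p_X(x)\,p_Y(y)$ over all pairs with $x + y = z$. Below is a minimal Python sketch of that sum. The two PMFs are hypothetical values chosen for illustration (they are not read off the slide's figure), and `convolve_pmfs` is a helper name introduced here.

```python
from collections import defaultdict
from fractions import Fraction


def convolve_pmfs(p_x, p_y):
    """PMF of Z = X + Y for independent X and Y (dicts mapping value -> probability)."""
    p_z = defaultdict(Fraction)  # missing keys start at Fraction(0)
    for x, px in p_x.items():
        for y, py in p_y.items():
            # Each pair (x, y) contributes p_X(x) * p_Y(y) to p_Z(x + y).
            p_z[x + y] += px * py
    return dict(p_z)


# Hypothetical PMFs, for illustration only.
p_x = {1: Fraction(2, 3), 2: Fraction(1, 3)}
p_y = {0: Fraction(1, 2), 1: Fraction(1, 6), 2: Fraction(1, 3)}

p_z = convolve_pmfs(p_x, p_y)
# p_Z(3) = p_X(1) p_Y(2) + p_X(2) p_Y(1) = 2/9 + 1/18 = 5/18
print(p_z[3])
```

Exact fractions are used so that the resulting PMF sums to exactly 1, which makes hand checks easy.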

The distribution of X + Y: the continuous case

• Z = X + Y; X, Y independent, continuous

• known PDFs

Discrete case, for comparison:

$$p_Z(z) = \sum_x p_X(x)\, p_Y(z - x)$$

Continuous case:

$$f_Z(z) = \int_{-\infty}^{\infty} f_X(x)\, f_Y(z - x)\, dx$$

• Conditional on X = x: Z = x + Y, a constant plus Y. Recall that $f_{X+b}(x) = f_X(x - b)$.
  - For example, conditional on X = 3 we have Z = Y + 3, so $f_{Z|X}(z \mid 3) = f_{Y+3}(z) = f_Y(z - 3)$
  - In general, $f_{Z|X}(z \mid x) = f_Y(z - x)$

• Joint PDF of Z and X: $f_{X,Z}(x, z) = f_X(x)\, f_{Z|X}(z \mid x) = f_X(x)\, f_Y(z - x)$

• From the joint to the marginal: $f_Z(z) = \int_{-\infty}^{\infty} f_{X,Z}(x, z)\, dx$

• Same mechanics as in discrete case (flip, shift, etc.)
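The convolution integral can be approximated numerically. The sketch below uses a midpoint rule and, as an assumed example that does not appear on the slide, takes X and Y both uniform on [0, 1]; in that case the exact answer is the triangular density, with $f_Z(0.5) = 0.5$ and $f_Z(1) = 1$. The function names are introduced here for illustration.

```python
def uniform_pdf(t):
    """PDF of a Uniform(0, 1) random variable (assumed example distribution)."""
    return 1.0 if 0.0 <= t <= 1.0 else 0.0


def convolve_pdfs(f_x, f_y, z, lo, hi, n=10_000):
    """Midpoint-rule approximation of the integral of f_x(x) * f_y(z - x) over [lo, hi]."""
    dx = (hi - lo) / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * dx  # midpoint of the k-th subinterval
        total += f_x(x) * f_y(z - x)
    return total * dx


# f_X vanishes outside [0, 1], so integrating over [0, 1] is enough.
f_z_half = convolve_pdfs(uniform_pdf, uniform_pdf, 0.5, 0.0, 1.0)
f_z_one = convolve_pdfs(uniform_pdf, uniform_pdf, 1.0, 0.0, 1.0)
print(f_z_half, f_z_one)
```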

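The sum of independent normal r.v.'s

• $X \sim N(\mu_x, \sigma_x^2)$, $Y \sim N(\mu_y, \sigma_y^2)$, independent; Z = X + Y

$$f_X(x) = \frac{1}{\sqrt{2\pi}\,\sigma_x} \exp\left\{ -\frac{(x - \mu_x)^2}{2\sigma_x^2} \right\}$$

$$f_Z(z) = \int_{-\infty}^{\infty} f_X(x)\, f_Y(z - x)\, dx = \int_{-\infty}^{\infty} \frac{1}{\sqrt{2\pi}\,\sigma_x} \exp\left\{ -\frac{(x - \mu_x)^2}{2\sigma_x^2} \right\} \frac{1}{\sqrt{2\pi}\,\sigma_y} \exp\left\{ -\frac{(z - x - \mu_y)^2}{2\sigma_y^2} \right\} dx$$

(algebra)

$$f_Z(z) = \frac{1}{\sqrt{2\pi(\sigma_x^2 + \sigma_y^2)}} \exp\left\{ -\frac{(z - \mu_x - \mu_y)^2}{2(\sigma_x^2 + \sigma_y^2)} \right\}$$

• $Z \sim N(\mu_x + \mu_y,\ \sigma_x^2 + \sigma_y^2)$

• The sum of finitely many independent normals is normal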
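The "algebra" step can be sanity-checked numerically: the convolution of two normal PDFs should agree with a normal PDF whose mean and variance are the sums of the individual means and variances. The sketch below does this with a midpoint rule. The parameter values and the integration range are arbitrary assumptions made for illustration.

```python
import math


def normal_pdf(t, mu, sigma):
    """PDF of a normal random variable with mean mu and standard deviation sigma."""
    return math.exp(-((t - mu) ** 2) / (2 * sigma**2)) / (math.sqrt(2 * math.pi) * sigma)


# Hypothetical parameters, chosen only for illustration.
mu_x, sigma_x = 1.0, 2.0
mu_y, sigma_y = -0.5, 1.5


def f_z_numeric(z, lo=-40.0, hi=40.0, n=40_000):
    """Midpoint-rule approximation of the convolution integral at z."""
    dx = (hi - lo) / n
    total = 0.0
    for k in range(n):
        x = lo + (k + 0.5) * dx
        total += normal_pdf(x, mu_x, sigma_x) * normal_pdf(z - x, mu_y, sigma_y)
    return total * dx


def f_z_closed(z):
    """Normal PDF with mean mu_x + mu_y and variance sigma_x^2 + sigma_y^2."""
    return normal_pdf(z, mu_x + mu_y, math.sqrt(sigma_x**2 + sigma_y**2))


for z in (-3.0, 0.5, 4.0):
    print(z, f_z_numeric(z), f_z_closed(z))
```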

Covariance

• Zero-mean, discrete X and Y
  - if independent: E[XY] = E[X] E[Y] = 0

[Figure: two scatter plots of (x, y) samples, one with E[XY] > 0 and one with E[XY] < 0]

• Definition for general case:

$$\mathrm{cov}(X, Y) = E\big[(X - E[X]) \cdot (Y - E[Y])\big]$$

• independent $\Rightarrow$ cov(X, Y) = 0 (converse is not true)
  - if independent: $\mathrm{cov}(X, Y) = E\big[X - E[X]\big] \cdot E\big[Y - E[Y]\big] = 0$

[Figure: counterexample for the converse, with (X, Y) taking values in {(0, 1), (-1, 0), (1, 0), (0, -1)}; XY = 0 at every point, so E[XY] = 0]
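The four-point counterexample can be checked by direct enumeration. The sketch below assumes the four points (0, 1), (1, 0), (0, -1), (-1, 0) are equally likely (probability 1/4 each); that assumption is not legible in the extracted slide text. It computes the covariance exactly and then compares a joint probability with the product of the marginals.

```python
from fractions import Fraction

# Assumption: the four points are equally likely.
quarter = Fraction(1, 4)
joint = {(0, 1): quarter, (1, 0): quarter, (0, -1): quarter, (-1, 0): quarter}


def expect(g):
    """E[g(X, Y)] under the joint PMF."""
    return sum(p * g(x, y) for (x, y), p in joint.items())


e_x = expect(lambda x, y: x)
e_y = expect(lambda x, y: y)
cov_xy = expect(lambda x, y: (x - e_x) * (y - e_y))

# Independence would require P(X = 0, Y = 0) = P(X = 0) * P(Y = 0).
p_x0 = sum(p for (x, _), p in joint.items() if x == 0)
p_y0 = sum(p for (_, y), p in joint.items() if y == 0)
p_x0_y0 = joint.get((0, 0), Fraction(0))

print(cov_xy)               # covariance
print(p_x0_y0, p_x0 * p_y0)  # joint probability vs. product of marginals
```

The covariance is zero, yet the joint probability of (0, 0) is 0 while the product of the marginals is 1/4, so X and Y are uncorrelated but not independent.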

Covariance properties

$$\mathrm{cov}(X, X) = E\big[(X - E[X])^2\big] = \mathrm{var}(X) = E[X^2] - (E[X])^2$$

$$\mathrm{cov}(aX + b, Y) = a \cdot \mathrm{cov}(X, Y)$$

(assume 0 means) $E[(aX + b)Y] = a\,E[XY] + b\,E[Y] = a\,E[XY]$

$$\mathrm{cov}(X, Y + Z) = \mathrm{cov}(X, Y) + \mathrm{cov}(X, Z)$$

(assume 0 means) $E[X(Y + Z)] = E[XY] + E[XZ]$

$$\mathrm{cov}(X, Y) = E\big[(X - E[X]) \cdot (Y - E[Y])\big] = E[XY] - E\big[X\,E[Y]\big] - E\big[E[X]\,Y\big] + E[X]\,E[Y] = E[XY] - E[X]\,E[Y]$$
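These identities can be verified exactly on a small example. The sketch below uses a hypothetical joint PMF for (X, Y), a hypothetical third random variable W defined on the same outcomes (playing the role of Z in the additivity property), and arbitrary constants a and b. None of these values come from the slides.

```python
from fractions import Fraction

# Hypothetical joint PMF of (X, Y), chosen only for illustration.
joint = {
    (0, 0): Fraction(1, 4),
    (1, 0): Fraction(1, 4),
    (1, 2): Fraction(1, 3),
    (3, 1): Fraction(1, 6),
}


def expect(g):
    """E[g(X, Y)] under the joint PMF."""
    return sum(p * g(x, y) for (x, y), p in joint.items())


def cov(g, h):
    """cov(g(X, Y), h(X, Y)) computed from the definition."""
    mean_g, mean_h = expect(g), expect(h)
    return expect(lambda x, y: (g(x, y) - mean_g) * (h(x, y) - mean_h))


def X(x, y):
    return x


def Y(x, y):
    return y


def W(x, y):
    # Hypothetical third random variable on the same outcomes.
    return x - 2 * y


a, b = Fraction(-3), Fraction(5)

cov_xy = cov(X, Y)
shortcut = expect(lambda x, y: x * y) - expect(X) * expect(Y)  # E[XY] - E[X]E[Y]
cov_scaled = cov(lambda x, y: a * x + b, Y)                    # cov(aX + b, Y)
cov_added = cov(X, lambda x, y: Y(x, y) + W(x, y))             # cov(X, Y + W)
var_x = expect(lambda x, y: x * x) - expect(X) ** 2            # E[X^2] - (E[X])^2

print(cov_xy == shortcut, cov_scaled == a * cov_xy)
print(cov_added == cov_xy + cov(X, W), cov(X, X) == var_x)
```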

The variance of a sum of random variables

$$\mathrm{var}(X_1 + X_2) = E\big[(X_1 + X_2 - E[X_1 + X_2])^2\big]$$

$$= E\Big[\big((X_1 - E[X_1]) + (X_2 - E[X_2])\big)^2\Big]$$

$$= E\big[(X_1 - E[X_1])^2 + (X_2 - E[X_2])^2 + 2\,(X_1 - E[X_1])(X_2 - E[X_2])\big]$$

$$= \mathrm{var}(X_1) + \mathrm{var}(X_2) + 2\,\mathrm{cov}(X_1, X_2)$$

The variance of a sum of random variables

$$\mathrm{var}(X_1 + X_2) = \mathrm{var}(X_1) + \mathrm{var}(X_2) + 2\,\mathrm{cov}(X_1, X_2)$$

(assume 0 means)

$$\mathrm{var}(X_1 + \cdots + X_n) = E\big[(X_1 + \cdots + X_n)^2\big] = E\Big[\sum_{i=1}^{n} \sum_{j=1}^{n} X_i X_j\Big] = \sum_{i=1}^{n} E[X_i^2] + \sum_{\{(i,j):\, i \neq j\}} E[X_i X_j]$$

$$\mathrm{var}(X_1 + \cdots + X_n) = \sum_{i=1}^{n} \mathrm{var}(X_i) + \sum_{\{(i,j):\, i \neq j\}} \mathrm{cov}(X_i, X_j)$$
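The general formula can be checked against a direct computation of the variance of the sum. The sketch below uses a hypothetical joint PMF for three deliberately dependent random variables (values chosen for illustration only) and sums the covariances over all ordered pairs (i, j) with i ≠ j, as in the formula.

```python
from fractions import Fraction
from itertools import permutations

# Hypothetical joint PMF of (X1, X2, X3); the variables are deliberately dependent.
joint = {
    (0, 0, 1): Fraction(1, 4),
    (1, 0, 0): Fraction(1, 4),
    (1, 1, 0): Fraction(1, 4),
    (1, 1, 1): Fraction(1, 4),
}
n = 3


def expect(g):
    """E[g(X1, X2, X3)], where g receives the outcome as a tuple."""
    return sum(p * g(xs) for xs, p in joint.items())


def cov(i, j):
    """cov(Xi, Xj) from the definition (0-based indices)."""
    mean_i = expect(lambda xs: xs[i])
    mean_j = expect(lambda xs: xs[j])
    return expect(lambda xs: (xs[i] - mean_i) * (xs[j] - mean_j))


# Direct computation: variance of S = X1 + X2 + X3.
mean_sum = expect(sum)
var_direct = expect(lambda xs: (sum(xs) - mean_sum) ** 2)

# Formula: sum of variances plus covariances over all ordered pairs i != j.
var_formula = sum(cov(i, i) for i in range(n)) + sum(
    cov(i, j) for i, j in permutations(range(n), 2)
)

print(var_direct, var_formula)
```

Note that `permutations(range(n), 2)` produces ordered pairs, so each unordered pair is counted twice, which is where the factor of 2 in the two-variable formula comes from.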

The correlation coefficient

• Dimensionless version of covariance:

$$\rho(X, Y) = E\left[\frac{X - E[X]}{\sigma_X} \cdot \frac{Y - E[Y]}{\sigma_Y}\right] = \frac{\mathrm{cov}(X, Y)}{\sigma_X \sigma_Y}, \qquad -1 \le \rho \le 1$$

• Measure of the degree of "association" between X and Y

• Independent $\Rightarrow$ $\rho = 0$, "uncorrelated" (converse is not true)

• $\rho(X, X) = \dfrac{\mathrm{var}(X)}{\sigma_X^2} = 1$

• $|\rho| = 1 \Leftrightarrow (X - E[X]) = c\,(Y - E[Y])$ (linearly related)

• $\mathrm{cov}(aX + b, Y) = a \cdot \mathrm{cov}(X, Y) \Rightarrow \rho(aX + b, Y) = \dfrac{a \cdot \mathrm{cov}(X, Y)}{|a|\,\sigma_X \sigma_Y} = \mathrm{sign}(a) \cdot \rho(X, Y)$
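The scaling property says that a linear change of units in X leaves the correlation coefficient unchanged, up to the sign of the scale factor. The sketch below checks this on a hypothetical joint PMF with arbitrary scale and shift constants; all numbers are illustrative assumptions.

```python
import math

# Hypothetical joint PMF of (X, Y), chosen only for illustration.
joint = {(0, 0): 0.25, (1, 0): 0.25, (1, 2): 0.25, (3, 1): 0.25}


def expect(g):
    """E[g(X, Y)] under the joint PMF."""
    return sum(p * g(x, y) for (x, y), p in joint.items())


def corr(g, h):
    """Correlation coefficient of g(X, Y) and h(X, Y): cov / (sigma_g * sigma_h)."""
    mean_g, mean_h = expect(g), expect(h)
    cov = expect(lambda x, y: (g(x, y) - mean_g) * (h(x, y) - mean_h))
    var_g = expect(lambda x, y: (g(x, y) - mean_g) ** 2)
    var_h = expect(lambda x, y: (h(x, y) - mean_h) ** 2)
    return cov / math.sqrt(var_g * var_h)


def X(x, y):
    return x


def Y(x, y):
    return y


rho = corr(X, Y)
rho_pos = corr(lambda x, y: 2 * x + 7, Y)   # a = 2 > 0: same correlation
rho_neg = corr(lambda x, y: -3 * x + 1, Y)  # a = -3 < 0: sign flips
rho_self = corr(X, X)                       # correlation of X with itself

print(rho, rho_pos, rho_neg, rho_self)
```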

Proof of key properties of the correlation coefficient

$$\rho(X, Y) = E\left[\frac{X - E[X]}{\sigma_X} \cdot \frac{Y - E[Y]}{\sigma_Y}\right], \qquad -1 \le \rho \le 1$$

• Assume, for simplicity, zero means and unit variances, so that $\rho(X, Y) = E[XY]$

$$E\big[(X - \rho Y)^2\big] = E[X^2] - 2\rho\,E[XY] + \rho^2 E[Y^2] = 1 - 2\rho^2 + \rho^2 = 1 - \rho^2$$

$$0 \le 1 - \rho^2 \Rightarrow \rho^2 \le 1$$

• If $|\rho| = 1$, then $E\big[(X - \rho Y)^2\big] = 0$, so $X = \rho Y$; that is, X = Y or X = -Y

Interpreting the correlation coefficient

$$\rho(X, Y) = \frac{\mathrm{cov}(X, Y)}{\sigma_X \sigma_Y}$$

• Association does not imply causation or influence
  - X: math aptitude
  - Y: musical ability

• Correlation often reflects underlying, common, hidden factor
  - Assume Z, V, W are independent
  - X = Z + V, Y = Z + W
  - Assume, for simplicity, that Z, V, W have zero means, unit variances

$$\mathrm{var}(X) = \mathrm{var}(Z) + \mathrm{var}(V) = 2 \Rightarrow \sigma_X = \sqrt{2}, \qquad \sigma_Y = \sqrt{2}$$

$$\mathrm{cov}(X, Y) = E\big[(Z + V)(Z + W)\big] = E[Z^2] + E[VZ] + E[ZW] + E[VW] = 1 + 0 + 0 + 0 = 1$$

$$\rho(X, Y) = \frac{1}{\sqrt{2}\,\sqrt{2}} = \frac{1}{2}$$
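The common-factor calculation can be reproduced by exact enumeration. The sketch below assumes, purely for illustration, that Z, V, and W are independent and each equals -1 or +1 with probability 1/2; this is one convenient choice with zero mean and unit variance, and the slide does not specify a distribution.

```python
import math
from fractions import Fraction
from itertools import product

# Assumption: Z, V, W are independent, each -1 or +1 with probability 1/2
# (zero mean, unit variance). All 8 outcomes are then equally likely.
outcomes = list(product((-1, 1), repeat=3))
prob = Fraction(1, len(outcomes))


def expect(g):
    """E[g(Z, V, W)] by enumerating the equally likely outcomes."""
    return sum(prob * g(z, v, w) for z, v, w in outcomes)


def x_of(z, v, w):
    return z + v  # X = Z + V


def y_of(z, v, w):
    return z + w  # Y = Z + W


# X and Y have zero mean, so var = E[X^2] and cov = E[XY].
var_x = expect(lambda z, v, w: x_of(z, v, w) ** 2)
var_y = expect(lambda z, v, w: y_of(z, v, w) ** 2)
cov_xy = expect(lambda z, v, w: x_of(z, v, w) * y_of(z, v, w))
rho = float(cov_xy) / math.sqrt(float(var_x * var_y))

print(var_x, var_y, cov_xy, rho)
```

Here V and W never influence each other, yet X and Y are correlated because both contain the shared factor Z.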

Correlations matter...

• A real-estate investment company invests $10M in each of 10 states. At each state i, the return on its investment is a random variable $X_i$, with mean 1 and standard deviation 1.3 (in millions).

$$\mathrm{var}(X_1 + \cdots + X_{10}) = \sum_{i=1}^{10} \mathrm{var}(X_i) + \sum_{\{(i,j):\, i \neq j\}} \mathrm{cov}(X_i, X_j)$$

$$E[X_1 + \cdots + X_{10}] = 10$$

• If the $X_i$ are uncorrelated, then:

$$\mathrm{var}(X_1 + \cdots + X_{10}) = 10 \cdot (1.3)^2 = 16.9, \qquad \sigma(X_1 + \cdots + X_{10}) = 4.1$$

• If for $i \neq j$, $\rho(X_i, X_j) = 0.9$:

$$\mathrm{cov}(X_i, X_j) = \rho\,\sigma_{X_i} \sigma_{X_j} = 0.9 \cdot 1.3 \cdot 1.3 = 1.52$$

$$\mathrm{var}(X_1 + \cdots + X_{10}) = 10 \cdot (1.3)^2 + 90 \cdot 1.52 = 153.7, \qquad \sigma(X_1 + \cdots + X_{10}) = 12.4$$
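The arithmetic for the investment example can be reproduced in a few lines. The sketch below applies the variance-of-a-sum formula with 10 variables of standard deviation 1.3 and a common pairwise correlation; `portfolio_variance_and_std` is a helper name introduced here. Because it does not round the covariance before multiplying by 90, the result can differ slightly in the last digit from a hand calculation that rounds intermediate values.

```python
import math

n = 10       # number of states
sigma = 1.3  # standard deviation of each return (in millions)


def portfolio_variance_and_std(rho):
    """Variance and standard deviation of X_1 + ... + X_n when every pair has correlation rho."""
    cov = rho * sigma * sigma  # cov(X_i, X_j) = rho * sigma_i * sigma_j
    # n variance terms plus n * (n - 1) ordered pairs (i, j) with i != j.
    variance = n * sigma**2 + n * (n - 1) * cov
    return variance, math.sqrt(variance)


var_uncorrelated, std_uncorrelated = portfolio_variance_and_std(0.0)
var_correlated, std_correlated = portfolio_variance_and_std(0.9)

print(round(var_uncorrelated, 2), round(std_uncorrelated, 2))
print(round(var_correlated, 2), round(std_correlated, 2))
```

With the same expected total return of 10, the standard deviation roughly triples when the returns are strongly correlated, which is the point of the example.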

MIT OpenCourseWare

https://ocw.mit.edu

Resource: Introduction to Probability

John Tsitsiklis and Patrick Jaillet

The following may not correspond to a particular course on MIT OpenCourseWare, but has been provided by the author as an individual learning resource.

For information about citing these materials or our Terms of Use, visit: https://ocw.mit.edu/terms.