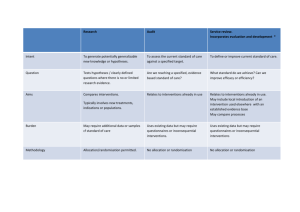

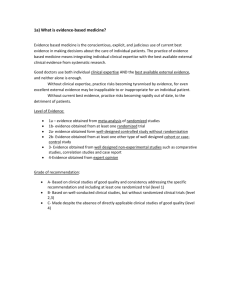

Test, Learn, Adapt: Developing Public Policy with Randomised Controlled Trials

Laura Haynes
