Mixed methods in health services research: Pitfalls and pragmatism


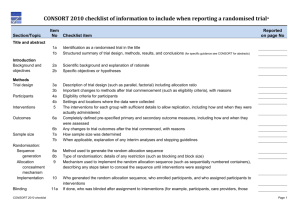

Robyn McDermott
Mixed Methods Seminar, JCU, 16-17 October 2014

What’s special about health services (and much public health) research?

• Interventions are complex
• Settings are complex
• Standard control groups may not be feasible/ethical/acceptable to services and/or communities
• “Contamination” is a problem
• Unmeasured bias/confounding
• Secular behaviour and policy change over time can be strong, sudden and unpredictable
• Context is very important but often poorly described
• Example of the DCP

“Improving reporting quality” checklists

• CONSORT: RCTs, with updates for cluster RCTs
• TREND: Transparent Reporting of Evaluations with Non-randomised Designs (focused on HIV studies initially)
• PRISMA: Reporting systematic reviews of RCTs
• STROBE: Reporting of observational studies
• MOOSE: Reporting systematic reviews of observational studies

Complex interventions

• Review of RCTs reported over a decade
• Less than 50% had sufficient detail of the intervention to enable replication (Glasziou, 2008)
• Even fewer had a theoretical framework or logic model
• Systematic reviews of complex interventions often find small if any effects, or contradictory findings. This may be due to conflating studies without taking account of the underlying theory for the intervention (eg Segal, 2012: Early childhood interventions)

TREND has a 22-item checklist

http://www.cdc.gov/trendstatement/pdf/trendstatement_trend_checklist.pdf

Item 4: Details of the interventions intended for each study condition and how and when they were actually administered, specifically including:

• Content: what was given?
• Delivery method: how was the content given?
• Unit of delivery: how were the subjects grouped during delivery?
• Deliverer: who delivered the intervention?
• Setting: where was the intervention delivered?
• Exposure quantity and duration: how many sessions or episodes or events were intended to be delivered? How long were they intended to last?
• Time span: how long was it intended to take to deliver the intervention to each unit?
• Activities to increase compliance or adherence (e.g., incentives)

Suggestions for improvements to TREND and CONSORT

Armstrong et al, J Public Health, 2008

• Introduction: Intervention model and theory
• Methods: Justify study design choice (eg compromise between internal validity and complexity and constraints of the setting)
• Results: Integrity (or fidelity) of the intervention
• Context, differential effects and multi-level processes
• Sustainability: For public health interventions, beyond the life of the trial

Theoretical framework and logic model for an intervention effect

(Should be in the introduction; example from the Diabetes Care Project (DCP), 2012-14)

Study design choice (methods section)

• Strengths and weaknesses of the chosen study design
• Operationalization of the design, including:
– Group allocation
– Choice of counterfactual
– Choice of outcome measures
– Measurement methods

Implementation fidelity

• Part of process evaluation
• Information on the intensity, duration and reach of the intervention components
• If and how these varied by subgroup (and how to interpret this)

Effectiveness will vary by context

Context elements can include:

• Host organization and staff
• System effects (eg funding model, use of IT, chronic care model for service delivery)
• Target population

Multilevel processes

Informed by the theoretical model used, eg Ottawa Charter framework for prevention effectiveness studies. May involve analysis of:

• Individual level data
• Community level data
• Jurisdictional level data
• Country level data

Differential effects and sub-group analysis

• Counter to the RCT orthodoxy of effectiveness trials, there may be value in looking at differential effects by SES, gender, ethnicity, geography, service model (eg CCHS)
• Even when there is insufficient statistical power in individual studies
• Potential advantage is the possibility of a pooled analysis of studies, eg by SES impact

Sustainability

• Beyond the life of the trial (follow-up typically very short)
• Important for policy
• But not for your journal publication
• Sustainability research may require separate study design and conduct

And finally…

How do you put all this together and stay within journal word limits?

• For briefings
• For journals
• For reports which will realistically get read?
