Automated Gene Classification using Nonnegative Matrix Factorization on Biomedical Literature

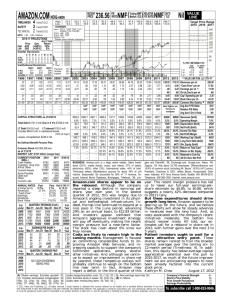
