Recognizing Facial Expressions by Tracking Shapes


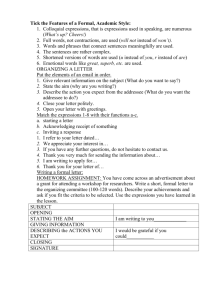

Atul Kanaujia, Rutgers University, USA
Dimitris Metaxas, Rutgers University, USA

Understanding Non-verbal Communication: Facial Expression Recognition
• Six universal facial expressions: Anger, Joy, Disgust, Surprise, Sadness and Fear.
• Detecting deception by recognizing the emotional state during interrogation, e.g. criminal investigations, interviews.

Recognizing Expressions by Tracking Facial Features
• Extend Active Shape Models to handle localized shape deformation.
  – Expressions and AU detection
• Track facial features across long image sequences.
• Use Conditional Random Fields to label sequences of tracked shapes as expressions.
• Recognize the six universal facial expressions.

Detecting and Tracking Feature Shapes

Why Active Shape Models?

Active Shape Models (ASM)
+ Larger capture range compared to AAM (search along profile)
+ More robust to textured images
+ Less affected by illumination variations
- Takes longer to converge
- Does not use grey-level information
- Needs finely located landmarks

Active Appearance Models (AAM)
+ Fewer landmarks needed
+ Faster convergence
- Strongly influenced by changes in appearance and illumination
- Poor performance with textured backgrounds

Courtesy: Tim Cootes et al., Comparing Active Shape Models with Active Appearance Models

Shape Subspace for Localized Deformation
• Accurate tracking of facial expressions largely depends on the characteristics of the shape basis vectors.
• Localized representations: Local Feature Analysis (LFA), Local ICA, Non-negative Matrix Factorization (NMF).
• NMF factorizes the observed shapes into an approximate non-negative linear combination of basis vectors.
$$X \approx WH, \qquad W \ge 0,\; H \ge 0$$

where the columns of $X$ are the observed shapes, the columns of $W$ are the basis vectors and $H$ holds the non-negative coefficients.

• KL divergence:

$$D(X \,\|\, WH) = \sum_{i,j} \left( X_{ij} \log \frac{X_{ij}}{(WH)_{ij}} - X_{ij} + (WH)_{ij} \right)$$

Local Non-negative Matrix Factorization
• Localization of the NMF basis representation can be improved by adding sparsity, orthogonality and expressiveness constraints to the KL divergence cost function [1].
• Shapes are regularized using ridge regression during ASM search. The regularization factor is chosen empirically.

Effect of Varying Control Parameters
[Figure: localized NMF basis vs. holistic PCA basis]

[1] Stan Z. Li, X. W. Hou, and H. Zhang, Learning Spatially Localized, Parts-Based Representation.

Local NMF Basis (Li et al.)

$$D(X \,\|\, WH) + \alpha \sum_{i,j} W_i^T W_j - \mu \sum_{i} H_i H_i^T$$

where $W_i$ is the $i$-th basis vector (column of $W$) and $H_i$ is the $i$-th row of $H$.

NMF vs PCA (Qualitative Comparison)
[Figure: NMF basis vs. PCA basis]

NMF vs PCA (Quantitative Comparison)
Prediction accuracy on the Cohn-Kanade database of facial expressions.
• The number of PCA vectors ranged from 35 to 45 (capturing 98% of the variance).
• Trained on specific emotions.
• NMF with 40 basis vectors gave consistently better results.

Tracking the Features
• Active Shape Model search cannot be used at every frame.
• Tracking using the KLT point tracker
  – Distorts the facial feature shapes
• Constrain the shape to lie within the Local NMF subspace at every frame.

Constrained Shape Tracker Results
[Figure: tracking results]

Expression Recognition

Conditional Model for Labeling Sequences
• Conditional Random Fields (CRFs) provide a framework for probabilistically labeling sequences, given an observation sequence.
• Unlike generative models such as HMMs, a CRF learns the conditional P(Labels | Observations) but does not explicitly model the marginal P(Observations).
• CRFs do not suffer from the label bias problem, because they are globally normalized.
• A wide array of overlapping features can be included.

Conditional Random Fields – Linear Chain Model
• Graph: labels, cliques, observations.
• The form of the conditional distribution follows from the Hammersley-Clifford theorem.
• Label–label features: indicator functions.
• Label–observation features: Local NMF basis coefficients relative to the neutral face.
• Normalization: observation-dependent partition function.

Experiments

Experimental Setup
• The Active Shape Model based on Local NMF was trained on 400 face images obtained from a variety of sources.
• We use Local NMF encodings (40 basis vectors) as the 2D features.
• Seven sequence labels: Neutral, Anger, Disgust, Fear, Joy, Sadness and Surprise.
• All sequences began from neutral and were randomly concatenated to allow transitions between any two states.
• The model was trained on the Cohn-Kanade database
  – Trained on 150 sequences, 25 for each expression
  – Trained shapes on 30 subjects
• Testing was done on 120 sequences, 20 for each expression.

Results – Surprise
Overall frame recognition rate on 20 test sequences: SURPRISE – 95.2%

Results – Fear
Overall frame recognition rate on 20 test sequences: FEAR – 94.8%

Results – Sad
Overall frame recognition rate on 20 test sequences: SAD – 92.1%

Results – Joy
Overall frame recognition rate on 20 test sequences: JOY – 94.8%

Results – Anger
Overall frame recognition rate on 20 test sequences: ANGER – 74.7%

Results – Disgust
Overall frame recognition rate on 20 test sequences: DISGUST – 84.2%

Multiple Expressions – Surprise, Joy and Fear
• Recognizing multiple expressions: Surprise, Joy and Fear.
• Due to small shape changes for the “Anger” expression, the “Neutral” state tends to be confused with “Anger”.

Conclusion
• An expression recognition framework based on tracking feature shapes is proposed.
• The tracking of shapes is improved by using Local NMF instead of PCA.
• For expressions with large shape changes, the recognition rates are ~93%.
• Subtle expressions like Disgust and Anger have lower recognition rates.
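
Sketch: NMF with the KL divergence cost

The shape subspace in these slides rests on factorizing non-negative shape data under a KL divergence cost. As a rough illustration only, the sketch below runs plain NMF with the well-known multiplicative update rules for that cost. It is not the Local NMF variant used in the slides: the extra localization penalty terms are omitted. The function names (`kl_divergence`, `nmf_kl`), the random stand-in data and all parameter values are assumptions made for this example, not taken from the presentation.

```python
import numpy as np


def kl_divergence(X, WH, eps=1e-12):
    """Generalized KL divergence D(X || WH), the NMF cost being minimized."""
    return float(np.sum(X * np.log((X + eps) / (WH + eps)) - X + WH))


def nmf_kl(X, rank, n_iter=200, seed=0, eps=1e-12):
    """Plain NMF (no localization penalties) via multiplicative updates.

    X    : non-negative array, one shape vector per column (assumed layout).
    rank : number of basis vectors (the slides use 40; any value works here).
    Returns W (basis, one vector per column) and H (coefficients).
    """
    rng = np.random.default_rng(seed)
    n, m = X.shape
    W = rng.random((n, rank)) + eps
    H = rng.random((rank, m)) + eps
    for _ in range(n_iter):
        # Update coefficients: H <- H * (W^T (X / WH)) / (column sums of W)
        WH = W @ H + eps
        H *= (W.T @ (X / WH)) / (W.sum(axis=0)[:, None] + eps)
        # Update basis: W <- W * ((X / WH) H^T) / (row sums of H)
        WH = W @ H + eps
        W *= ((X / WH) @ H.T) / (H.sum(axis=1)[None, :] + eps)
    return W, H


if __name__ == "__main__":
    # Hypothetical stand-in for landmark data: 20 coordinates x 30 shapes.
    X = np.random.default_rng(1).random((20, 30))
    W, H = nmf_kl(X, rank=5)
    print("KL divergence after fitting:", kl_divergence(X, W @ H))
```

Because the updates only multiply by non-negative ratios, W and H stay non-negative throughout, which is what gives NMF its additive, parts-based character. Image landmark coordinates are non-negative, so shape vectors can be used as input in this form.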