Chapter 3: Single Machine Models


IOE/MFG 543


(Sections 3.3-3.5)


Section 3.3: Number of tardy jobs $1||\sum U_j$

Number of tardy jobs
– Often used as a benchmark by managers (or, equivalently, the percentage of on-time jobs)
– Drawback: once a job is tardy, it may have to wait a very long time


Characterization of an optimal schedule for $1||\sum U_j$

Split the jobs into two sets:
– $J$ = jobs that are completed on time
– $J^d$ = jobs that are tardy
The jobs in $J$ are scheduled first, according to the EDD rule.
The jobs in $J^d$ follow; the order in which they are completed is immaterial (they are already tardy).


Algorithm 3.3.1 for $1||\sum U_j$

1. Set $J = \emptyset$, $J^d = \emptyset$, and $J^c = \{1,\dots,n\}$ (the set of jobs not yet considered for scheduling).
2. Determine the job $j^*$ in $J^c$ with the earliest due date, i.e., $d_{j^*} = \min\{d_j : j \in J^c\}$. Add $j^*$ to $J$, delete $j^*$ from $J^c$, and go to 3.
3. If the due date of job $j^*$ is met, i.e., if $\sum_{j \in J} p_j \le d_{j^*}$, go to 4. Otherwise, let $k^*$ be the job in $J$ with the longest processing time, i.e., $p_{k^*} = \max_{j \in J}\{p_j\}$; delete $k^*$ from $J$ and add $k^*$ to $J^d$.
4. If $J^c = \emptyset$, STOP; otherwise go to 2.


Computational complexity of Algorithm 3.3.1: $O(n \log n)$
– if it is implemented efficiently, e.g., by keeping the jobs of $J$ in a priority queue keyed on processing time

Theorem 3.3.2
– Algorithm 3.3.1 yields an optimal schedule for $1||\sum U_j$
– Proof: by induction
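The steps of Algorithm 3.3.1 (often called the Moore-Hodgson algorithm) can be sketched in Python as follows. This is a minimal sketch, not a reference implementation: the function name `min_tardy_jobs` and the 0-based input lists are assumptions. Keeping $J$ in a heap keyed on $-p_j$ turns "the longest job in $J$" of step 3 into a logarithmic-time pop, so after the EDD sort the whole run costs $O(n \log n)$.

```python
import heapq

def min_tardy_jobs(p, d):
    """Sketch of Algorithm 3.3.1 for 1||sum U_j.

    p, d: processing times and due dates (0-based lists).
    Returns (on_time, tardy) as 1-based job numbers; processing the on-time
    jobs in EDD order, followed by the tardy jobs in any order, is optimal.
    """
    heap = []      # set J as a max-heap on p_j, stored as (-p_j, j)
    load = 0       # sum of p_j over the jobs currently in J
    tardy = []     # set J^d
    for j in sorted(range(len(p)), key=lambda i: d[i]):  # step 2: EDD order
        heapq.heappush(heap, (-p[j], j))
        load += p[j]
        if load > d[j]:                         # step 3: due date of j* is missed
            neg_pk, k = heapq.heappop(heap)     # k* = longest job in J
            load += neg_pk                      # i.e., subtract p_{k*}
            tardy.append(k)
    on_time = sorted((j for _, j in heap), key=lambda i: d[i])
    return [j + 1 for j in on_time], [k + 1 for k in tardy]

print(min_tardy_jobs([7, 8, 4, 6, 6], [9, 17, 18, 19, 21]))
# → ([3, 4, 5], [2, 1])
```

On the data of Example 3.3.3 the sketch first removes job 2 (when job 3 would be late) and then job 1 (when job 5 would be late).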


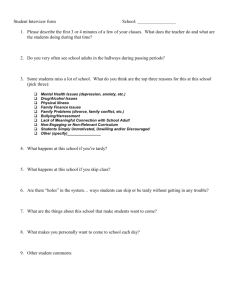

Example 3.3.3

Use Algorithm 3.3.1 to determine the schedule that minimizes $\sum U_j$.
– How many jobs are tardy?

| job $j$ | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| $p_j$ | 7 | 8 | 4 | 6 | 6 |
| $d_j$ | 9 | 17 | 18 | 19 | 21 |
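As a sanity check on Theorem 3.3.2 for this small instance, one can enumerate all $5! = 120$ sequences. The sketch below (variable and function names are illustrative) computes the minimum number of tardy jobs by brute force; this is only practical for very small $n$.

```python
from itertools import permutations

p = [7, 8, 4, 6, 6]      # Example 3.3.3: jobs 1..5 stored at indices 0..4
d = [9, 17, 18, 19, 21]

def num_tardy(seq):
    """Number of tardy jobs when the jobs are processed in the order seq."""
    t, count = 0, 0
    for j in seq:
        t += p[j]
        count += t > d[j]    # True counts as 1
    return count

best = min(num_tardy(s) for s in permutations(range(len(p))))
print(best)   # → 2
```

Any four jobs have a total processing time of at least 23, which exceeds the largest due date of 21, so at least two jobs must be tardy; the enumeration agrees.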


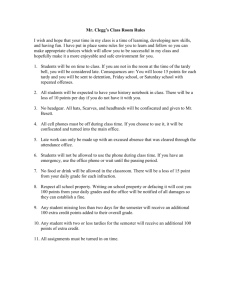

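Total weighted number of tardy jobs $1||\sum w_jU_j$

The problem is NP-hard
– No polynomial-time algorithm is known (none exists unless P = NP)
If all jobs have the same due date
– i.e., $d_j = d$ for all jobs
– The problem reduces to a knapsack problem ⇒ it can be solved in pseudopolynomial time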
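When $d_j = d$ for all jobs, any set of jobs with total processing time at most $d$ can be completed on time by processing it first, so minimizing $\sum w_jU_j$ amounts to choosing an on-time set of maximum total weight: a 0/1 knapsack with capacity $d$. A minimal Python sketch, assuming integer processing times and due date (the function name is hypothetical), runs in $O(nd)$ time, which is pseudopolynomial:

```python
def min_weighted_tardy_common_due(p, w, d):
    """1|d_j = d|sum w_j U_j solved as a 0/1 knapsack with capacity d.

    best[c] = largest total weight of a job set with total processing time <= c;
    such a set can be completed on time by scheduling it first.
    """
    best = [0] * (d + 1)
    for pj, wj in zip(p, w):
        for c in range(d, pj - 1, -1):     # downward, so each job is used at most once
            best[c] = max(best[c], best[c - pj] + wj)
    return sum(w) - best[d]                # total weight of the jobs left tardy

print(min_weighted_tardy_common_due([11, 9, 90], [12, 9, 89], 100))   # → 12
```

The data of Example 3.3.4 have a common due date of 100, so they fit this special case: the best on-time set is {2, 3}, and only job 1 (weight 12) is tardy.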

Why not use WSPT?
– Example 3.3.4:

| job $j$ | 1 | 2 | 3 |
|---|---|---|---|
| $p_j$ | 11 | 9 | 90 |
| $w_j$ | 12 | 9 | 89 |
| $d_j$ | 100 | 100 | 100 |

What happens when WSPT is used?
What about the sequence 2-3-1?
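The two questions can be checked numerically. The short Python sketch below (names are illustrative) evaluates $\sum w_jU_j$ for the WSPT sequence and for 2-3-1 on the data of Example 3.3.4:

```python
p = {1: 11, 2: 9, 3: 90}      # Example 3.3.4
w = {1: 12, 2: 9, 3: 89}
d = {1: 100, 2: 100, 3: 100}

def weighted_tardy(seq):
    """sum of w_j U_j when the jobs are processed in the order seq."""
    t, total = 0, 0
    for j in seq:
        t += p[j]
        if t > d[j]:
            total += w[j]
    return total

wspt = sorted(p, key=lambda j: w[j] / p[j], reverse=True)   # largest w_j/p_j first
print(wspt, weighted_tardy(wspt))    # → [1, 2, 3] 89
print(weighted_tardy([2, 3, 1]))     # → 12
```

WSPT orders the jobs by $w_j/p_j$ (about 1.09, 1.00 and 0.99), so the long job 3 goes last, completes at 110 and costs $w_3 = 89$; the sequence 2-3-1 leaves only job 1 tardy, at a cost of 12.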


Section 3.4: The total tardiness $1||\sum T_j$

A more practical performance measure than the number of tardy jobs
– For instance, the tardy jobs may be scheduled so as to minimize their total tardiness

The problem is NP-hard in the ordinary sense
– A pseudopolynomial dynamic programming algorithm exists


Dynamic programming

See Appendix B

Dynamic programming is an efficient sequential method for total enumeration

For $1||\sum T_j$ we can use Lemmas 3.4.1 and 3.4.3 to eliminate a number of schedules from consideration


Lemma 3.4.1

If $p_j \le p_k$ and $d_j \le d_k$, then there exists an optimal sequence in which job $j$ is scheduled before job $k$

Proof: The result is fairly intuitive, so we omit the proof (hint: a pairwise interchange argument; good exercise!)

Consequence:
– We can eliminate from consideration all sequences in which such a job $k$ precedes job $j$
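For a concrete instance, the pairs of jobs covered by Lemma 3.4.1 can be listed mechanically. The sketch below (a hypothetical helper, shown on the data of Example 3.4.5) returns every ordered pair $(j,k)$ with $p_j \le p_k$ and $d_j \le d_k$; for two identical jobs it keeps only one orientation, an assumed tie rule so that the listed pairs never contradict each other.

```python
def dominance_pairs(p, d):
    """Ordered pairs (j, k), 1-based, for which Lemma 3.4.1 lets us put j before k."""
    n = len(p)
    pairs = []
    for j in range(n):
        for k in range(n):
            if j == k or p[j] > p[k] or d[j] > d[k]:
                continue
            if (p[j], d[j]) == (p[k], d[k]) and j > k:
                continue    # identical jobs: keep a single orientation (assumed tie rule)
            pairs.append((j + 1, k + 1))
    return pairs

print(dominance_pairs([121, 79, 147, 83, 130], [260, 266, 266, 336, 337]))
# → [(1, 3), (1, 5), (2, 3), (2, 4), (2, 5), (4, 5)]
```

For example, the lemma lets us place job 3 (the longest job) after jobs 1 and 2 without losing optimality.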


Lemma 3.4.2

For some job $k$, let $C'_k$ be the latest possible completion time of job $k$ in any optimal sequence $S'$

Consider two sets of due dates: $d_1,\dots,d_n$ and $d_1,\dots,d_{k-1},\max(d_k,C'_k),d_{k+1},\dots,d_n$
– $S'$ is optimal for the first set and $S''$ is optimal for the second set

Lemma: Any sequence that is optimal for the second set of due dates is optimal for the first set as well
– Proof: skipped


Enumerate the jobs by increasing due dates

Assume $d_1 \le d_2 \le \dots \le d_n$

Let job $k$ be such that $p_k = \max(p_1,\dots,p_n)$
– Lemma 3.4.1 ⇒ there is an optimal sequence in which jobs $1,\dots,k-1$ are scheduled before job $k$
– Each of the $n-k$ jobs $k+1,\dots,n$ may be scheduled either before or after job $k$


Lemma 3.4.3

There exists an integer $\delta$, $0 \le \delta \le n-k$, such that there is an optimal sequence $S$ in which job $k$ is preceded by all jobs $j \ne k$ with $j \le k+\delta$ and followed by all jobs $j$ with $j > k+\delta$
– Effectively reduces the number of schedules to be considered
– Proof: skipped


Consequences of Lemma 3.4.3

There is an optimal sequence that processes the jobs in this order:
1. jobs $1,2,\dots,k-1,k+1,\dots,k+\delta$ in some order
2. job $k$
3. jobs $k+\delta+1,k+\delta+2,\dots,n$ in some order

How do we determine this sequence?
– Algorithm 3.4.4


Notation for Algorithm 3.4.4

$C_k(\delta) = \sum_{j \le k+\delta} p_j$ is the completion time of job $k$ (for the full job set, starting at time 0)

$J(j,l,k)$ is the set of jobs in $\{j,j+1,\dots,l\}$ that have a processing time less than or equal to that of job $k$, excluding job $k$ itself

$V(J(j,l,k),t)$ is the total tardiness of the jobs in $J(j,l,k)$ under an optimal sequence, assuming that this set starts processing at time $t$

$k'$ is the job in $J(j,l,k)$ with the largest processing time, i.e., $p_{k'} = \max\{p_j : j \in J(j,l,k)\}$
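The notation can be made concrete on the data of Example 3.4.5, where the jobs are already numbered by increasing due date and job 3 has the largest processing time (so $k = 3$ and $0 \le \delta \le 2$). The Python helpers below use hypothetical names that mirror $J(j,l,k)$ and $C_k(\delta)$:

```python
# Example 3.4.5 data; jobs are already numbered by increasing due date
p = {1: 121, 2: 79, 3: 147, 4: 83, 5: 130}

def J(j, l, k):
    """J(j,l,k): jobs in {j,...,l} with p_i <= p_k, excluding job k itself."""
    return {i for i in range(j, l + 1) if i != k and p[i] <= p[k]}

def C(k, delta):
    """C_k(delta): sum of p_j over j <= k + delta (full job set started at t = 0)."""
    return sum(p[j] for j in range(1, k + delta + 1))

print(sorted(J(1, 5, 3)))                    # → [1, 2, 4, 5]
print(sorted(J(1, 5, 5)))                    # → [1, 2, 4]
print([C(3, delta) for delta in range(3)])   # → [347, 430, 560]
```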


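Algorithm 3.4.4 for $1||\sum T_j$

Initial conditions
$V(\emptyset,t) = 0$
$V(\{j\},t) = \max(0,\ t+p_j-d_j)$

Recursive relation
$V(J(j,l,k),t) = \min_{0 \le \delta \le l-k'}\big\{V(J(j,k'+\delta,k'),t) + \max(0,\ C_{k'}(\delta)-d_{k'}) + V(J(k'+\delta+1,l,k'),C_{k'}(\delta))\big\}$
where, within the recursion, $C_{k'}(\delta) = t + \sum_{i \in J(j,k'+\delta,k')} p_i + p_{k'}$ is the completion time of job $k'$

Optimal value function:
$V(\{1,\dots,n\},0)$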
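A minimal Python sketch of the recursion, under stated assumptions: each subproblem is identified by the explicit, EDD-ordered tuple of its jobs rather than by the index triple $(j,l,k)$; ties in due dates are broken by processing time, and ties in processing time toward the later job in EDD order; `min_total_tardiness` is a hypothetical name. Memoization with `functools.lru_cache` plays the role of the table of values $V(\cdot,t)$.

```python
from functools import lru_cache

def min_total_tardiness(p, d):
    """Sketch of Algorithm 3.4.4 for 1||sum T_j. Returns (value, 1-based sequence)."""
    # EDD numbering (ties in d broken by shorter processing time: an assumption)
    order = sorted(range(len(p)), key=lambda j: (d[j], p[j]))
    P = [p[j] for j in order]
    D = [d[j] for j in order]

    @lru_cache(maxsize=None)
    def V(jobs, t):
        """Optimal (tardiness, sequence) for the job tuple `jobs` started at time t."""
        if not jobs:
            return 0, ()                                 # V(empty set, t) = 0
        # k' = job with the largest processing time (ties: latest in EDD order)
        pos = max(range(len(jobs)), key=lambda i: (P[jobs[i]], i))
        kp = jobs[pos]
        before, after = jobs[:pos], jobs[pos + 1:]       # earlier jobs precede k' (Lemma 3.4.1)
        best = None
        for delta in range(len(after) + 1):
            first = before + after[:delta]               # jobs processed before k'
            c_kp = t + sum(P[j] for j in first) + P[kp]  # C_{k'}(delta)
            v1, s1 = V(first, t)
            v3, s3 = V(after[delta:], c_kp)
            total = v1 + max(0, c_kp - D[kp]) + v3
            if best is None or total < best[0]:
                best = (total, s1 + (kp,) + s3)
        return best

    value, seq = V(tuple(range(len(p))), 0)
    return value, [order[j] + 1 for j in seq]

print(min_total_tardiness([121, 79, 147, 83, 130], [260, 266, 266, 336, 337]))
# → (370, [1, 2, 4, 5, 3])
```

On the data of Example 3.4.5 the sketch returns a total tardiness of 370 with sequence 1, 2, 4, 5, 3; swapping the first two jobs (2, 1, 4, 5, 3) gives the same total tardiness.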


The complexity of Algorithm 3.4.4

At most $n^3$ subsets $J(j,l,k)$
At most $\sum p_j$ time points $t$
⇒ at most $n^3 \sum p_j$ recursive equations
Each equation requires at most $O(n)$ operations
Overall complexity is $O(n^4 \sum p_j)$ ⇒ pseudopolynomial


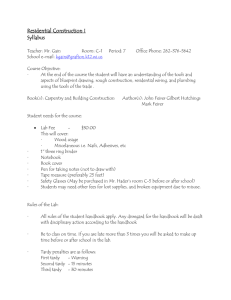

Example 3.4.5

Given the following data, use Algorithm 3.4.4 to solve the problem $1||\sum T_j$

| job $j$ | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| $p_j$ | 121 | 79 | 147 | 83 | 130 |
| $d_j$ | 260 | 266 | 266 | 336 | 337 |


Section 3.5: The total weighted tardiness $1||\sum w_jT_j$

Theorem 3.5.2: The problem $1||\sum w_jT_j$ is strongly NP-hard
– 3-PARTITION reduces to $1||\sum w_jT_j$

A dominance result exists. Lemma 3.5.1:
– If there are two jobs $j$ and $k$ with $d_j \le d_k$, $p_j \le p_k$ and $w_j \ge w_k$, then there is an optimal sequence in which job $j$ appears before job $k$

Branch and bound for $1||\sum w_jT_j$
– Branch: start by scheduling the last jobs (work backward from the end of the schedule)
– Bound: solve a transportation problem
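The branching scheme above can be sketched as a depth-first search that fixes jobs from the end of the schedule, so that the completion time of every fixed job is known. In this minimal Python sketch the bound is deliberately simpler than the transportation-problem bound of the slides: it is just the weighted tardiness of the already fixed tail, which is a valid lower bound because the unscheduled jobs can only add nonnegative terms. The function name and the three-job instance are hypothetical (the slides give no data for this section).

```python
def bnb_weighted_tardiness(p, w, d):
    """Backward branch and bound for 1||sum w_j T_j. Returns (value, 1-based sequence).

    Branching fixes jobs from the END of the schedule, so each fixed job's
    completion time is known. Bound (a simple stand-in, NOT the transportation
    problem bound): the weighted tardiness of the fixed tail.
    """
    best = [float("inf"), []]        # incumbent value and sequence

    def branch(remaining, tail, cost, end):
        # `end` = completion time of whichever job is placed directly before `tail`
        if cost >= best[0]:
            return                   # prune: this partial schedule cannot beat the incumbent
        if not remaining:
            best[0], best[1] = cost, tail
            return
        for j in sorted(remaining):
            branch(remaining - {j}, [j] + tail,
                   cost + w[j] * max(0, end - d[j]), end - p[j])

    branch(frozenset(range(len(p))), [], 0, sum(p))
    return best[0], [j + 1 for j in best[1]]

print(bnb_weighted_tardiness([3, 2, 4], [1, 2, 1], [3, 2, 5]))   # → (6, [2, 1, 3])
```

A stronger bound, or pruning with the dominance rule of Lemma 3.5.1, would cut the search tree further; this sketch only illustrates the backward branching.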


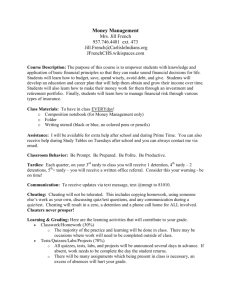

Summary of Chapter 3: Single machine models

– $1||\sum w_jC_j$: WSPT rule, works for chains too
– $1||\sum w_j(1-e^{-rC_j})$: WDSPT rule, works for chains too
– $1||L_{\max}$: EDD rule
– $1|prec|h_{\max}$: Algorithm 3.2.1
– $1|r_j|L_{\max}$: Branch and bound
– $1||\sum U_j$: Algorithm 3.3.1
– $1||\sum T_j$: Dynamic programming, Algorithm 3.4.4
